-

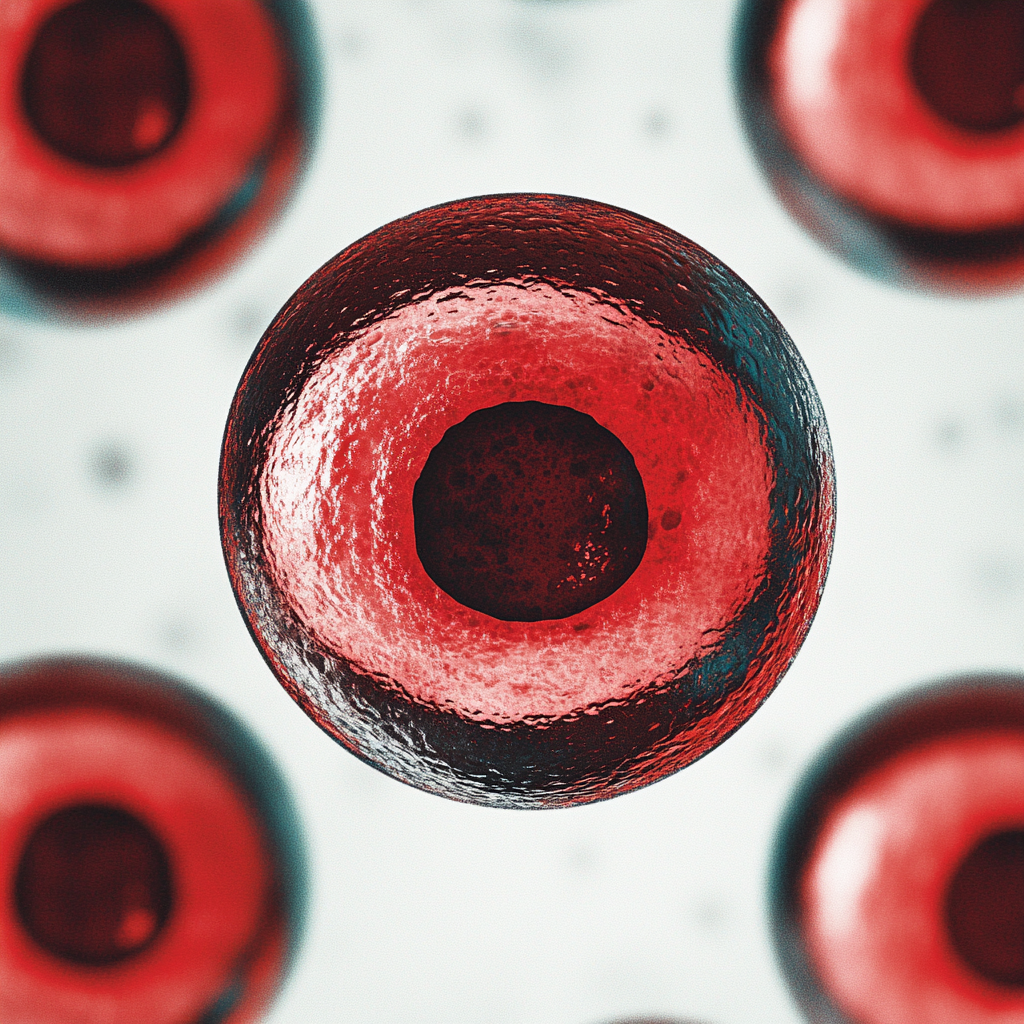

Efficacy of Low-Dose Fluconazole for Primary Prophylaxis of Invasive Candida Infections in Patients With Acute Leukemia: A Double-Blind Randomized Clinical Trial

CONCLUSION: Low-dose fluconazole is an effective alternative to high-dose regimens for preventing Candida infections in acute leukemia patients, with similar efficacy and safety. The rising threat of aspergillosis highlights the need for targeted prophylaxis. Further research is needed to refine strategies for high-risk patients. →

-

Comparison of ezetimibe and atorvastatin versus atorvastatin alone on short-term major adverse cardiac events after percutaneous coronary intervention, a double-blind placebo-controlled randomized clinical trial

CONCLUSION: Although adding ezetimibe to atorvastatin can decrease LDL and hs-CRP levels in short-term follow-up, it is not effective in lowering short-term major cardiovascular events in patients after PCI. Studies with longer-term follow-up are recommended. →

-

Exploring trauma-informed prenatal care preferences through diverse pregnant voices

CONCLUSIONS: Patient preferences identified by this study underscore the need for prenatal care to address the psychological health needs of pregnant patients in order to deliver high-quality, comprehensive prenatal care that is trauma-informed and culturally responsive. →

-

Comparative study of geometric localization technique and CT-guided percutaneous localization technique for peripheral GGO in wedge resection: a randomized controlled trial

CONCLUSIONS: Compared with CPLT, GLT has at least comparable outcomes in terms of effectiveness and accuracy; the advantage of GLT was its good safety profile. →

-

Effectiveness of bilateral tongue base mucosectomy by transoral robotic surgery or transoral laser microsurgery in combination with tonsillectomy in identifying head and neck primary cancer of unknown primary: a randomized phase 2 protocol (RoboCUP trial)

BACKGROUND: In cases of prevalent lymphadenopathy in the head and neck cancer region, identifying the primary tumor site allows for more precise radiotherapy targeting. This improves treatment by reducing the volumes of mucosal irradiation and potentially lowering morbidity. An extensive diagnostic workup, including FDG PET-CT imaging and bilateral tonsillectomy, has been shown to identify the… →

-

The impact of biologics targeting the IL-17 and IL-23 pathways on metabolic indicators in plaque psoriasis

This study aims to compare the efficacy of IL-17 and IL-23 biologics in the treatment of plaque psoriasis (Psoriasis vulgaris) in patients with metabolic syndrome (MetS) and to explore the effects of different biologics on metabolic indicators, particularly regarding the differences in efficacy during long-term treatment. This is a randomized controlled clinical trial involving 120… →

-

Effect of long-term Mediterranean versus low-fat diet on neutrophil count, and type 2 diabetes mellitus remission in patients with coronary heart disease: results from the CORDIOPREV study

CONCLUSION: These findings suggest that neutrophil count can help identify patients who are more likely to achieve T2DM remission following a Mediterranean diet, suggesting a role in insulin sensitivity and β-cell function. Further research holds promise for providing valuable insights into the pathophysiology of T2DM. →

-

AWS Q Developer vs Microsoft Azure AI: The Top AI Tools for Cloud-Native Product Teams

Amazon Web Services Amazon Q Developer offers AI-driven code generation and optimization capabilities designed to enhance cloud-based development projects. It reduces infrastructure costs by optimizing resource allocation and automating routine coding tasks. Businesses leveraging AWS services often see a 20-30% decrease in operational expenses due to streamlined cloud management. The platform integrates seamlessly with other… →

-

Operational Excellence. How to structure a company's processes so that work is effic…

Operational Excellence. How to structure a company's processes so that work is efficient, fast, and error-free. Applying the principles of operational excellence to minimize costs and increase productivity. #ИИМенеджмент #Маркетинг #Продуктовыймененджмент #Менеджмент #Продукт →

-

Hormonal drugs from Grotex

Production of effective hormonal drugs for treatment. →