-

Manufacturing Innovation with Canonpharma

Production of generics and innovative drugs for widespread use. →

-

Platform Lock-in – Platform dependence. When a user ends up tied to a single platform because of data, subscriptions, or…

Platform Lock-in – Platform dependence. When a user ends up tied to a single platform because of data, subscriptions, or integrations, which makes switching services difficult. How to use a lock-in strategy to improve retention and raise barriers for competitors. #Management #AIManagement #ProductManagement #Marketing #Product →

-

Iodine-Containing Drugs from Yodnye Tekhnologii (Iodine Technologies)

Production of iodine-based drugs for various medical purposes. →

-

Empowering Time Series AI: How Salesforce is Leveraging Synthetic Data to Enhance Foundation Models

Time series analysis faces significant hurdles in data availability, quality, and diversity, critical factors in developing effective foundation models. Real-world datasets often fall short due to regulatory limitations, inherent biases, poor quality, and limited paired textual annotations, making it difficult to… →

-

A Step by Step Guide to Solve 1D Burgers’ Equation with Physics-Informed Neural Networks (PINNs): A PyTorch Approach Using Automatic Differentiation and Collocation Methods

In this tutorial, we explore an innovative approach that blends deep learning with physical laws by leveraging Physics-Informed Neural Networks (PINNs) to solve the one-dimensional Burgers’ equation. Using PyTorch on Google Colab, we… →

-

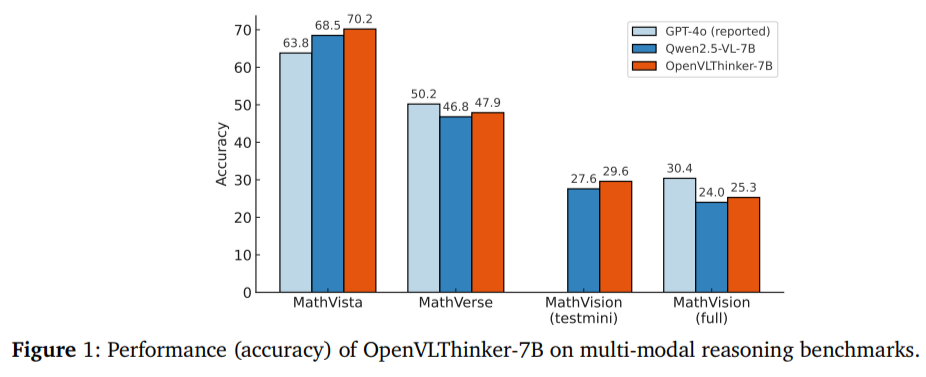

UCLA Researchers Released OpenVLThinker-7B: A Reinforcement Learning Driven Model for Enhancing Complex Visual Reasoning and Step-by-Step Problem Solving in Multimodal Systems

Large vision-language models (LVLMs) integrate large language models with image processing capabilities, enabling them to interpret images and generate coherent textual responses. While they excel at recognizing visual objects and responding to prompts, they… →

-

Pivot – A change of direction in business. When a business changes its direction or strategy because the original idea did not succeed. How…

Pivot – A change of direction in business. When a business changes its direction or strategy because the original idea did not succeed. How to carry out a pivot properly so that you do not lose time and resources, and find a new, successful niche. #AI #AIMarketing #Marketing #Management #ProductManagement →

-

Medicines for the Far East from Dalhimfarm

Production of high-quality medicines for Russia's regions. →

-

Comparison of Effectiveness Between Protein and BCAA in Late Evening Snack on Vietnamese Liver Cirrhotic Outpatients: a Randomized Clinical Trial

CONCLUSIONS: A protein snack is an effective dietary intervention in improving albumin, biochemical parameters, and nutritional status for compensated liver cirrhosis outpatients. Considering cost, availability, and taste, a BCAA snack might be unnecessary for liver cirrhosis outpatients. →

-

Impact of Various Doses of Dobutamine on Myocardial Viability and the Effects of β-Blockers in Patients With Ischemic Cardiomyopathy: An Assessment Using Stress Echocardiography

CONCLUSION: Moderate-dose DSE exhibited the optimal diagnostic performance in assessing myocardial viability in patients with ICM. Furthermore, the use of β-blockers effectively enhances the sensitivity and accuracy of the diagnosis, providing important practical guidance for the clinical evaluation of myocardial viability. →