-

Randomised, controlled trial of CT perfusion and angiography compared to CT alone in thrombolysis-eligible acute ischaemic stroke patients: The penumbra and recanalisation acute computed tomography in ischaemic stroke evaluation (PRACTISE) trial

CONCLUSION: Among acute stroke patients imaged <4.5 h from symptom onset, multimodal CT reduced use of thrombolysis. Treatment decision times and clinical outcomes did not differ between groups. →

-

Safety and efficacy of intra-arterial tenecteplase for non-complete reperfusion of intracranial occlusions: Methodology of a randomized, controlled, multicenter study

RATIONALE: Intra-arterial fibrinolytics may be used for distal remaining vessel occlusions after incomplete mechanical thrombectomy (MT). However, their efficacy in improving reperfusion in this specific clinical scenario is unclear. While better reperfusion may lead to improved clinical outcomes, additional fibrinolytics could also increase the risk of hemorrhagic complications. →

-

Corticomotor Excitability Changes Induced by Progressive Balance Exercises in Chronic Ankle Instability: a Randomized Clinical Trial

CONCLUSIONS: Six weeks of progressive balance exercises significantly increased corticomotor excitability corresponding to the peroneus longus muscle in individuals with chronic ankle instability. →

-

Ivarmacitinib in patients with moderate to severe atopic dermatitis stratified by baseline characteristics: a post-hoc analysis of a phase 3 clinical trial

CONCLUSION: Ivarmacitinib demonstrated efficacy and good tolerability in treating moderate to severe AD with diverse patient characteristics. →

-

From awareness to action: Health Belief Model-based educational intervention to improve breast self-examination practice among college teachers in Pakistan (CRCT)

CONCLUSION: HBM-based intervention effectively enhanced BSE knowledge, beliefs, and practices while reducing barriers. Findings emphasize the value of structured educational interventions in promoting preventive health behaviors among female educators. →

-

How to Use Generative AI for Pricing

How can recent advances in generative AI tools be applied to transform pricing decisions? By lowering technical and financial barriers, such tools democratize access to sophisticated pricing capabilities, empowering even small businesses to benefit from artificial intelligence without the need for costly, bespoke solutions. There are fundamental differences between GenAI-driven approaches… →

-

Intensive blood pressure control and risks of cognitive outcomes in patients with new-onset orthostatic hypotension

CONCLUSIONS: The occurrence of OH requires close monitoring, with caution for potential cognitive adverse effects during ongoing intensive BP-lowering treatment. Validation of current findings in studies with justified methodological designs is warranted. →

-

Effectiveness and implementation of a multi-faceted intervention to facilitate adoption of asthma self-management practices in Peruvian children and adolescents: a hybrid type 2 individually randomized controlled trial

BACKGROUND: Peru has one of the highest burdens of asthma in the world, as well as large gaps in access to evidence-based treatments. Studies often overlook the ways in which the research context and data collection activities interact and influence the experience of a research participant, their perception of an intervention, and, by extension, study… →

-

Low-Intensity Blood Flow-Restricted Multi-Joint Exercise Improves Muscle Function in Patients With Patellofemoral Pain Syndrome: A Randomized Trial

BACKGROUND: Patellofemoral pain syndrome (PFPS) limits physical activity and quality of life, especially during weight-bearing tasks. Although high-load resistance exercises are recommended for rehabilitation, they may worsen symptoms in pain-sensitive individuals. Low-intensity blood flow restriction (BFR) training has emerged as a potential alternative. However, its effects on functional performance and mechanical properties remain unclear. MATERIAL… →

-

Evaluating the effect of cryotherapy and low-level laser therapy on postoperative pain and quality of life in patients with symptomatic apical periodontitis- a randomized controlled clinical study

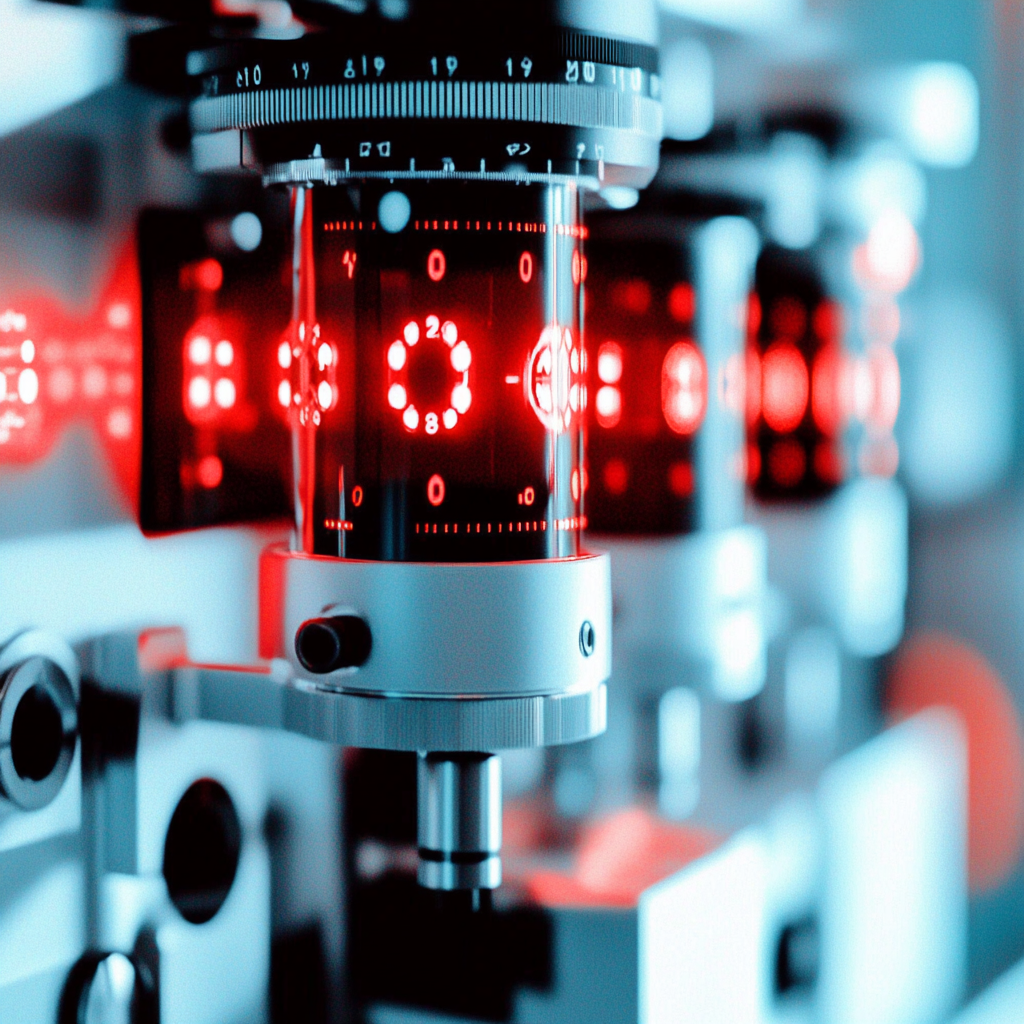

The aim of this randomized controlled clinical study is to compare the effects of intracanal cryotherapy and intraoral low-level laser therapy (LLLT) on postoperative pain and quality of life in patients following endodontic treatment for symptomatic apical periodontitis. After ethical clearance and registration of the trial with the Clinical Trials Registry of India, a randomized, parallel-controlled… →