-

ByteDance Open-Sources DeerFlow: A Modular Multi-Agent Framework for Deep Research Automation

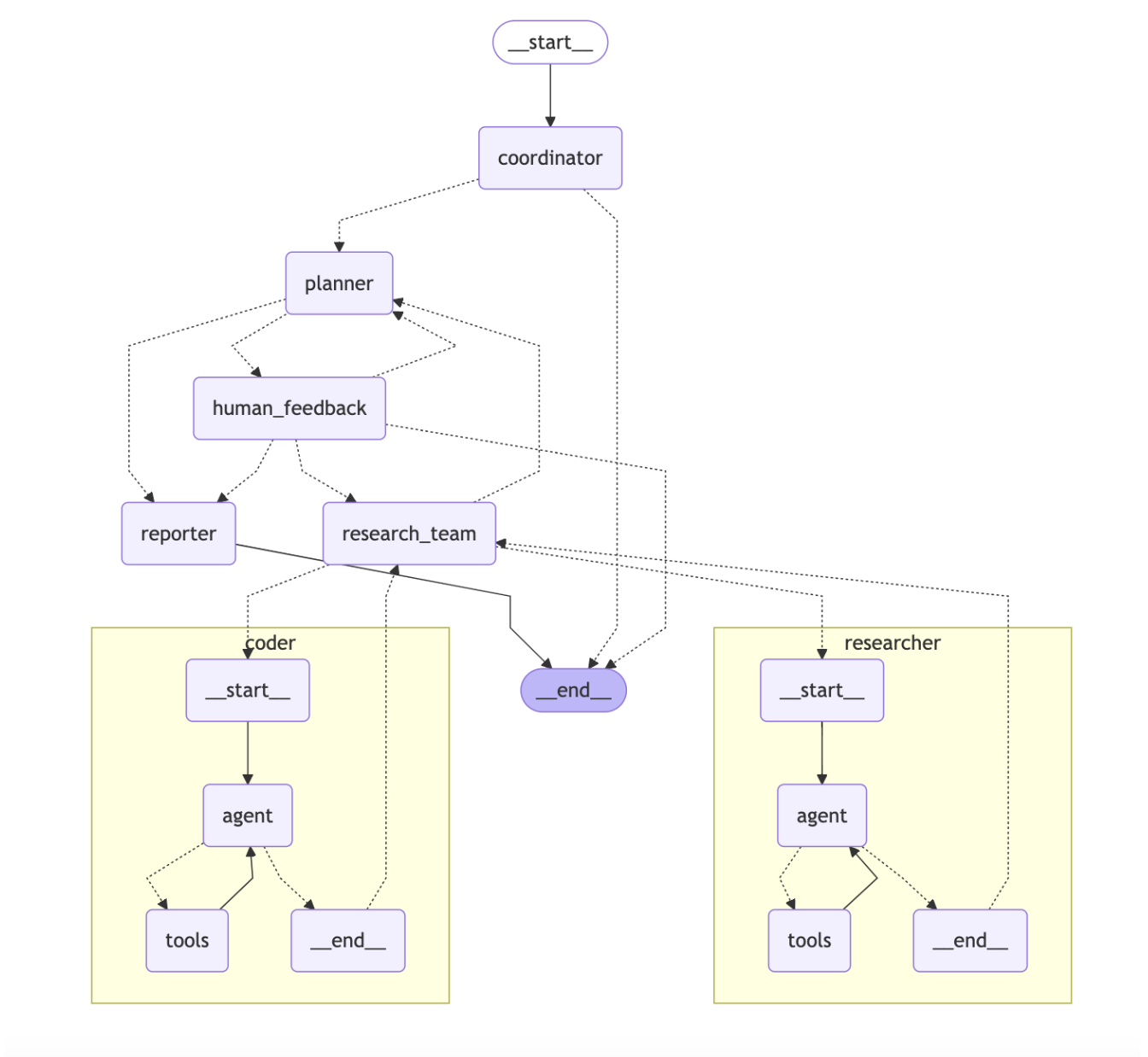

ByteDance has released DeerFlow, an open-source multi-agent framework designed to enhance complex research workflows by integrating the capabilities of large language models (LLMs) with domain-specific tools. Built on top of LangChain and LangGraph, DeerFlow offers a structured, extensible platform for automating sophisticated research tasks, from information retrieval to multimodal content generation, within a collaborative human-in-the-loop setting. Tackling…

-

Enterprise AI Without GPU Burn: Salesforce’s xGen-small Optimizes for Context, Cost, and Privacy

Language processing in enterprise environments faces critical challenges as business workflows increasingly depend on synthesising information from diverse sources, including internal documentation, code repositories, research reports, and real-time data streams. While recent advances in large language models have delivered impressive capabilities, this progress comes with significant downsides: skyrocketing per-request costs, constant hardware upgrade requirements, and…

-

A Deep Technical Dive into Next-Generation Interoperability Protocols: Model Context Protocol (MCP), Agent Communication Protocol (ACP), Agent-to-Agent Protocol (A2A), and Agent Network Protocol (ANP)

As autonomous systems increasingly rely on large language models (LLMs) for reasoning, planning, and action execution, a critical bottleneck has emerged, not in capability but in communication. While LLM agents can parse instructions and call tools, their ability to interoperate with one another in scalable, secure, and modular ways remains deeply constrained. Vendor-specific APIs, ad…

-

Acute lorazepam administration does not significantly affect moral attitudes or judgments

Recent scientific studies exploring the neuropsychological foundations of moral decision-making have shown that moral attitudes and evaluations are significantly influenced by emotion, particularly negative emotionality, as well as personality traits such as neuroticism. Further psychopharmacological research has observed that GABAergic agonists are capable of influencing moral decision-making by modifying anxiety-related emotional negativity and/or through cognitive…

-

Best Practices for Building the AI Development Platform in Government

By John P. Desmond, AI Trends Editor

The AI stack defined by Carnegie Mellon University is fundamental to the approach being taken by the US Army for its AI development platform efforts, according to Isaac Faber, Chief Data Scientist at the US Army AI Integration Center, speaking at the AI World Government event held in-person and virtually…

-

Advance Trustworthy AI and ML, and Identify Best Practices for Scaling AI

By John P. Desmond, AI Trends Editor

Advancing trustworthy AI and machine learning to mitigate agency risk is a priority for the US Department of Energy (DOE), and identifying best practices for implementing AI at scale is a priority for the US General Services Administration (GSA). That's what attendees learned in two sessions at the AI…

-

Promise and Perils of Using AI for Hiring: Guard Against Data Bias

By AI Trends Staff

While AI in hiring is now widely used for writing job descriptions, screening candidates, and automating interviews, it poses a risk of widespread discrimination if not implemented carefully. That was the message from Keith Sonderling, Commissioner with the US Equal Employment Opportunity Commission, speaking at the AI…

-

Predictive Maintenance Proving Out as Successful AI Use Case

By John P. Desmond, AI Trends Editor

More companies are successfully exploiting predictive maintenance systems that combine AI with data collected by IoT sensors to anticipate breakdowns and recommend preventive action before machines break or fail, demonstrating an AI use case with proven value. This growth is reflected in optimistic market forecasts.…

-

Novelty In The Game Of Go Provides Bright Insights For AI And Autonomous Vehicles

By Lance Eliot, the AI Trends Insider

We already expect humans to exhibit flashes of brilliance. It might not happen all the time, but the act itself is welcomed and not altogether disturbing when it occurs. What about when Artificial Intelligence (AI) seems to display an act of novelty? Any such instance is bound to get our attention;…

-

Friday morning fever. Evidence from a randomized experiment on sick leave monitoring in the public sector

CEPDP1770. May 2021.

Absent providers of key public services, such as schooling and health, are a major problem in both developed and developing countries. This paper provides the first analysis of a population-wide controlled field experiment for home visits checking on sick leave in the public sector. The experiment was carried out in Italy, a country…