Model merging has emerged as a powerful technique for creating versatile, multi-task models by combining weights of task-specific models. This approach enables crucial capabilities such as skill accumulation, model weakness patching, and collaborative improvement of existing models. While model merging has shown remarkable success with full-rank finetuned (FFT) models, significant challenges arise when applying these techniques to parameter-efficient finetuning (PEFT) methods, particularly Low-Rank Adaptation (LoRA). Analysis through centered kernel alignment (CKA) reveals that, unlike FFT models with high task-update alignment, LoRA models show much lower alignment, indicating that their task-updates process inputs through misaligned subspaces.
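To make the alignment measurement concrete, here is a minimal sketch of linear CKA, one common variant of centered kernel alignment. It is an assumption that this variant is the relevant one; the article does not say which form of CKA the analysis used or which activations it compared. The inputs and the two low-rank updates below are random, hypothetical stand-ins.

```python
import numpy as np

def linear_cka(x, y):
    """Linear CKA between two activation matrices with one row per input."""
    # Center each feature so the score ignores constant offsets.
    x = x - x.mean(axis=0, keepdims=True)
    y = y - y.mean(axis=0, keepdims=True)
    cross = np.linalg.norm(y.T @ x, "fro") ** 2
    norm_x = np.linalg.norm(x.T @ x, "fro")
    norm_y = np.linalg.norm(y.T @ y, "fro")
    return cross / (norm_x * norm_y)

rng = np.random.default_rng(0)
inputs = rng.normal(size=(64, 8))  # hypothetical batch of layer inputs
# Hypothetical rank-2 task-updates, standing in for two LoRA models.
update_a = rng.normal(size=(8, 2)) @ rng.normal(size=(2, 8))
update_b = rng.normal(size=(8, 2)) @ rng.normal(size=(2, 8))

same = linear_cka(inputs @ update_a.T, inputs @ update_a.T)
different = linear_cka(inputs @ update_a.T, inputs @ update_b.T)
```

A score of 1 means the two updates process inputs identically up to rotation and scale, while lower scores indicate less aligned subspaces.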

Existing merging methods build on mode connectivity, the observation that the parameters of independently trained neural networks can be interpolated without increasing test loss. Task Arithmetic (TA) introduced “task-vectors”, obtained by subtracting pre-trained model parameters from finetuned ones, and TIES improved upon this by addressing parameter interference through selective averaging of weights that share the dominant sign. DARE explored sparse task vectors through random weight dropping. However, these methods have shown limited success when applied to LoRA models because of increased weight entanglement between models.
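The task-vector idea can be sketched in a few lines. This is an illustrative sketch, not the authors' code: the function names, the dictionary-of-arrays model representation, and the `alpha` scaling coefficient are assumptions made for the example.

```python
import numpy as np

def task_vector(finetuned, pretrained):
    """Task vector: finetuned parameters minus pre-trained parameters."""
    return {name: finetuned[name] - pretrained[name] for name in pretrained}

def task_arithmetic(pretrained, task_vectors, alpha=1.0):
    """Add the scaled sum of task vectors back onto the pre-trained weights."""
    merged = {}
    for name, base in pretrained.items():
        merged[name] = base + alpha * sum(tv[name] for tv in task_vectors)
    return merged

# Toy single-layer "models" (hypothetical values).
pretrained = {"layer.weight": np.array([[1.0, 2.0], [3.0, 4.0]])}
finetuned_a = {"layer.weight": np.array([[1.5, 2.0], [3.0, 4.0]])}
finetuned_b = {"layer.weight": np.array([[1.0, 2.0], [3.0, 3.0]])}

vectors = [task_vector(finetuned_a, pretrained),
           task_vector(finetuned_b, pretrained)]
merged = task_arithmetic(pretrained, vectors, alpha=0.5)
```

Here the two task vectors touch different entries, so the merge is clean; interference arises when several task vectors push the same parameter in opposite directions.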

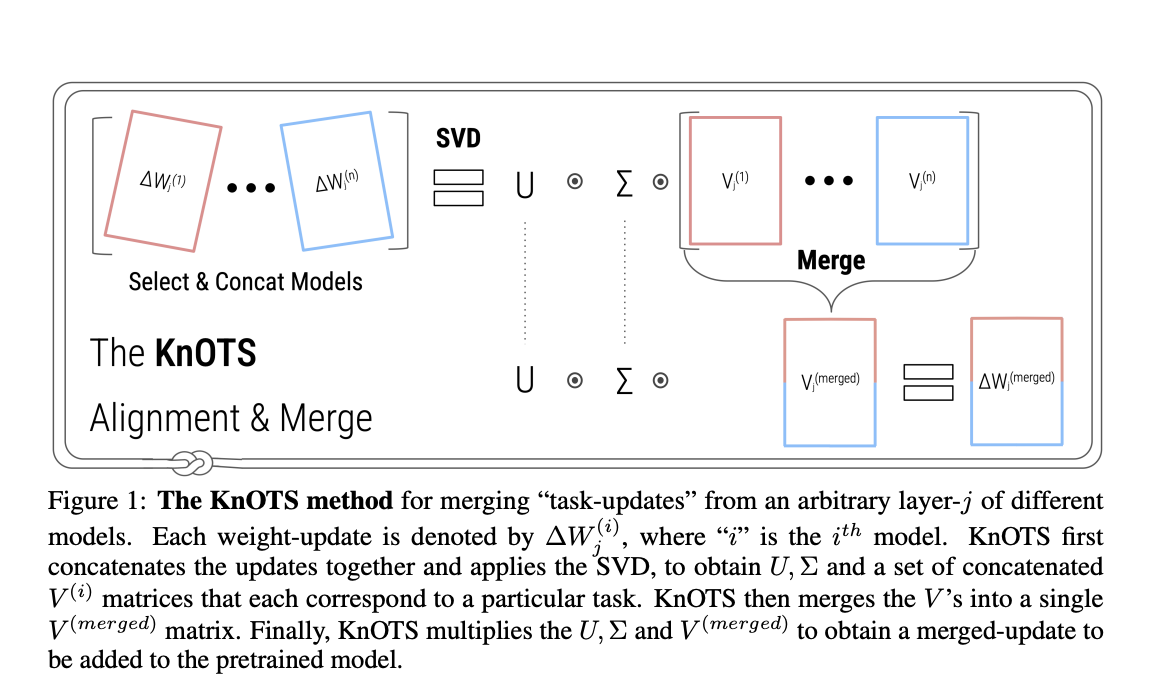

Researchers from Georgia Tech, IBM Research, and MIT have proposed KnOTS (Knowledge Orientation Through SVD), a novel approach that transforms the task-updates of different LoRA models into a shared space using singular value decomposition (SVD). The method is designed to be compatible with existing merging techniques: KnOTS combines the task-updates for each layer and decomposes them through SVD. The researchers also introduced a new “joint-evaluation” benchmark, which tests a merged model’s ability to handle inputs from multiple datasets simultaneously without dataset-specific context. This provides a more realistic assessment of a model’s generalization across diverse tasks.
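The per-layer step can be sketched as follows. The article only says that task-updates are combined and decomposed through SVD, so the specifics here are assumptions: updates are concatenated column-wise, the left factor is shared across tasks, and the right factor is split into one block per task. The function names and the toy rank-2 updates are hypothetical.

```python
import numpy as np

def knots_align(task_updates, tol=1e-10):
    """Factor column-wise concatenated task-updates with a single SVD."""
    d_in = task_updates[0].shape[1]
    stacked = np.concatenate(task_updates, axis=1)  # (d_out, n_tasks * d_in)
    u, s, vt = np.linalg.svd(stacked, full_matrices=False)
    keep = s > tol * s.max()  # drop numerically zero directions
    u, s, vt = u[:, keep], s[keep], vt[keep]
    # Split the right factor back into one block per task.
    v_blocks = [vt[:, i * d_in:(i + 1) * d_in]
                for i in range(len(task_updates))]
    return u, s, v_blocks

def reconstruct(u, s, v_block):
    """Map a (possibly merged) block back to weight space: U diag(s) V."""
    return (u * s) @ v_block

rng = np.random.default_rng(0)
d_out, d_in, rank, n_tasks = 16, 12, 2, 3
# Hypothetical LoRA-style updates: each is a product of two thin matrices.
updates = [rng.normal(size=(d_out, rank)) @ rng.normal(size=(rank, d_in))
           for _ in range(n_tasks)]

u, s, v_blocks = knots_align(updates)
merged_block = sum(v_blocks)  # plain summation as a placeholder merge rule
merged_update = reconstruct(u, s, merged_block)
```

Every task's block is expressed in the same basis `u`, so an existing merging rule can be applied to the blocks instead of the raw updates. With plain summation the result equals the sum of the original updates, since the reconstruction is linear; the shared basis only changes the outcome for non-linear rules such as pruning or sign election.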

KnOTS operates in multiple stages to align and merge LoRA models, and it works with several existing gradient-free merging approaches, including RegMean, Task-Arithmetic (TA), TIES, and DARE. RegMean uses a closed-form, locally linear regression to align model weights, while TA performs a direct linear summation of parameters using scaling coefficients. TIES extends TA with magnitude-based pruning and sign resolution to reduce parameter conflicts. DARE introduces a probabilistic element by randomly pruning parameters according to a Bernoulli distribution. The researchers also include an Ensemble baseline that processes inputs through all models and selects the prediction with the highest confidence score.
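A simplified sketch of the TIES and DARE steps described above, applied to flat task vectors. The function names, the `keep_fraction` and `drop_prob` parameters, and the toy values are assumptions for illustration. Rescaling the surviving entries by `1 / (1 - drop_prob)` follows the usual description of DARE and is not stated in this article.

```python
import numpy as np

def ties_merge(task_vectors, keep_fraction=0.2):
    """Trim small entries, elect a sign per parameter, average agreeing entries."""
    stacked = np.stack(task_vectors)  # (n_tasks, ...)
    flat = np.abs(stacked.reshape(len(task_vectors), -1))
    k = max(1, int(round(keep_fraction * flat.shape[1])))
    # Per-task threshold: the k-th largest magnitude (ties may keep extra entries).
    thresholds = np.sort(flat, axis=1)[:, -k]
    thresholds = thresholds.reshape((-1,) + (1,) * (stacked.ndim - 1))
    trimmed = np.where(np.abs(stacked) >= thresholds, stacked, 0.0)
    elected = np.sign(trimmed.sum(axis=0))  # dominant sign per parameter
    agree = (np.sign(trimmed) == elected) & (trimmed != 0)
    counts = agree.sum(axis=0)
    total = np.where(agree, trimmed, 0.0).sum(axis=0)
    return total / np.maximum(counts, 1)

def dare(task_vector, drop_prob, rng):
    """Drop each entry with probability drop_prob and rescale the survivors."""
    mask = rng.random(task_vector.shape) >= drop_prob  # Bernoulli keep mask
    return task_vector * mask / (1.0 - drop_prob)

tv_a = np.array([1.0, -2.0, 0.1, 3.0])
tv_b = np.array([-1.5, 1.0, 0.2, -2.0])

ties_result = ties_merge([tv_a, tv_b], keep_fraction=0.5)
sparse_a = dare(tv_a, drop_prob=0.5, rng=np.random.default_rng(0))
```

In the toy example the last parameter has a sign conflict (3.0 versus -2.0) after trimming; the elected sign is positive, so only the 3.0 entry contributes to the merged value.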

Experimental results demonstrate KnOTS’s effectiveness across model architectures and tasks. In the vision domain, when merging eight ViT-B/32 models finetuned on different image classification datasets, KnOTS performs comparably to existing methods. The gains are larger with ViT-L/14 models, where KnOTS-TIES outperforms baselines by up to 3%. In the language domain, on Llama3-8B models finetuned for natural language inference tasks, KnOTS-TIES improves upon baseline methods by up to 2.9% in average normalized accuracy, and KnOTS-DARE-TIES adds a further 0.2%.

In this paper, the researchers introduce KnOTS, a method that uses singular value decomposition (SVD) to transform the task-updates of LoRA models into a shared representation space, enabling the application of various gradient-free merging techniques. They also introduce a novel “joint-evaluation” benchmark that evaluates the ability of merged models to handle inputs from multiple datasets without any dataset-specific context. Extensive experiments show that KnOTS consistently improves the performance of existing merging approaches, by up to 4.3%, across model architectures and tasks. KnOTS has the potential to create general, multi-task models by effectively aligning and merging LoRA representations.

The post Researchers from Georgia Tech and IBM Introduces KnOTS: A Gradient-Free AI Framework to Merge LoRA Models appeared first on MarkTechPost.