Human-computer interaction (HCI) focuses on the design and use of computer technology, particularly the interfaces between people (users) and computers. Researchers in this field observe how humans interact with computers and design technologies that let humans interact with computers in novel ways. HCI draws on areas such as user experience design, ergonomics, and cognitive psychology, with the aim of creating intuitive, efficient interfaces that improve user satisfaction and performance.

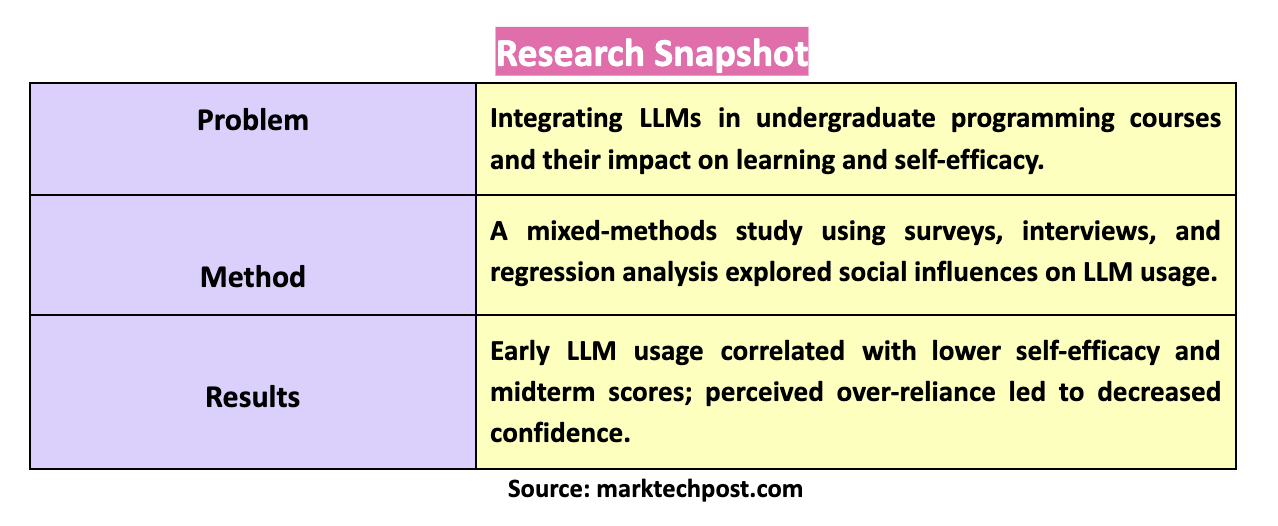

One significant challenge in HCI and education is the integration of large language models (LLMs) in undergraduate programming courses. These advanced AI tools, such as OpenAI’s GPT models, have the potential to revolutionize the way programming is taught and learned. However, their impact on students’ learning processes, self-efficacy, and career perceptions remains a critical concern. Understanding how these tools can be effectively integrated into the educational framework is essential for maximizing their benefits while minimizing potential drawbacks.

Traditionally, programming education has relied on lectures, textbooks, and interactive coding assignments. Some educational environments have begun incorporating simpler AI tools for code generation and debugging assistance, but the integration of more capable LLMs is still at an early stage. These models can generate, debug, and explain code, offering new ways to support students as they learn. Despite this potential, it is not yet well understood how students adapt to these tools or how the tools affect their learning outcomes and self-confidence.

Researchers from the University of Michigan conducted a study of the social factors that influence the adoption and use of LLMs in an undergraduate programming course. Drawing on social shaping theory, the study examined how students’ social perceptions, peer influences, and career expectations affect their use of LLMs. The team used a mixed-methods design: an anonymous end-of-course survey of 158 students, mid-course self-efficacy surveys, student interviews, and a regression analysis of midterm performance data. Combining these sources was intended to give a detailed picture of the dynamics at play.

Methodologically, the study combined an anonymous survey, semi-structured interviews for deeper insight, and regression analysis of midterm performance data, triangulating across these sources to understand the social dynamics behind LLM usage. The researchers found that students’ use of LLMs was associated with their future career expectations and with their perceptions of how much their peers used LLMs. Notably, early self-reported LLM usage correlated with lower self-efficacy and lower midterm scores. However, it was students’ perceived over-reliance on LLMs, rather than their actual usage, that was associated with decreased self-efficacy later in the course.

The methodology combined surveys and interviews to gather both quantitative and qualitative data. The survey, conducted during the final week of in-person classes, aimed to capture a representative sample of student attitudes and perceptions regarding LLMs. It consisted of 25 questions covering areas such as familiarity with LLM tools, usage patterns, and concerns about over-reliance, and it included five self-efficacy questions assessing students’ confidence in their programming abilities. These data were then analyzed with regression techniques to identify significant patterns and correlations.
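The article does not say how the five self-efficacy questions were scored. A common convention, assumed here, is to answer each item on a 1-5 Likert scale, average the items into one composite score per student, and use Cronbach’s alpha to check that the items hang together as a single scale. The sketch below is hypothetical: the function names, the 1-5 scale, and the averaging rule are illustrative assumptions, not the study’s procedure.

```python
from __future__ import annotations

from statistics import pvariance

# Assumptions (not stated in the article): each of the five self-efficacy
# items is answered on a 1-5 Likert scale, and the composite is the item mean.
LIKERT_MIN, LIKERT_MAX = 1, 5
NUM_ITEMS = 5


def self_efficacy_score(responses: list[int]) -> float:
    """Average one student's five item responses into a composite score."""
    if len(responses) != NUM_ITEMS:
        raise ValueError(f"expected {NUM_ITEMS} responses, got {len(responses)}")
    for r in responses:
        if not LIKERT_MIN <= r <= LIKERT_MAX:
            raise ValueError(f"response {r} is outside {LIKERT_MIN}-{LIKERT_MAX}")
    return sum(responses) / NUM_ITEMS


def cronbach_alpha(matrix: list[list[int]]) -> float:
    """Cronbach's alpha for an item set (rows = students, columns = items).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)).
    Requires at least two students whose total scores differ.
    """
    k = len(matrix[0])
    item_vars = [pvariance([row[j] for row in matrix]) for j in range(k)]
    total_var = pvariance([sum(row) for row in matrix])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


print(self_efficacy_score([4, 3, 5, 4, 4]))  # → 4.0
```

Averaging keeps the composite on the same 1-5 scale as the individual items, which makes scores easy to interpret; summing would rank students identically.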

The headline results were that early LLM usage correlated with lower self-efficacy and midterm scores, and that students’ perceived over-reliance on LLMs, rather than the usage itself, was associated with decreased self-efficacy later in the course. Students’ career aspirations and perceptions of peer usage also significantly influenced their decisions to use LLMs. For instance, students who believed over-reliance on LLMs would hurt their job prospects tended to prefer learning programming skills independently. Conversely, those who expected to use LLMs heavily in their future careers were more likely to engage with these tools during the course.

The study also reported mixed outcomes from integrating LLMs into the curriculum. Students who used LLMs described varied effects on their programming self-efficacy and learning. Some found that LLMs helped them understand complex coding concepts and error messages, while others felt the tools undermined confidence in their own coding abilities. Regression analysis showed that students who felt over-reliant on LLMs had lower self-efficacy scores, underscoring the importance of balanced tool usage.
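Separating perceived over-reliance from actual usage is the kind of question a multiple regression addresses: with both variables as predictors, each coefficient estimates one variable’s association with self-efficacy while holding the other fixed. The sketch below fits ordinary least squares through the normal equations in plain Python. The predictors (weekly LLM usage hours and a 0/1 "feels over-reliant" flag) and all numbers are invented for illustration and constructed so the correct coefficients are known in advance; they are not the study’s data, variables, or model specification.

```python
from __future__ import annotations


def ols(X: list[list[float]], y: list[float]) -> list[float]:
    """Ordinary least squares via the normal equations (X^T X) b = X^T y.

    An intercept column of 1s is prepended, so the result is
    [intercept, coef_1, coef_2, ...].
    """
    rows = [[1.0, *row] for row in X]
    p = len(rows[0])
    # Augmented matrix [X^T X | X^T y].
    A = [
        [sum(r[i] * r[j] for r in rows) for j in range(p)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(p)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda i: abs(A[i][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear: X^T X is singular")
        A[col], A[pivot] = A[pivot], A[col]
        for i in range(p):
            if i != col:
                factor = A[i][col] / A[col][col]
                A[i] = [a - factor * b for a, b in zip(A[i], A[col])]
    return [A[i][p] / A[i][i] for i in range(p)]


# Hypothetical, synthetic data (NOT from the study): weekly LLM usage hours,
# a 0/1 "feels over-reliant" flag, and a composite self-efficacy score.
usage = [0, 2, 4, 6, 1, 3, 5, 7]
over_reliant = [0, 0, 0, 0, 1, 1, 1, 1]
efficacy = [4.0, 4.0, 4.0, 4.0, 3.0, 3.0, 3.0, 3.0]

intercept, b_usage, b_over = ols(
    [[u, o] for u, o in zip(usage, over_reliant)], efficacy
)
print(f"intercept={intercept:.3f} usage={b_usage:.3f} over_reliant={b_over:.3f}")
```

In this toy data the usage coefficient comes out at zero and the over-reliance coefficient at -1.0 only because the numbers were built that way. Regressing efficacy on usage alone would instead show a negative slope, because the over-reliant students here also use LLMs slightly more; adding the second predictor is what separates the two effects. A real analysis would more likely use a statistics package such as statsmodels (`statsmodels.api.OLS`), which also reports standard errors and p-values that this sketch omits.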

In conclusion, the study underscores the complex dynamics of integrating LLMs into undergraduate programming education. Social factors, such as peer usage and career aspirations, strongly influence the adoption of these tools. While LLMs can enhance the learning experience, over-reliance on them can undermine students’ confidence and performance. Finding a balance is therefore crucial, so that students build strong foundational skills while still using AI tools to support their learning. These findings point to the need for integration strategies that account for both the technical capabilities of LLMs and the social context in which students use them.

Source

- https://arxiv.org/pdf/2406.06451

The post Balancing AI Tools and Traditional Learning: Integrating Large Language Models in Programming Education appeared first on MarkTechPost.