By reasoning in language, large Vision-Language Models (VLMs) have demonstrated remarkable capabilities as adaptable agents that can solve a wide range of tasks. A common way to improve VLM performance is to fine-tune them on task-specific visual instruction-following data, which teaches them to follow precise visual instructions.

However, this method has drawbacks because it relies mostly on supervised learning from pre-collected data. It may not be the ideal way to train agents for multi-step interactive environments that require language comprehension in addition to visual recognition, because pre-collected datasets may lack the diversity needed to cover the wide range of decision-making scenarios these agents can encounter.

Reinforcement Learning (RL) offers a way to overcome these limitations and fully develop the decision-making capabilities of VLM agents in intricate, multi-step situations. While RL has been effective in training agents for a range of text-based tasks, it has not yet been widely used to optimize vision-language models (VLMs) for tasks that require end-to-end language and visual processing.

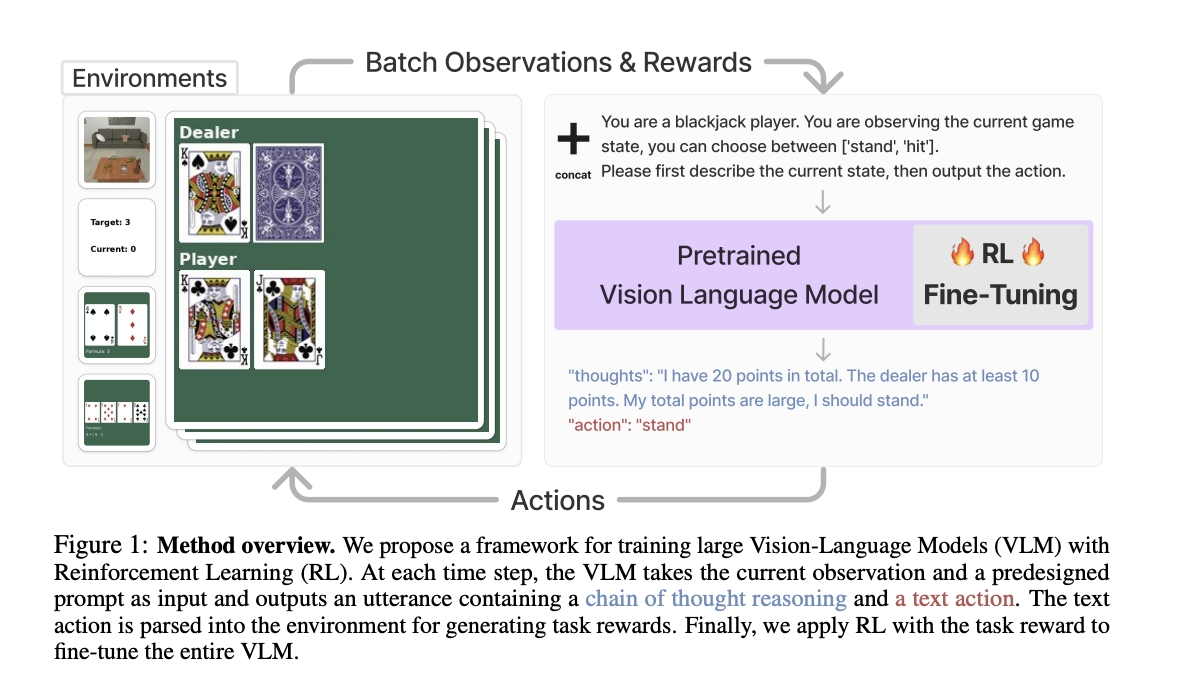

To address this problem, a team of researchers from UC Berkeley, UIUC, and NYU has created an algorithmic framework that uses Reinforcement Learning to optimize VLMs. First, the framework gives the task description to the VLM, prompting the model to produce Chain-of-Thought (CoT) reasoning. This is an important stage because it allows the VLM to explore intermediate reasoning steps that lead logically to the final text-based action needed to finish the task.
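As a rough illustration of this first step, a prompt can ask for the reasoning first and the action last. The sketch below is not the researchers' actual prompt: the function name `build_cot_prompt`, the JSON keys `thoughts` and `action`, and the example task are all assumptions made for illustration.

```python
from typing import List


def build_cot_prompt(task_description: str, legal_actions: List[str]) -> str:
    """Assemble a text prompt that asks for reasoning first, then one action.

    Hypothetical helper: the wording and the JSON reply format are assumptions,
    not the format used in the research described here.
    """
    actions = ", ".join(f'"{a}"' for a in legal_actions)
    return (
        f"{task_description}\n"
        f"Allowed actions: {actions}.\n"
        "First reason step by step about the image and the task, "
        "then choose exactly one allowed action.\n"
        'Reply in JSON: {"thoughts": "<reasoning>", "action": "<one allowed action>"}'
    )


# Example with a made-up card-game task and a made-up action set.
prompt = build_cot_prompt(
    "You see your cards in the image. Reach a total of 21 without going over.",
    ["hit", "stand"],
)
print(prompt)
```

Placing the reasoning before the action means the final action is generated conditioned on the intermediate steps, which is the property the CoT stage relies on.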

The text output produced by the VLM is parsed into executable actions so that the agent can interact with its environment. Through these interactions, the agent is rewarded according to how well its actions accomplish the task's objectives. These rewards are then used to fine-tune the entire VLM with RL, improving its ability to make decisions.
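The parse-act-reward loop described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the researchers' implementation: the JSON reply format, `parse_action`, `ToyEnv`, `fake_vlm`, and the random fallback for unparseable output are all hypothetical, and the RL update that would consume the collected rewards is omitted.

```python
import json
import random
from typing import List, Optional, Tuple

LEGAL_ACTIONS = ["left", "right", "stay"]  # hypothetical action set


def parse_action(vlm_text: str, legal_actions: List[str]) -> Optional[str]:
    """Extract the final action from the model's text; None if unusable."""
    try:
        payload = json.loads(vlm_text)
    except json.JSONDecodeError:
        return None
    if not isinstance(payload, dict):
        return None
    action = payload.get("action")
    if not isinstance(action, str):
        return None
    action = action.strip().lower()
    return action if action in legal_actions else None


class ToyEnv:
    """Hypothetical 1-D environment: start at 0, reward 1.0 on reaching 3."""

    def __init__(self) -> None:
        self.pos = 0

    def step(self, action: str) -> Tuple[float, bool]:
        self.pos += {"left": -1, "right": 1, "stay": 0}[action]
        done = self.pos == 3
        return (1.0 if done else 0.0), done


def fake_vlm(prompt: str) -> str:
    """Stand-in for a real VLM call; always reasons its way to 'right'."""
    return json.dumps({"thoughts": "The goal is to the right.", "action": "right"})


def rollout(env: ToyEnv, policy, max_steps: int = 10, seed: int = 0):
    """Collect (model_text, action, reward) tuples for a later RL update."""
    rng = random.Random(seed)
    transitions = []
    for _ in range(max_steps):
        text = policy("task description + current observation")
        action = parse_action(text, LEGAL_ACTIONS)
        if action is None:
            # Assumption: fall back to a random legal action on bad output.
            action = rng.choice(LEGAL_ACTIONS)
        reward, done = env.step(action)
        transitions.append((text, action, reward))
        if done:
            break
    return transitions


transitions = rollout(ToyEnv(), fake_vlm)
print([(action, reward) for _, action, reward in transitions])
```

The full generated text is stored alongside each action so that an RL algorithm could score the reasoning and the action together when fine-tuning the model.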

Empirical results show that this framework greatly enhances VLM agents' performance in decision-making tasks. For example, it enabled a 7-billion-parameter model to outperform popular commercial models such as GPT-4V and Gemini. The team also found that these gains depend on the CoT reasoning component: when they evaluated the framework without CoT reasoning, the model's overall performance dropped significantly. This demonstrates the crucial role CoT reasoning plays in the RL training framework and in enhancing VLMs' decision-making abilities.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

The post Researchers from UC Berkeley, UIUC, and NYU Developed an Algorithmic Framework that Uses Reinforcement Learning (RL) to Optimize Vision-Language Models (VLMs) appeared first on MarkTechPost.