Recently, there’s been increasing interest in enhancing deep networks’ generalization by regulating loss landscape sharpness. Sharpness Aware Minimization (SAM) has gained popularity for its superior performance on various benchmarks, specifically in managing random label noise, outperforming SGD by significant margins. SAM’s robustness shines particularly in scenarios with label noise, showcasing substantial improvements over existing techniques. Also, SAM’s effectiveness persists even with under-parameterization, potentially increasing gains with larger datasets. Understanding SAM’s behavior, especially in the early learning phases, becomes crucial in optimizing its performance.

While SAM’s underlying mechanisms remain elusive, several studies have attempted to shed light on the significance of per-example regularization in 1-SAM. Some researchers demonstrated that in sparse regression, 1-SAM exhibits a bias towards sparser weights compared to naive SAM. Prior studies also differentiate between the two by highlighting differences in the regularization of “flatness.” Recent research links naive SAM to generalization, underscoring the importance of understanding SAM’s behavior beyond convergence.

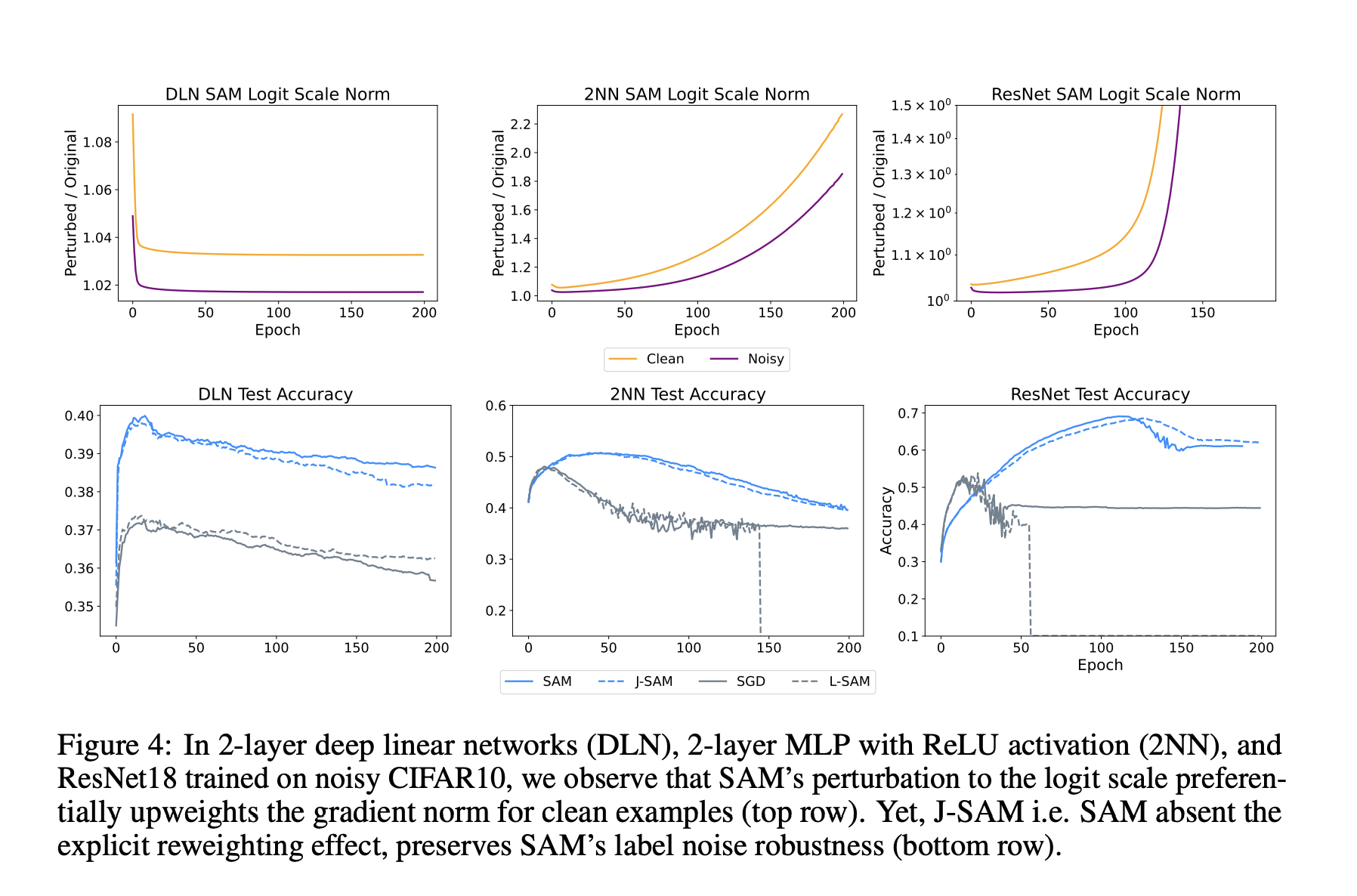

Carnegie Mellon University researchers provide a study that investigates why 1-SAM demonstrates greater robustness to label noise compared to SGD at a mechanistic level. By analyzing the gradient decomposition of each example, particularly focusing on the logit scale and network Jacobian terms, the research identifies key mechanisms enhancing early-stopping test accuracy. In linear models, SAM’s explicit up-weighting of low loss points proves beneficial, especially in the presence of mislabeled examples. Empirical findings suggest that SAM’s label noise robustness originates primarily from its Jacobian term in deep networks, indicating a fundamentally different mechanism compared to the logit scale term. Also, analyzing Jacobian-only SAM reveals a decomposition into SGD with ℓ2 regularization, offering insights into its performance improvement. These findings underscore the importance of optimization trajectory rather than sharpness properties at convergence in achieving SAM’s label noise robustness.

Through experimental investigations on toy Gaussian data with label noise, SAM demonstrates significantly higher early-stopping test accuracy compared to SGD. Analyzing SAM’s update process, it becomes evident that its adversarial weight perturbation prioritizes up-weighting the gradient signal from low-loss points, thereby maintaining high contributions from clean examples in the early training epochs. This preference for clean data leads to higher test accuracy before overfitting to noise. The study further sheds light on the role of SAM’s logit scale, showing how it effectively up-weights gradients from low-loss points, consequently improving overall performance. This preference for low-loss points is demonstrated through mathematical proofs and empirical observations, highlighting SAM’s distinct behavior from naive SAM updates.

After simplifying SAM’s regularization to include ℓ2 regularization on the last layer weights and last hidden layer intermediate activations in deep network training using SGD. This regularization objective is applied to CIFAR10 with ResNet18 architecture. Due to instability issues with batch normalization, researchers replace it with layer normalization for 1-SAM. Comparing the performance of SGD, 1-SAM, L-SAM, J-SAM, and regularized SGD, they found that while regularized SGD doesn’t match SAM’s test accuracy, the gap significantly narrows from 17% to 9% under label noise. However, in noise-free scenarios, regularized SGD only marginally improves, while SAM maintains an 8% advantage over SGD. This suggests that while not fully explaining SAM’s generalization benefits, similar regularization in the final layers is crucial for SAM’s performance, especially in noisy environments.

In conclusion, This work aims to provide a robust perspective on the effectiveness of SAM by demonstrating its ability to prioritize learning clean examples before fitting noisy ones, particularly in the presence of label noise. In linear models, SAM explicitly up-weights gradients from low loss points, akin to existing label noise robustness methods. In nonlinear settings, SAM’s regularization of intermediate activations and final layer weights improves label noise robustness, similar to methods that regulate logits’ norm. Despite their similarities, SAM remains underexplored in the label noise domain. Nonetheless, simulating aspects of SAM’s regularization of the network Jacobian can preserve its performance, suggesting potential for developing label-noise robustness methods inspired by SAM’s principles, albeit without the additional runtime costs of 1-SAM.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 41k+ ML SubReddit

The post Exploring Sharpness-Aware Minimization (SAM): Insights into Label Noise Robustness and Generalization appeared first on MarkTechPost.