A key component of Natural Language Processing (NLP) applications is Named Entity Recognition (NER), which identifies and classifies named entities such as people, places, dates, and organizations in text. Traditional NER models, however, are restricted to a predefined set of entity types, which limits both their effectiveness and their adaptability to new or diverse datasets.

In contrast, ChatGPT and other Large Language Models (LLMs) offer greater flexibility in entity recognition: they can extract arbitrary entity types specified through natural language instructions. However, these models are large and computationally expensive, especially when accessed through APIs, which makes them less practical in resource-constrained settings.

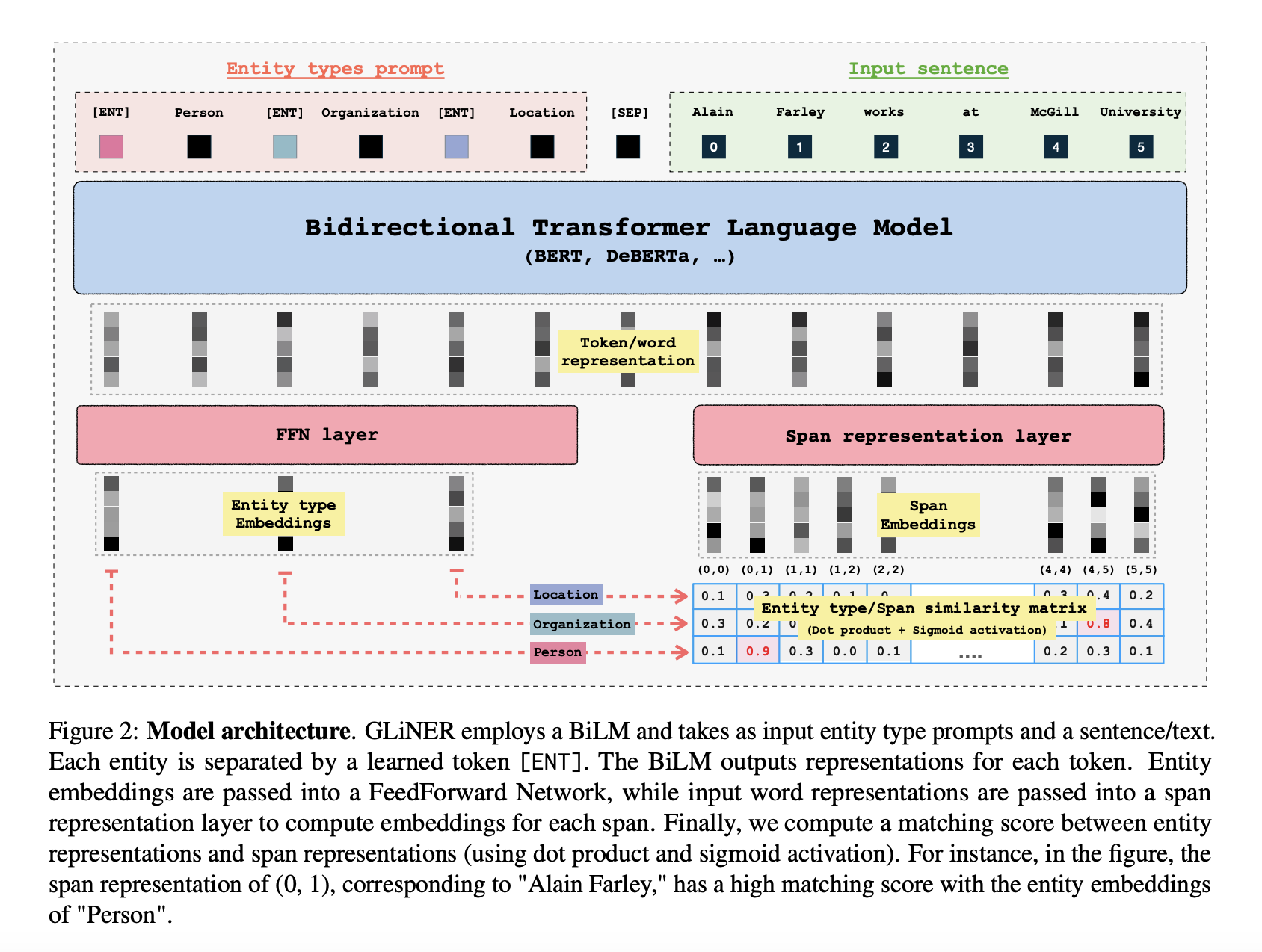

Recent research has introduced GLiNER, a compact NER model designed to address these issues. GLiNER uses a bidirectional transformer encoder, so the representation of each token draws on context from both its left and its right. Unlike LLMs such as ChatGPT, which generate tokens sequentially, this design allows entities to be extracted in parallel, which makes the model more efficient.

In place of huge autoregressive models, the team uses smaller-scale Bidirectional Language Models (BiLMs) such as BERT or DeBERTa. Rather than treating Open NER as a generation task, the approach reframes it as matching entity type embeddings to textual span representations in latent space. This avoids the scalability problems of autoregressive models, while bidirectional context processing yields richer representations.
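
The matching idea can be illustrated with a minimal sketch. This is not GLiNER's actual implementation: the 2-dimensional toy vectors, the mean-pooled span representation, the sigmoid over a dot product, and the names `span_vector` and `match_spans` are all assumptions made for illustration. In the real model, the vectors would come from the bidirectional encoder.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def span_vector(token_vecs, start, end):
    """Mean-pool the token vectors of the inclusive span [start, end].

    Mean pooling is an illustrative simplification, not GLiNER's span layer.
    """
    width = end - start + 1
    dim = len(token_vecs[0])
    return [
        sum(token_vecs[i][d] for i in range(start, end + 1)) / width
        for d in range(dim)
    ]


def match_spans(token_vecs, type_vecs, max_width=2, threshold=0.5):
    """Score every span of up to max_width tokens against every entity type.

    Each (span, type) pair is scored independently, so nothing here depends
    on generating output one token at a time.
    """
    matches = []
    n = len(token_vecs)
    for start in range(n):
        for end in range(start, min(start + max_width, n)):
            rep = span_vector(token_vecs, start, end)
            for label, type_vec in type_vecs.items():
                score = sigmoid(dot(rep, type_vec))
                if score > threshold:
                    matches.append((start, end, label, score))
    return matches


# Hypothetical toy embeddings: axis 0 ~ "person-like", axis 1 ~ "location-like".
tokens = ["Ada", "Lovelace", "visited", "London"]
token_vecs = [[4.0, -4.0], [4.0, -4.0], [-6.0, -6.0], [-4.0, 4.0]]
type_vecs = {"person": [1.0, 0.0], "location": [0.0, 1.0]}

for start, end, label, score in match_spans(token_vecs, type_vecs):
    print(" ".join(tokens[start:end + 1]), "->", label, f"{score:.3f}")
```

Because new entity types only require new type vectors, the label set can change at inference time without retraining the scorer. Note that this sketch returns overlapping candidates (for example "Ada" and "Ada Lovelace"); a real system would need a decoding step to choose among them.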

In extensive testing, GLiNER performed well across a number of NER benchmarks and stood out especially in zero-shot evaluations. In a zero-shot setting, the model is evaluated on entity types it has not been explicitly trained on, which tests how well it generalizes and adapts to a variety of datasets.
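
The article does not say how the benchmarks are scored. As an assumption, the sketch below uses exact-match entity-level F1, a common choice for NER evaluation, where a prediction counts only if its span boundaries and its label both match the gold annotation. The function name `entity_f1` and the example data are hypothetical.

```python
def entity_f1(predicted, gold):
    """Exact-match precision, recall, and F1 over (start, end, label) tuples."""
    pred_set, gold_set = set(predicted), set(gold)
    true_pos = len(pred_set & gold_set)
    precision = true_pos / len(pred_set) if pred_set else 0.0
    recall = true_pos / len(gold_set) if gold_set else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical example: one correct entity, one span with the wrong label.
gold = [(0, 1, "person"), (3, 3, "location")]
predicted = [(0, 1, "person"), (3, 3, "organization")]

precision, recall, f1 = entity_f1(predicted, gold)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

In a zero-shot evaluation, the labels in `gold` would be entity types the model never saw during training.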

In these evaluations, GLiNER consistently outperformed both ChatGPT and fine-tuned LLMs. It also outperformed ChatGPT in eight out of ten languages not encountered during training, which points to its robustness on unseen languages and its versatility in practical NER applications.

In conclusion, GLiNER offers a compact and effective solution that balances flexibility, performance, and resource efficiency, making it a promising approach to NER. Its strong zero-shot performance across several NER benchmarks is attributed to its bidirectional transformer architecture, which allows parallel entity extraction and thereby improves speed and accuracy compared with typical LLMs. The study underscores the value of building specialized models for particular NLP tasks in order to meet the requirements of resource-constrained settings while preserving good performance.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Meet GLiNER: A Generalist AI Model for Named Entity Recognition (NER) Using a Bidirectional Transformer appeared first on MarkTechPost.