Reinforcement learning (RL) is a fascinating field of AI focused on training agents to make decisions by interacting with an environment and learning from rewards and penalties. Unlike supervised learning, which fits a model to a static labeled dataset, RL learns from the consequences of the agent’s own actions. Let’s look at the core principles of RL and explore its applications in game playing, robot control, and resource management.

Principles of Reinforcement Learning

- Agent and Environment: In RL, the agent is the learner or decision-maker interacting with the environment. The environment provides context to the agent, affecting its decisions and providing feedback through rewards or penalties. Well-known examples are the classic OpenAI Gym environments, which are widely used for training RL agents.

- State and Action: The environment is represented by different states, which define the agent’s perception of the current situation. The agent takes actions to transition from one state to another, aiming to find the most rewarding sequences of actions. For example, in chess, a state represents the positions of all pieces on the board, and an action is a move.

- Reward Signal: Rewards and penalties guide the agent’s learning. A reward signal evaluates the agent’s last action based on the resulting state. The agent aims to maximize its cumulative reward, learning from positive and negative outcomes. In video games, a reward could be points scored, while a penalty might be losing a life.

- Policy: A policy is the agent’s strategy for selecting actions based on states. It can be deterministic (a fixed action for each state) or stochastic (an action chosen probabilistically based on state). A robust policy is key to effective decision-making, guiding the agent toward favorable outcomes. DeepMind’s AlphaZero uses a sophisticated policy network to select moves in board games like chess and Go.

- Value Function: The value function predicts the expected cumulative reward obtainable from a particular state, helping the agent evaluate the long-term benefit of different actions rather than only the immediate reward. Temporal Difference (TD) learning and Monte Carlo methods are popular approaches for estimating the value function.

- Exploration and Exploitation: An agent must balance exploring new actions to discover better strategies (exploration) and leveraging known strategies to maximize rewards (exploitation). The trade-off is crucial in RL, as excessive exploration can waste time on unproductive actions, while excessive exploitation can prevent the discovery of better solutions.
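The principles above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the five-cell corridor environment, the reward values, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are all assumptions chosen for the example. It uses tabular Q-learning, which is one TD method, together with an epsilon-greedy policy to balance exploration and exploitation.

```python
import random

# Hypothetical toy environment: a corridor of 5 cells. The agent starts in
# cell 0 and the episode ends when it reaches cell 4 (the goal).
N_STATES = 5
GOAL = N_STATES - 1
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state, action):
    """Environment dynamics: return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    reward = 1.0 if done else -0.01  # goal reward, small per-step penalty
    return next_state, reward, done


def epsilon_greedy(q, state, epsilon, rng):
    """Stochastic policy: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    best = max(q[state])
    # Break ties randomly so the untrained agent does not favor one action.
    return rng.choice([a for a, v in enumerate(q[state]) if v == best])


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # action-value table Q[s][a]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = epsilon_greedy(q, state, epsilon, rng)
            next_state, reward, done = step(state, ACTIONS[a])
            # TD target: reward plus discounted value of the best next action.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = train()
# Greedy (deterministic) policy read off the learned values, per non-goal cell.
greedy_policy = [
    max(range(len(ACTIONS)), key=lambda a: q_table[s][a]) for s in range(GOAL)
]
```

After training, `greedy_policy` should select the move-right action in every non-terminal cell. Raising `epsilon` makes the agent explore more, while setting it to 0 makes it rely purely on its current estimates.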

Applications of Reinforcement Learning

- Game Playing

In game playing, RL has proven its potential by producing AI agents that outperform human champions in various games. Algorithms like Q-learning and Deep Q-Networks (DQN) enable agents to learn strong strategies over millions of iterations. For instance, DeepMind’s AlphaGo famously defeated the world champion in Go by combining supervised learning and RL to learn effective strategies. Another notable example is OpenAI Five, OpenAI’s team of Dota 2 bots, which learned to play the complex multiplayer online game by training in simulated environments. The bots used Proximal Policy Optimization (PPO) to develop strategic gameplay over millions of matches.
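For reference, the update behind Q-learning can be written compactly. The notation is the standard textbook one and is added here for illustration: $\alpha$ is the learning rate, $\gamma$ the discount factor, and $r_{t+1}$ the reward received after taking action $a_t$ in state $s_t$.

$$
Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha \left[ r_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \right]
$$

DQN keeps the same target but replaces the table with a neural network $Q(s, a; \theta)$, trained to minimize the squared TD error, where $\theta^{-}$ denotes the parameters of a periodically updated target network:

$$
L(\theta) = \mathbb{E}\left[ \left( r + \gamma \max_{a'} Q(s', a'; \theta^{-}) - Q(s, a; \theta) \right)^{2} \right]
$$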

- Robot Control

RL is crucial in enabling robots to learn and adapt to their environments. Algorithms like PPO and Soft Actor-Critic (SAC) train agents to perform tasks like walking, picking up objects, and flying drones. For instance, Boston Dynamics’ Spot robot dog utilizes RL to navigate complex terrains and perform challenging maneuvers. In simulated environments like MuJoCo, agents can safely explore different actions before applying them in the real world. This approach allows robots to gain experience in simulation, refining their skills through thousands of simulated trials before deployment.
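To make PPO slightly more concrete, the sketch below computes its clipped surrogate objective for a batch of samples in plain Python. The function name, argument names, and the default `clip_eps=0.2` are assumptions for illustration. Real implementations typically compute this on tensors inside a deep learning framework and add value-function and entropy terms.

```python
import math


def ppo_clipped_objective(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective over a batch (a quantity to maximize).

    new_log_probs / old_log_probs: log pi(a|s) under the updated and the
    data-collecting policy; advantages: advantage estimates per sample.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        ratio = math.exp(new_lp - old_lp)  # pi_new(a|s) / pi_old(a|s)
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum removes any incentive to push the ratio
        # outside [1 - clip_eps, 1 + clip_eps] in the favorable direction.
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(advantages)
```

The clipping limits how far a single update can move the policy away from the one that collected the data, which is one reason PPO is often chosen for tasks such as simulated locomotion.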

- Resource Management

RL is increasingly used to optimize the allocation of limited resources. In cloud computing, RL algorithms help optimize scheduling to minimize costs and latency by dynamically allocating resources based on workload demand. Microsoft Research’s Project PAIE is an example of using RL to optimize resource management. In energy management, RL can optimize power distribution in smart grids. By learning consumption patterns, these algorithms enable grids to distribute energy more efficiently, reduce waste, and stabilize the power supply.

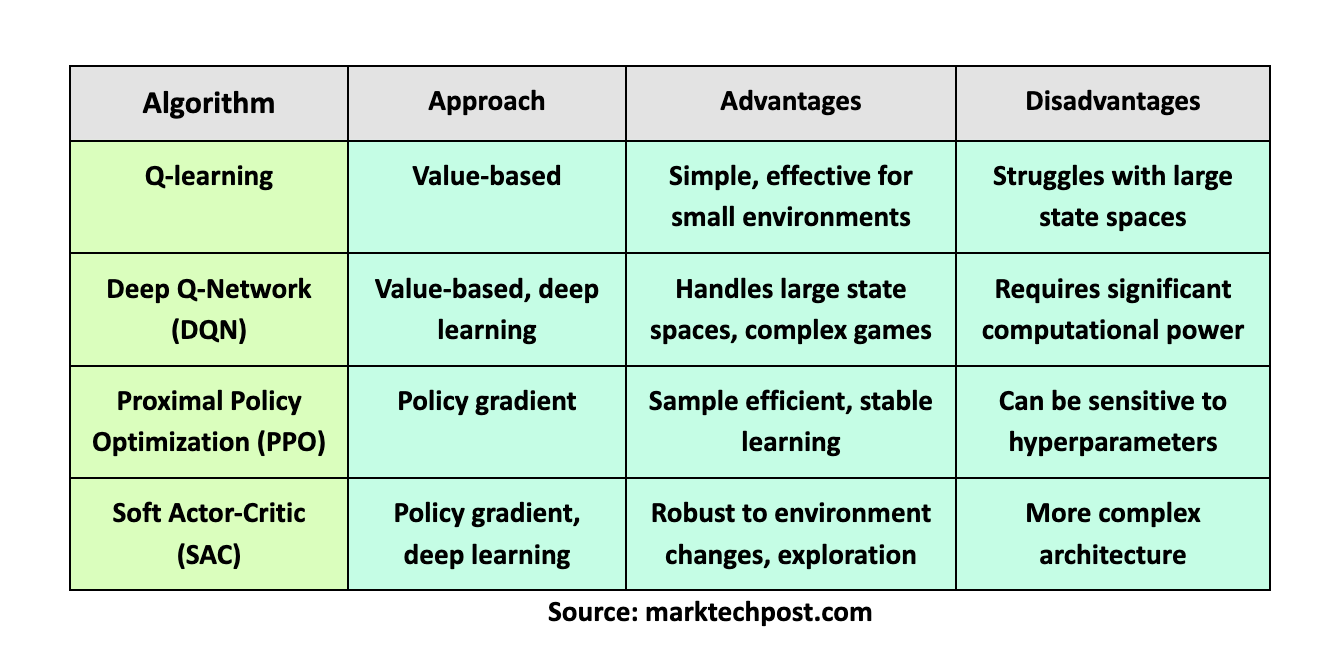

Comparing Reinforcement Learning Algorithms

Below is a comparison of popular RL algorithms:

Conclusion

RL offers a unique approach to AI by allowing agents to learn optimal behaviors through rewards and penalties. Its applications range from game playing to robotics and resource management. As RL algorithms evolve and computational capabilities expand, the potential to apply RL in more complex, real-world scenarios will only grow.

Sources

- https://deepmind.com/research/case-studies/alphago-the-story-so-far

- https://www.bostondynamics.com/spot

- https://openai.com/research/openai-five

- https://en.wikipedia.org/wiki/AlphaGo

- http://www.mujoco.org/

- https://www.microsoft.com/en-us/research/project/paie/

- https://ai.googleblog.com/2019/01/soft-actor-critic-algorithm-for.html

- https://www.microsoft.com/en-us/research/project/learned-resource-management-systems/

The post Reinforcement Learning: Training AI Agents Through Rewards and Penalties appeared first on MarkTechPost.