Accurately measuring physiological signals such as heart rate (HR) and heart rate variability (HRV) from facial videos using remote photoplethysmography (rPPG) presents several significant challenges. rPPG, a non-contact technique that analyzes subtle changes in blood volume from facial video, offers a promising solution for non-invasive health monitoring. However, capturing these minute signals accurately is difficult due to issues such as varying lighting conditions, facial movements, and the need to model long-range dependencies across extended video sequences. These challenges complicate the extraction of precise physiological signals from facial videos, which is essential for real-time applications in medical and wellness contexts.
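To make the measurement target concrete, here is a minimal sketch of how a heart rate can be read off a pulse-like trace once one has been extracted from video. It is a generic spectral-peak estimate, not PhysMamba's method: the function name `estimate_hr_bpm`, the 0.7 to 4.0 Hz search band, and the synthetic trace are all assumptions chosen for illustration.

```python
import cmath
import math

def estimate_hr_bpm(trace, fps, lo_hz=0.7, hi_hz=4.0):
    """Return the dominant frequency of `trace` inside [lo_hz, hi_hz], in bpm."""
    n = len(trace)
    mean = sum(trace) / n
    centered = [v - mean for v in trace]  # remove the constant (DC) offset
    best_freq, best_power = 0.0, -1.0
    # Naive DFT, O(n^2): acceptable for a short illustrative trace.
    for k in range(1, n // 2 + 1):
        freq = k * fps / n
        if not (lo_hz <= freq <= hi_hz):
            continue  # skip frequencies outside the assumed heart-rate band
        coeff = sum(c * cmath.exp(-2j * math.pi * k * t / n)
                    for t, c in enumerate(centered))
        power = abs(coeff) ** 2
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq * 60.0  # Hz -> beats per minute

# Synthetic 10-second trace at 30 fps: a 1.2 Hz pulse (72 bpm)
# plus a slow 0.1 Hz drift standing in for a lighting change.
fps, n = 30, 300
trace = [math.sin(2 * math.pi * 1.2 * t / fps)
         + 0.5 * math.sin(2 * math.pi * 0.1 * t / fps)
         for t in range(n)]
print(estimate_hr_bpm(trace, fps))  # close to 72 bpm
```

The band limit is what keeps the slow drift from being mistaken for a pulse in this toy case. Real facial video is much noisier, which is why learned models are used to recover the trace in the first place.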

Current methods for rPPG measurement largely rely on convolutional neural networks (CNNs) and Transformer-based models. CNNs are effective at extracting local spatial features from video frames but struggle to capture the long-range temporal dependencies required for accurate heart rate estimation. Transformers address this limitation by using self-attention to capture global spatio-temporal dependencies, but the cost of self-attention grows quadratically with sequence length, which makes long video sequences expensive to process. Both approaches are also sensitive to noise from lighting variations and facial movements, which can severely degrade the accuracy and reliability of rPPG-based measurements in real-world scenarios.

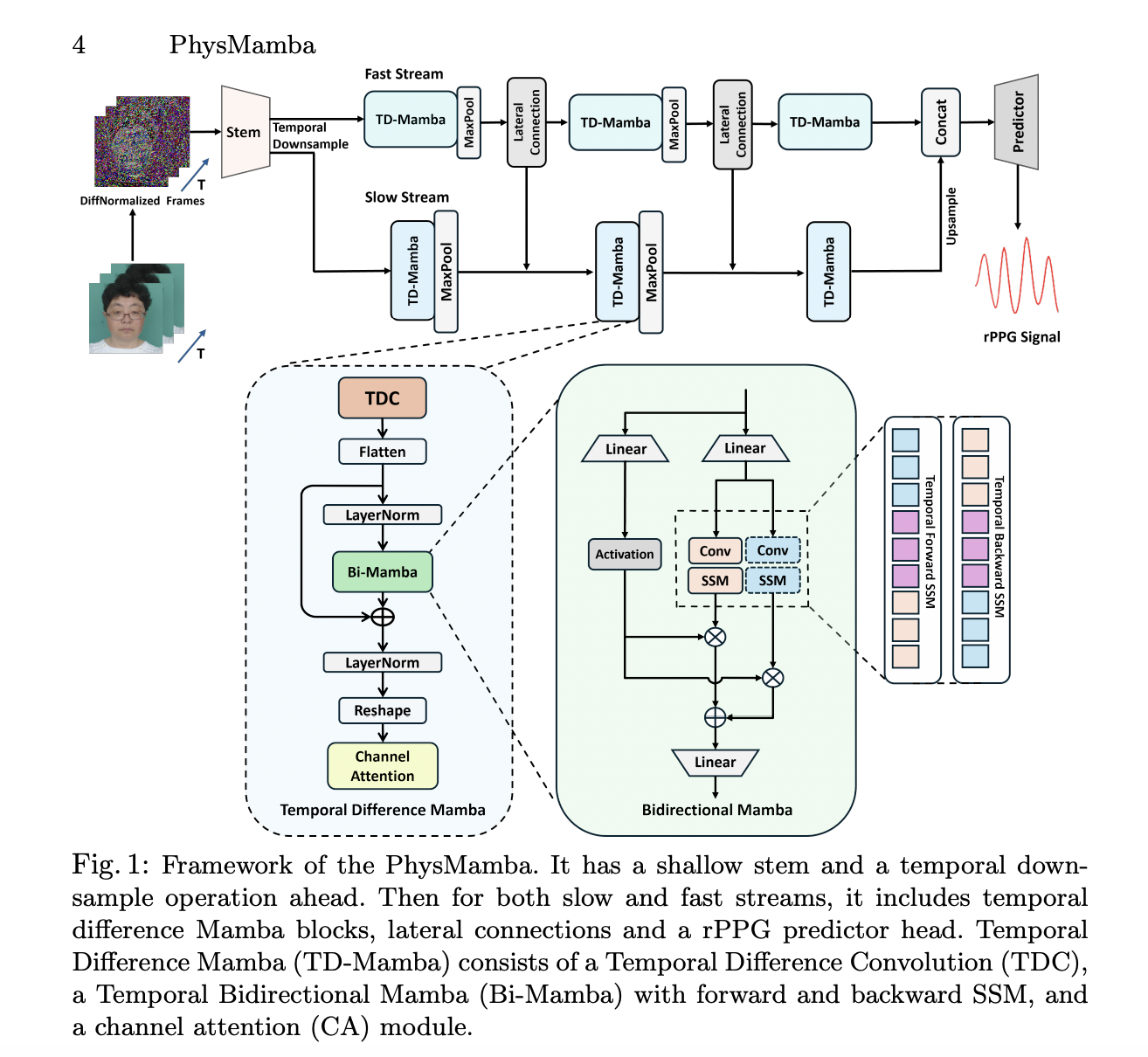

Researchers from Great Bay University introduce PhysMamba, a framework designed to address these shortcomings in physiological measurement. PhysMamba is built on the Temporal Difference Mamba (TD-Mamba) block, which combines Temporal Bidirectional Mamba (Bi-Mamba) with Temporal Difference Convolution (TDC) to capture both fine-grained local temporal dynamics and long-range dependencies from facial videos. A dual-stream SlowFast architecture processes multi-scale temporal features, integrating slow and fast streams to reduce temporal redundancy while preserving critical physiological features. According to the authors, this combination lets the model handle long video sequences efficiently while improving the accuracy of rPPG signal estimation relative to conventional CNN and Transformer approaches.
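The two ideas named above can be sketched on a plain 1D sequence. This is a toy illustration under stated assumptions, not the paper's implementation: `temporal_difference_conv` uses a simplified 3-tap form with a hypothetical `theta` weight and replicated edges, and `bidirectional_scan` uses a fixed scalar recurrence, whereas a real Mamba block learns input-dependent parameters over a multi-dimensional state.

```python
def temporal_difference_conv(x, w, theta=0.5):
    """3-tap temporal convolution with a temporal-difference term (simplified).

    y[t] = w[0]*x[t-1] + w[1]*x[t] + w[2]*x[t+1] - theta * x[t] * (w[0] + w[2])
    With theta = 1 the neighbor taps act on the differences x[t-1]-x[t] and
    x[t+1]-x[t] instead of on raw values.
    """
    n = len(x)
    padded = [x[0]] + list(x) + [x[-1]]  # replicate edges (an assumption)
    out = []
    for t in range(n):
        vanilla = w[0] * padded[t] + w[1] * padded[t + 1] + w[2] * padded[t + 2]
        diff = theta * padded[t + 1] * (w[0] + w[2])
        out.append(vanilla - diff)
    return out

def linear_scan(x, a=0.9, b=0.1):
    """First-order recurrence h[t] = a*h[t-1] + b*x[t]; one pass, O(n) time."""
    h, out = 0.0, []
    for v in x:
        h = a * h + b * v
        out.append(h)
    return out

def bidirectional_scan(x, a=0.9, b=0.1):
    """Add a forward scan and a time-reversed scan so each step sees past and future."""
    fwd = linear_scan(x, a, b)
    bwd = linear_scan(x[::-1], a, b)[::-1]
    return [f + g for f, g in zip(fwd, bwd)]

# A constant signal carries no temporal change, so with theta = 1 and a
# zero center tap the temporal-difference output vanishes everywhere.
print(temporal_difference_conv([2.0] * 5, [1.0, 0.0, 1.0], theta=1.0))
# An impulse in the middle spreads symmetrically under the bidirectional scan.
print(bidirectional_scan([0.0, 1.0, 0.0], a=0.5, b=1.0))
```

The scan touches each time step once per direction, so its cost grows linearly with sequence length. That linear scaling is the property that makes state-space models attractive for long videos.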

The architecture of PhysMamba consists of a shallow stem for initial feature extraction, followed by three TD-Mamba blocks and an rPPG prediction head. The TD-Mamba block incorporates TDC to refine local temporal features, Bi-Mamba to capture long-range dependencies, and channel attention to reduce redundancy across feature channels. The SlowFast architecture processes slow and fast temporal features in parallel, enhancing the model’s ability to capture both short-term and long-range spatio-temporal dynamics. This method was tested on three benchmark datasets—PURE, UBFC-rPPG, and MMPD—using standard evaluation metrics, including Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), and Pearson’s correlation coefficient (ρ), with heart rate measured in beats per minute (bpm).
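For readers unfamiliar with the three metrics, the sketch below computes them from paired heart rate values in bpm. The function names and the sample numbers are made up for illustration and are not taken from the paper or its datasets.

```python
import math

def mae(pred, true):
    """Mean Absolute Error: average magnitude of the per-sample error."""
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(pred)

def rmse(pred, true):
    """Root Mean Squared Error: penalizes large errors more than MAE does."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(pred))

def pearson(pred, true):
    """Pearson correlation: how linearly the predictions track the ground truth."""
    n = len(pred)
    mp, mt = sum(pred) / n, sum(true) / n
    cov = sum((p - mp) * (t - mt) for p, t in zip(pred, true))
    var_p = sum((p - mp) ** 2 for p in pred)
    var_t = sum((t - mt) ** 2 for t in true)
    return cov / math.sqrt(var_p * var_t)

# Hypothetical predicted vs. reference heart rates (bpm) for three clips.
predicted = [60.0, 72.0, 80.0]
reference = [61.0, 70.0, 80.0]
print(mae(predicted, reference))      # average absolute error in bpm
print(rmse(predicted, reference))     # root mean squared error in bpm
print(pearson(predicted, reference))  # unitless, between -1 and 1
```

Lower is better for MAE and RMSE, while a correlation closer to 1 indicates that predictions rise and fall with the reference values.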

PhysMamba reports strong results on the benchmark datasets. On the PURE dataset, it delivered an MAE of 0.25 bpm and an RMSE of 0.4 bpm, outperforming previous models such as PhysFormer and EfficientPhys. On the UBFC-rPPG dataset, it achieved an MAE of 0.54 bpm and an RMSE of 0.76 bpm. In cross-dataset evaluations, PhysMamba is reported to outperform competing models while maintaining computational efficiency, which makes it a promising candidate for real-time heart rate monitoring from facial videos.

PhysMamba offers a solution for non-contact physiological measurement that addresses a key limitation of earlier models: capturing long-range spatio-temporal dependencies from facial videos. The integration of the TD-Mamba block and the dual-stream SlowFast architecture enables more accurate and efficient rPPG signal extraction, with better reported performance than prior methods across multiple datasets. By advancing the state of the art in rPPG-based heart rate estimation, PhysMamba shows potential for real-time, non-invasive physiological monitoring in healthcare and beyond.

All credit for this research goes to the researchers of this project.

The post What if Facial Videos Could Measure Your Heart Rate? This AI Paper Unveils PhysMamba and Its Efficient Remote Physiological Solution appeared first on MarkTechPost.