A significant challenge in deploying large language models (LLMs) and latent variable models (LVMs) is balancing low inference overhead with the ability to rapidly switch adapters. Traditional methods such as Low Rank Adaptation (LoRA) either fuse adapter parameters into the base model weights, losing rapid switching capability, or maintain adapter parameters separately, incurring significant latency. Additionally, existing methods struggle with concept loss when multiple adapters are used concurrently. Addressing these issues is critical for deploying AI models in resource-constrained environments like mobile devices and ensuring robust performance across diverse applications.

LoRA and its variants are the primary techniques used to adapt large generative models. LoRA is favored for its efficiency during training and inference, but it modifies a significant portion of the base model’s weights when fused, leading to large memory and latency costs during rapid switching. In unfused mode, LoRA incurs up to 30% higher inference latency. Furthermore, LoRA suffers from concept loss in multi-adapter settings, where different adapters overwrite each other’s influence, degrading the model’s performance. Sparse adaptation techniques have been explored, but they often require complex implementations and do not fully address rapid switching and concept retention issues.

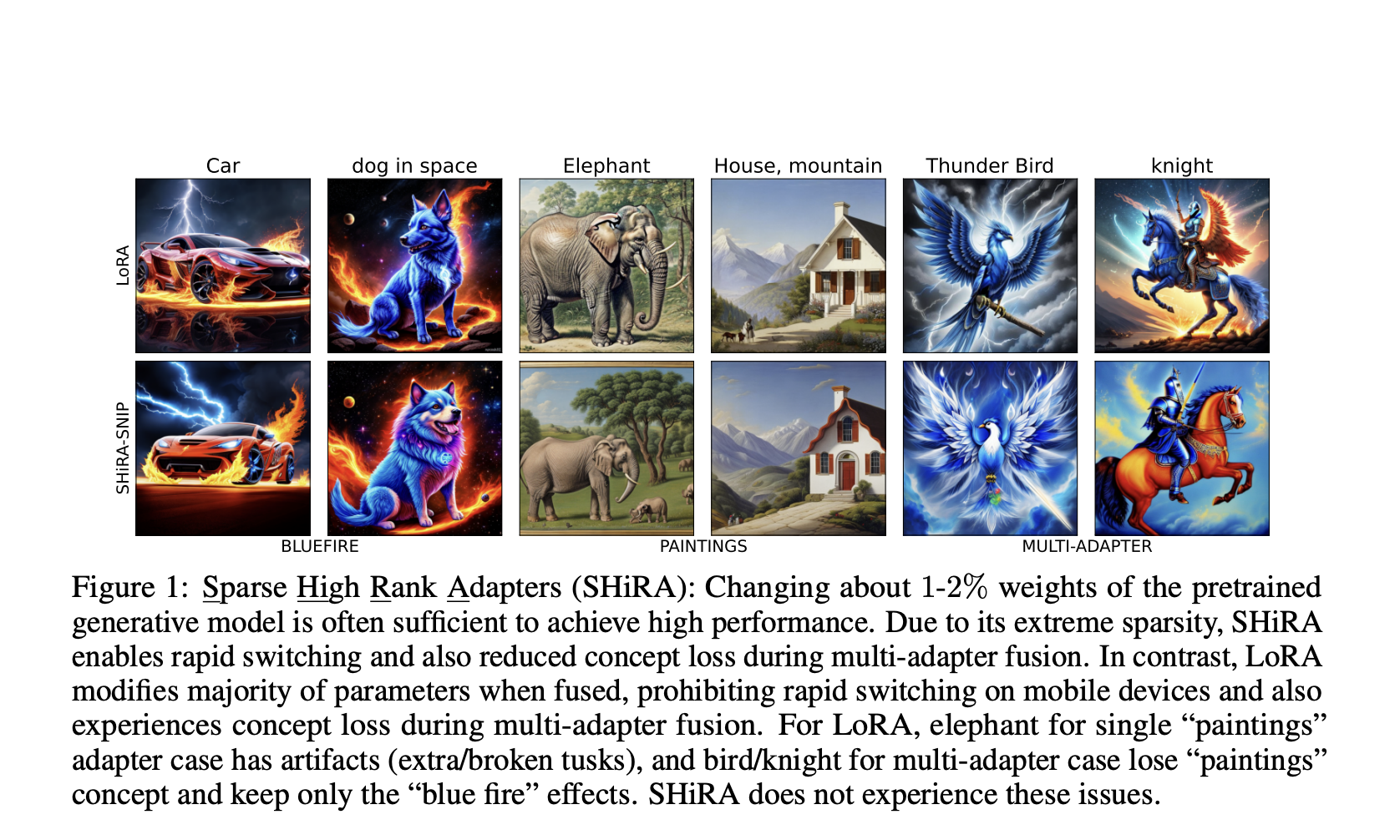

Researchers from Qualcomm AI propose Sparse High Rank Adapters (SHiRA), a highly sparse adapter framework that modifies only 1-2% of the base model’s weights. This framework enables rapid switching by minimizing the number of weights that need to be updated and mitigates concept loss through its sparse structure. The researchers leveraged gradient masking to update only the most critical weights during training, maintaining high performance with minimal parameter changes. SHiRA’s design ensures that it remains lightweight and efficient, making it suitable for deployment on mobile devices and other resource-constrained environments.

The SHiRA framework is implemented using a gradient masking technique where a sparse mask determines which weights are trainable. Various strategies to create these masks include random selection, weight magnitude, gradient magnitude, and SNIP (Sensitivity-based Pruning). The adapters can be rapidly switched by storing only the non-zero weights and their indices and applying them using efficient scatter operations during inference. The researchers also provide a memory- and latency-efficient implementation based on the Parameter-Efficient Fine-Tuning (PEFT) library, which reduces GPU memory usage by 16% compared to standard LoRA and trains at nearly the same speed.

SHiRA demonstrates superior performance in extensive experiments on both LLMs and LVMs. The approach consistently outperforms traditional LoRA methods, achieving up to 2.7% higher accuracy in commonsense reasoning tasks. Additionally, SHiRA maintains high image quality in style transfer tasks, effectively addressing the concept loss issues that plague LoRA. SHiRA achieves significantly higher HPSv2 scores on style transfer datasets, indicating superior image generation quality. By modifying only 1-2% of the base model’s weights, SHiRA ensures rapid adapter switching and minimal inference overhead, making it highly efficient and practical for deployment in resource-constrained environments such as mobile devices.

In conclusion, Sparse High Rank Adapters (SHiRA) represent a significant advancement in adapter techniques for AI models. SHiRA addresses critical challenges of rapid adapter switching and concept loss in multi-adapter settings while maintaining low inference overhead. By modifying only 1-2% of the base model’s weights, this approach offers a practical and efficient solution for deploying large models in resource-constrained environments, thus advancing the field of AI research and deployment.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter.

Join our Telegram Channel and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 45k+ ML SubReddit

The post Revolutionizing Adapter Techniques: Qualcomm AI’s Sparse High Rank Adapters (SHiRA) for Efficient and Rapid Deployment in Large Language Models appeared first on MarkTechPost.