Timescale, the PostgreSQL cloud database company, has introduced two open-source extensions, pgvectorscale and pgai. According to Timescale’s benchmarks, they make PostgreSQL faster than Pinecone for AI workloads and about 75% cheaper when self-hosted. Let’s explore how these extensions work and what they mean for AI application development.

Introduction to pgvectorscale and pgai

Timescale unveiled the pgvectorscale and pgai extensions to improve PostgreSQL’s scalability and usability for AI applications. Both are released under the open-source PostgreSQL license, so developers can build retrieval-augmented generation, search, and AI agent applications with PostgreSQL at a fraction of the cost of specialized vector databases like Pinecone.

Innovations in AI Application Performance

pgvectorscale is designed to help developers build more scalable AI applications, with higher-performance embedding search and cost-efficient storage. It introduces two significant innovations:

- StreamingDiskANN index: Adapted from Microsoft research, this index significantly enhances query performance.

- Statistical Binary Quantization: Developed by Timescale researchers, this technique improves on standard Binary Quantization, leading to substantial performance gains.
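To make the quantization idea concrete, here is a minimal Python sketch. Standard binary quantization keeps one bit per dimension by thresholding each value at zero. One way to improve on it is to threshold each dimension at a statistic of the data, such as its mean. The mean-threshold variant below is an illustrative assumption about the general approach, not pgvectorscale's actual implementation, and the toy vectors are made up.

```python
from statistics import mean

def binary_quantize(vec, thresholds=None):
    """Compress a vector to 1 bit per dimension: 1 if above the threshold, else 0."""
    if thresholds is None:
        # Standard binary quantization: every dimension is cut at zero.
        thresholds = [0.0] * len(vec)
    return [1 if x > t else 0 for x, t in zip(vec, thresholds)]

def per_dimension_means(vectors):
    """Mean of each dimension across the dataset (assumed 'statistical' cutoff)."""
    return [mean(column) for column in zip(*vectors)]

def hamming(a, b):
    """Number of differing bits, used as a cheap distance between codes."""
    return sum(x != y for x, y in zip(a, b))

# Toy data: dimensions 0 and 2 are always positive, so a zero cutoff
# assigns them the same bit for every vector and they carry no information.
vectors = [
    [0.9, 0.2, 5.1],
    [0.8, -0.1, 4.9],
    [0.1, 0.3, 5.3],
    [0.2, -0.2, 4.7],
]

standard = [binary_quantize(v) for v in vectors]
means = per_dimension_means(vectors)
statistical = [binary_quantize(v, means) for v in vectors]

print("zero cutoff:", standard)
print("mean cutoff:", statistical)
```

With a zero cutoff, several of the toy vectors collapse to identical codes. With per-dimension means, every vector gets a distinct code, which is the intuition behind choosing data-driven thresholds.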

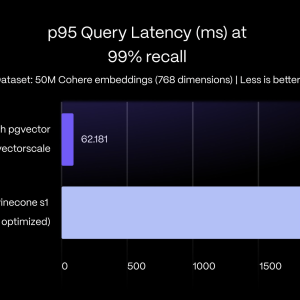

Timescale’s benchmarks, run on a dataset of 50 million Cohere embeddings of 768 dimensions each, show that PostgreSQL with pgvector and pgvectorscale achieves 28x lower p95 latency and 16x higher query throughput than Pinecone’s storage-optimized index for approximate nearest neighbor queries at 99% recall. Unlike pgvector, which is written in C, pgvectorscale is developed in Rust, opening new avenues for the PostgreSQL community to contribute to vector support.
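For readers unfamiliar with the metrics in these benchmarks, the following sketch shows how recall, p95 latency, and query throughput are commonly computed. It is a generic illustration with made-up sample data, not Timescale's benchmark harness, and the nearest-rank percentile method is one of several conventions.

```python
import math

def recall_at_k(approx_ids, exact_ids):
    """Fraction of the true nearest neighbors that the approximate search returned."""
    exact = set(exact_ids)
    return len(exact.intersection(approx_ids)) / len(exact)

def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def throughput_qps(num_queries, wall_seconds):
    """Queries completed per second of wall-clock time."""
    return num_queries / wall_seconds

# Hypothetical results for one query: 9 of the 10 true neighbors were found.
exact_neighbors = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
approx_neighbors = [1, 2, 3, 4, 5, 6, 7, 8, 9, 42]

# Hypothetical latencies in milliseconds for 20 queries.
latencies_ms = [10] * 18 + [50, 200]

print("recall:", recall_at_k(approx_neighbors, exact_neighbors))
print("p95 latency (ms):", percentile(latencies_ms, 95))
print("throughput (qps):", throughput_qps(len(latencies_ms), 0.5))
```

A p95 figure describes the slow tail of queries rather than the average, which is why it is often quoted alongside throughput.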

pgai simplifies the development of search and retrieval-augmented generation (RAG) applications. It allows developers to create OpenAI embeddings and obtain OpenAI chat completions directly within PostgreSQL. This integration facilitates tasks such as classification, summarization, and data enrichment on existing relational data, streamlining the development process from proof of concept to production.
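As a rough sketch of what this looks like from application code, the Python below sends SQL that asks the database to create the embedding itself. It assumes the `ai.openai_embed` function that pgai documents (early releases exposed it without the `ai.` schema prefix, so check your installed version), an OpenAI API key already configured for the session, and a psycopg-style connection. The `documents` table and its `id` and `embedding` columns are hypothetical.

```python
# SQL assumes pgai's ai.openai_embed(model, text); name and schema may vary by version.
EMBED_SQL = "SELECT ai.openai_embed(%s, %s)"

# Hypothetical table: documents(id, embedding vector). The <=> operator is
# pgvector's cosine distance, so the closest rows sort first.
SEARCH_SQL = (
    "SELECT id FROM documents "
    "ORDER BY embedding <=> ai.openai_embed(%s, %s)::vector "
    "LIMIT %s"
)

def embed_in_database(conn, text, model="text-embedding-3-small"):
    """Ask PostgreSQL to call OpenAI and return the embedding for `text`."""
    with conn.cursor() as cur:
        cur.execute(EMBED_SQL, (model, text))
        return cur.fetchone()[0]

def search_documents(conn, query, limit=5, model="text-embedding-3-small"):
    """Embed the query inside the database and return the ids of the nearest rows."""
    with conn.cursor() as cur:
        cur.execute(SEARCH_SQL, (model, query, limit))
        return [row[0] for row in cur.fetchall()]
```

Because the embedding call happens in SQL, the application never handles the query vector, and the same function can be used in an `UPDATE` to refresh stored embeddings.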

Real-World Impact and Developer Feedback

Web Begole, CTO of Market Reader, praised the new extensions: “Pgvectorscale and pgai are incredibly exciting for building AI applications with PostgreSQL. Having embedding functions directly within the database is a huge bonus.” Generating embeddings inside the database promises to make updating saved embeddings simpler and more efficient, saving significant time and effort.

John McBride, Head of Infrastructure at OpenSauced, also highlighted the value of these extensions: “Pgvectorscale and pgai are great additions to the PostgreSQL AI ecosystem. The introduction of Statistical Binary Quantization promises lightning performance for vector search, which will be valuable as users scale the vector workload.”

Challenging Specialized Vector Databases

The primary advantage of dedicated vector databases like Pinecone has been their performance, thanks to purpose-built architectures for storing and searching large volumes of vector data. However, Timescale’s pgvectorscale challenges this notion by integrating specialized architectures and algorithms into PostgreSQL. According to Timescale’s benchmarks, PostgreSQL with pgvectorscale achieves 1.4x lower p95 latency and 1.5x higher query throughput than Pinecone’s performance-optimized index at 90% recall.

Cost Benefits and Accessibility

The cost benefits of using PostgreSQL with pgvector and pgvectorscale are substantial. By Timescale’s figures, self-hosting PostgreSQL is roughly 75% to 79% cheaper than using Pinecone. Specifically, PostgreSQL costs about $835 per month on AWS EC2, compared to Pinecone’s $3,241 per month for the storage-optimized index and $3,889 per month for the performance-optimized index.
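The savings can be worked out directly from the monthly figures quoted above. This small calculation uses only those three numbers; the function name is just for illustration.

```python
def percent_cheaper(our_cost, their_cost):
    """Percentage saved by paying our_cost instead of their_cost."""
    return (1 - our_cost / their_cost) * 100

postgres_monthly = 835             # self-hosted PostgreSQL on AWS EC2
pinecone_storage_monthly = 3241    # Pinecone storage-optimized index
pinecone_perf_monthly = 3889       # Pinecone performance-optimized index

vs_storage = percent_cheaper(postgres_monthly, pinecone_storage_monthly)
vs_perf = percent_cheaper(postgres_monthly, pinecone_perf_monthly)

print(f"vs storage-optimized: {vs_storage:.0f}% cheaper")      # about 74%
print(f"vs performance-optimized: {vs_perf:.0f}% cheaper")     # about 79%
```

Expressed as a ratio, Pinecone costs roughly 3.9 to 4.7 times as much per month in this comparison.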

The Future of AI Applications with PostgreSQL

Timescale’s new extensions reinforce the “PostgreSQL for Everything” movement, where developers aim to simplify complex data architectures by leveraging PostgreSQL’s robust ecosystem. Ajay Kulkarni, CEO of Timescale, emphasized the company’s mission: “By open-sourcing pgvectorscale and pgai, Timescale aims to establish PostgreSQL as the default database for AI applications. This eliminates the need for separate vector databases and simplifies the data architecture for developers as they scale.”

Conclusion

The introduction of pgvectorscale and pgai marks a significant milestone for AI workloads on PostgreSQL. By making PostgreSQL faster than Pinecone in Timescale’s benchmarks and roughly 75% cheaper to self-host, Timescale sets a new bar for performance and cost-efficiency. These extensions extend PostgreSQL’s capabilities and broaden access to high-performance tools for AI application development.

Sources

- https://www.timescale.com/newsroom/postgresql-is-now-faster-than-pinecone-75-cheaper-with-new-open-source

- https://github.com/timescale/pgvectorscale

- https://x.com/avthars/status/1800517917194305842

The post A New Era AI Databases: PostgreSQL with pgvectorscale Outperforms Pinecone and Cuts Costs by 75% with New Open-Source Extensions appeared first on MarkTechPost.