Natural language processing (NLP) has advanced rapidly in recent years, largely through large language models (LLMs). Most of that progress, however, has concentrated on data-rich languages such as English, leaving many underrepresented languages and dialects behind. Moroccan Arabic, also known as Darija, is one such dialect: it has received very little attention despite being the main form of daily communication for over 40 million people. Because it lacks extensive datasets, settled grammatical standards, and suitable benchmarks, Darija is classified as a low-resource language and has often been neglected by LLM developers. Incorporating Darija into LLMs is further complicated by its mix of Modern Standard Arabic (MSA), Amazigh, French, and Spanish, and by a written form that is still emerging and lacks standardization. The result is an asymmetry in which dialectal Arabic such as Darija is marginalized despite its widespread use, limiting how well AI models can serve its speakers.

Meet Atlas-Chat

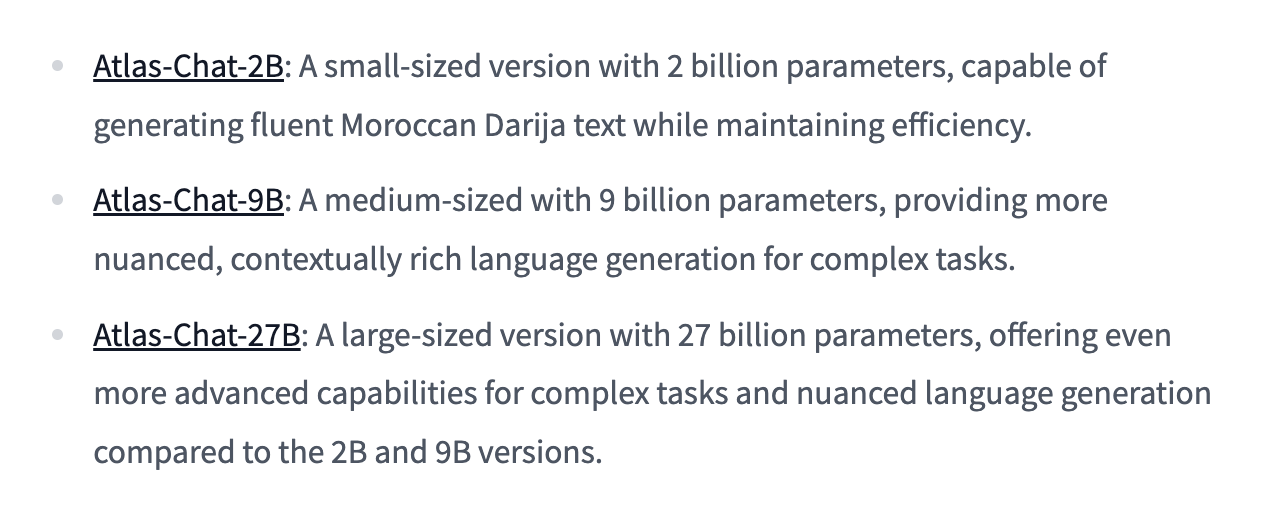

The Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) has released Atlas-Chat, a family of open, instruction-tuned models designed specifically for Darija, the colloquial Arabic of Morocco. The release is a significant step toward addressing the challenges posed by low-resource languages. Atlas-Chat comes in three sizes (2 billion, 9 billion, and 27 billion parameters), so users can trade capability against compute depending on their needs. Because the models are instruction-tuned, they can handle tasks such as conversational interaction, translation, summarization, and content creation in Darija. They are also intended to support cultural research into Morocco’s linguistic heritage. The initiative fits the broader goal of making advanced AI accessible to communities that have been underrepresented in the AI landscape, helping to narrow the gap between resource-rich and low-resource languages.
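As a rough illustration of what instruction-tuned use could look like in practice, the sketch below wraps a task as a single-turn chat message and sends it through the Hugging Face `transformers` text-generation pipeline. Several things here are assumptions rather than details from the release: the repository name in `MODEL_ID`, the English prompt wording in `TASK_TEMPLATES`, and the helper names `build_messages` and `generate` are all hypothetical and should be checked against the official model page.

```python
from typing import Dict, List

# Assumption: Hugging Face repository name; confirm on the model page before use.
MODEL_ID = "MBZUAI-Paris/Atlas-Chat-9B"

# Hypothetical instruction templates, written in English for readability.
TASK_TEMPLATES: Dict[str, str] = {
    "translate": "Translate the following text into Moroccan Darija:\n{text}",
    "summarize": "Summarize the following Darija text in two sentences:\n{text}",
    "chat": "{text}",
}


def build_messages(task: str, text: str) -> List[Dict[str, str]]:
    """Wrap one request as a single-turn chat in role/content format."""
    if task not in TASK_TEMPLATES:
        raise ValueError(f"unknown task: {task!r}")
    return [{"role": "user", "content": TASK_TEMPLATES[task].format(text=text)}]


def generate(task: str, text: str, max_new_tokens: int = 256) -> str:
    """Download the model and answer one request.

    Requires transformers, torch, and accelerate, plus enough GPU memory
    for the chosen checkpoint.
    """
    # Imported lazily so build_messages works without these packages installed.
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=MODEL_ID,
        torch_dtype=torch.bfloat16,
        device_map="auto",
    )
    outputs = pipe(build_messages(task, text), max_new_tokens=max_new_tokens)
    # For chat-style input the pipeline returns the whole conversation;
    # the last message is the model's reply.
    return outputs[0]["generated_text"][-1]["content"]
```

Calling `generate("translate", "Good morning")` would download the weights on first use, so `build_messages` is kept separate to let the prompt format be inspected without loading anything.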

Technical Details and Benefits of Atlas-Chat

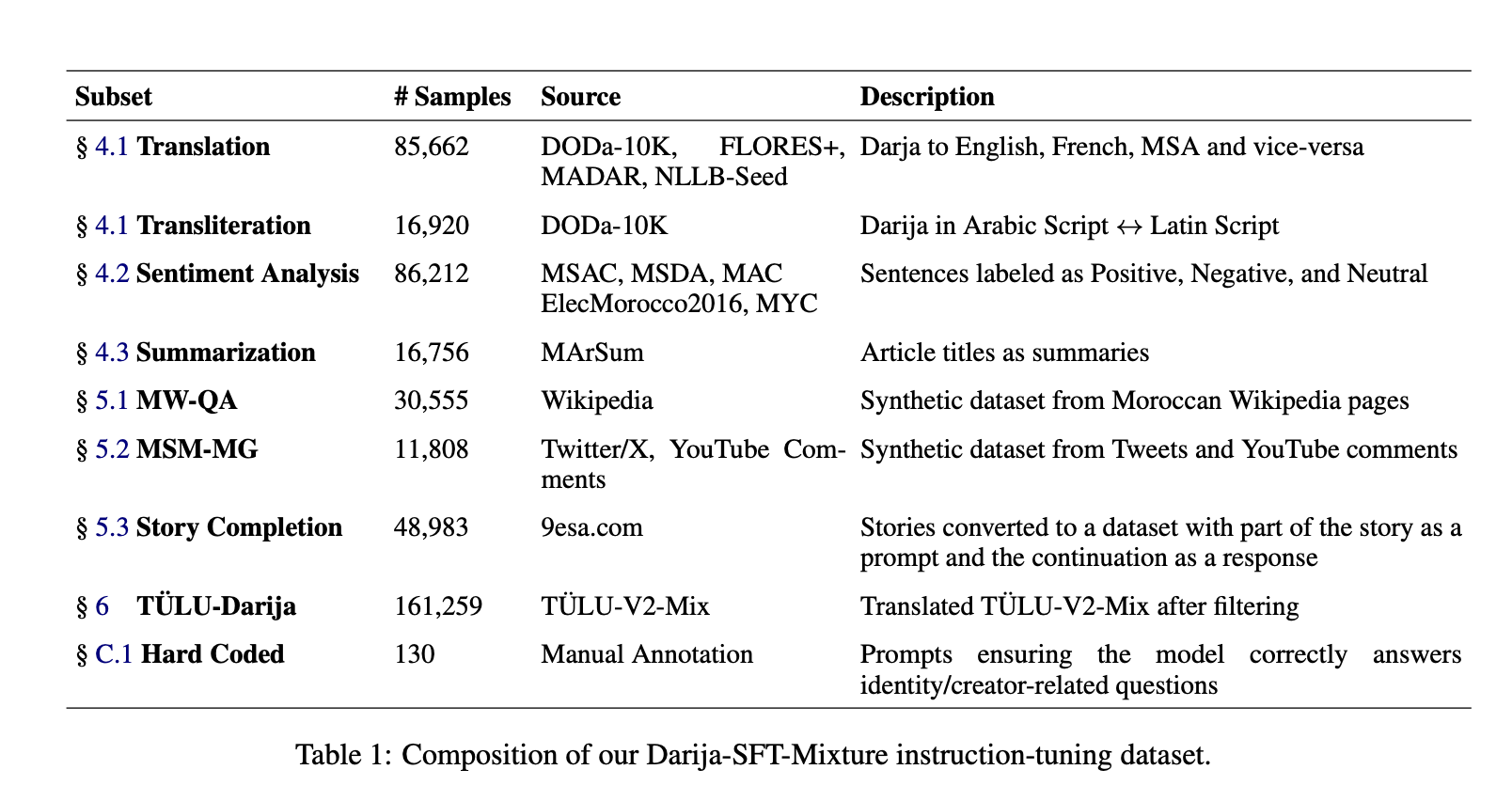

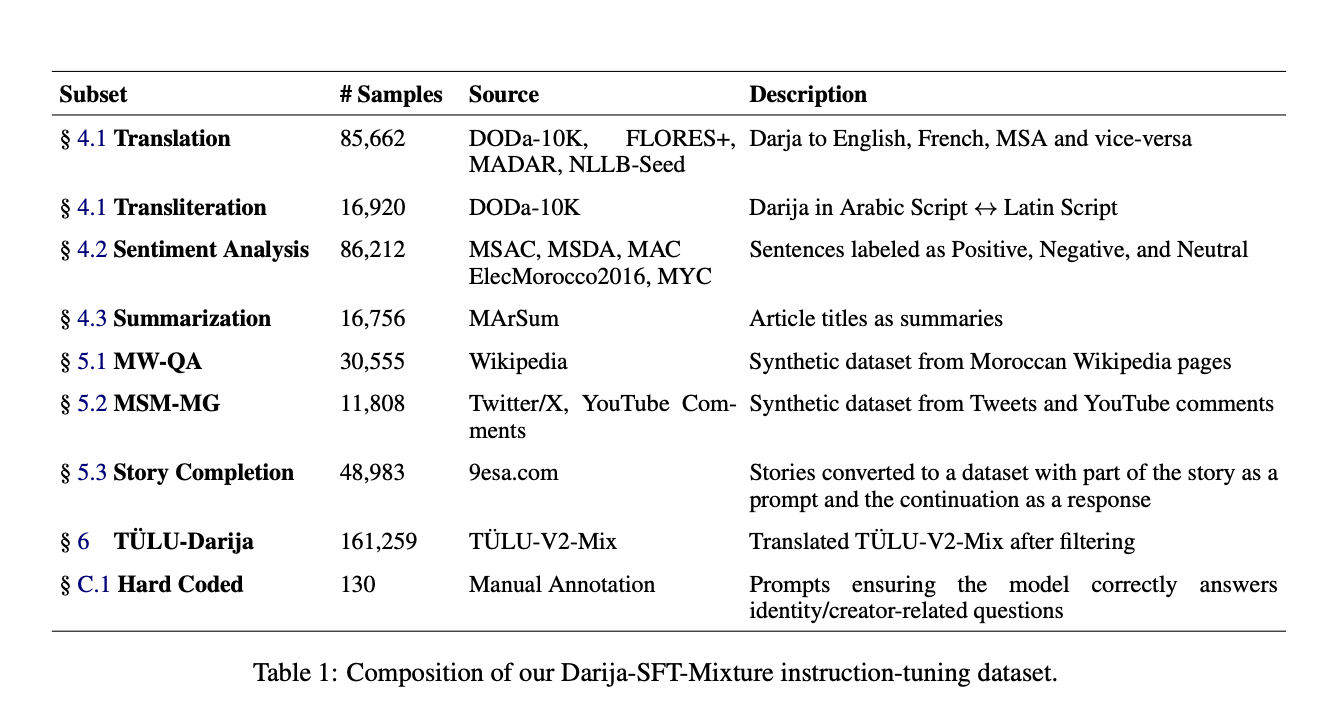

The Atlas-Chat models were developed by consolidating existing Darija language resources and creating new datasets through both manual and synthetic means. The resulting Darija-SFT-Mixture dataset contains 458,000 instruction samples, drawn from existing resources and from synthetic generation based on sources such as Wikipedia and YouTube. In addition, high-quality English instruction datasets were translated into Darija under rigorous quality control. The models were then fine-tuned on this dataset, with the Gemma 2 models among the base models used. According to the reported results, Atlas-Chat outperforms other Arabic-specialized LLMs, such as Jais and AceGPT, by significant margins. For instance, on the newly introduced DarijaMMLU benchmark, part of a comprehensive evaluation suite for Darija covering discriminative and generative tasks, Atlas-Chat achieved a 13% performance boost over a larger 13-billion-parameter model. These results point to stronger instruction following, more culturally relevant responses, and better performance on standard NLP tasks in Darija.

Why Atlas-Chat Matters

Atlas-Chat matters for several reasons. First, it addresses a long-standing gap in AI development by focusing on an underrepresented language. Moroccan Arabic, with its complex cultural and linguistic makeup, is often neglected in favor of MSA or other, more data-rich dialects. With Atlas-Chat, MBZUAI has provided a tool for communication and content creation in Darija, supporting applications such as conversational agents, automated summarization, and more nuanced cultural research. Second, by offering models at several parameter sizes, Atlas-Chat gives users flexibility, from lightweight applications that need fewer computational resources to more demanding tasks. Third, the evaluation results are strong: Atlas-Chat-9B scored 58.23% on the DarijaMMLU benchmark, significantly outperforming state-of-the-art models like AceGPT-13B. These results indicate that Atlas-Chat can deliver high-quality language understanding for Moroccan Arabic speakers.
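To make the size trade-off concrete, here is a back-of-the-envelope sketch that estimates how much memory the weights alone would occupy at different numeric precisions. It assumes the nominal parameter counts from the model names (2, 9, and 27 billion) and counts weights only, so activations, the KV cache, and framework overhead would add to these figures. The names `weight_memory_gb` and `largest_model_that_fits` are hypothetical helpers for this illustration.

```python
from typing import Optional

# Nominal parameter counts in billions, taken from the model names (assumption:
# the exact counts of the released checkpoints may differ somewhat).
MODEL_SIZES_B = {"Atlas-Chat-2B": 2, "Atlas-Chat-9B": 9, "Atlas-Chat-27B": 27}

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, dtype: str = "bfloat16") -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes)."""
    # (params_billion * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billion * BYTES_PER_PARAM[dtype]


def largest_model_that_fits(budget_gb: float, dtype: str = "bfloat16") -> Optional[str]:
    """Return the largest model whose weights fit in the budget, or None."""
    fitting = [
        (size, name)
        for name, size in MODEL_SIZES_B.items()
        if weight_memory_gb(size, dtype) <= budget_gb
    ]
    return max(fitting)[1] if fitting else None


for name, size in MODEL_SIZES_B.items():
    print(f"{name}: ~{weight_memory_gb(size):.0f} GB of weights in bfloat16")
```

Under these assumptions, a 24 GB budget would hold the 9B weights in bfloat16, while the 27B model would need lower precision or more memory.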

Conclusion

Atlas-Chat represents a significant advance for Moroccan Arabic and a model for work on other low-resource dialects. By building a robust, open solution for Darija, MBZUAI is taking a major step toward making advanced AI accessible to a broader audience, letting users interact with technology in their own language and cultural context. The work addresses the asymmetry in AI support for low-resource languages and sets a precedent for future development in underrepresented linguistic domains. As AI continues to evolve, initiatives like Atlas-Chat help ensure that the benefits of the technology are available to everyone, regardless of the language they speak. With further refinement, Atlas-Chat could help close the communication gap and improve the digital experience for millions of Darija speakers.

Check out the Paper and Models on Hugging Face. All credit for this research goes to the researchers of this project.

The post MBZUAI Researchers Release Atlas-Chat (2B, 9B, and 27B): A Family of Open Models Instruction-Tuned for Darija (Moroccan Arabic) appeared first on MarkTechPost.