AI’s rapid rise has been driven by powerful language models, transforming industries from customer service to content creation. However, many languages, particularly those from smaller linguistic communities, lack access to cutting-edge AI tools. Vietnamese, spoken by over 90 million people, is one such underserved language. With most AI advancements focusing on major global languages, reliable AI tools in Vietnamese remain scarce, posing challenges for businesses, educators, and local communities. Arcee AI aims to bridge this gap with advanced small language models (SLMs) tailored to underrepresented languages.

Arcee AI Releases Arcee-VyLinh: A Powerful 3B Vietnamese Language Model

Arcee AI has announced the release of Arcee-VyLinh, a new small language model with 3 billion parameters. Arcee-VyLinh is based on the Qwen2.5-3B architecture and has a context length of 32K tokens, which makes it suitable for a wide range of tasks. It is purpose-built for the Vietnamese language, delivering high performance while keeping computational demands manageable. What sets Arcee-VyLinh apart is its ability to outperform models of similar size, and even some larger competitors, on various natural language processing tasks. This is a crucial milestone, given that Vietnamese has been largely neglected by mainstream AI models. Arcee-VyLinh aims to change this narrative, pushing the boundaries of what a smaller, efficient language model can achieve while improving the AI landscape for millions of Vietnamese speakers.

Technical Highlights and Benefits

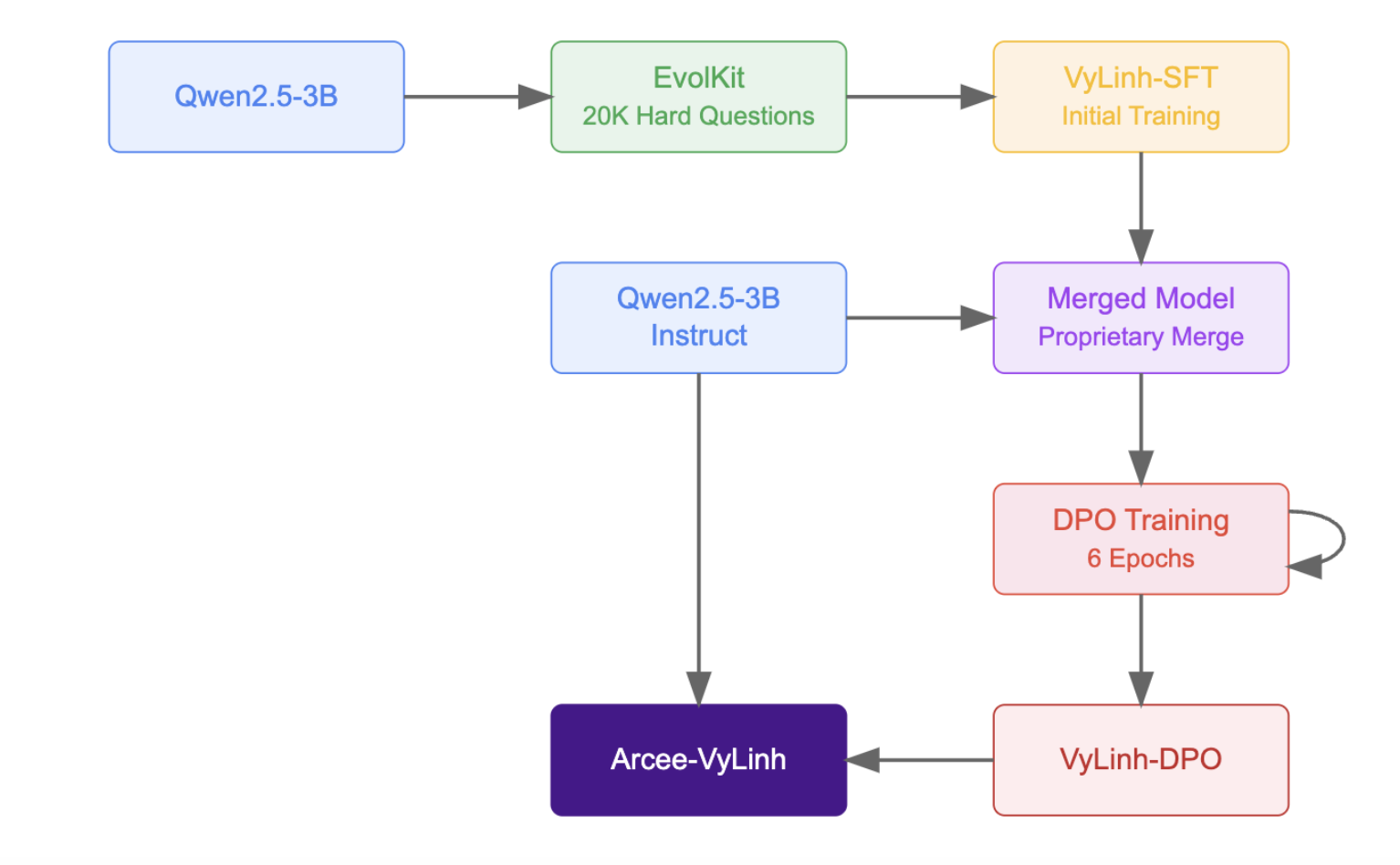

Arcee-VyLinh employs a multi-stage training process designed to maximize language capability and efficiency. The process combines EvolKit, a proprietary model-merging technique, and iterative Direct Preference Optimization (DPO) to improve language understanding while keeping the model efficient. It is trained on a custom-evolved dataset combined with ORPO-Mix-40K in Vietnamese, which provides rich language representation. Arcee-VyLinh supports both English and Vietnamese inputs, with optimizations specifically for Vietnamese, making it practical for a range of applications.

The result is a compact yet capable model that delivers robust language generation and comprehension without the computational footprint typically associated with larger models. According to Arcee AI, this lets Arcee-VyLinh handle tasks like conversational AI, language translation, and content moderation while remaining cost-effective. The company's emphasis on a small language model capable of “punching above its weight” is meant to give Arcee-VyLinh output quality comparable to larger models at lower computational cost.
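To make the iterative DPO stage mentioned above more concrete, here is a minimal sketch of the standard Direct Preference Optimization objective for a single preference pair. It is not Arcee AI's training code: the function names, the example log-probabilities, and the `beta` value of 0.1 are assumptions chosen for illustration. The loss rewards the policy for raising the likelihood of the preferred answer, relative to a frozen reference model, more than it raises the likelihood of the rejected answer.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value; the article does not state one
) -> float:
    """Standard DPO loss for one (chosen, rejected) response pair.

    Each argument is the summed log-probability of a full response
    under either the trainable policy or the frozen reference model.
    """
    # How much the policy has moved away from the reference on each response.
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    # The loss shrinks as the chosen response gains more than the rejected one.
    return -log_sigmoid(beta * (chosen_shift - rejected_shift))


# Hypothetical log-probabilities, for illustration only.
untrained = dpo_loss(-10.0, -12.0, -10.0, -12.0)  # policy equals reference
improved = dpo_loss(-8.0, -14.0, -10.0, -12.0)    # policy prefers the chosen answer
```

In an iterative setup, a loop of this kind would be repeated over several rounds, with fresh preference pairs generated between rounds; the article does not describe how Arcee AI builds those pairs, so that part is left out here.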

Performance Analysis

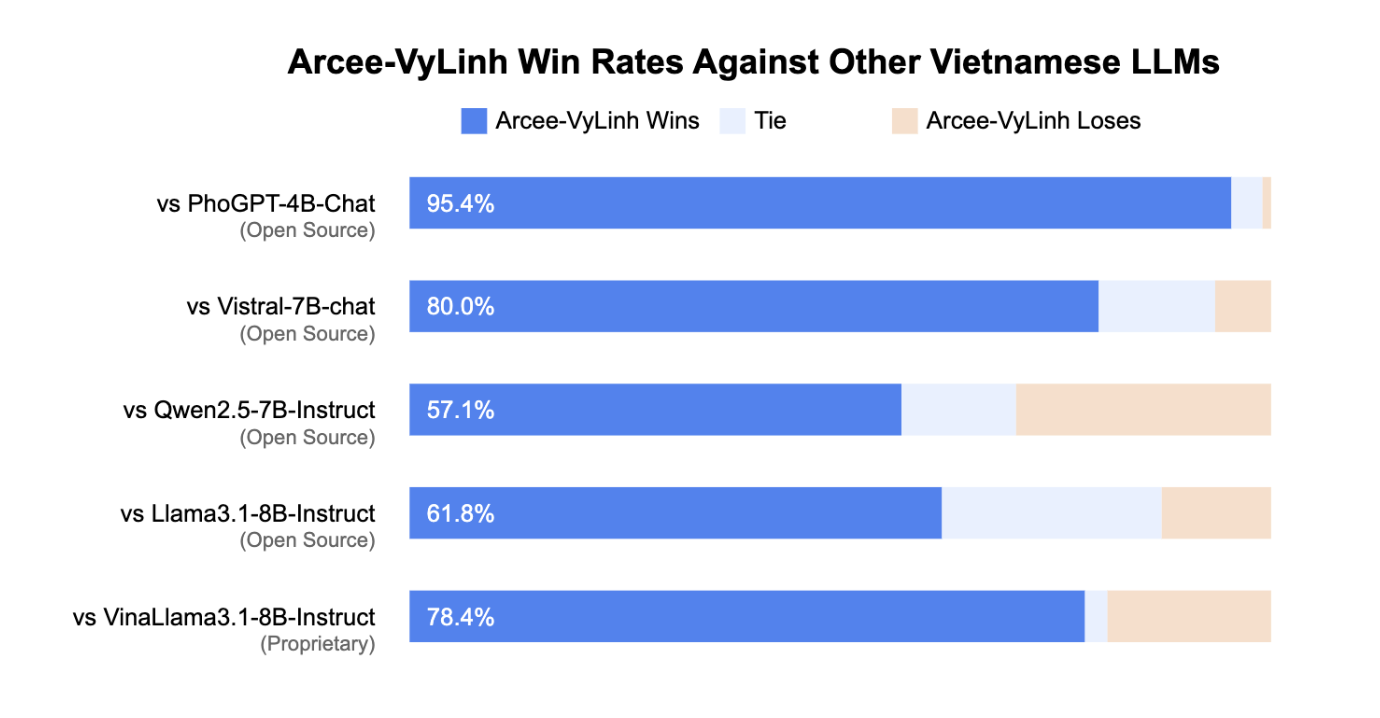

Arcee-VyLinh demonstrated exceptional capabilities against both open-source and proprietary models. It achieved a 95.4% win rate against PhoGPT-4B-Chat, an 80% win rate against Vistral-7B-chat, and a 57.1% win rate against Qwen2.5-7B-Instruct. Additionally, it maintained a 61.8% win rate against Llama3.1-8B-Instruct and a 78.4% win rate against VinaLlama3.1-8B-Instruct. These results are particularly noteworthy as Arcee-VyLinh achieves these win rates with just 3 billion parameters, significantly fewer than its competitors, which range from 4 billion to 8 billion parameters. This demonstrates the effectiveness of Arcee AI’s training methodology, particularly the combination of evolved hard questions and iterative DPO training.
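The comparison above can be tabulated in a short sketch that pairs each reported win rate with the opponent's size relative to Arcee-VyLinh. The win rates are the figures quoted in the article; the parameter counts are nominal values read from the model names (for example, 7 for "7B"), which is an assumption, and the helper names are hypothetical.

```python
VYLINH_PARAMS_B = 3  # Arcee-VyLinh size in billions, as stated in the article

# (opponent, nominal size in billions taken from the model name, reported win rate in %)
REPORTED = [
    ("PhoGPT-4B-Chat", 4, 95.4),
    ("Vistral-7B-chat", 7, 80.0),
    ("Qwen2.5-7B-Instruct", 7, 57.1),
    ("Llama3.1-8B-Instruct", 8, 61.8),
    ("VinaLlama3.1-8B-Instruct", 8, 78.4),
]


def size_ratio(opponent_params_b: float) -> float:
    """How many times larger the opponent is than Arcee-VyLinh."""
    return opponent_params_b / VYLINH_PARAMS_B


def summarize(rows):
    """Return (name, size ratio, win rate) tuples, hardest opponent first."""
    table = [(name, round(size_ratio(size), 2), win) for name, size, win in rows]
    return sorted(table, key=lambda row: row[2])


for name, ratio, win in summarize(REPORTED):
    print(f"{name:<26} {ratio:>4.2f}x larger   win rate {win:.1f}%")
```

Sorting by win rate puts the closest contest first, which makes it easy to see that every reported win rate stays above 50% even against models more than twice Arcee-VyLinh's size.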

Why Arcee-VyLinh Matters

Arcee-VyLinh represents a major milestone for Vietnamese AI and resource-efficient models. Smaller languages have often been overlooked in AI development, limiting access to impactful innovations. Arcee-VyLinh addresses this gap with applications in customer service, content generation, document processing, and conversational agents. Early tests show its ability to provide coherent, relevant responses that rival larger models, making it ideal for organizations needing powerful AI without high costs.

Arcee AI’s commitment to open-source development fosters community involvement, leading to further enhancements and broader adoption. By focusing on underrepresented languages, Arcee AI sets a precedent for AI inclusivity, proving that small models can have a significant impact.

Conclusion

Arcee-VyLinh shows that AI research can combine inclusivity, resource efficiency, and practical application. By introducing a 3 billion parameter Vietnamese model, Arcee AI addresses a critical gap, offering accessible tools for individuals and enterprises. In a field dominated by large models, Arcee-VyLinh suggests that impactful AI does not need a massive footprint: smaller, focused models can deliver competitive results. Arcee AI's open-source approach also leaves room for continued improvement through community contributions.

Check out the Details and Model on Hugging Face. All credit for this research goes to the researchers of this project.

The post Arcee AI Releases Arcee-VyLinh: A Powerful 3B Vietnamese Small Language Model appeared first on MarkTechPost.