A major challenge in diffusion models, especially those used for image generation, is the occurrence of hallucinations. These are instances where the models produce samples entirely outside the support of the training data, leading to unrealistic and non-representative artifacts. This issue is critical because diffusion models are widely employed in tasks such as video generation, image inpainting, and super-resolution. Hallucinations undermine the reliability and realism of generated content, posing a significant barrier to their use in applications that demand high accuracy and fidelity, such as medical imaging.

Current diffusion-based generative models, including Score-Based Generative Models and Denoising Diffusion Probabilistic Models (DDPMs), add noise to data in a forward process and learn to denoise it in a reverse process. Despite their successes, they show failure modes such as training instabilities, memorization, and inaccurate modeling of complex objects. These shortcomings often stem from the model’s inability to handle a discontinuous loss landscape in its decoder, which leads to high variance and, in turn, hallucinations. Additionally, recursive training of generative models on their own outputs frequently results in model collapse, where models fail to produce diverse or realistic outputs over successive generations.
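To make the forward (noising) process concrete, here is a minimal NumPy sketch of the standard DDPM closed-form sampling step. The linear schedule, its endpoints, the 1000 steps, and the helper names `linear_beta_schedule` and `forward_noise` are illustrative assumptions, not settings reported in this article.

```python
import numpy as np

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances (assumed schedule, for illustration only)."""
    return np.linspace(beta_start, beta_end, num_steps)

def forward_noise(x0, t, alpha_bar, rng):
    """Sample x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I) in one shot."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

betas = linear_beta_schedule(1000)
alpha_bar = np.cumprod(1.0 - betas)   # cumulative signal retention per timestep

rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 8))      # stand-in for a batch of clean data
x_t, eps = forward_noise(x0, t=500, alpha_bar=alpha_bar, rng=rng)
```

The reverse process trains a network to predict `eps` (or, equivalently, the clean sample) from `x_t` and `t`; that network is omitted here.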

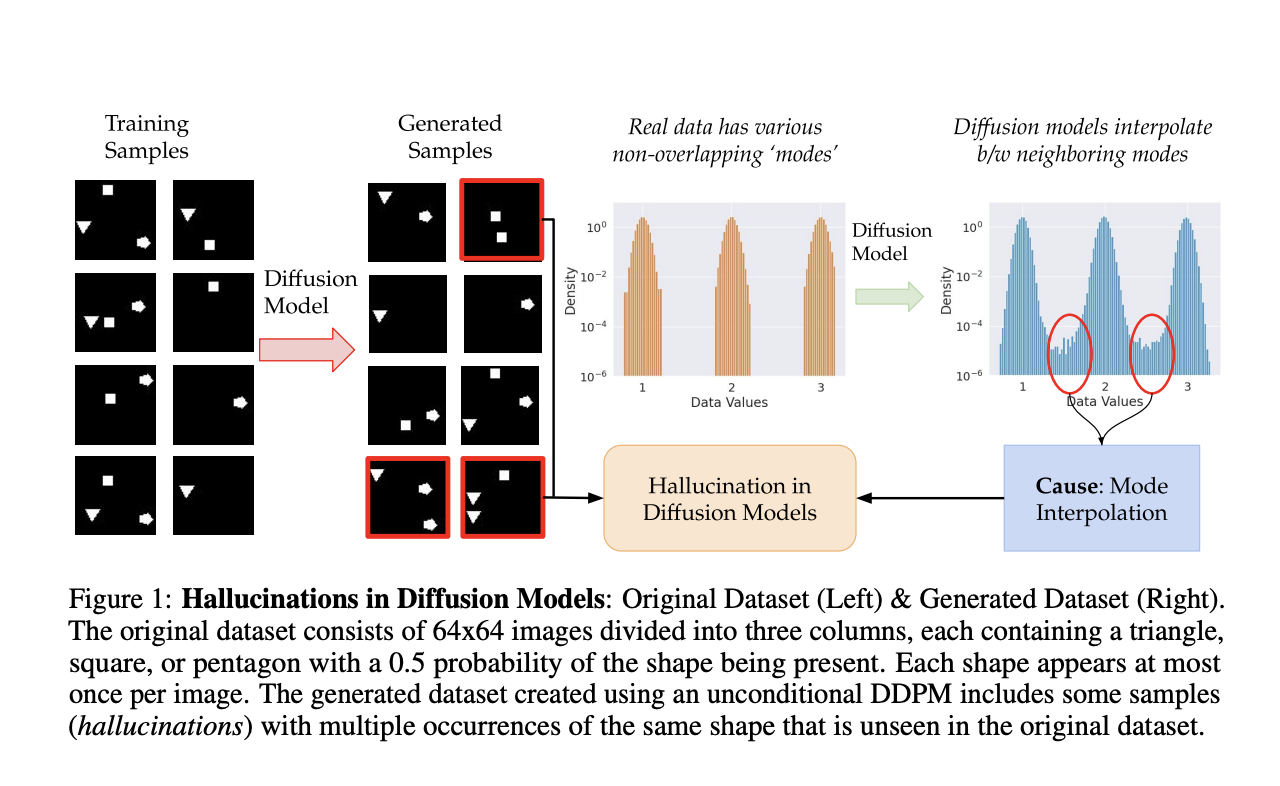

To address these limitations, researchers from Carnegie Mellon University and DatologyAI introduced an approach centered on the concept of mode interpolation. They examine how diffusion models interpolate between nearby modes of the data distribution and, in doing so, generate samples that fall between modes and appear as unrealistic artifacts. The key observation is that high variance in a sample’s trajectory during generation signals a hallucination. Building on this, the researchers propose a metric for detecting and removing hallucinations during the generation process. According to the authors, this approach significantly reduces hallucinations while maintaining the quality and diversity of generated samples.
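The idea of using trajectory variance as a hallucination signal can be sketched as follows. This is a toy illustration, not the authors' implementation: the array layout, the timestep window, the threshold of 0.05, and the function name `trajectory_variance` are all assumptions made for the example.

```python
import numpy as np

def trajectory_variance(x0_preds, start, end):
    """Per-sample variance of predicted clean samples over reverse steps [start, end).

    x0_preds has shape (T, N, D): T reverse steps, N samples, D dimensions.
    The variance is taken over the step axis and averaged over D.
    """
    window = x0_preds[start:end]
    return window.var(axis=0).mean(axis=-1)

T, D = 50, 1
rng = np.random.default_rng(0)

# Three "stable" trajectories whose prediction stays near a single mode at 1.0.
stable = 1.0 + 0.01 * rng.standard_normal((T, 3, D))

# Three "unstable" trajectories whose prediction alternates between modes at 1.0 and 2.0.
parity = (np.arange(T) % 2 == 0)[:, None, None]
unstable = np.where(parity, 1.0, 2.0) * np.ones((T, 3, D))

x0_preds = np.concatenate([stable, unstable], axis=1)
scores = trajectory_variance(x0_preds, start=10, end=40)
flagged = scores > 0.05   # hypothetical threshold; would be tuned on held-out data
```

In this toy setup the stable trajectories score near zero while the alternating ones score 0.25, so only the latter are flagged. In practice `x0_preds` would come from the denoiser's predictions at each reverse step.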

The researchers validate this approach through experiments on both synthetic and real datasets. They use synthetic 1D and 2D Gaussian datasets to demonstrate how mode interpolation leads to hallucinations. On the SIMPLE SHAPES dataset, the diffusion model generates images with combinations of shapes that never appear in the training data. In each case, a denoising diffusion probabilistic model (DDPM) is trained on the dataset with a specific noise schedule and number of timesteps. The detection metric is based on the variance of the model’s predicted values during the reverse diffusion process, which captures deviations from the training data distribution.
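For a synthetic 1D Gaussian dataset, whether a generated sample is a hallucination can be decided directly from the known modes. The sketch below labels a sample as in-support if it lies within `k` standard deviations of some mode; the mode locations, `sigma`, `k`, and the function name `in_support` are hypothetical values chosen for illustration, not the paper's configuration.

```python
import numpy as np

def in_support(samples, means, sigma, k=4.0):
    """True where a sample lies within k * sigma of at least one mode."""
    dist = np.abs(samples[:, None] - means[None, :])   # shape (num_samples, num_modes)
    return (dist <= k * sigma).any(axis=1)

means = np.array([1.0, 2.0, 3.0])   # assumed mode centers
sigma = 0.05                        # assumed shared standard deviation

# Hand-picked "generated" values: some near a mode, some between modes.
samples = np.array([1.02, 1.5, 2.9, 2.45, 3.0])
mask = in_support(samples, means, sigma)
hallucinated = ~mask                # samples interpolated between modes
```

Here 1.5 and 2.45 sit between modes and are labeled as hallucinations, which is the kind of mode interpolation the synthetic experiments are designed to expose.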

The experiments show that the proposed method significantly reduces hallucinations while maintaining high-quality output. On datasets including MNIST and the synthetic Gaussian datasets, the proposed metric can remove over 95% of hallucinations while retaining 96% of in-support samples. In comparisons with existing baselines, the proposed approach achieves higher specificity and sensitivity in detecting hallucinated samples.
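The two reported quantities map onto standard detection rates: the fraction of hallucinations removed is the detector's sensitivity, and the fraction of in-support samples retained is its specificity. A minimal sketch of computing both from per-sample scores and ground-truth labels follows; the scores, labels, threshold, and the function name `detection_rates` are made-up illustrative values, not results from the paper.

```python
import numpy as np

def detection_rates(scores, is_hallucination, threshold):
    """Return (sensitivity, specificity) when flagging samples with score > threshold."""
    flagged = scores > threshold
    tp = np.sum(flagged & is_hallucination)      # hallucinations correctly removed
    fn = np.sum(~flagged & is_hallucination)     # hallucinations missed
    tn = np.sum(~flagged & ~is_hallucination)    # in-support samples retained
    fp = np.sum(flagged & ~is_hallucination)     # in-support samples wrongly removed
    return tp / (tp + fn), tn / (tn + fp)

# Toy variance scores with ground-truth hallucination labels.
scores = np.array([0.01, 0.02, 0.30, 0.25, 0.03, 0.40, 0.06, 0.01])
is_hallucination = np.array([False, False, True, True, False, True, False, False])

sens, spec = detection_rates(scores, is_hallucination, threshold=0.05)
```

Raising the threshold trades sensitivity for specificity, so a single operating point such as the one reported above corresponds to one particular threshold choice.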

In conclusion, the researchers address the challenge of hallucinations in diffusion models by explaining them through mode interpolation and detecting them through trajectory variance. Filtering out high-variance samples at generation time improves the reliability of generative models, which matters most in fields requiring high precision, such as medical imaging.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post CMU Researchers Provide an In-Depth Study to Formulate and Understand Hallucination in Diffusion Models through Mode Interpolation appeared first on MarkTechPost.