Reinforcement learning (RL) is an area of machine learning in which agents learn to make decisions by interacting with their environment: the agent takes actions and receives feedback in the form of rewards or penalties. RL has been instrumental in advanced robotics, autonomous vehicles, and strategic game playing, and in solving complex problems across scientific and industrial domains.

A significant challenge in RL is managing the complexity of environments with large discrete action spaces. Traditional RL methods like Q-learning involve a computationally expensive process of evaluating the value of all possible actions at each decision point. This exhaustive search process becomes increasingly impractical as the number of actions grows, leading to substantial inefficiencies and limitations in real-world applications where quick and effective decision-making is crucial.
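To make the cost concrete, here is a minimal sketch of a standard tabular Q-learning update. The function name, the table layout (a list of lists), and the hyperparameter values are illustrative assumptions, not taken from the paper. The point is the `max` call, which scans every action in the next state on each update.

```python
def q_learning_update(Q, state, action, reward, next_state,
                      alpha=0.1, gamma=0.99):
    """One tabular Q-learning update with an exhaustive max over actions."""
    # This max visits every action available in next_state,
    # so each update costs O(n_actions).
    best_next = max(Q[next_state])
    td_target = reward + gamma * best_next
    Q[state][action] += alpha * (td_target - Q[state][action])
    return Q[state][action]


# Illustrative sizes only: 4 states, 4096 discrete actions.
n_states, n_actions = 4, 4096
Q = [[0.0] * n_actions for _ in range(n_states)]
Q[1][7] = 1.0  # pretend one action in state 1 already looks good

new_value = q_learning_update(Q, state=0, action=3, reward=0.5, next_state=1)
```

With 4096 actions, every single update (and every greedy action choice) touches all 4096 entries of a row, which is the per-step cost the article refers to.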

Current value-based RL methods, including Q-learning and its variants, face considerable challenges in large-scale applications. These methods rely on maximizing a value function over all possible actions to update the agent’s policy. While deep Q-networks (DQN) use neural networks to approximate value functions, they still struggle to scale because of the computation required to evaluate every action in complex environments.

Researchers from KAUST and Purdue University have introduced stochastic value-based RL methods to address these inefficiencies: Stochastic Q-learning, StochDQN, and StochDDQN. Instead of maximizing over every action, these methods use stochastic maximization, which considers only a subset of the possible actions in each iteration. This significantly reduces the computational load and allows the methods to scale to large discrete action spaces.
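The idea of maximizing over a subset can be sketched as follows. This is only an illustration under stated assumptions: the helper names `stoch_argmax` and `stoch_max` are hypothetical, the subset is drawn uniformly at random, and its default size of roughly log2(n) is an assumption chosen for the example; the paper's exact sampling scheme may differ.

```python
import math
import random


def stoch_argmax(q_values, rng, k=None):
    """Return the best action among k randomly sampled actions.

    Assumes q_values is non-empty. The default k (about log2 of the
    number of actions) is an illustrative choice, not the paper's setting.
    """
    n = len(q_values)
    if k is None:
        k = max(1, math.ceil(math.log2(n)))
    k = min(k, n)
    candidates = rng.sample(range(n), k)  # uniform, without replacement
    return max(candidates, key=lambda a: q_values[a])


def stoch_max(q_values, rng, k=None):
    """Value of the best action within the sampled subset."""
    return q_values[stoch_argmax(q_values, rng, k)]


rng = random.Random(0)
q_values = [rng.random() for _ in range(4096)]

approx = stoch_max(q_values, rng)  # inspects about 12 of 4096 actions
exact = max(q_values)              # inspects all 4096 actions
```

The stochastic maximum can never exceed the exact one, and it equals the exact one when the subset covers all actions. The trade is a cheaper, slightly pessimistic estimate in exchange for far fewer value lookups per step.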

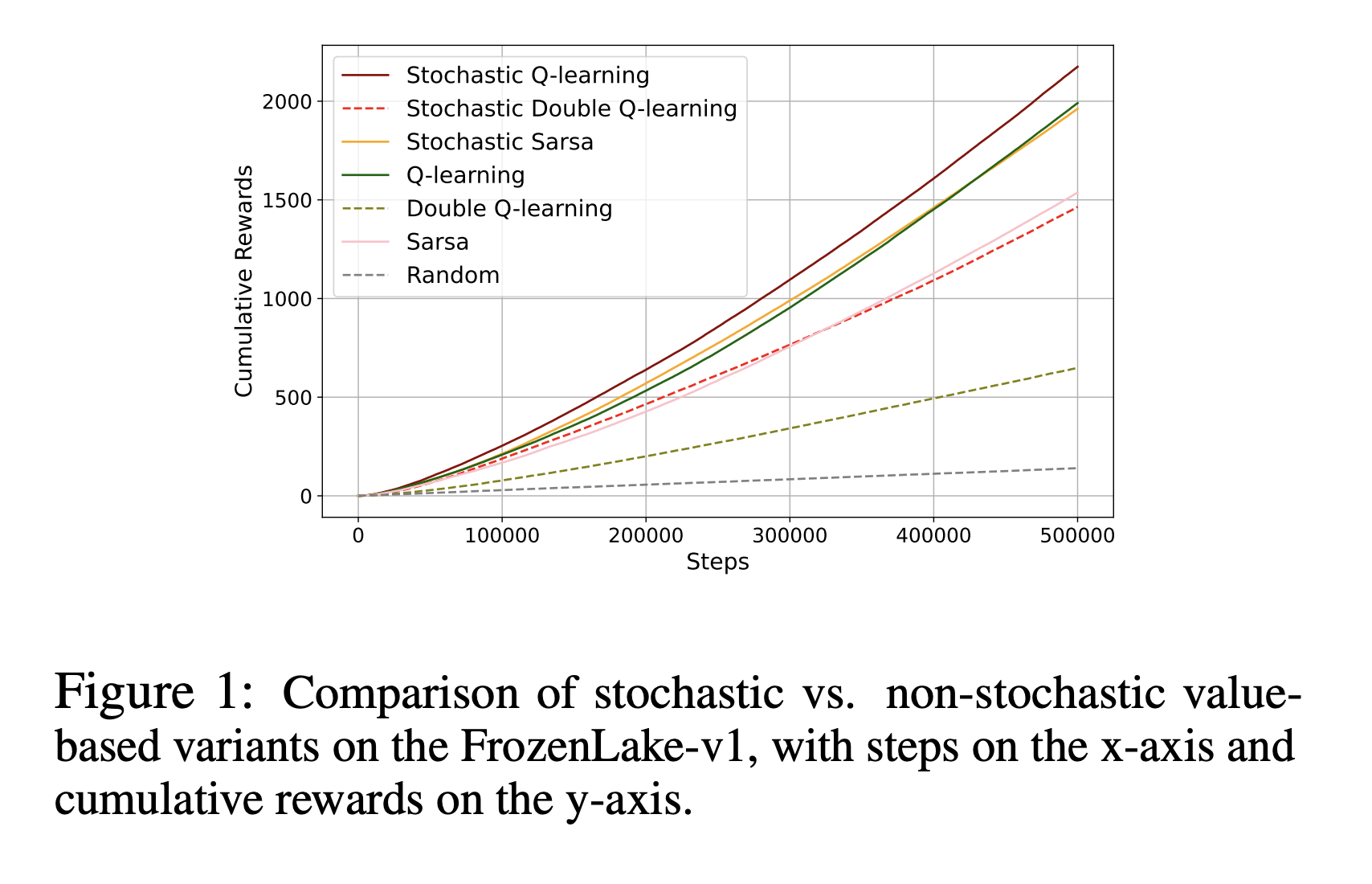

The researchers tested these methods on several environments, including Gymnasium environments like FrozenLake-v1 and MuJoCo control tasks such as InvertedPendulum-v4 and HalfCheetah-v4. The framework replaces the traditional max and argmax operations with stochastic equivalents, reducing computational complexity. In the evaluations, the stochastic methods converged faster and ran more efficiently than their non-stochastic counterparts, handling up to 4096 actions with significantly reduced computation time per step.
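As a rough sketch of what swapping max and argmax for stochastic equivalents looks like inside a training loop, the example below runs tabular Q-learning on a toy chain environment. Everything here is assumed for illustration: the environment (`step`), the subset size, the hyperparameters, and the helper names are hypothetical and are not the paper's benchmarks or implementation.

```python
import math
import random


def stoch_argmax(q_row, rng, k):
    """Best action among k uniformly sampled actions (illustrative)."""
    candidates = rng.sample(range(len(q_row)), k)
    return max(candidates, key=lambda a: q_row[a])


def step(state, action, n_states):
    """Hypothetical toy chain: even actions move right, odd actions stay put."""
    next_state = state + 1 if action % 2 == 0 else state
    done = next_state == n_states - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(n_states=5, n_actions=64, episodes=200, max_steps=50,
          alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    k = max(1, math.ceil(math.log2(n_actions)))  # assumed subset size
    Q = [[0.0] * n_actions for _ in range(n_states)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Action selection: stochastic argmax replaces the full argmax.
            if rng.random() < epsilon:
                action = rng.randrange(n_actions)
            else:
                action = stoch_argmax(Q[state], rng, k)
            next_state, reward, done = step(state, action, n_states)
            # Bootstrapped target: stochastic max replaces the full max.
            if done:
                target = reward
            else:
                best = stoch_argmax(Q[next_state], rng, k)
                target = reward + gamma * Q[next_state][best]
            Q[state][action] += alpha * (target - Q[state][action])
            state = next_state
            if done:
                break
    return Q


Q = train()
```

Neither the action selection nor the update ever scans a full row of the table, so the per-step work depends on the subset size `k` rather than on `n_actions`.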

The results show that stochastic methods significantly improve performance and efficiency. In the FrozenLake-v1 environment, Stochastic Q-learning achieved optimal cumulative rewards in 50% fewer steps than traditional Q-learning. In the InvertedPendulum-v4 task, StochDQN reached an average return of 90 in 10,000 steps, while DQN took 30,000 steps. For HalfCheetah-v4, StochDDQN completed 100,000 steps in 2 hours, whereas DDQN required 17 hours for the same task. Furthermore, the time per step for stochastic methods was reduced to 0.003 seconds from 0.18 seconds in tasks with 1000 actions, representing a 60-fold increase in speed. These quantitative results highlight the efficiency and effectiveness of the stochastic methods.
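As a quick check, the ratios implied by the figures quoted above work out as follows. These are simple divisions of the reported numbers, not additional results from the paper.

$$
\frac{0.18\ \text{s}}{0.003\ \text{s}} = 60, \qquad
\frac{17\ \text{h}}{2\ \text{h}} = 8.5, \qquad
\frac{30{,}000\ \text{steps}}{10{,}000\ \text{steps}} = 3
$$

So the per-step time improves 60-fold, the HalfCheetah-v4 wall-clock time improves 8.5-fold, and StochDQN needs one third of the steps DQN does on InvertedPendulum-v4.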

To conclude, the research introduces stochastic methods that make RL more efficient in large discrete action spaces. By incorporating stochastic maximization, the methods significantly reduce computational complexity while maintaining high performance. Tested across various environments, they achieved faster convergence and higher efficiency than traditional approaches. The work offers scalable solutions for real-world applications, making RL more practical in complex environments.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Paper from KAUST and Purdue University Presents Efficient Stochastic Methods for Large Discrete Action Spaces appeared first on MarkTechPost.