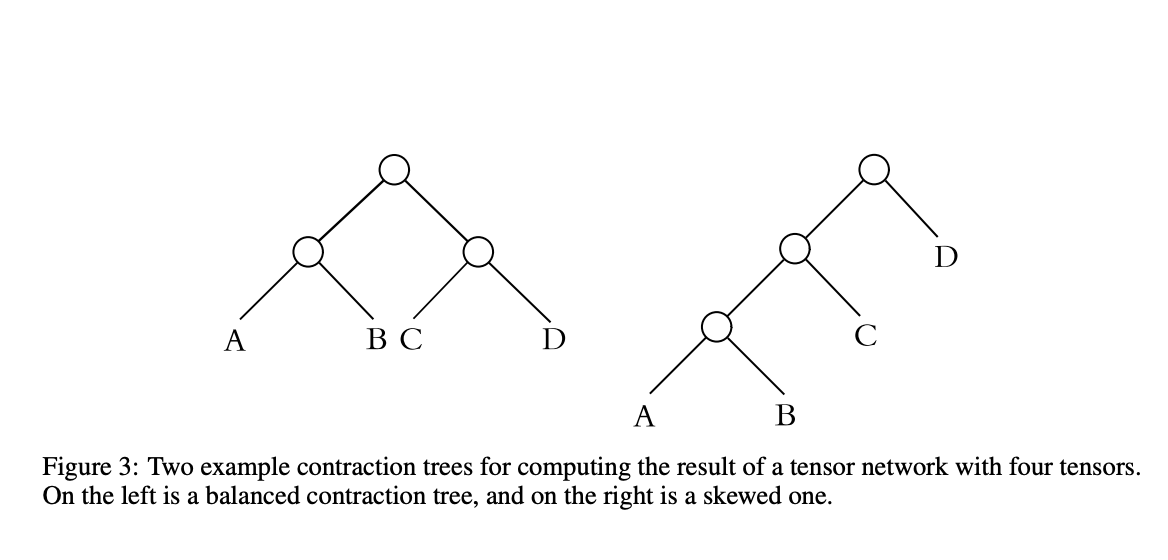

Tensor contractions are used to solve problems in many research fields, including model counting, quantum circuits, graph problems, and machine learning. To minimize the computational cost, however, finding a good contraction order is important. For example, the product of a sequence of matrices A, B, and C gives the same result regardless of the order in which the multiplications are carried out, but the computational cost differs depending on the matrix dimensions. Moreover, the cost of contracting a tensor network grows with the number of tensors. The contraction path, which determines which two tensors are contracted at each step, is therefore important for reducing computation time.
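To make the matrix example concrete, here is a minimal sketch that counts scalar multiplications for the two possible orders of a three-matrix product. The shapes are hypothetical values chosen for illustration; they do not come from the paper.

```python
def matmul_flops(m, k, n):
    """Scalar multiplications needed for an (m x k) @ (k x n) product."""
    return m * k * n


# Hypothetical shapes: A is 10x1000, B is 1000x10, C is 10x1000.
a, b, c = (10, 1000), (1000, 10), (10, 1000)

# Order 1: (A @ B) @ C builds a small 10x10 intermediate first.
left_first = matmul_flops(a[0], a[1], b[1]) + matmul_flops(a[0], b[1], c[1])

# Order 2: A @ (B @ C) builds a large 1000x1000 intermediate first.
right_first = matmul_flops(b[0], b[1], c[1]) + matmul_flops(a[0], a[1], c[1])

print(left_first, right_first)
```

Both orders produce the same 10x1000 result, yet under these assumed shapes the second order needs 100 times as many multiplications as the first.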

Earlier works have focused on finding efficient contraction paths (CPs) for tensor hypernetworks. One existing approach uses simulated annealing and a genetic algorithm, which outperform the standard greedy approach for smaller networks. A second approach is graph decomposition, using the Line-Graph (LG) and Factor-Tree (FT) methods: LG uses structured graph analysis to find a contraction order, whereas FT is used in preprocessing to handle high-rank tensors. A third approach combines reinforcement learning (RL) and Graph Neural Networks (GNNs) to find an efficient path, and has been applied to real and synthetic quantum circuits.

A team of researchers has introduced a novel method to enhance tensor contraction paths using a modified standard greedy algorithm with an improved cost function. The cost function that the standard greedy algorithm (SGA) uses to choose the pairwise contraction at each step of the path is straightforward: it depends only on the sizes of the two input tensors and of the output tensor. To overcome this limitation, the proposed method computes the costs of pairwise contractions using more information and provides different cost functions to cover a broad range of problems. The method outperforms the state-of-the-art greedy implementations in Optimized Einsum (opt_einsum), and in some cases it outperforms methods such as hypergraph partitioning combined with greedy.
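As a rough illustration of a cost that depends only on the two input sizes and the output size, the sketch below scores a pair as the output size minus the two input sizes. This particular formula is an assumption made for illustration; the article does not spell out the exact expression. The names `tensor_size`, `pair_cost`, and `keep` are hypothetical.

```python
from math import prod


def tensor_size(indices, dim):
    """Number of elements of a tensor with the given index set."""
    return prod(dim[i] for i in indices)


def pair_cost(a, b, keep, dim):
    """Assumed size-based cost: size(output) - size(a) - size(b).

    `keep` holds the indices that must survive the contraction because the
    final result or another tensor still needs them.
    """
    out = (a | b) & keep
    return tensor_size(out, dim) - tensor_size(a, dim) - tensor_size(b, dim)


# Hypothetical example: contract T[i,j] with U[j,k], summing over j.
dim = {"i": 2, "j": 3, "k": 4}
cost = pair_cost(frozenset("ij"), frozenset("jk"), keep=frozenset("ik"), dim=dim)
print(cost)
```

A negative value means the contraction leaves less data in memory than it consumed, which is why a greedy search would prefer the lowest value.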

Researchers used the SGA in opt_einsum to find CPs efficiently for large numbers of tensors. The CP is computed in three phases:

- Computation of Hadamard products, that is, elementwise multiplications of tensors with the same set of indices.

- Contraction of the remaining tensors until all contraction indices are exhausted, selecting the lowest-cost pair at each step.

- Computation of outer products, selecting at each step the pair that minimizes the sum of the input sizes.
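The three phases above can be sketched in a few dozen lines. This is a simplified illustration rather than the opt_einsum implementation: tensors are represented only by their index sets, and the phase-two cost (output size minus input sizes) is an assumption. The function and variable names are hypothetical.

```python
from itertools import combinations
from math import prod


def size(indices, dim):
    """Number of elements of a tensor with the given index set."""
    return prod(dim[i] for i in indices)


def greedy_path(inputs, output, dim):
    """Return the contraction path as a list of (index_set_a, index_set_b)."""
    tensors = [frozenset(t) for t in inputs]
    output = frozenset(output)
    path = []

    def others(i, j):
        return [t for k, t in enumerate(tensors) if k not in (i, j)]

    def result(i, j):
        # An index survives if the final output or another tensor needs it.
        needed = output.union(*others(i, j))
        return (tensors[i] | tensors[j]) & needed

    def contract(i, j):
        path.append((tensors[i], tensors[j]))
        tensors[:] = others(i, j) + [result(i, j)]

    def pairs():
        return combinations(range(len(tensors)), 2)

    # Phase 1: Hadamard products of tensors with identical index sets.
    while True:
        same = [(i, j) for i, j in pairs() if tensors[i] == tensors[j]]
        if not same:
            break
        contract(*same[0])

    # Phase 2: contract index-sharing pairs, lowest (assumed) cost first.
    while True:
        sharing = [(i, j) for i, j in pairs() if tensors[i] & tensors[j]]
        if not sharing:
            break
        contract(*min(sharing, key=lambda p: size(result(*p), dim)
                      - size(tensors[p[0]], dim) - size(tensors[p[1]], dim)))

    # Phase 3: outer products, smallest sum of input sizes first.
    while len(tensors) > 1:
        contract(*min(pairs(), key=lambda p: size(tensors[p[0]], dim)
                      + size(tensors[p[1]], dim)))
    return path


# Hypothetical network: two tensors over "ij", one over "jk", one over "l".
dim = {"i": 2, "j": 3, "k": 4, "l": 5}
path = greedy_path(["ij", "ij", "jk", "l"], "ikl", dim)
for a, b in path:
    print(sorted(a), sorted(b))
```

In this example each phase fires exactly once: the two "ij" tensors are multiplied elementwise, the result is contracted with "jk" over the shared index j, and the remaining "l" tensor is attached by an outer product.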

Further, the modified greedy algorithm takes cost functions as parameters, unlike the SGA, which uses only one cost function. Different cost functions are then tried at runtime, and the most appropriate one is selected for generating further CPs.
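The idea of passing the cost function in as a parameter and keeping whichever one yields the cheapest path can be sketched as follows. The three cost functions here are hypothetical stand-ins, not the ones proposed in the paper, and the greedy loop is deliberately simplified: it scores every pair at each step and skips the separate Hadamard and outer-product phases.

```python
from itertools import combinations
from math import prod


def size(indices, dim):
    return prod(dim[i] for i in indices)


def greedy(inputs, output, dim, cost_fn):
    """Simplified greedy search; returns (path, total_flops)."""
    tensors = [frozenset(t) for t in inputs]
    output = frozenset(output)
    path, flops = [], 0
    while len(tensors) > 1:
        best = None
        for i, j in combinations(range(len(tensors)), 2):
            a, b = tensors[i], tensors[j]
            rest = [t for k, t in enumerate(tensors) if k not in (i, j)]
            out = (a | b) & output.union(*rest)
            score = cost_fn(size(a, dim), size(b, dim),
                            size(out, dim), size(a | b, dim))
            if best is None or score < best[0]:
                best = (score, a, b, out, rest)
        _, a, b, out, rest = best
        path.append((a, b))
        flops += size(a | b, dim)  # multiplications for this pairwise step
        tensors = rest + [out]
    return path, flops


# Hypothetical cost functions; arguments are (size_a, size_b, size_out, size_union).
COST_FNS = {
    "memory_removed": lambda sa, sb, so, su: so - sa - sb,
    "output_size": lambda sa, sb, so, su: so,
    "pair_flops": lambda sa, sb, so, su: su,
}


def best_of(inputs, output, dim, cost_fns=COST_FNS):
    """Run the greedy search once per cost function and keep the cheapest path."""
    results = {name: greedy(inputs, output, dim, fn) for name, fn in cost_fns.items()}
    winner = min(results, key=lambda name: results[name][1])
    return winner, results


# Hypothetical matrix chain X[i,j] Y[j,k] Z[k,l] -> R[i,l].
dim = {"i": 100, "j": 200, "k": 100, "l": 2}
winner, results = best_of(["ij", "jk", "kl"], "il", dim)
for name, (_, flops) in results.items():
    print(name, flops)
```

On this small chain the size-based cost contracts the two large matrices first and ends up with a far more expensive path than the other two functions, which illustrates why trying several cost functions and keeping the best result can pay off.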

To evaluate the multiple-cost-functions approach, CPs are computed for 10 problems, various algorithms are compared, and flops are measured for each algorithm. Researchers performed two experiments. In the first experiment, 128 paths are computed with each algorithm for each problem example. The goal is to assess solution quality without considering computation time. In the second experiment, the limit is not on the number of paths but on computation time, which is capped at 1 second. The goal is to show a balance between time and quality, so that an efficient path can be found quickly in practical scenarios.

In conclusion, researchers proposed a novel approach to enhance tensor contraction paths using a modified standard greedy algorithm. A multiple-cost-functions approach is used, in which each cost function is evaluated for each problem example and the best one is selected for computing the CP. Compared to the standard greedy and random greedy algorithms in opt_einsum, as well as the greedy algorithm and hypergraph partitioning method, the proposed method can find efficient CPs in less time and can solve complex problems where other methods fail.

The post Enhancing Tensor Contraction Paths Using a Modified Standard Greedy Algorithm with Improved Cost Function appeared first on MarkTechPost.