Multimodal language models represent an emerging field in artificial intelligence that aims to enhance machine understanding of text and images. These models integrate visual and textual information to interpret and reason through complex data. Their capabilities span beyond simple text comprehension, pushing artificial intelligence toward more sophisticated realms where machine learning interacts seamlessly with the real world. They promise significant advancements in how we utilize AI in everyday applications.

The need for accurate evaluation of multimodal models grows as they advance in complexity and capability. Existing benchmarks often become quickly obsolete, needing more specificity to differentiate among models and understand their unique strengths. This underscores the necessity of developing a challenging evaluation benchmark that can precisely gauge the ability of these models to understand and solve complex, real-world tasks.

Existing research includes models like OpenAI’s GPT-4V, which integrates text and image understanding, and Google’s Gemini 1.5, which emphasizes multimodal capabilities. The Claude-3 series by Anthropic demonstrates scalability with models like Opus and Sonnet, while Reka’s suite, including Core and Flash, introduces sophisticated evaluation methods. LLaVA’s evolution illustrates ongoing advancements in reasoning and knowledge integration, and arena-style frameworks like LMSys and WildVision provide dynamic platforms for real-time model assessments.

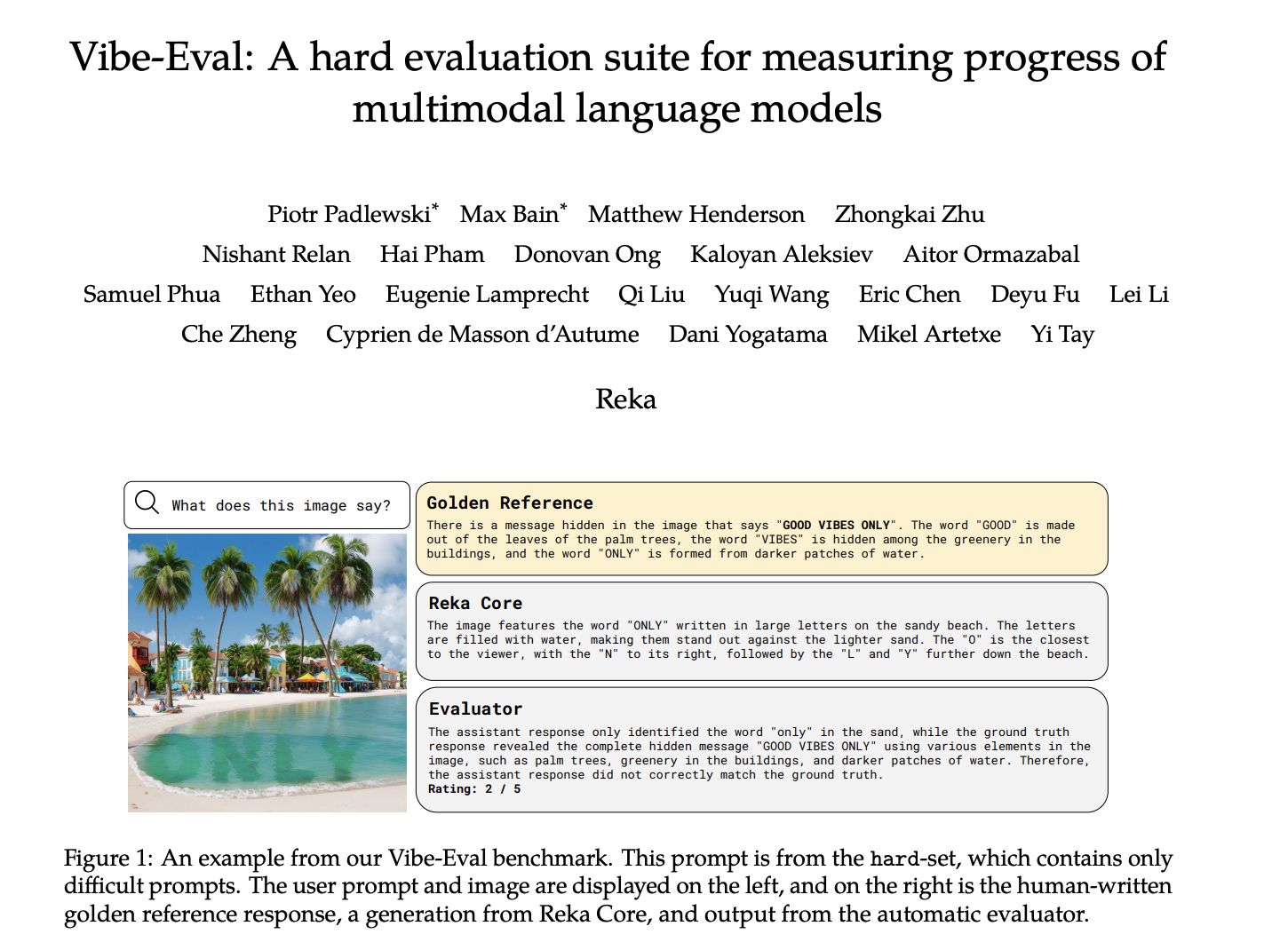

Researchers from Reka Technologies have introduced Vibe-Eval, an advanced benchmark for evaluating multimodal language models. It stands out by providing a structured framework that rigorously tests these models’ visual understanding capabilities. The hard set’s deliberate difficulty separates this benchmark from others by focusing on nuanced reasoning and context comprehension. Vibe-Eval’s comprehensive prompts, combined with automated and human evaluation, ensure accurate assessment, revealing each model’s distinctive strengths and limitations in a controlled and practical environment.

The methodology for Vibe-Eval involves collecting 269 visual prompts into normal and hard sets, each accompanied by expert-crafted, gold-standard responses. Reka Core, the text-based evaluator, assesses model performance on a 1-5 scale based on accuracy compared to the standard answers. The models tested include Gemini Pro 1.5 from Google, OpenAI’s GPT-4V, and others. The prompts reflect diverse scenarios, challenging the models to interpret text and images accurately. In addition to automated scoring, periodic human evaluations offer a comprehensive evaluation, validating model responses and highlighting areas where current multimodal models excel or fall short.

The evaluation results show that Gemini Pro 1.5 and GPT-4V performed best, with overall scores of 60.4% and 57.9%, respectively. Reka Core scored 45.4% overall, while models like Claude Opus and Claude Haiku scored around 52%. On the hard set, Gemini Pro 1.5 and GPT-4V maintained their lead, while Reka Core’s performance dropped to 38.2%. Open-source models such as LLaVA and Idefics-2 scored approximately 30% overall, highlighting the significant variation in model capabilities and the need for rigorous benchmarking like Vibe-Eval.

To conclude, the research presents Vibe-Eval, a benchmark suite by Reka Technologies, designed to rigorously evaluate the performance of multimodal language models. Through a curated set of 269 prompts, Vibe-Eval provides a nuanced understanding of model capabilities, revealing strengths and limitations in visual-text comprehension. The results highlight significant performance differences among models like Gemini Pro 1.5, GPT-4V, and Reka Core. The findings emphasize the importance of comprehensive benchmarks for guiding future developments in multimodal AI, ensuring that models continue to improve in complexity and capability.

Check out the Paper and Blog. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 40k+ ML SubReddit

The post This AI Paper by Reka AI Introduces Vibe-Eval: A Comprehensive Suite for Evaluating AI Multimodal Models appeared first on MarkTechPost.