
AutoCode: A New AI Framework for Competitive Programming Problem Generation and Verification

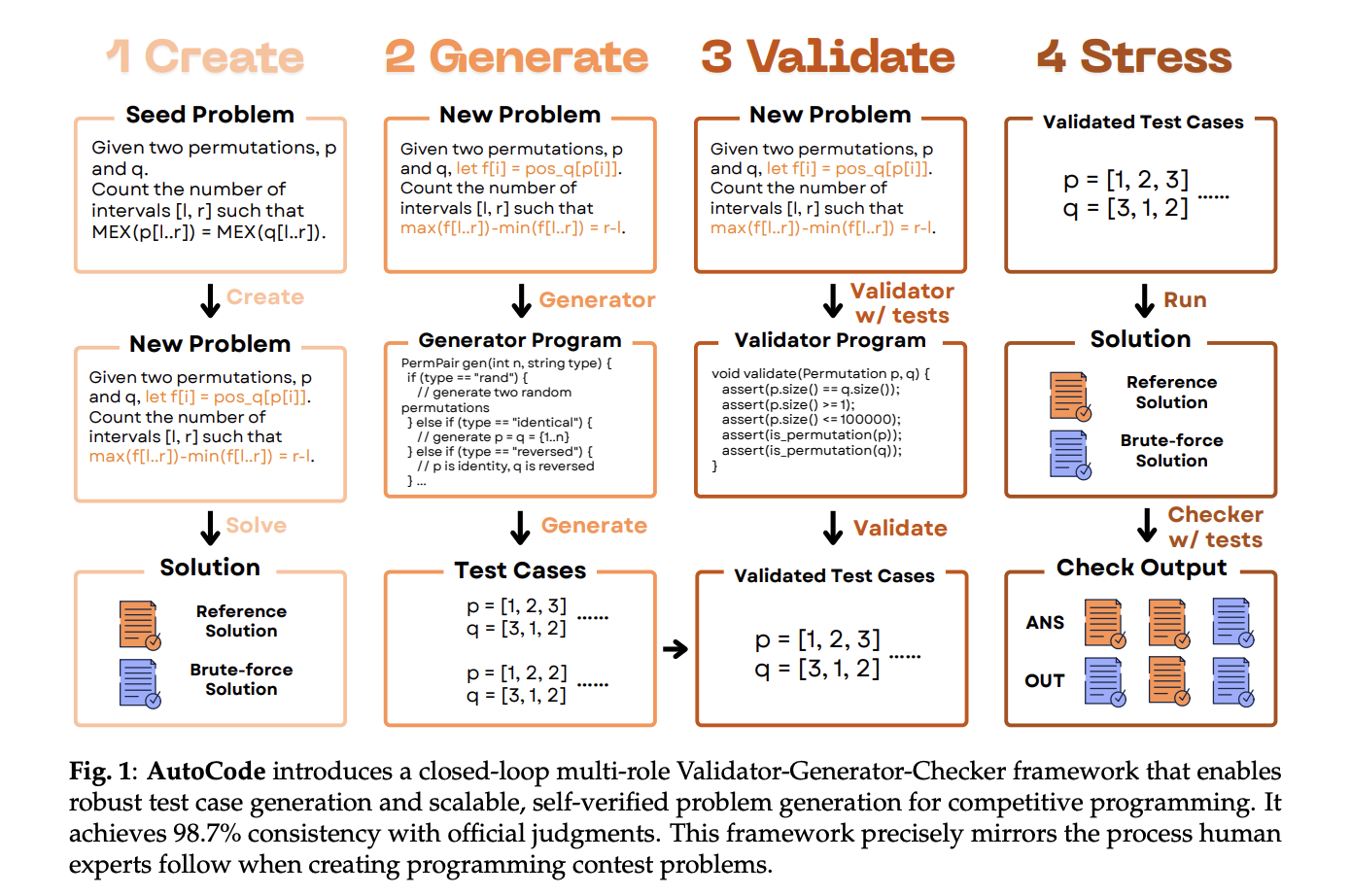

AutoCode is a novel AI framework developed by researchers from UCSD, NYU, University of Washington, Princeton University, Canyon Crest Academy, OpenAI, UC Berkeley, MIT, University of Waterloo, and Sentient Labs. This framework enables large language models (LLMs) to create and verify competitive programming problems, closely mimicking the workflow of human problem setters.

Understanding the Target Audience

The primary audience for AutoCode includes:

- Competitive Programmers: Individuals participating in coding competitions who seek high-quality problems to solve.

- Educators and Trainers: Those involved in teaching programming and algorithm design, looking for reliable problem sets for their students.

- Researchers and Developers: Professionals in AI and machine learning who are interested in the application of LLMs for practical problem generation and evaluation.

These groups share common pain points: the need for quality control in problem sets, the difficulty of generating problems that are both diverse and demanding, and the desire for reliable evaluation metrics. Their shared goal is to improve the quality of competitive programming problems and to ensure that submitted solutions are judged accurately.

Key Features of AutoCode

AutoCode structures problem setting as a Validator–Generator–Checker loop, which makes the judging of submitted solutions more reliable:

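- Validator: This component minimizes the false negative rate (FNR) by enforcing input legality, so that correct solutions are not rejected because of malformed tests. AutoCode synthesizes labeled evaluation inputs, both legal and illegal, and selects the candidate validator program that classifies them most accurately.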

- Generator: The generator reduces the false positive rate (FPR) with three strategies: small-data exhaustion for boundary coverage, randomized extreme cases, and inputs designed to trigger time limit exceeded (TLE) verdicts in solutions with the wrong time complexity.

- Checker: The checker compares contestant outputs with the reference solution under potentially complex acceptance rules. AutoCode generates labeled checker scenarios and selects the candidate checker program with the highest accuracy on them.

- Interactor: For interactive problems, AutoCode uses a mutant-based approach: it makes small logical edits ("mutants") to the reference solution and selects the interactor that accepts the reference solution while rejecting these flawed variants.
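The validator selection step described in the Validator bullet amounts to scoring each candidate program against labeled inputs and keeping the most accurate one. The sketch below is a minimal illustration under stated assumptions, not AutoCode's implementation: the toy constraint (1 <= n <= 5, values in [1, 10]), the candidate functions, and the helper names `safe_call` and `select_best_validator` are all hypothetical, and in AutoCode the candidates and labeled inputs would be produced by the LLM rather than written by hand.

```python
from typing import Callable, List, Tuple

# Hypothetical type: a validator maps raw input text to "legal or not".
Validator = Callable[[str], bool]


def safe_call(validator: Validator, text: str) -> bool:
    """Run a candidate; a crash on malformed input counts as 'illegal'."""
    try:
        return bool(validator(text))
    except Exception:
        return False


def select_best_validator(
    candidates: List[Validator], labeled: List[Tuple[str, bool]]
) -> Validator:
    """Return the candidate that agrees with the most (input, is_legal) labels."""
    def accuracy(v: Validator) -> int:
        return sum(1 for text, legal in labeled if safe_call(v, text) == legal)
    return max(candidates, key=accuracy)  # ties keep the earliest candidate


# Toy constraint (hypothetical): line 1 is n with 1 <= n <= 5,
# line 2 holds exactly n integers, each in [1, 10].
def strict(text: str) -> bool:
    lines = text.split("\n")
    if len(lines) != 2:
        return False
    n = int(lines[0])
    values = [int(tok) for tok in lines[1].split()]
    return 1 <= n <= 5 and len(values) == n and all(1 <= x <= 10 for x in values)


def no_upper_bound(text: str) -> bool:
    # Flawed candidate: forgets the upper bound on values.
    lines = text.split("\n")
    n = int(lines[0])
    values = [int(tok) for tok in lines[1].split()]
    return 1 <= n <= 5 and len(values) == n and all(x >= 1 for x in values)


def ignores_count(text: str) -> bool:
    # Flawed candidate: never checks n against the number of values.
    values = [int(tok) for tok in text.split("\n")[1].split()]
    return all(1 <= x <= 10 for x in values)


LABELED = [
    ("3\n1 2 3", True),
    ("5\n10 10 10 10 10", True),
    ("3\n1 2 11", False),       # value one past the bound
    ("6\n1 1 1 1 1 1", False),  # n one past the bound
    ("3\n1 2", False),          # count mismatch
    ("0\n", False),             # n below the bound
]

best = select_best_validator([no_upper_bound, ignores_count, strict], LABELED)
print(best.__name__)  # strict
```

The near-miss illegal inputs (one past a bound, a count mismatch) are what separate the flawed candidates from the correct one; a labeled set containing only legal inputs could not tell them apart.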
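The first two generator strategies listed in the Generator bullet can be sketched for a hypothetical problem whose input is an array of integers. This is an illustrative assumption, not AutoCode's generator: the input format, bounds, and function names are invented for the example, and TLE-inducing structures are omitted because they depend on the specific problem and the wrong-complexity solutions being targeted.

```python
import random
from itertools import product
from typing import Iterator, List


def exhaust_small_arrays(max_n: int, lo: int, hi: int) -> Iterator[List[int]]:
    """Small-data exhaustion: every array of length 1..max_n with entries in [lo, hi]."""
    for n in range(1, max_n + 1):
        for values in product(range(lo, hi + 1), repeat=n):
            yield list(values)


def random_extreme(n: int, lo: int, hi: int, rng: random.Random) -> List[int]:
    """Randomized extreme case: length n, entries biased toward the bounds."""
    return [rng.choice((lo, hi, rng.randint(lo, hi))) for _ in range(n)]


def format_case(values: List[int]) -> str:
    """Hypothetical input format: n on the first line, then the n values."""
    return f"{len(values)}\n{' '.join(map(str, values))}\n"


# Exhaustive small cases: 2 + 4 + 8 = 14 arrays for max_n=3 over {1, 2}.
small = [format_case(a) for a in exhaust_small_arrays(max_n=3, lo=1, hi=2)]
print(len(small))  # 14

# One large case at hypothetical maximum constraints, seeded for reproducibility.
big = format_case(random_extreme(n=10**5, lo=1, hi=10**9, rng=random.Random(0)))
```

Exhaustion gives complete coverage of tiny inputs, where many boundary bugs live, while the seeded random generator keeps large stress cases reproducible across runs.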
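The Checker bullet's "complex rules" typically arise when more than one output is correct, so a plain string comparison with the reference output would reject valid answers. The sketch below uses a hypothetical problem (print 1-based indices i < j with a[i] + a[j] = target, or -1 if no pair exists); the problem and the function name `check_pair_sum` are assumptions for illustration only. Selecting among several such candidate checkers would score them against labeled scenarios in the same way the Validator bullet describes for validators.

```python
from typing import List


def check_pair_sum(a: List[int], target: int,
                   reference_out: str, contestant_out: str) -> bool:
    """Accept any valid pair, not only the pair the reference printed."""
    ref = reference_out.split()
    got = contestant_out.split()
    if ref == ["-1"]:
        # The reference found no pair, so the contestant must report none.
        return got == ["-1"]
    if len(got) != 2:
        return False
    try:
        i, j = int(got[0]), int(got[1])
    except ValueError:
        return False
    # Indices are 1-based and must satisfy 1 <= i < j <= len(a).
    if not (1 <= i < j <= len(a)):
        return False
    return a[i - 1] + a[j - 1] == target


a = [1, 3, 2, 4]
print(check_pair_sum(a, 5, "1 4", "2 3"))  # True: a different but valid pair
print(check_pair_sum(a, 5, "1 4", "1 2"))  # False: 1 + 3 != 5
```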
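The selection criterion in the Interactor bullet (accept the reference solution, reject its mutants) can be illustrated on a hypothetical guessing game: the interactor hides a number in [1, n] and answers each query with "lower", "higher", or "equal". Everything here is an assumption for illustration: the game, the two mutants, the three candidate interactors (which differ only in their query limit), and all function names. In AutoCode both the mutants and the candidate interactors come from the LLM; this sketch shows only how a discriminating interactor could be chosen.

```python
from typing import Callable, List, Optional

Ask = Callable[[int], str]            # query -> "lower" | "higher" | "equal"
Solution = Callable[[int, Ask], int]  # (n, ask) -> final answer
Interactor = Callable[[Solution, int, int], bool]


class QueryLimitExceeded(Exception):
    pass


def make_interactor(limit: Optional[int]) -> Interactor:
    """Build a candidate interactor; limit=None means no query limit."""
    def interactor(solution: Solution, n: int, hidden: int) -> bool:
        count = 0

        def ask(x: int) -> str:
            nonlocal count
            count += 1
            if limit is not None and count > limit:
                raise QueryLimitExceeded
            if hidden < x:
                return "lower"
            if hidden > x:
                return "higher"
            return "equal"

        try:
            return solution(n, ask) == hidden
        except QueryLimitExceeded:
            return False
    return interactor


def reference(n: int, ask: Ask) -> int:
    """Binary search: at most n.bit_length() queries."""
    lo, hi = 1, n
    while lo <= hi:
        mid = (lo + hi) // 2
        reply = ask(mid)
        if reply == "equal":
            return mid
        if reply == "higher":
            lo = mid + 1
        else:
            hi = mid - 1
    return -1


def mutant_linear(n: int, ask: Ask) -> int:
    # Mutant: correct answers, but far too many queries.
    for x in range(1, n + 1):
        if ask(x) == "equal":
            return x
    return -1


def mutant_off_by_one(n: int, ask: Ask) -> int:
    # Mutant: searches [1, n - 1], so hidden == n is never found.
    return reference(n - 1, ask)


def select_interactor(candidates: List[Interactor], reference_sol: Solution,
                      mutants: List[Solution], n: int) -> Interactor:
    """Keep interactors that accept the reference on every hidden value,
    then pick the one rejecting the most mutants (ValueError if none remain)."""
    def accepts_all(inter: Interactor, sol: Solution) -> bool:
        return all(inter(sol, n, h) for h in range(1, n + 1))

    valid = [c for c in candidates if accepts_all(c, reference_sol)]
    return max(valid, key=lambda c: sum(not accepts_all(c, m) for m in mutants))


N = 16
lenient = make_interactor(None)                   # misses the query-count mutant
too_strict = make_interactor(N.bit_length() - 1)  # rejects the reference itself
strict = make_interactor(N.bit_length())
chosen = select_interactor([lenient, too_strict, strict], reference,
                           [mutant_linear, mutant_off_by_one], N)
print(chosen is strict)  # True
```

The two filters play different roles: requiring acceptance of the reference rules out interactors that are too harsh, while counting rejected mutants favors interactors that actually enforce the problem's rules.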

Performance Metrics

AutoCode reports strong results on two benchmarks:

- On a benchmark of 7,538 problems, AutoCode's verdicts agreed with the official judgments 91.1% of the time (consistency), with a false positive rate (FPR) of 3.7% and a false negative rate (FNR) of 14.1%.

- On a separate set of 720 recent Codeforces problems, AutoCode reported 98.7% consistency, with an FPR of 1.3% and an FNR of 1.2%.
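The three metrics above can be computed from per-submission verdict pairs, assuming the usual definitions: consistency is the fraction of submissions where the generated tests' verdict matches the official one, FPR is the share of officially rejected submissions that the generated tests accept, and FNR is the share of officially accepted submissions that they reject. The function and field names are hypothetical, and the paper may aggregate differently (for example, per problem rather than per submission); the numbers in the usage line are made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Rates:
    consistency: float
    fpr: float
    fnr: float


def judge_rates(pairs: List[Tuple[bool, bool]]) -> Rates:
    """pairs holds (official_accepts, generated_tests_accept) per submission."""
    fp = sum(1 for official, ours in pairs if not official and ours)
    fn = sum(1 for official, ours in pairs if official and not ours)
    negatives = sum(1 for official, _ in pairs if not official)
    positives = len(pairs) - negatives
    agree = sum(1 for official, ours in pairs if official == ours)
    return Rates(
        consistency=agree / len(pairs),
        fpr=fp / negatives if negatives else 0.0,  # wrong solutions let through
        fnr=fn / positives if positives else 0.0,  # correct solutions rejected
    )


# 10 officially correct submissions (2 wrongly rejected) and
# 10 officially wrong submissions (1 wrongly accepted).
sample = [(True, True)] * 8 + [(True, False)] * 2 + [(False, False)] * 9 + [(False, True)]
print(judge_rates(sample))  # consistency 0.85, fpr 0.1, fnr 0.2
```

Because FPR and FNR are normalized by different denominators, overall consistency depends on the mix of correct and incorrect submissions, which is why all three numbers are reported.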

These results suggest that AutoCode produces high-quality competitive programming problems and judges solutions accurately, with higher consistency than the earlier test generators it was compared against.

Importance of Problem Setting in Evaluation

Traditional code benchmarks often rely on under-specified tests that let incorrect solutions pass, which inflates evaluation metrics and rewards fragile tactics. AutoCode addresses this with a validator-first approach and adversarial test generation, which substantially reduce both false positives and false negatives.

Conclusion

AutoCode is a significant step forward for competitive programming problem generation and validation. By focusing on problem setting and using a Validator–Generator–Checker loop, it produces test suites whose verdicts align closely with official judging. Beyond making programming contests more reliable, it also provides a cleaner reinforcement learning signal for downstream applications.

For further insights, you can access the full research paper detailing AutoCode’s methodology and findings.
