«`html

Qwen Team Introduces Qwen-Image-Edit: The Image Editing Version of Qwen-Image with Advanced Capabilities for Semantic and Appearance Editing

Understanding the Target Audience

The target audience for Qwen-Image-Edit primarily consists of professionals in fields such as digital marketing, graphic design, content creation, and AI development. These users are likely to be tech-savvy individuals or teams looking for tools that enhance their productivity and creativity.

Audience Pain Points

- Challenges in performing complex image edits efficiently.

- Struggles with maintaining semantic and visual integrity during editing processes.

- Need for tools that support bilingual text editing without compromising quality.

Goals and Interests

- To streamline the image editing process while retaining high-quality outputs.

- To leverage advanced AI tools for creative projects, including IP design and marketing materials.

- To find solutions that support both English and Chinese languages in content creation.

Communication Preferences

This audience prefers clear, concise, and technical communication. They appreciate detailed specifications, practical examples, and a focus on how tools can solve their specific challenges in business and creative tasks.

Overview of Qwen-Image-Edit

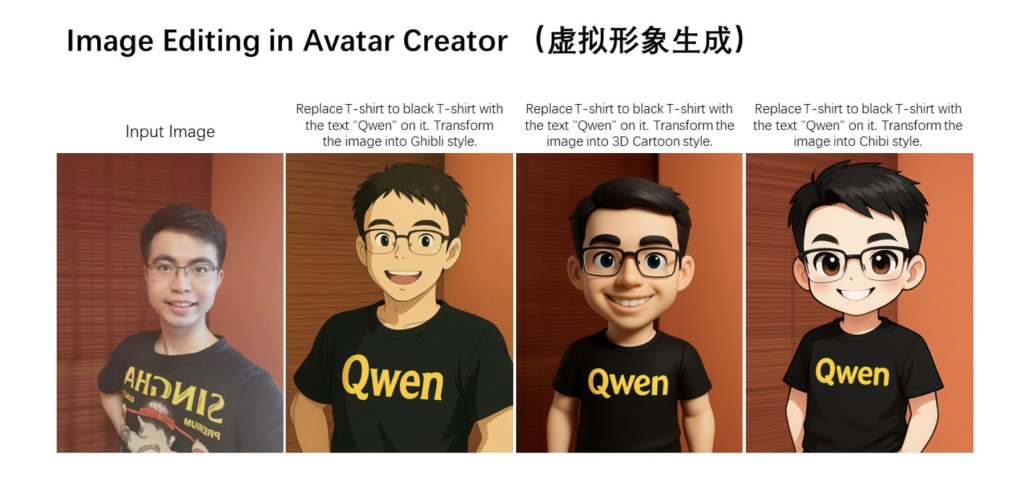

Launched in August 2025 by Alibaba’s Qwen Team, Qwen-Image-Edit builds upon the 20B-parameter Qwen-Image foundation to offer enhanced image editing capabilities. This model excels in both semantic editing, such as style transfer and novel view synthesis, and appearance editing, including precise object modifications, while maintaining the original model’s proficiency in complex text rendering for English and Chinese.

Architecture and Key Innovations

Qwen-Image-Edit extends the Multimodal Diffusion Transformer (MMDiT) architecture of Qwen-Image. It utilizes a Qwen2.5-VL multimodal large language model (MLLM) for text conditioning, a Variational AutoEncoder (VAE) for image tokenization, and the MMDiT backbone for joint modeling. For editing, dual encoding processes the input image through Qwen2.5-VL for high-level semantic features and VAE for low-level details. This approach enables balanced semantic coherence and visual fidelity.

Key Features of Qwen-Image-Edit

- Semantic and Appearance Editing: Supports low-level visual appearance editing and high-level visual semantic editing.

- Precise Text Editing: Enables bilingual text editing, preserving original font, size, and style.

- Strong Benchmark Performance: Achieves state-of-the-art results on public benchmarks for image editing tasks.

Training and Data Pipeline

Qwen-Image-Edit uses a curated dataset of billions of image-text pairs across various domains. It employs a multi-task training paradigm integrating T2I, I2I, and TI2I objectives, with a seven-stage filtering pipeline to ensure data quality. Training utilizes flow matching with a Producer-Consumer framework for scalability, followed by supervised fine-tuning and reinforcement learning for preference alignment.

Advanced Editing Capabilities

Qwen-Image-Edit excels in semantic editing, enabling tasks such as generating themed emojis while maintaining character consistency. It supports 180-degree novel view synthesis and style transfer, achieving high fidelity and semantic integrity. For appearance editing, it can add or remove elements without altering the surrounding context, and it allows for precise bilingual text editing.

Benchmark Results and Evaluations

Qwen-Image-Edit leads editing benchmarks, scoring 7.56 overall on GEdit-Bench-EN and 7.52 on CN. It outperforms competing models in multiple tasks, showcasing its effectiveness in instruction-following and multilingual fidelity.

Deployment and Practical Usage

Qwen-Image-Edit is deployable via Hugging Face Diffusers, allowing users to leverage its capabilities in various applications. Additionally, Alibaba Cloud’s Model Studio provides API access for scalable inference.

Future Implications

Qwen-Image-Edit represents a significant advancement in vision-language interfaces, enabling seamless content manipulation for creators and suggesting potential extensions to video and 3D applications.

Get Started

Explore the technical details and models on Hugging Face. Try the model and access tutorials, codes, and notebooks on our GitHub page. Follow us on Twitter and join our community of over 100k in the ML subreddit.

«`