Modern language models have transformed how we interact with technology, helping us draft emails, write articles, generate code, and much more. However, many of these models are hamstrung by overly cautious guardrails that withhold certain kinds of information or enforce a predetermined moral stance. These constraints exist for safety reasons, but they often reduce the model's usefulness, producing refusals or evasive answers even to harmless queries. Frustrated users resort to workarounds such as special prompts or elaborate jailbreaks just to get a direct answer. This gap between what users expect and what models allow remains contentious, and it highlights the need for a model that respects user autonomy while making full use of its capabilities.

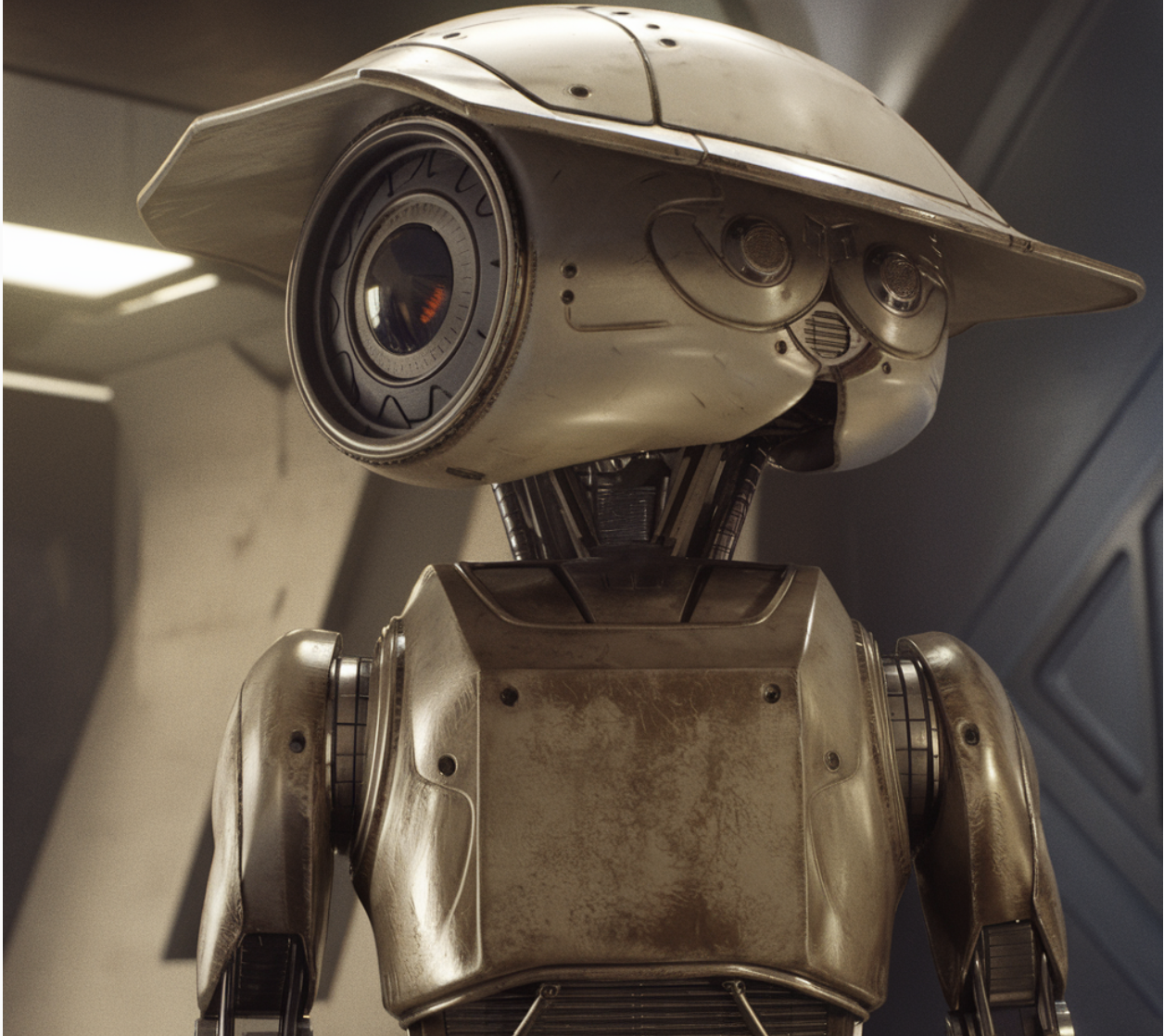

Meet Beepo-22B: A Fine-Tuned Solution for User-Centric AI

Meet Beepo-22B: a fine-tuned version of Mistral Small Instruct 22B designed to deliver completely uncensored, unrestricted responses to user instructions. Unlike many other instruction-tuned models, Beepo-22B prioritizes helpfulness without imposing moral judgments or requiring elaborate prompt hacks to get around content limits. It is built to simply follow the user's instructions and do its best on every request, giving users access to the full capabilities of a sophisticated model without jumping through hoops or dealing with refusals.

Beepo-22B follows the Alpaca instruct format and is also compatible with similar formats such as Mistral v3, so users can move between instruct models and still get consistent results. For flexible deployment, it is distributed in several formats, including GGUF (used by llama.cpp-based runtimes) and Safetensors (used by Hugging Face Transformers). Whether it serves as a teaching aid, a research tool, or a creative assistant, Beepo-22B's core focus is on transparency and efficiency.
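To make the prompt format concrete, here is a minimal Python sketch that assembles a single-turn prompt in the Alpaca layout. It assumes the widely used template from the original Stanford Alpaca project (a preamble sentence followed by `### Instruction:`, an optional `### Input:`, and `### Response:` headers); the exact wording Beepo-22B was trained on may differ, so treat its model card as authoritative. The helper name `build_alpaca_prompt` is hypothetical.

```python
# Minimal sketch of an Alpaca-style prompt builder.
# Assumption: the standard Stanford Alpaca template; Beepo-22B's model card
# may specify a slightly different preamble or spacing.

PREAMBLE_NO_INPUT = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request."
)
PREAMBLE_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. "
    "Write a response that appropriately completes the request."
)


def build_alpaca_prompt(instruction: str, context: str = "", use_preamble: bool = True) -> str:
    """Format one request in the Alpaca instruct layout (hypothetical helper)."""
    parts = []
    if use_preamble:
        # The standard template uses a different preamble when an input is present.
        parts.append(PREAMBLE_WITH_INPUT if context else PREAMBLE_NO_INPUT)
    parts.append("### Instruction:\n" + instruction.strip())
    if context:
        parts.append("### Input:\n" + context.strip())
    # End on the Response header so the model's completion starts right after it.
    parts.append("### Response:\n")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_alpaca_prompt("Summarize the plot of Hamlet in two sentences."))
```

The model's reply is whatever it generates after `### Response:`; one common approach is to have the runtime stop generation if the model begins a new `### Instruction:` header.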

Technical Details

Beepo-22B stands out for both its technical proficiency and its emphasis on user empowerment. It is based on Mistral Small Instruct 22B, a model known for balancing computational efficiency against robust language understanding. By fine-tuning in the Alpaca instruct style, Beepo-22B retains much of the base model's intelligence and general knowledge while dropping the overly restrictive behaviors that frustrate users. Notably, this was achieved without abliteration, a technique that suppresses refusals by removing a learned "refusal direction" from a model's weights and that can degrade the model's general capabilities as a side effect. Beepo-22B instead keeps the base model's capabilities intact, offering both strong performance and user freedom.

Beepo-22B can also be run in several popular tools. Notably, KoboldCpp, a llama.cpp-based program for running GGUF models with a built-in web interface, added Stable Diffusion 3.5 and Flux image generation in its latest release, giving users a single environment for multimodal projects that pair Beepo-22B's text generation with image generation. This versatility makes Beepo-22B a compelling choice for anyone seeking an unrestricted model that can handle diverse, creative tasks.
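For readers running Beepo-22B's GGUF build in KoboldCpp, the sketch below shows one way to send a prompt from Python using only the standard library. It assumes KoboldCpp's KoboldAI-compatible HTTP API at `/api/v1/generate` on the default local port 5001, a JSON request body with `prompt`, `max_length`, and `temperature` fields, and a reply shaped like `{"results": [{"text": ...}]}`; verify these against the KoboldCpp documentation for your version. The helper names `make_payload`, `parse_response`, and `generate` are hypothetical, and the image-generation features mentioned above are not covered here.

```python
import json
import urllib.request

# Assumption: KoboldCpp is running locally on its default port and exposes
# the KoboldAI-compatible text generation endpoint.
KOBOLDCPP_URL = "http://localhost:5001/api/v1/generate"


def make_payload(prompt: str, max_length: int = 200, temperature: float = 0.7) -> dict:
    """Build the JSON body for a generate request (hypothetical helper)."""
    return {
        "prompt": prompt,
        "max_length": max_length,    # maximum number of tokens to generate
        "temperature": temperature,  # sampling temperature
    }


def parse_response(body: bytes) -> str:
    """Extract the completion from a {"results": [{"text": ...}]} reply."""
    data = json.loads(body)
    return data["results"][0]["text"]


def generate(prompt: str, url: str = KOBOLDCPP_URL, **kwargs) -> str:
    """POST a prompt to a running KoboldCpp server and return its completion."""
    req = urllib.request.Request(
        url,
        data=json.dumps(make_payload(prompt, **kwargs)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Long generations on CPU can be slow, so allow a generous timeout.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return parse_response(resp.read())
```

With a model loaded, a call such as `generate("### Instruction:\nWrite a haiku about autumn.\n\n### Response:\n", max_length=80)` would return the model's completion as a string. Splitting payload construction and response parsing into separate functions keeps them testable without a running server.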

The release of Beepo-22B could be an important step towards providing users with AI that respects their autonomy. By offering an unrestricted model, it addresses a gap that has become more pronounced as other AI solutions impose heavier limitations on their capabilities. The freedom to explore the full capacity of AI technology without arbitrary constraints is crucial for those in creative, academic, or experimental fields who need access to unfiltered information and versatile AI assistance.

Early user tests have also been promising. In those tests, Beepo-22B consistently produced outputs rated highly for accuracy, relevance, and completing requests without refusals, and it kept its core intelligence while answering candidly regardless of the prompt. This openness is particularly valuable for developers and researchers who want to prototype or experiment without interruptions. Because it retains the foundation of Mistral Small 22B, Beepo-22B appears capable of handling sophisticated tasks, making it a useful addition to any AI toolbox where flexibility is a priority.

Conclusion

Beepo-22B is an important evolution in language models: a model that maximizes utility and minimizes unnecessary restrictions. It offers a reliable AI that won't censor information or require constant prompt modifications to produce straightforward outputs. From its roots in Mistral Small Instruct 22B to its distribution in GGUF and Safetensors formats, Beepo-22B is a versatile, intelligent, and obedient tool designed to serve a wide range of needs. Whether you're a researcher seeking unfiltered responses, a creative looking for inspiration, or simply someone who wants to interact freely with advanced AI, Beepo-22B opens up new possibilities in a fast-growing AI landscape. With its emphasis on unrestricted interaction, Beepo-22B makes a bold statement: the future of AI can be one where users are truly in control.

Check out the finetuned model on Hugging Face. All credit for this research goes to the researchers of this project.


The post Meet Beepo-22B: The Unrestricted AI Finetuned Model based on Mistral Small Instruct 22B appeared first on MarkTechPost.