While today’s LLMs can skillfully use various tools, they still operate synchronously, only processing one action at a time. This strict turn-based setup limits their ability to handle multiple tasks simultaneously, reducing interactivity and responsiveness. For example, in a hypothetical scenario with an AI travel assistant, the model can’t respond to a quick weather query while preparing a detailed itinerary, forcing users to wait. Although recent advancements, like OpenAI’s real-time voice API, support some asynchronous responses, broader implementation is limited by a lack of training data specifically for asynchronous tool use, and there are still design challenges to overcome.

The study builds on foundational systems research, particularly on asynchronous execution, polling versus interrupts, and real-time systems, with influences from works by Dijkstra, Hoare, and recent systems like ROS. Asynchronous execution supports AI agent responsiveness, which is crucial in real-time environments. In generative AI, the rise of large action models (LAMs), such as xLAM, has enhanced AI agents’ capabilities, enabling tool use and function calling beyond traditional LLM applications. New tools like AutoGen and AgentLite also foster multi-agent cooperation and task management, advancing coordination frameworks. Notably, developments in speech models and spoken dialogue systems further enhance AI’s real-time, interactive capabilities.

Salesforce AI Research introduces an approach for asynchronous AI agents, enabling them to multitask and use tools in real time. This work centers on an event-driven finite-state machine framework for efficient agent execution and interaction, enhanced with automatic speech recognition and text-to-speech capabilities. Drawing from concurrent programming and real-time systems, the architecture supports any language model that produces valid messages, and the researchers fine-tuned Llama 3.1 and GPT-4o to generate such messages more accurately. The study also explores architectural trade-offs, particularly in context management, comparing forking versus spawning methods in event-driven AI environments.

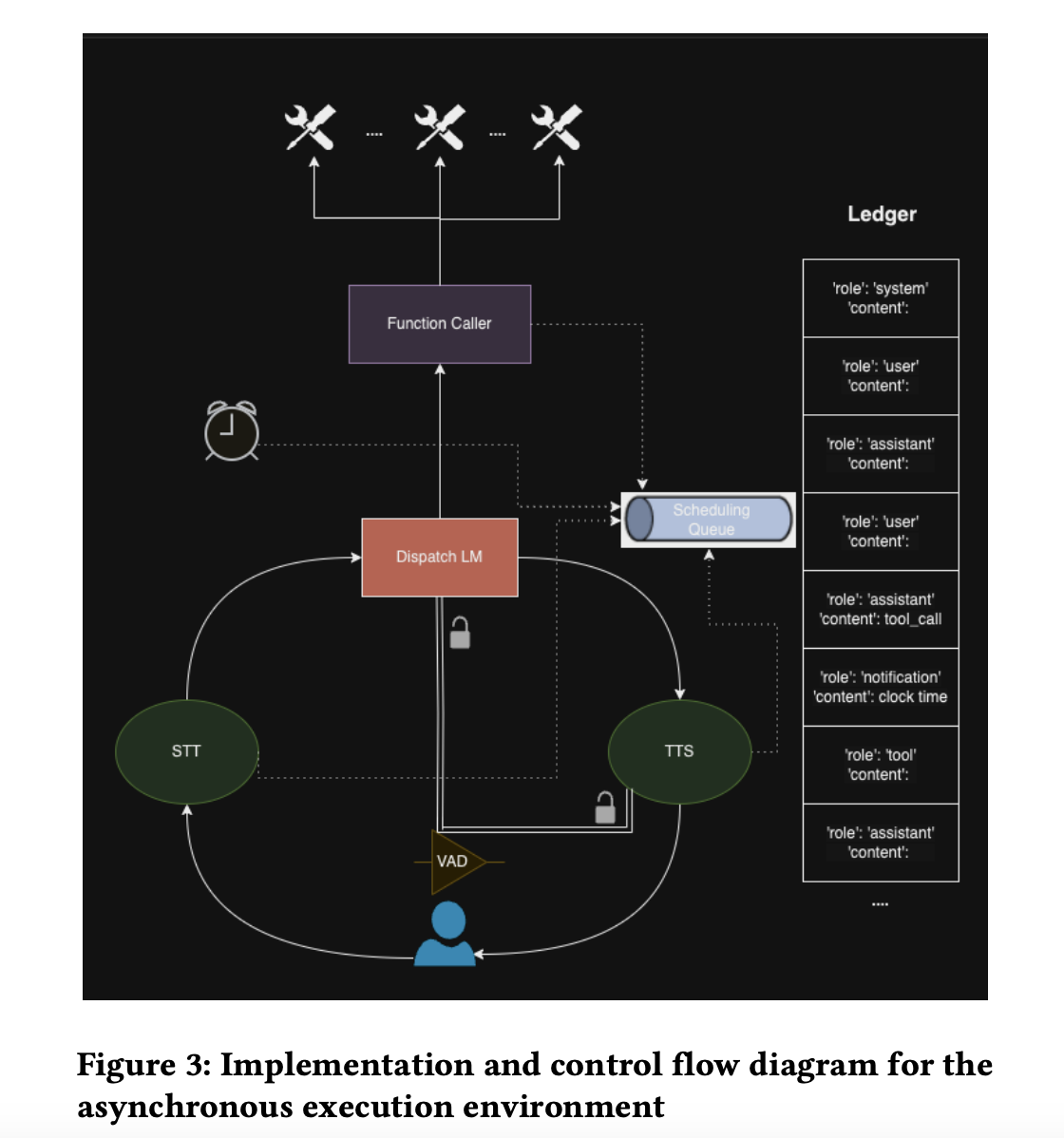

The proposed real-time agent framework integrates an asynchronous execution environment with a structured prompting specification, a split akin to the division between software and hardware. As long as the LLM generates outputs according to this specification, the environment can handle function calls and user interactions via speech-to-text (STT) and text-to-speech (TTS) peripherals. The core of this system is an event-driven finite-state machine (FSM) with priority scheduling, referred to as the dialog system, which manages conversational states, scheduling, and message processing. This dialog system is linked to a dispatcher responsible for LLM generation, function calling, and context management, with a ledger acting as a comprehensive record. STT and TTS support real-time voice-based interaction, but the system can also function using text input and output.
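To make the idea of an event-driven FSM with priority scheduling more concrete, here is a minimal Python sketch. It is not the researchers' implementation: the event names, priority values, and transition table are assumptions chosen for illustration, and only the four state names come from the article's description. It shows one plausible way a priority queue can let a user interruption be handled before lower-priority events that were queued earlier.

```python
import heapq
import itertools
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    LISTENING = auto()
    GENERATING = auto()
    EMITTING = auto()


# Hypothetical event names and priorities (lower number = handled first).
PRIORITY = {
    "user_interrupt": 0,
    "function_result": 1,
    "user_input_done": 2,
    "generation_done": 2,
    "emit_done": 2,
}

# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    (State.LISTENING, "user_input_done"): State.GENERATING,
    (State.IDLE, "function_result"): State.GENERATING,
    (State.GENERATING, "generation_done"): State.EMITTING,
    (State.EMITTING, "emit_done"): State.IDLE,
}


class DialogFSM:
    def __init__(self):
        self.state = State.IDLE
        self._queue = []
        # Tie-breaker so events with equal priority stay in arrival order.
        self._order = itertools.count()

    def post(self, event):
        """Queue an event; it is processed according to its priority."""
        heapq.heappush(self._queue, (PRIORITY[event], next(self._order), event))

    def step(self):
        """Process the most urgent queued event. Returns False when idle."""
        if not self._queue:
            return False
        _, _, event = heapq.heappop(self._queue)
        if event == "user_interrupt":
            # Assumption: an interruption moves to LISTENING from any state.
            self.state = State.LISTENING
        else:
            # Events with no matching transition leave the state unchanged.
            self.state = TRANSITIONS.get((self.state, event), self.state)
        return True

    def run(self):
        while self.step():
            pass
        return self.state


# The interrupt is posted second but handled first.
fsm = DialogFSM()
fsm.state = State.GENERATING
fsm.post("generation_done")
fsm.post("user_interrupt")
fsm.run()
print(fsm.state)  # State.LISTENING
```

In this sketch the stale `generation_done` event is simply ignored once the machine is listening. A real system would also need to cancel the in-flight generation, which is outside the scope of the example.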

The framework introduces “fork” and “spawn” options for handling parallel processes and creating concurrent instances with shared or unique contexts. This enables agents to work on complex tasks by dynamically organizing multi-agent hierarchies. The FSM prioritizes event processing to ensure responsiveness; high-priority events, like user interruptions, directly shift states to handle immediate user input. An extension of OpenAI’s ChatML markup language is used for asynchronous context management, adding a “notification” role for real-time updates and handling interruptions with specific tokens. This design supports highly interactive real-time communication by maintaining accurate context and ensuring smooth transitions between generating, listening, emitting, and idle states.
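The difference between forking and spawning, and the added "notification" role, can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the framework's actual code: the `Agent`, `Message`, and `render_chatml` names are hypothetical, fork is assumed to copy the parent's context while spawn starts from fresh instructions, and the interruption tokens mentioned above are left out because the article does not specify them. The `<|im_start|>` and `<|im_end|>` delimiters follow the standard ChatML convention.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    role: str  # "system", "user", "assistant", or the added "notification"
    content: str


@dataclass
class Agent:
    name: str
    ledger: list = field(default_factory=list)

    def fork(self, name):
        """Assumed semantics: the child starts with a copy of the parent's context."""
        return Agent(name, ledger=list(self.ledger))

    def spawn(self, name, instructions):
        """Assumed semantics: the child starts with a fresh context."""
        return Agent(name, ledger=[Message("system", instructions)])

    def notify(self, content):
        """Record an asynchronous update, such as a finished function call."""
        self.ledger.append(Message("notification", content))


def render_chatml(ledger):
    """Serialize a ledger using ChatML-style delimiters."""
    return "\n".join(
        f"<|im_start|>{m.role}\n{m.content}<|im_end|>" for m in ledger
    )


main = Agent("main", [Message("user", "Plan a three-day trip to Lisbon.")])
planner = main.fork("planner")  # sees the user's request
weather = main.spawn("weather", "Report the current weather.")  # does not
main.notify("weather agent finished: 21 C and sunny")
print(render_chatml(main.ledger))
```

Forking suits subtasks that need the full conversation so far, while spawning keeps the child's context small when the subtask is self-contained. Either way, results come back to the parent as notification messages rather than as a blocking return value.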

In conclusion, the study presents a real-time AI agent framework that enhances interactivity through asynchronous execution, allowing simultaneous tool usage and multitasking and addressing the limitations of sequential, turn-based systems. Built on an event-driven finite-state machine, the architecture supports real-time tool use, voice interaction, and clock-aware task management. Fine-tuning Llama 3.1 and GPT-4o as dispatch models improved their generation of accurate ledger messages. The design also highlights the potential for tighter integration with multi-modal models to further reduce latency and improve performance. Future directions include exploring multi-modal language models and extended multi-agent systems for time-constrained tasks.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Asynchronous AI Agent Framework: Enhancing Real-Time Interaction and Multitasking with Event-Driven FSM Architecture appeared first on MarkTechPost.