Large language models (LLMs) have shown exceptional capabilities in comprehending human language, reasoning, and knowledge acquisition, suggesting their potential to serve as autonomous agents. However, training high-performance web agents based on open LLMs within online environments, such as WebArena, faces several critical challenges. The first challenge is insufficient predefined training tasks in online benchmarks. The next challenge is assessing success for arbitrary web browsing tasks due to the sparsity and high cost of feedback signals. Lastly, the absence of a predefined training set necessitates online exploration, leading to policy distribution drift and potential catastrophic forgetting, which can decrease the agent’s performance over time.

The existing methods include adopting LLMs as Agents and Reinforcement Learning (RL) for LLMs. Current research in LLMs as Agents has two main categories: training-free and training-based approaches. While some studies have used powerful LLMs like GPT-4 to generate demonstrations, the accuracy of these methods remains insufficient for complex tasks. Researchers have explored RL techniques to address this challenge, which uses sequential decision-making to control devices and interact with complex environments. Existing RL-based methods, such as AgentQ, which uses DPO for policy updates, and actor-critic architectures, have shown promise in complex device control tasks. However, the limited and sparse feedback signals are often binary success or failure after multiple interaction rounds in web-based tasks.

Researchers from Tsinghua University and Zhipu AI have proposed WEBRL, a self-evolving online curriculum RL framework designed to train high-performance web agents using open LLMs. It addresses the key challenges in building LLM web agents, including the scarcity of training tasks, sparse feedback signals, and policy distribution drift in online learning. Moreover, it utilizes three key components:

- A self-evolving curriculum that generates new tasks from unsuccessful attempts.

- A robust outcome-supervised reward model (ORM)

- Adaptive RL strategies to ensure consistent improvements.

Moreover, WEBRL bridges the gap between open and proprietary LLM-based web agents, creating a way for more accessible and powerful autonomous web interaction systems.

WEBRL utilizes a self-evolving online curriculum that harnesses the trial-and-error process inherent in exploration to address the scarcity of web agent training tasks. In each training phase, WEBRL autonomously generates novel tasks from unsuccessful attempts in the preceding phase, providing a progressive learning trajectory. It also incorporates a KL-divergence term between the reference and actor policies into its learning algorithm to reduce the policy distribution shift induced by curriculum-based RL. This constraint on policy updates promotes stability and prevents catastrophic forgetting. Moreover, WEBRL implements an experience replay buffer augmented with a novel actor confidence filtering strategy.

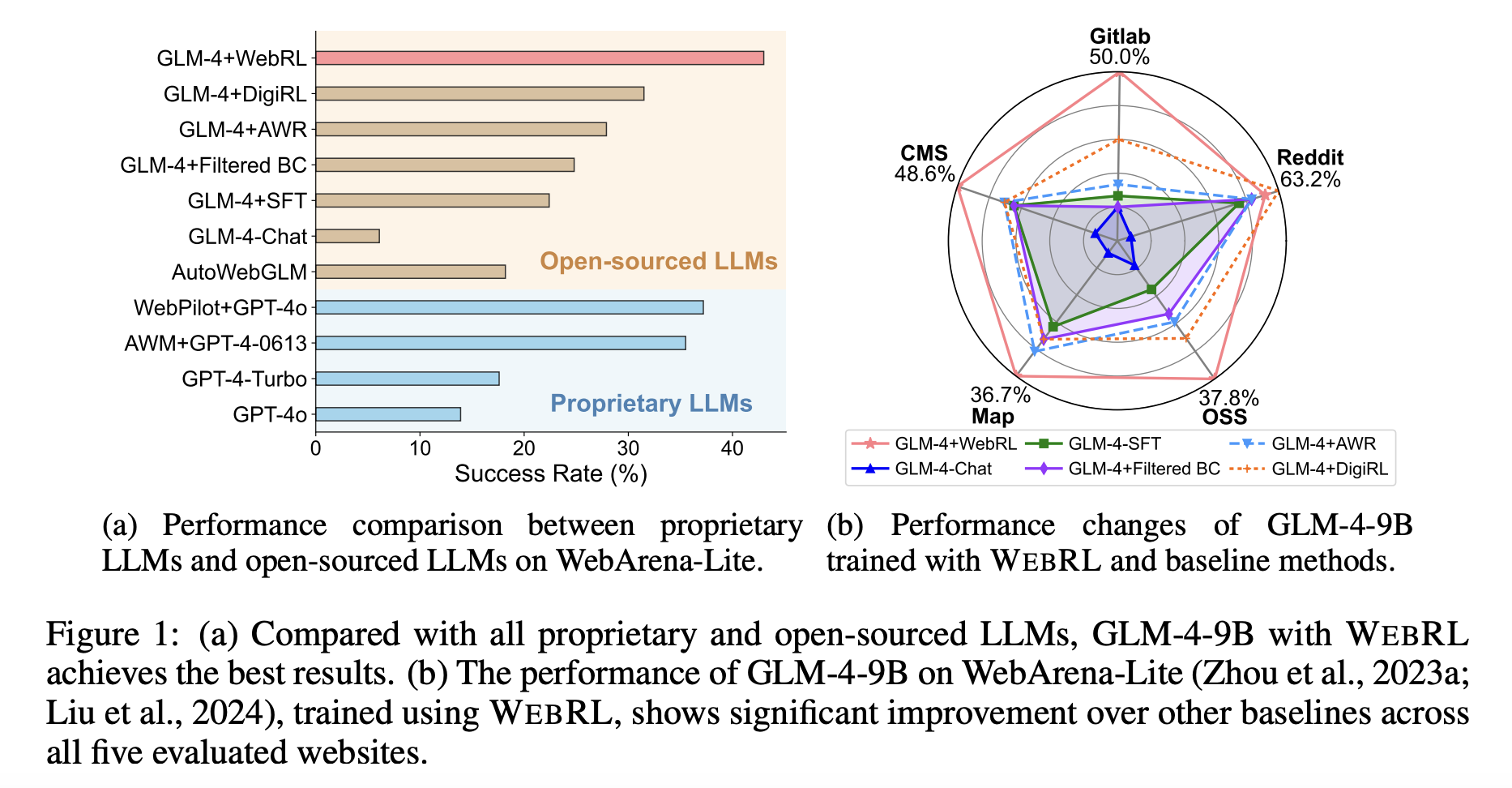

The results obtained for Llama-3.1-8B trained using WEBRL achieve an average accuracy of 42.4%, surpassing all the baseline approaches, including prompting and training alternatives. WEBRL excels in specific tasks such as Gitlab (46.7%) and CMS (54.3%), showcasing its ability to address complex web tasks effectively. Moreover, it outperforms imitation learning-based methods, such as SFT and Filtered BC. Moreover, it consistently outperforms DigiRL, a previous state-of-the-art method that conducts policy updates on a predefined, fixed set of tasks, which may not align with the model’s current skill level. WEBRL addresses this by using self-evolving curriculum learning, adjusting the task complexity based on the model’s abilities, promoting wider exploration, and supporting continuous improvement.

In this paper, the researchers have introduced WEBRL, a novel self-evolving online curriculum RL framework for training LLM-based web agents. It addresses the critical challenges in building effective LLM web agents, including the scarcity of training tasks, the sparsity of feedback signals, and the policy distribution drift in online learning. The results demonstrate that WEBRL enables LLM-based web agents to outperform existing state-of-the-art approaches, including proprietary LLM APIs, and these findings help enhance the capabilities of open-source LLMs for web-based tasks, paving the way for more accessible and powerful autonomous web interaction systems. The successful application of WEBRL across different LLM architectures, like Llama-3.1 and GLM-4 validates the robustness and adaptability of the proposed framework.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter.. Don’t Forget to join our 55k+ ML SubReddit.

[Sponsorship Opportunity with us] Promote Your Research/Product/Webinar with 1Million+ Monthly Readers and 500k+ Community Members

The post WEBRL: A Self-Evolving Online Curriculum Reinforcement Learning Framework for Training High-Performance Web Agents with Open LLMs appeared first on MarkTechPost.