The most serious challenge facing Implicit Graph Neural Networks (IGNNs) is slow inference and limited scalability. While these networks are effective at capturing long-range dependencies in graphs and mitigating over-smoothing, they compute their node representations through computationally expensive fixed-point iterations. This reliance on iterative procedures severely limits their scalability, particularly on large-scale graphs such as those found in social networks, citation networks, and e-commerce. The high cost of reaching convergence slows inference and is a major bottleneck for real-world applications, where rapid inference and high accuracy are both critical.

Current solutions for IGNNs rely on fixed-point solvers such as Picard iteration or Anderson Acceleration (AA), each of which needs multiple forward iterations to reach a fixed point. Although functional, these methods are computationally expensive and scale poorly with graph size. For instance, even on a small graph like Citeseer, IGNNs require over 20 iterations to converge, and this burden increases significantly on larger graphs. The slow convergence and high computational demands make IGNNs impractical for real-time or large-scale graph learning tasks.
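To make the two solver families concrete, here is a minimal pure-Python sketch that compares Picard iteration with depth-1 Anderson Acceleration on a toy implicit layer. Everything in it is an illustrative assumption rather than the paper's setup: the layer form `z = tanh(w * A_hat z + b)` with a scalar state per node, the weight `w = 0.9`, the path graph, and all function names are hypothetical. The Anderson routine also adds a simple residual safeguard (fall back to a Picard step when the accelerated step does not shrink the residual), which is a robustness choice of this sketch and not something the article describes.

```python
import math

def normalized_path_graph(n):
    """Symmetrically normalised adjacency with self-loops for a path graph,
    stored as one {neighbour: weight} dict per node (toy graph, assumption)."""
    nbrs = [[j for j in (i - 1, i, i + 1) if 0 <= j < n] for i in range(n)]
    deg = [len(ns) for ns in nbrs]
    return [{j: 1.0 / math.sqrt(deg[i] * deg[j]) for j in nbrs[i]} for i in range(n)]

def layer(z, adj, b, w=0.9):
    """One update of the assumed implicit layer: z <- tanh(w * A_hat z + b).
    With |w| < 1 this map is a contraction, so a unique fixed point exists."""
    return [math.tanh(w * sum(a * z[j] for j, a in row.items()) + b[i])
            for i, row in enumerate(adj)]

def norm(v):
    return math.sqrt(sum(x * x for x in v))

def sub(u, v):
    return [a - c for a, c in zip(u, v)]

def picard(adj, b, tol=1e-8, max_evals=1000):
    """Plain fixed-point iteration; returns (z, number of layer evaluations)."""
    z = [0.0] * len(b)
    for evals in range(1, max_evals + 1):
        fz = layer(z, adj, b)
        if norm(sub(fz, z)) < tol:
            return z, evals
        z = fz
    return z, max_evals

def anderson_depth1(adj, b, tol=1e-8, max_evals=1000):
    """Anderson Acceleration with a history of one previous iterate, plus a
    residual safeguard; returns (z, number of layer evaluations)."""
    z_old = [0.0] * len(b)
    f_old = layer(z_old, adj, b)
    g_old = sub(f_old, z_old)          # residual g = f(z) - z at the old iterate
    z, evals = f_old, 1                # the first step is a plain Picard step
    while evals < max_evals:
        fz = layer(z, adj, b)
        evals += 1
        g = sub(fz, z)
        if norm(g) < tol:
            return z, evals
        if norm(g) > 0.95 * norm(g_old):
            z = f_old                  # safeguard: reject, take a Picard step
            continue
        # Least-squares mixing weight: minimise ||(1 - alpha) * g + alpha * g_old||.
        dg = sub(g, g_old)
        denom = sum(d * d for d in dg)
        alpha = sum(a * d for a, d in zip(g, dg)) / denom if denom > 0 else 0.0
        z_new = [(1 - alpha) * p + alpha * q for p, q in zip(fz, f_old)]
        z_old, f_old, g_old, z = z, fz, g, z_new
    return z, max_evals

adj = normalized_path_graph(20)
b = [0.5 * math.sin(i) for i in range(20)]   # stand-in for encoded node features
z_picard, evals_picard = picard(adj, b)
z_aa, evals_aa = anderson_depth1(adj, b)
print("Picard evaluations:  ", evals_picard)
print("Anderson evaluations:", evals_aa)
```

Both routines stop at the same fixed point. On contractive maps like this one the accelerated solver usually needs fewer layer evaluations, although that is not guaranteed, and each evaluation on a real IGNN is a full pass over the graph, which is why the iteration count dominates inference cost.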

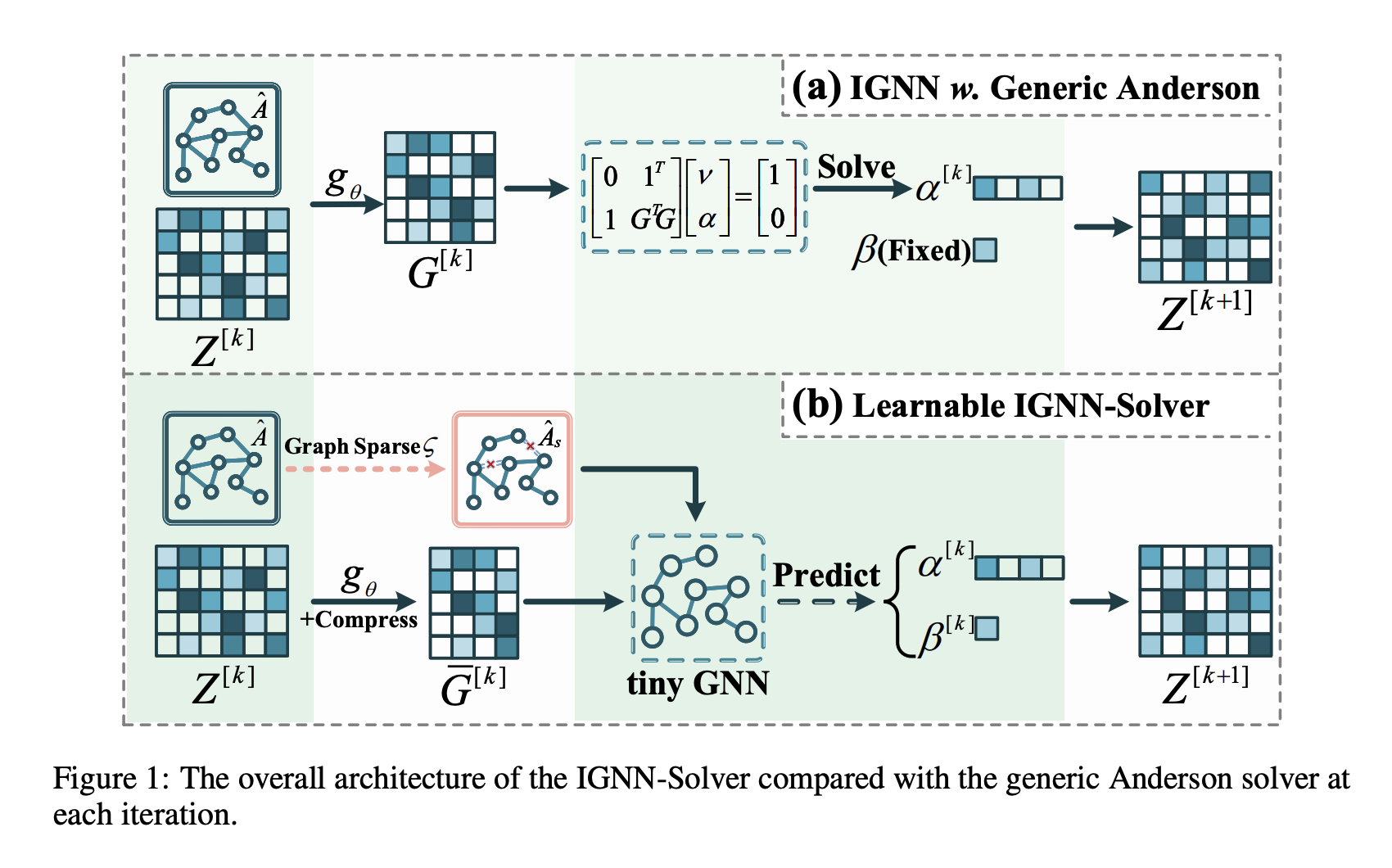

A team of researchers from Huazhong University of Science and Technology, Shanghai Jiao Tong University, and Renmin University of China has introduced IGNN-Solver, a framework that accelerates fixed-point solving in IGNNs using a generalized Anderson Acceleration method parameterized by a small Graph Neural Network (GNN). IGNN-Solver addresses the speed and scalability issues of traditional solvers by predicting the next iteration step efficiently and modeling the iterative updates as a temporal process that depends on the graph structure. A key feature is the lightweight GNN, which dynamically adjusts the solver's parameters during iteration and so reduces the number of steps required for convergence. The approach speeds up inference by up to 8× while maintaining high accuracy, making it well suited to large-scale graph learning tasks.

IGNN-Solver integrates two critical components:

- A learnable initializer that estimates an optimal starting point for the fixed-point iteration process, reducing the number of iterations needed for convergence.

- A generalized Anderson Acceleration technique that uses a small GNN to model and predict iterative updates as graph-dependent steps. This enables efficient adjustment of iteration steps to ensure fast convergence without sacrificing accuracy.

The researchers validated IGNN-Solver’s performance on nine real-world datasets, including large-scale datasets like Amazon-all, Reddit, ogbn-arxiv, and ogbn-products, with node and edge counts ranging from hundreds of thousands to millions. Results show that IGNN-Solver adds only 1% to the total training time of the IGNN model while significantly accelerating inference.
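For reference, the classical Anderson Acceleration update that the second component generalizes can be written as follows. The notation is an assumption of this note, not taken from the paper: $f$ is one application of the implicit layer, $z^{(k)}$ the $k$-th iterate, $g^{(i)} = f(z^{(i)}) - z^{(i)}$ its residual, $m_k = \min(m, k)$ the history length, and $\beta$ a damping factor.

$$
\alpha^{(k)} = \arg\min_{\alpha \in \mathbb{R}^{m_k+1},\ \mathbf{1}^{\top}\alpha = 1} \left\lVert G^{(k)} \alpha \right\rVert_2,
\qquad
G^{(k)} = \left[\, g^{(k-m_k)}, \dots, g^{(k)} \,\right]
$$

$$
z^{(k+1)} = \beta \sum_{i=0}^{m_k} \alpha^{(k)}_i \, f\!\left(z^{(k-m_k+i)}\right) + (1-\beta) \sum_{i=0}^{m_k} \alpha^{(k)}_i \, z^{(k-m_k+i)}
$$

Read against the description above, the generalized variant would obtain the mixing coefficients from the small GNN instead of solving this least-squares problem at every step, and would take $z^{(0)}$ from the learnable initializer rather than a fixed default such as zero. The exact parameterization is given in the paper and is not reproduced here.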

IGNN-Solver achieved substantial improvements in speed, with accuracy matching or exceeding the baseline, across the evaluated datasets. In large-scale applications such as Amazon-all, Reddit, ogbn-arxiv, and ogbn-products, the solver accelerated IGNN inference by up to 8× while maintaining or exceeding the accuracy of standard methods. For example, on the Reddit dataset, IGNN-Solver reached an accuracy of 93.91%, surpassing the baseline model’s 92.30%. Across all datasets, the solver delivered at least a 1.5× speedup, with larger graphs benefiting even more. The computational overhead introduced by the solver is also minimal, accounting for only about 1% of the total training time.

In conclusion, IGNN-Solver is a significant step toward resolving the scalability and speed challenges of IGNNs. By combining a learnable initializer with a lightweight, graph-dependent iteration process, it achieves considerable inference acceleration while maintaining high accuracy, which makes deploying IGNNs on large-scale, real-world graph datasets practical.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post IGNN-Solver: A Novel Graph Neural Solver for Implicit Graph Neural Networks appeared first on MarkTechPost.