Large vision-language models have emerged as powerful tools for multimodal understanding, demonstrating impressive capabilities in interpreting and generating content that combines visual and textual information. These models, such as LLaVA and its variants, fine-tune large language models (LLMs) on visual instruction data to perform complex vision tasks. However, developing high-quality visual instruction datasets presents significant challenges. These datasets need diverse images and text drawn from many tasks so that they cover a wide range of questions, spanning areas like object detection, visual reasoning, and image captioning. The quality and diversity of these datasets directly impact the model’s performance, as evidenced by LLaVA’s substantial improvements over previous state-of-the-art methods on tasks like GQA and VizWiz. Despite these advancements, current models remain limited by the modality gap between pre-trained vision encoders and language models, which restricts their generalization ability and feature representation.

Researchers have made significant strides in addressing the challenges of vision-language models through various approaches. Instruction tuning has emerged as a key methodology, enabling LLMs to interpret and execute human language instructions across diverse tasks. This approach has evolved from closed-domain instruction tuning, which uses publicly available datasets, to open-domain instruction tuning, which utilizes real-world question-answer datasets to enhance model performance in authentic user scenarios.

In vision-language integration, methods like LLaVA have pioneered the combination of LLMs with CLIP vision encoders, demonstrating remarkable capabilities in image-text dialogue tasks. Subsequent research has focused on refining visual instruction tuning by improving dataset quality and variety during pre-training and fine-tuning phases. Models such as LLaVA-v1.5 and ShareGPT4V have achieved notable success in general vision-language comprehension, showcasing their ability to handle complex question-answering tasks.

These advancements highlight the importance of sophisticated data handling and model-tuning strategies in developing effective vision-language models. However, challenges remain in bridging the modality gap between vision and language domains, necessitating continued innovation in model architecture and training methodologies.

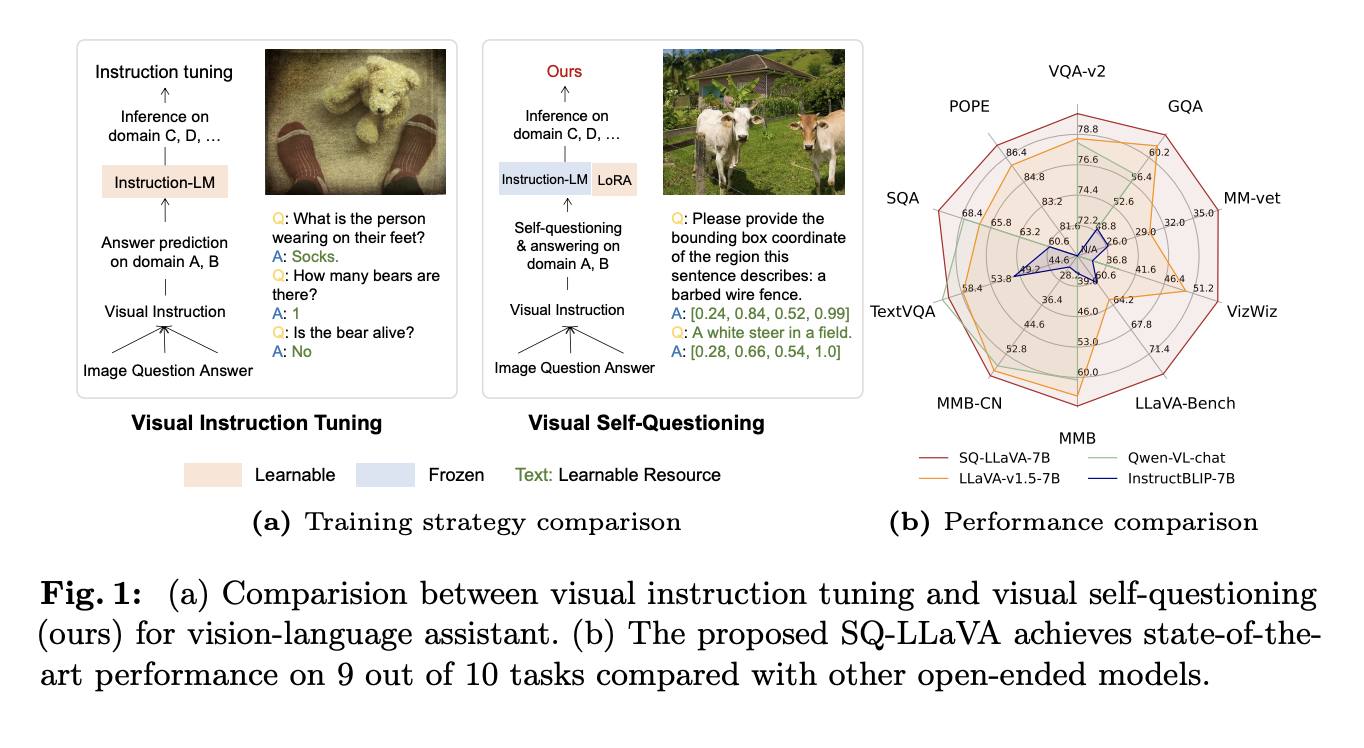

Researchers from Rochester Institute of Technology and Salesforce AI Research propose SQ-LLaVA (Self-Questioning LLaVA), a framework built on a visual self-questioning approach. This method aims to enhance vision-language understanding by training the LLM to ask questions and discover visual clues without requiring additional external data. Unlike existing visual instruction tuning methods that focus primarily on answer prediction, SQ-LLaVA also learns to extract relevant question context from images.

The approach is based on the observation that questions often contain more image-related information than answers, as evidenced by higher CLIPScores for image-question pairs compared to image-answer pairs in existing datasets. Building on this insight, SQ-LLaVA treats the questions within instruction data as an additional learning resource, effectively enhancing the model’s curiosity and questioning ability.
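To make the comparison concrete, here is a minimal sketch of how a CLIPScore can be computed from an image embedding and a text embedding. It assumes the commonly cited definition of CLIPScore (cosine similarity clipped at zero and scaled by 2.5); the article does not say which exact variant the authors used. The embeddings below are made-up toy vectors, not real CLIP outputs.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def clip_score(image_emb, text_emb, w=2.5):
    """CLIPScore in its commonly cited form: w * max(cos(image, text), 0)."""
    return w * max(cosine(image_emb, text_emb), 0.0)

# Toy embeddings, invented for illustration only. In practice these
# would come from a CLIP image encoder and a CLIP text encoder.
image_emb = [1.0, 0.0]
question_emb = [0.8, 0.6]  # hypothetical embedding of a question
answer_emb = [0.6, 0.8]    # hypothetical embedding of its answer

print("image-question:", clip_score(image_emb, question_emb))
print("image-answer:  ", clip_score(image_emb, answer_emb))
```

In this toy setup the question vector is closer to the image vector than the answer vector is, so it gets the higher score, mirroring the pattern the researchers report on real instruction data.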

To efficiently align vision and language domains, SQ-LLaVA employs Low-Rank Adaptations (LoRAs) to optimize both the vision encoder and the instructional LLM. In addition, a prototype extractor enhances visual representation by utilizing learned clusters with meaningful semantic information. This comprehensive approach aims to improve vision-language alignment and overall performance in various visual understanding tasks without the need for new data collection or extensive computational resources.
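For readers unfamiliar with LoRA, the sketch below shows the general idea on a single linear layer: the pre-trained weight stays frozen, and only two small low-rank matrices are trained. This is the standard LoRA formulation, not SQ-LLaVA's actual code; the class name `LoRALinear`, the rank, the scaling factor, and the layer sizes are all illustrative assumptions, and the article does not specify which layers are adapted.

```python
import numpy as np

class LoRALinear:
    """A frozen linear layer plus a trainable low-rank update (sketch)."""

    def __init__(self, weight, rank=4, alpha=8.0, seed=0):
        rng = np.random.default_rng(seed)
        d_out, d_in = weight.shape
        self.weight = weight                       # frozen, shape (d_out, d_in)
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))  # trainable
        self.B = np.zeros((d_out, rank))           # trainable, starts at zero
        self.scale = alpha / rank

    def __call__(self, x):
        # Base output plus the scaled low-rank correction x A^T B^T.
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self):
        return self.A.size + self.B.size

# Hypothetical sizes chosen only to keep the example small.
weight = np.random.default_rng(1).normal(size=(64, 32))
layer = LoRALinear(weight, rank=4)
x = np.ones((2, 32))
out = layer(x)

print(out.shape)
print("trainable:", layer.trainable_params(), "frozen:", weight.size)
```

Because `B` starts at zero, the adapted layer initially behaves exactly like the frozen one, and the number of trainable values grows with the rank rather than with the full weight matrix.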

The SQ-LLaVA model architecture comprises four main components designed to enhance vision-language understanding. At its core is a pre-trained CLIP-ViT vision encoder that extracts sequence embeddings from input images. This is complemented by a robust prototype extractor that learns visual clusters to enrich the original image tokens, improving the model’s ability to recognize and group similar visual patterns.
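As a rough illustration of what "learning visual clusters to enrich image tokens" could look like, the sketch below runs a soft k-means style loop over token embeddings and then adds cluster information back to each token. This is an assumption-laden toy, not the authors' prototype extractor: the function names, the number of prototypes, the temperature, and the attention-style enrichment step are all hypothetical choices.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def extract_prototypes(tokens, num_prototypes=4, num_iters=5,
                       temperature=1.0, seed=0):
    """Alternate between soft cluster assignment and center updates."""
    rng = np.random.default_rng(seed)
    idx = rng.choice(len(tokens), size=num_prototypes, replace=False)
    centers = tokens[idx].copy()  # initialise centers from random tokens
    for _ in range(num_iters):
        # Squared distance from every token to every center: (N, K).
        dists = ((tokens[:, None, :] - centers[None, :, :]) ** 2).sum(-1)
        assign = softmax(-dists / temperature, axis=1)
        # New centers are assignment-weighted means of the tokens.
        centers = (assign.T @ tokens) / (assign.sum(axis=0)[:, None] + 1e-8)
    return centers, assign

def enrich_tokens(tokens, centers):
    """Each token attends over the prototypes; the result is added back."""
    scores = tokens @ centers.T / np.sqrt(tokens.shape[1])
    attn = softmax(scores, axis=1)
    return tokens + attn @ centers

# Toy stand-in for image token embeddings (20 tokens, 16 dimensions).
tokens = np.random.default_rng(0).normal(size=(20, 16))
centers, assign = extract_prototypes(tokens)
enriched = enrich_tokens(tokens, centers)
print(centers.shape, assign.shape, enriched.shape)
```

The enriched tokens keep the original shape, so a downstream projection layer can consume them without any change.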

A trainable projection block, consisting of two linear layers, maps the enhanced image tokens to the language domain, addressing the dimension mismatch between visual and linguistic representations. The backbone of the model is a pre-trained Vicuna LLM, which predicts subsequent tokens based on the previous embedding sequence.
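A two-layer projection of this kind can be sketched as follows. The article only states that the block has two linear layers; the GELU activation between them, the random weights, and the small dimensions are assumptions made for illustration (real vision and LLM hidden sizes are much larger).

```python
import numpy as np

def gelu(x):
    """Tanh approximation of GELU (assumed activation, not confirmed)."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def project(image_tokens, w1, b1, w2, b2):
    """Map image tokens from the vision width to the LLM width."""
    hidden = gelu(image_tokens @ w1 + b1)
    return hidden @ w2 + b2

# Hypothetical toy sizes: 5 image tokens, vision width 16, LLM width 32.
rng = np.random.default_rng(0)
vision_dim, llm_dim, num_tokens = 16, 32, 5
w1 = rng.normal(0.0, 0.02, size=(vision_dim, llm_dim))
b1 = np.zeros(llm_dim)
w2 = rng.normal(0.0, 0.02, size=(llm_dim, llm_dim))
b2 = np.zeros(llm_dim)

image_tokens = rng.normal(size=(num_tokens, vision_dim))
projected = project(image_tokens, w1, b1, w2, b2)
print(projected.shape)  # one LLM-width vector per image token
```

After projection, each image token has the same width as a word embedding, so the LLM can process image and text tokens in a single sequence.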

The model introduces a visual self-questioning approach that uses a special [vusr] token to instruct the LLM to generate questions about the image. This process is designed to exploit the rich semantic information often present in questions, which can exceed that of answers. For enhanced visual representation, the prototype extractor uses clustering techniques to capture representative semantics in the latent space: it iteratively updates cluster assignments and centers, then adaptively maps visual cluster information to the raw image embeddings.
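The self-questioning idea can be pictured as a change in which parts of a training conversation the model is asked to predict. The sketch below is a hypothetical simplification: only the `[vusr]` token comes from the article, while the `<image>` placeholder, the `USER:` and `ASSISTANT:` role tags, and the helper functions are invented for illustration.

```python
VUSR = "[vusr]"            # special token named in the article
IMAGE = "<image>"          # hypothetical placeholder for image tokens
USER = "USER:"             # hypothetical role tag for ordinary tuning
ASSISTANT = "ASSISTANT:"   # hypothetical role tag for answers

def build_segments(turns, self_questioning=True):
    """Return (text, supervised) pairs for one image conversation.

    With self-questioning, the question text becomes a prediction
    target alongside the answer; otherwise only answers are supervised.
    """
    segments = [(IMAGE, False)]
    for question, answer in turns:
        if self_questioning:
            segments.append((VUSR, False))
            segments.append((question, True))
        else:
            segments.append((USER, False))
            segments.append((question, False))
        segments.append((ASSISTANT, False))
        segments.append((answer, True))
    return segments

def supervised_text(segments):
    """Collect the pieces the model is trained to predict."""
    return [text for text, supervised in segments if supervised]

def question_prompt():
    """Prompt that asks the model for a question about the image."""
    return f"{IMAGE} {VUSR}"

turns = [("What color is the bus?", "Red.")]
print(supervised_text(build_segments(turns)))
print(supervised_text(build_segments(turns, self_questioning=False)))
print(question_prompt())
```

Under this reading, the same instruction data yields extra supervision at no additional data cost, and at inference time the image followed by `[vusr]` is enough to prompt a question.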

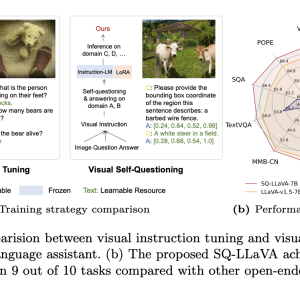

The researchers evaluated SQ-LLaVA on a comprehensive set of ten visual question-answering benchmarks, covering a wide range of tasks from academic VQA to instruction tuning tasks designed for large vision-language models. The model demonstrated significant improvements over existing methods in several key areas:

1. Performance: SQ-LLaVA-7B and SQ-LLaVA-13B outperformed previous methods in six out of ten visual instruction tuning tasks. Notably, SQ-LLaVA-7B achieved a 17.2% improvement over LLaVA-v1.5-7B on the LLaVA (in the wild) benchmark, indicating superior capabilities in detailed description and complex reasoning.

2. Scientific reasoning: The model showed improved performance on ScienceQA, suggesting strong capabilities in multi-hop reasoning and comprehension of complex scientific concepts.

3. Reliability: SQ-LLaVA-7B demonstrated improvements of 2% and 1% over LLaVA-v1.5-7B and ShareGPT4V-7B, respectively, on the POPE benchmark, indicating better reliability and reduced object hallucination.

4. Scalability: SQ-LLaVA-13B surpassed previous works in six out of ten benchmarks, demonstrating the method’s effectiveness with larger language models.

5. Visual information discovery: The model showed advanced capabilities in detailed image description, visual information summary, and visual self-questioning. It generated diverse and meaningful questions about given images without requiring human textual instructions.

6. Zero-shot image captioning: SQ-LLaVA achieved significant improvements over baseline models like ClipCap and DiscriTune, with average improvements of 73% and 66%, respectively, across all datasets.

These results were achieved with significantly fewer trainable parameters compared to other methods, highlighting the efficiency of the SQ-LLaVA approach. The model’s ability to generate diverse questions and provide detailed image descriptions demonstrates its potential as a powerful tool for visual information discovery and understanding.

SQ-LLaVA introduces a unique visual instruction tuning method that enhances vision-language understanding through self-questioning. The approach achieves superior performance with fewer parameters and less data across various benchmarks. It demonstrates improved generalization to unseen tasks, reduces object hallucination, and enhances semantic image interpretation. By framing questioning as an intrinsic goal, SQ-LLaVA explores model curiosity and proactive question-asking abilities. This research highlights the potential of visual self-questioning as a powerful training strategy, paving the way for more efficient and effective large vision-language models capable of tackling complex problems across diverse domains.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post SQ-LLaVA: A New Visual Instruction Tuning Method that Enhances General-Purpose Vision-Language Understanding and Image-Oriented Question Answering through Visual Self-Questioning appeared first on MarkTechPost.