Large Language Models (LLMs) model the relationships between words or tokens in a sequence primarily through the self-attention mechanism. However, this module's time and memory complexity grows quadratically with sequence length: doubling the sequence length roughly quadruples the memory and computation that attention requires. This makes scaling LLMs for applications involving longer contexts inefficient and challenging.
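
To make the quadratic growth concrete, here is a small back-of-the-envelope sketch. The helper name `attention_score_bytes` and the 2-bytes-per-element (FP16) default are illustrative assumptions, and the figure covers only a single score matrix for one head and one sequence.

```python
def attention_score_bytes(seq_len: int, bytes_per_element: int = 2) -> int:
    """Bytes needed to hold one seq_len x seq_len attention score matrix.

    Hypothetical helper: one head, one sequence, FP16 scores by default.
    """
    return seq_len * seq_len * bytes_per_element


if __name__ == "__main__":
    for n in (1_024, 2_048, 4_096, 8_192):
        mib = attention_score_bytes(n) / 2**20
        print(f"seq_len={n:>5}  score matrix: {mib:,.0f} MiB")
```

Each doubling of `seq_len` multiplies the result by four, which is the quadratic behavior described above.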

FlashAttention was developed to overcome this restriction by accelerating attention computations and using less memory. It does this by exploiting the GPU memory hierarchy, that is, the different levels of memory on a GPU and how quickly each can be accessed. By dividing the computation into smaller chunks that fit into the GPU's fast on-chip memory, FlashAttention avoids storing the full attention matrix, resulting in faster performance and less memory overhead. This increases the scalability of the attention mechanism, especially for longer sequences.
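
The chunking idea can be sketched in plain Python for a single query vector. This is a simplified illustration under stated assumptions, not the FlashAttention kernel itself: the function names and `block_size` are made up for this example, and real implementations operate on tiles of queries as well as keys and values inside GPU on-chip memory. The key point is that a running maximum and running sum let the softmax be computed block by block without ever holding all scores at once.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention_row_reference(q, keys, values):
    """Standard softmax attention for one query: materializes every score at once."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * _dot(q, k) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))]


def attention_row_tiled(q, keys, values, block_size=2):
    """Same result, but keys/values are visited one block at a time."""
    scale = 1.0 / math.sqrt(len(q))
    running_max = -math.inf        # largest score seen so far
    running_sum = 0.0              # softmax denominator, relative to running_max
    acc = [0.0] * len(values[0])   # unnormalized output, relative to running_max
    for start in range(0, len(keys), block_size):
        k_block = keys[start:start + block_size]
        v_block = values[start:start + block_size]
        scores = [scale * _dot(q, k) for k in k_block]
        new_max = max(running_max, max(scores))
        # Rescale what was accumulated under the old maximum (exp(-inf) == 0.0).
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(scores, v_block):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * x for a, x in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]


if __name__ == "__main__":
    q = [0.5, -1.0]
    keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 2.0], [0.5, 0.5]]
    values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
    print(attention_row_reference(q, keys, values))
    print(attention_row_tiled(q, keys, values, block_size=2))
```

Both functions should agree up to floating-point rounding; only the tiled version keeps its working set bounded by the block size.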

Combining quantization methods with FlashAttention is an intriguing new research topic. Quantization represents the data used in model computations in lower-precision numerical formats, such as INT8 (8-bit integer), trading some precision for faster processing and lower memory usage. This can yield even higher efficiency gains when combined with FlashAttention, particularly in the inference stage, when the trained model generates predictions for new inputs.
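
As a rough illustration of what INT8 quantization does to a set of values, the sketch below uses symmetric quantization with a single scale factor. This is one common scheme chosen for simplicity, not necessarily the one used in any particular system, and the function names are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the integers and the scale."""
    return [x * scale for x in quantized]


if __name__ == "__main__":
    original = [0.5, -1.27, 0.0, 1.0, 0.333]
    q, scale = quantize_int8(original)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(original, restored))
    print("int8 values:", q)
    print("scale:", scale, " worst round-trip error:", worst)
```

Each value is stored in one byte instead of two (FP16) or four (FP32), at the cost of a rounding error of at most half the scale factor per element.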

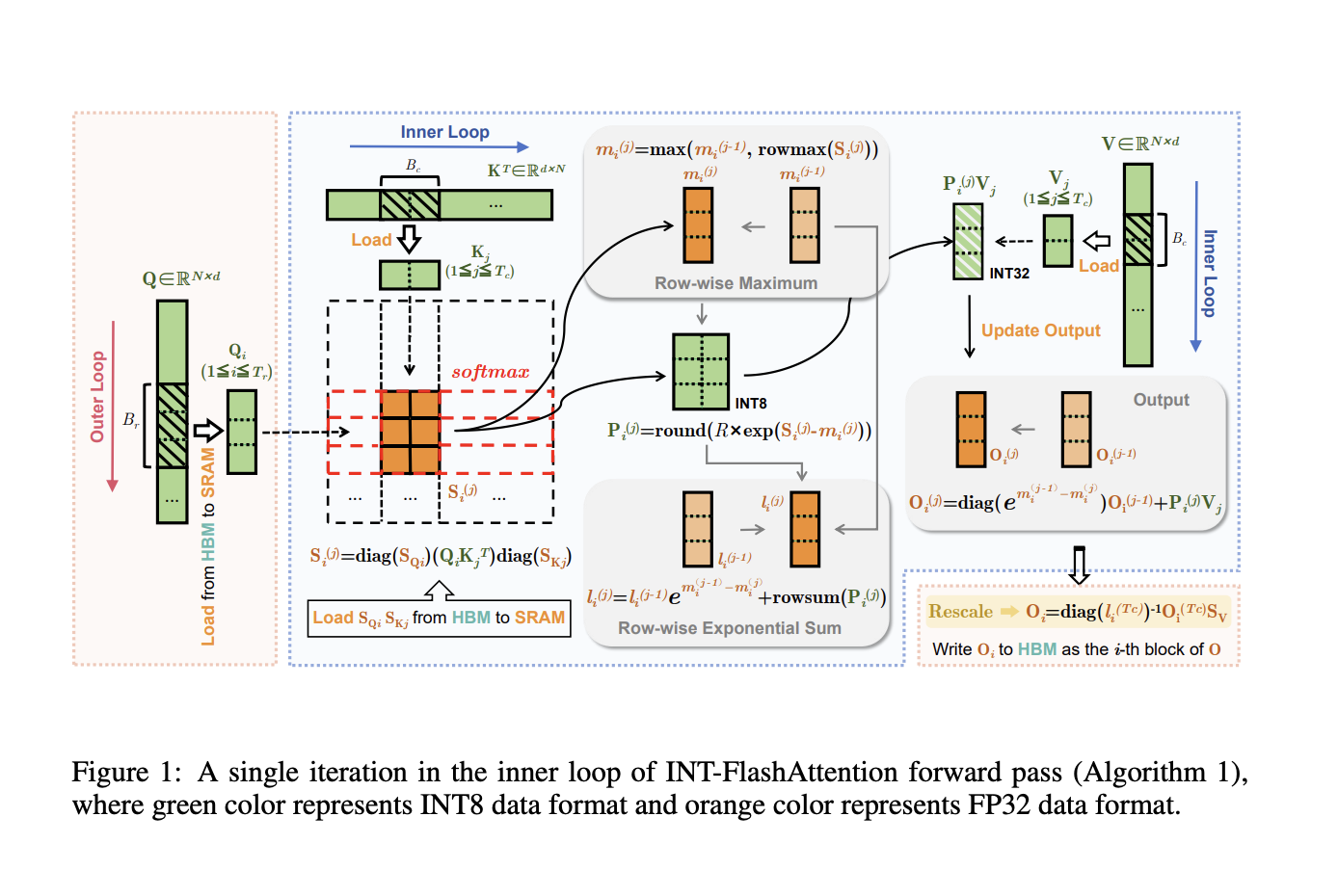

In recent research from China, INT-FlashAttention has been proposed, which is a significant innovation in this regard. Presented as the first INT8 quantization architecture compatible with the forward pass of FlashAttention, it targets Ampere GPUs such as NVIDIA's A100 series. INT-FlashAttention uses more efficient INT8 general matrix-multiplication (GEMM) kernels in place of the floating-point operations normally used in the self-attention module. Compared with floating-point formats such as FP16, INT8 operations demand substantially fewer processing resources, which increases inference speed and reduces energy use.

INT-FlashAttention is unique in that it can process fully INT8 inputs: the query (Q), key (K), and value (V) matrices at the core of the attention mechanism are all supplied in INT8. To retain accuracy at reduced precision, INT-FlashAttention applies a token-level post-training quantization technique that preserves token-specific information. This token-level approach is also flexible, making the framework compatible with other lower-precision formats, such as INT4 (4-bit integers), which could provide additional memory and computational savings.
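
The idea of token-level quantization can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' kernel: it covers only the Q·Kᵀ score computation, gives every token (row) its own symmetric scale, and uses hypothetical function names. The products are accumulated as integers, and each score is rescaled afterwards by the scales of the two tokens involved.

```python
def quantize_rows_int8(matrix):
    """Quantize each row (token) separately, returning integer rows and per-row scales."""
    int_rows, scales = [], []
    for row in matrix:
        max_abs = max(abs(x) for x in row)
        scale = max_abs / 127 if max_abs > 0 else 1.0
        int_rows.append([max(-127, min(127, round(x / scale))) for x in row])
        scales.append(scale)
    return int_rows, scales


def int8_attention_scores(queries, keys):
    """Approximate Q @ K^T using integer dot products plus per-token rescaling."""
    q_int, q_scales = quantize_rows_int8(queries)
    k_int, k_scales = quantize_rows_int8(keys)
    scores = []
    for q_row, q_scale in zip(q_int, q_scales):
        out_row = []
        for k_row, k_scale in zip(k_int, k_scales):
            acc = sum(a * b for a, b in zip(q_row, k_row))  # integer accumulation
            out_row.append(acc * q_scale * k_scale)         # dequantize this score
        scores.append(out_row)
    return scores


if __name__ == "__main__":
    # Two tokens with very different magnitudes: a per-token scale keeps the
    # small-valued token from being crushed by the large-valued one.
    queries = [[0.1, -0.2, 0.3], [10.0, -20.0, 5.0]]
    keys = [[0.5, 0.5, -0.5], [2.0, -1.0, 0.0]]
    for row in int8_attention_scores(queries, keys):
        print(row)
```

With one shared scale for the whole matrix, the first query token above would be rounded to just a handful of integer levels; per-token scales let each row use the full INT8 range.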

The team reports that, in their evaluation, INT-FlashAttention delivers 72% faster inference than the standard FP16 (16-bit floating-point) implementation of FlashAttention. Compared to FP8-based FlashAttention, it reduces quantization error by up to 82%, which means that the INT8 approach is more accurate than the FP8 alternative as well as fast. These findings suggest that INT-FlashAttention can greatly increase the scalability and efficiency of LLMs on commonly used hardware, such as Ampere GPUs.

The team has summarized their primary contributions as follows.

- The research presents INT-FlashAttention, a token-level post-training quantization architecture that integrates smoothly into the forward computational workflow of FlashAttention, enhancing efficiency without altering the core attention mechanism.

- The team has implemented the INT8 version of the INT-FlashAttention prototype, which is a major advancement in attention computing and quantization techniques.

- Experiments show that INT-FlashAttention achieves a much higher inference speed than the FP16 FlashAttention baseline. It also exhibits a smaller quantization error than FP8 FlashAttention, meaning that the speedup does not come at the cost of a less accurate representation of the data than the FP8 alternative.

In conclusion, the release of INT-FlashAttention is a key step towards improving the efficiency and accessibility of high-performance LLMs for a wider range of applications, especially in data centers where older GPU architectures like Ampere are still widely used. By using quantization and FlashAttention together, INT-FlashAttention provides a potent way to improve large-scale language model inference speed and accuracy.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Researchers from China Introduce INT-FlashAttention: INT8 Quantization Architecture Compatible with FlashAttention Improving the Inference Speed of FlashAttention on Ampere GPUs appeared first on MarkTechPost.