Software engineering applies principles from computer science to design, develop, and maintain software applications. As technology advances, software systems grow more complex, making it harder to ensure efficiency, accuracy, and overall performance. Artificial intelligence, particularly Large Language Models (LLMs), has significantly impacted this field. LLMs now automate tasks such as code generation, debugging, and software testing, reducing the human effort spent on these repetitive tasks. Such approaches are becoming critical for addressing the growing challenges of modern software development.

One of the major challenges in software engineering is managing the increasing complexity of software systems. As software scales, traditional methods often fail to keep up with the demands of modern applications. Developers need help generating reliable code, detecting vulnerabilities, and ensuring functionality throughout development. This complexity calls for solutions that not only assist with code generation but also integrate these various tasks seamlessly, minimizing errors and speeding up development.

Current tools used in software engineering, such as LLM-based models, assist developers by automating tasks like code summarization, bug detection, and code translation. However, these tools are typically designed for narrow, task-specific functions. They often lack a cohesive framework that integrates the full spectrum of software development tasks. This fragmentation limits their ability to address software engineering challenges in their broader context, leaving room for further innovation.

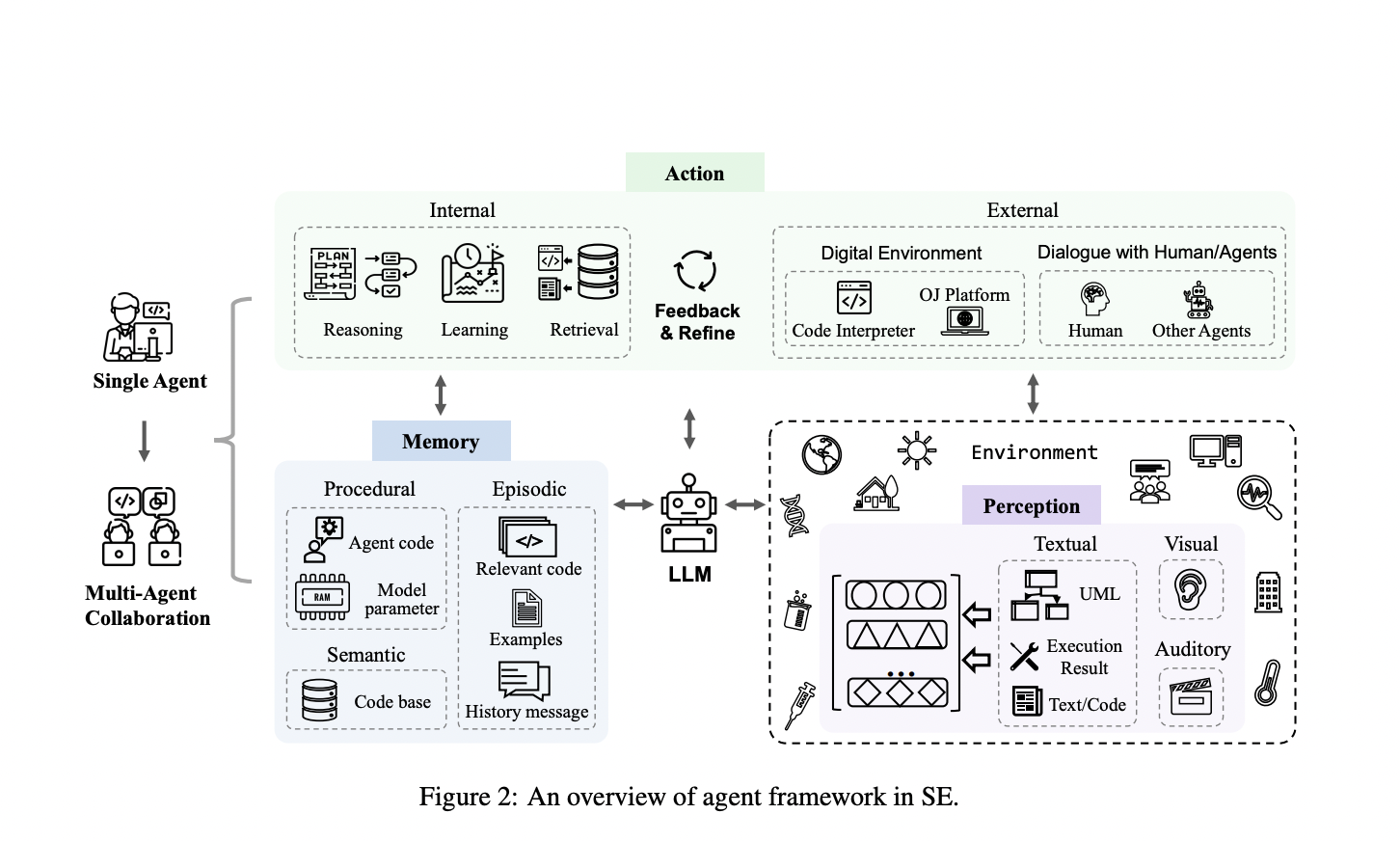

Researchers from Sun Yat-sen University, Xi’an Jiaotong University, Shenzhen Institute of Advanced Technology, Xiamen University, and Huawei Cloud Computing Technologies have proposed a new framework to tackle these challenges. This framework uses LLM-driven agents for software engineering tasks and includes three key modules: perception, memory, and action. The perception module processes various inputs, such as text, images, and audio, while the memory module organizes and stores this information for future decision-making. The action module uses this information to make informed decisions and perform tasks like code generation, debugging, and other software development activities.
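To make the data flow between the three modules concrete, here is a minimal Python sketch. It is an illustration only, not code from the paper: the class names `Observation`, `Perception`, `Memory`, and `Action`, the "most recent k items" recall policy, and the stand-in model `fake_llm` are all hypothetical. The image and audio branches simply wrap the payload where a real system would call a captioning or speech-to-text model.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Observation:
    """One input, normalized to text (hypothetical structure)."""
    modality: str
    text: str


class Perception:
    """Converts raw inputs of different modalities into text an LLM can read.

    The image and audio branches are placeholders: a real system would call
    a captioning or speech-to-text model instead of wrapping the payload.
    """

    def perceive(self, modality: str, payload: str) -> Observation:
        if modality == "text":
            return Observation("text", payload)
        if modality == "image":
            return Observation("image", f"[image description] {payload}")
        if modality == "audio":
            return Observation("audio", f"[audio transcript] {payload}")
        raise ValueError(f"unsupported modality: {modality}")


@dataclass
class Memory:
    """Keeps observations so that later decisions can draw on them."""
    items: List[Observation] = field(default_factory=list)

    def store(self, obs: Observation) -> None:
        self.items.append(obs)

    def recall(self, k: int = 3) -> List[Observation]:
        # Simplest possible retrieval policy: the k most recent items.
        return self.items[-k:] if k > 0 else []


class Action:
    """Combines the task with recalled memory into a prompt and calls the model."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm

    def act(self, task: str, context: List[Observation]) -> str:
        lines = [f"{o.modality}: {o.text}" for o in context]
        prompt = "Context:\n" + "\n".join(lines) + f"\nTask: {task}"
        return self.llm(prompt)


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call: echoes the last line of the prompt.
    return "ECHO: " + prompt.splitlines()[-1]


perception, memory, action = Perception(), Memory(), Action(fake_llm)
memory.store(perception.perceive("text", "Fix the failing test in utils.py"))
memory.store(perception.perceive("image", "screenshot of a stack trace"))
print(action.act("propose a patch", memory.recall()))  # ECHO: Task: propose a patch
```

Keeping the model behind a plain callable lets the same pipeline be exercised with a stub, as here, or with any real LLM client.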

In this framework, the three modules work together to automate complex workflows. The perception module processes inputs and converts them into a format that LLMs can understand. The memory module stores different types of information, such as semantic, episodic, and procedural memory, which the agent draws on to improve its decisions. The action module combines inputs and memory to execute tasks such as code generation and debugging, learning from previous actions to improve future outputs. This integrated approach enhances the system’s ability to handle a range of software engineering tasks with greater contextual awareness.
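The distinction between the three memory types, and the idea of learning from previous actions, can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's design: `Episode`, `AgentMemory`, and `build_context` are hypothetical names, and retrieval is done by exact key and task matching, whereas practical agents often use embedding-based search. Semantic memory holds general facts, episodic memory logs concrete past attempts, and procedural memory holds reusable step sequences; failed episodes are fed back into the next prompt as lessons.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Episode:
    """One concrete past attempt and its outcome (hypothetical structure)."""
    task: str
    attempt: str
    succeeded: bool
    feedback: str


@dataclass
class AgentMemory:
    # Semantic memory: general facts, e.g. API signatures or project conventions.
    semantic: Dict[str, str] = field(default_factory=dict)
    # Episodic memory: a log of concrete past attempts and how they turned out.
    episodic: List[Episode] = field(default_factory=list)
    # Procedural memory: named, reusable step sequences ("how to do X").
    procedural: Dict[str, List[str]] = field(default_factory=dict)

    def record(self, episode: Episode) -> None:
        self.episodic.append(episode)

    def build_context(self, task: str, keywords: List[str]) -> str:
        # Exact-match retrieval keeps the sketch simple.
        facts = [f"- {k}: {v}" for k, v in self.semantic.items() if k in keywords]
        # Earlier failures on the same task become lessons for the next attempt.
        lessons = [
            f"- previous attempt failed: {e.feedback}"
            for e in self.episodic
            if e.task == task and not e.succeeded
        ]
        steps = self.procedural.get(task, [])
        parts = ["Facts:", *facts, "Lessons:", *lessons, "Procedure:"]
        parts += [f"{i}. {s}" for i, s in enumerate(steps, start=1)]
        return "\n".join(parts)


mem = AgentMemory(
    semantic={"json.loads": "json.loads(s) parses a JSON string"},
    procedural={"fix_bug": ["reproduce the failure", "write a patch", "rerun the tests"]},
)
mem.record(Episode("fix_bug", "patch v1", False, "TypeError in parse_config"))
print(mem.build_context("fix_bug", ["json.loads"]))
```

The printed context lists the relevant fact, the lesson from the failed first patch, and the numbered repair procedure, so a second attempt starts with more information than the first.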

The study highlighted several performance challenges in implementing this framework. One significant issue is hallucination by LLM-based agents, such as generating calls to non-existent APIs. These hallucinations undermine the system’s reliability, so mitigating them is critical to improving performance. The framework also faces challenges in multi-agent collaboration, where agents must synchronize and share information, which increases computational costs and communication overhead. The researchers noted that improving resource efficiency and reducing these communication costs is essential for enhancing the system’s overall performance.
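A hallucinated API is easy to illustrate. The article does not say how the framework mitigates this problem; the sketch below shows one simple guard of my own choosing (not the paper's method): statically parse the generated code with Python's `ast` module and check whether each `module.attr` reference actually exists in the real module. The function name `find_missing_attributes` is hypothetical, and the check is deliberately narrow. It handles only plain `import mod` statements and direct `mod.attr` accesses. Because it imports the named modules, it should only be run on trusted or sandboxed module names.

```python
import ast
import importlib
from types import ModuleType
from typing import Dict, List


def find_missing_attributes(source: str) -> List[str]:
    """Report `module.attr` references in generated code that do not exist.

    Deliberately narrow: it only checks plain `import mod` statements and
    direct `mod.attr` accesses; aliases, `from ... import` and deeper
    attribute chains are not analyzed.
    """
    tree = ast.parse(source)
    modules: Dict[str, ModuleType] = {}
    missing: List[str] = []

    # Pass 1: import each top-level module named in a plain `import` statement.
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if alias.asname is None and "." not in alias.name:
                    try:
                        modules[alias.name] = importlib.import_module(alias.name)
                    except ImportError:
                        missing.append(alias.name)  # the module itself is invented

    # Pass 2: flag `mod.attr` where the real module has no such attribute.
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Attribute)
            and isinstance(node.value, ast.Name)
            and node.value.id in modules
            and not hasattr(modules[node.value.id], node.attr)
        ):
            missing.append(f"{node.value.id}.{node.attr}")
    return missing


generated = """
import json
import os

data = json.loads('{"a": 1}')
text = json.parse_string('{"a": 1}')  # plausible-looking but not a real function
os.path.exists('x')
"""
print(find_missing_attributes(generated))  # ['json.parse_string']
```

A check like this catches only one narrow class of hallucination; it says nothing about whether a real API is being used correctly, which is why running the code or its tests remains necessary.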

The study also discusses areas for future research, particularly reducing LLM hallucinations and optimizing multi-agent collaboration. These critical challenges must be resolved to fully realize the potential of LLM-based agents in software engineering. Further, incorporating more advanced software engineering technologies into these frameworks could enhance their capabilities, especially in handling complex software projects.

In conclusion, the research offers a comprehensive framework to address the growing challenges in software engineering by leveraging LLM-based agents. The proposed system integrates perception, memory, and action modules to automate key tasks such as code generation, debugging, and decision-making. While the framework shows promise, the study emphasizes opportunities for improvement, particularly in reducing hallucinations and making multi-agent collaboration more efficient. The work by researchers at Sun Yat-sen University, Huawei Cloud Computing Technologies, and their collaborating institutions marks a significant step forward in integrating AI technologies into practical software engineering applications.

The post This AI Paper Introduces a Comprehensive Framework for LLM-Driven Software Engineering Tasks appeared first on MarkTechPost.