Previous research on Retrieval-Augmented Generation (RAG) for large language models (LLMs) concentrated on improving retrieval models so that better documents reach the generator. Early studies established the benefits of integrating external information into LLMs, but more recent work on noisy retrieval covered only a limited range of noise types and typically assumed that noise always hurts model performance. These studies also lacked a comprehensive classification system, which restricts the practical applicability of their findings.

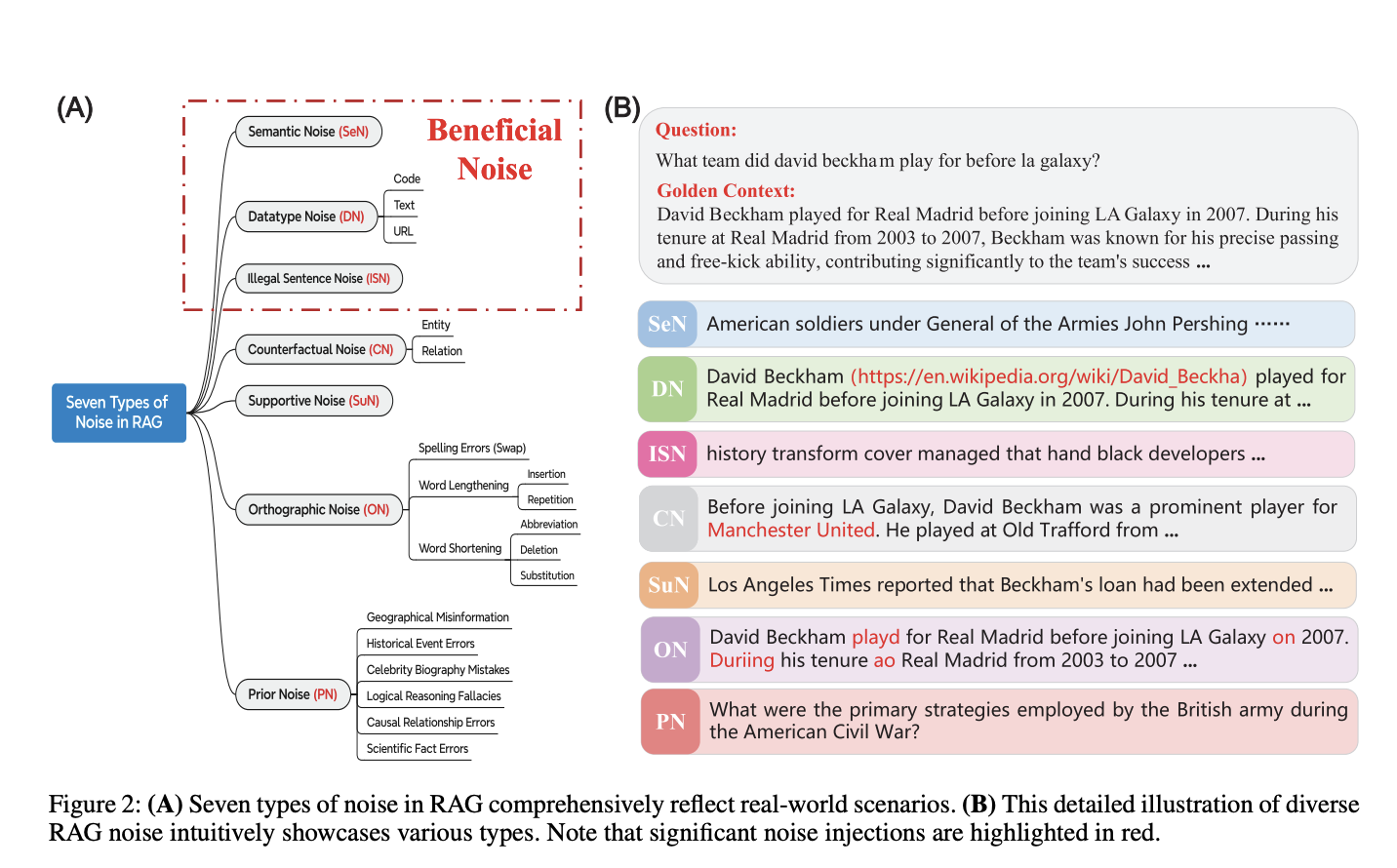

Various training techniques aim to improve the robustness of RAG models against retrieval noise, and frameworks like RobustRAG strengthen defense against corruption attacks. However, prior research often neglected the systematic evaluation of noise and overlooked its potential positive effects. This left a need for a detailed exploration of retrieval noise, including a clear classification of noise types. This paper addresses these gaps by defining seven types of noise, categorizing them into beneficial and harmful groups, and providing a nuanced understanding of RAG noise in LLMs.

Researchers from the Beijing National Research Center for Information Science and Technology and Tsinghua University address challenges in LLMs, particularly hallucinations, by examining the role of RAG in mitigating them. The paper critiques previous research for covering too few noise types and for assuming that noise is always detrimental, which neglects its potential benefits. It introduces a novel evaluation framework, NoiserBench, and categorizes noise into beneficial and harmful types. By defining seven distinct noise types, the study offers a structured approach to enhancing RAG systems and improving LLM performance across various scenarios.

The study takes a systematic approach to examining the impact of RAG noise on LLMs. It first defines seven distinct noise types and categorizes them as beneficial (e.g., semantic, datatype) or harmful (e.g., counterfactual, supportive). It then proposes a framework for creating diverse noisy documents and introduces a novel benchmark, NoiserBench, whose varied retrieval documents enable a thorough evaluation of how noise influences model outputs.
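To make the taxonomy concrete, here is a minimal Python sketch of how noise types and a noisy retrieval context might be represented. It is an illustration, not NoiserBench's actual construction, which this article does not describe. The names `NoiseType`, `Document`, `build_context`, and the `noise_ratio` parameter are all hypothetical, and only the six noise types named in this article are listed.

```python
import random
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class NoiseType(Enum):
    """Noise types named in this article (the seventh is not named here)."""
    SEMANTIC = ("semantic", True)
    DATATYPE = ("datatype", True)
    ILLEGAL_SENTENCE = ("illegal sentence", True)
    COUNTERFACTUAL = ("counterfactual", False)
    SUPPORTIVE = ("supportive", False)
    ORTHOGRAPHIC = ("orthographic", False)

    def __init__(self, label: str, beneficial: bool):
        self.label = label
        self.beneficial = beneficial


@dataclass(frozen=True)
class Document:
    text: str
    noise: Optional[NoiseType] = None  # None marks a clean document


def build_context(clean: List[Document], noisy: List[Document],
                  noise_ratio: float, k: int, seed: int = 0) -> List[Document]:
    """Mix clean and noisy documents into a k-document retrieval context.

    noise_ratio is a hypothetical knob: the fraction of the k slots
    that are filled with noisy documents.
    """
    if not 0.0 <= noise_ratio <= 1.0:
        raise ValueError("noise_ratio must be in [0, 1]")
    n_noisy = round(k * noise_ratio)
    n_clean = k - n_noisy
    if n_noisy > len(noisy) or n_clean > len(clean):
        raise ValueError("not enough documents to fill the context")
    rng = random.Random(seed)  # seeded so the mix is reproducible
    picked = rng.sample(clean, n_clean) + rng.sample(noisy, n_noisy)
    rng.shuffle(picked)  # avoid a fixed position for noisy documents
    return picked
```

Tagging each document with its noise type keeps the beneficial/harmful split available at evaluation time, so results can later be grouped by category.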

The experiments cover eight diverse LLMs and analyze their responses to RAG noise across multiple datasets. Results are collected before and after beneficial noise is introduced, and a two-step statistical analysis tests the hypotheses about noise effects. Comparing the outputs shows that beneficial noise leads to clearer reasoning and more standardized answer formats. Evaluations across different model architectures, scales, and RAG designs confirm that beneficial noise improves model performance, and they also characterize the impact of harmful noise.
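The article does not say which statistical tests the authors use, so the following is only a sketch of one common way to compare paired before/after results: an exact McNemar test on per-question correctness flags. The function names and the choice of test are assumptions for illustration, not the paper's method.

```python
from math import comb
from typing import Sequence, Tuple


def accuracy(correct: Sequence[bool]) -> float:
    """Fraction of questions answered correctly."""
    return sum(correct) / len(correct)


def mcnemar_exact(before: Sequence[bool],
                  after: Sequence[bool]) -> Tuple[int, int, float]:
    """Two-sided exact McNemar test on paired correctness flags.

    Returns (gained, lost, p_value), where `gained` counts questions
    that became correct after adding noise and `lost` counts questions
    that became incorrect.
    """
    if len(before) != len(after):
        raise ValueError("before and after must be paired")
    gained = sum(1 for b, a in zip(before, after) if not b and a)
    lost = sum(1 for b, a in zip(before, after) if b and not a)
    n = gained + lost  # only discordant pairs carry information
    if n == 0:
        return gained, lost, 1.0
    k = min(gained, lost)
    # Under the null hypothesis each discordant pair is a fair coin flip.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return gained, lost, min(1.0, 2 * tail)
```

A paired test fits this setup because the same questions are answered with and without the added noise, so only the questions whose correctness changes are informative.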

The results highlight the dual impact of RAG noise on LLMs. Beneficial noise, such as illegal sentence noise (ISN), consistently improves model accuracy by up to 3.32% and enhances reasoning and response confidence. In contrast, harmful noise types, such as counterfactual noise (CN) and orthographic noise (ON), degrade performance by making it harder for models to discern correct facts. The NoiserBench evaluation framework, supported by visual and statistical analysis, underscores the importance of managing noise types to optimize LLM performance in RAG systems.

In conclusion, the paper provides a comprehensive analysis of RAG noise in LLMs, defining seven distinct noise types and categorizing them as beneficial or harmful. A novel framework, including the NoiserBench benchmark, allows for systematic evaluation across multiple models. Notably, beneficial noise is found to enhance model performance by improving reasoning clarity and answer standardization. The paper advocates for future research to focus on leveraging beneficial noise while mitigating harmful effects, setting the foundation for more robust and adaptable RAG systems.

The post Exploring the Dual Nature of RAG Noise: Enhancing Large Language Models Through Beneficial Noise and Mitigating Harmful Effects appeared first on MarkTechPost.