Artificial intelligence, particularly the development of large language models (LLMs), has been rapidly advancing, focusing on improving these models’ reasoning capabilities. As AI systems are increasingly tasked with complex problem-solving, it is crucial that they not only generate accurate solutions but also possess the ability to evaluate and refine their outputs critically. This enhancement in reasoning is essential for creating AI that can operate with greater autonomy and reliability in various sophisticated tasks. The ongoing research in this field reflects the growing demand for AI systems that can independently assess their reasoning processes and correct potential errors, thereby becoming more effective and trustworthy tools.

A significant challenge in advancing LLMs is the development of mechanisms that enable these models to critique their reasoning processes effectively. Current methods often rely on basic prompts or external feedback, which are limited in scope and effectiveness. These approaches typically involve simple critiques that point out errors but do not provide the depth of understanding necessary to improve the model’s reasoning accuracy substantially. This limitation results in errors going undetected or improperly addressed, restricting AI’s ability to perform complex tasks reliably. The challenge, therefore, lies in creating a self-critique framework that allows AI models to critically analyze and improve their outputs meaningfully.

Traditionally, AI systems have improved their reasoning capabilities through external feedback mechanisms, where human annotators or other systems provide corrective input. While these methods can be effective, they are also resource-intensive and need more scalability, making them impractical for widespread use. Moreover, some existing approaches incorporate basic forms of self-criticism, but these often need to be revised to improve model performance significantly. The key problem with these methods is that they do not sufficiently enhance the model’s intrinsic ability to evaluate and refine its reasoning, which is essential for developing more intelligent AI systems.

Researchers from the Chinese Information Processing Laboratory, the Chinese Academy of Sciences, the University of Chinese Academy of Sciences, and Xiaohongshu Inc. have developed a novel framework called Critic-CoT. This framework is designed to significantly improve the self-critique abilities of LLMs by guiding them toward more rigorous, System-2-like reasoning. The Critic-CoT framework leverages a structured Chain-of-Thought (CoT) format, allowing models to evaluate their reasoning steps and make necessary refinements systematically. This innovative approach reduces the need for costly human annotations while pushing the boundaries of what AI can achieve in self-evaluation and correction.

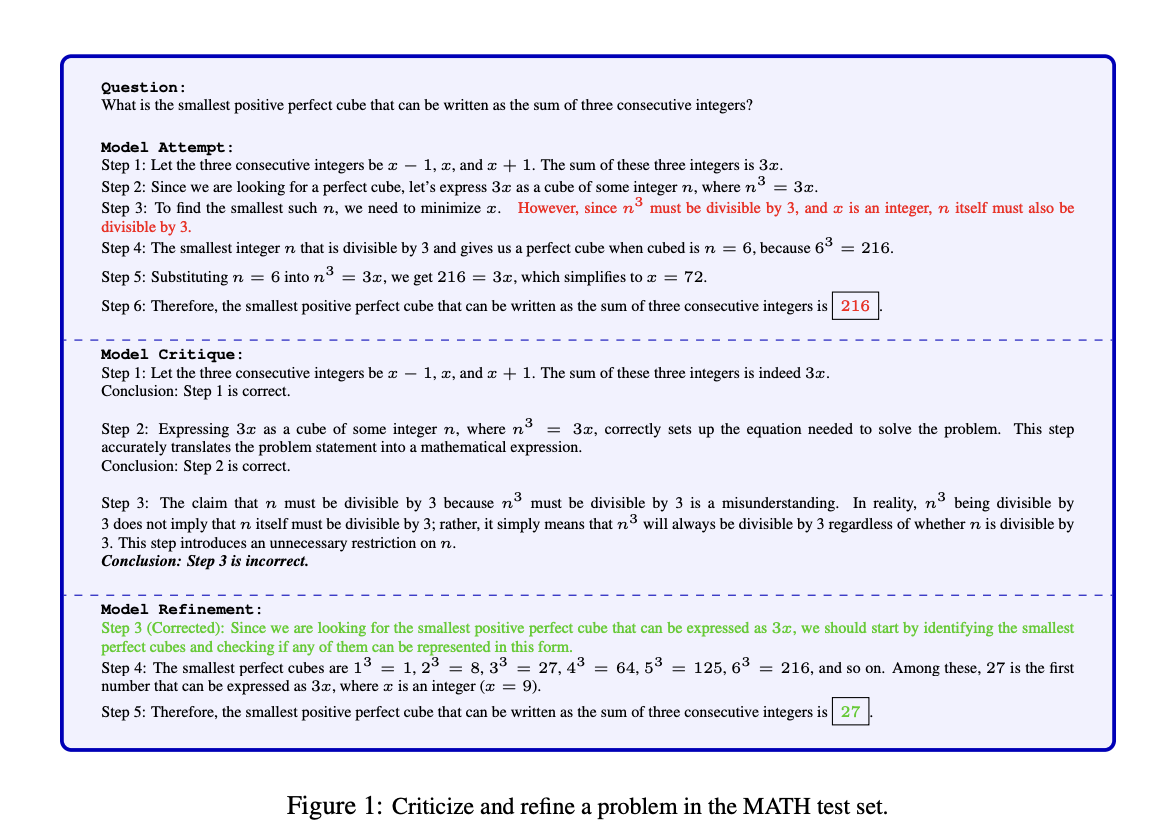

The Critic-CoT framework operates by engaging LLMs in a step-wise critique process. The model first generates a solution to a given problem and then critiques its output, identifying errors or areas of improvement. Following this, the model refines the solution based on the critique, and this process is repeated iteratively until the solution is either corrected or validated. For example, during experiments on the GSM8K and MATH datasets, the Critic-CoT model could detect and correct errors in its solutions with high accuracy. The iterative nature of this process allows the model to continuously improve its reasoning capabilities, making it more adept at handling complex tasks.

The effectiveness of the Critic-CoT framework was demonstrated through extensive experiments. On the GSM8K dataset, which consists of grade-school-level math word problems, the accuracy of the LLM improved from 89.6% to 93.3% after iterative refinement, with a critic filter further increasing accuracy to 95.4%. Similarly, on the more challenging MATH dataset, which includes high school math competition problems, the model’s accuracy increased from 51.0% to 57.8% after employing the Critic-CoT framework, with additional gains observed when applying the critic filter. These results highlight the significant improvements in task-solving performance that can be achieved through the Critic-CoT framework, particularly when the model is tasked with complex reasoning scenarios.

In conclusion, the Critic-CoT framework represents a substantial advancement in developing self-critique capabilities for LLMs. This research addresses the critical challenge of enabling AI models to evaluate and improve their reasoning by introducing a structured and iterative refinement process. The impressive gains in accuracy observed in both the GSM8K and MATH datasets demonstrate the potential of Critic-CoT to enhance the performance of AI systems across various complex tasks. This framework improves the accuracy and reliability of AI reasoning and reduces the need for human intervention, making it a scalable and efficient solution for future AI development.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and LinkedIn. Join our Telegram Channel. If you like our work, you will love our newsletter..

Don’t Forget to join our 50k+ ML SubReddit

The post Critic-CoT: A Novel Framework Enhancing Self-Critique and Reasoning Capabilities in Large Language Models for Improved AI Accuracy and Reliability appeared first on MarkTechPost.