Large language models (LLMs) based on autoregressive Transformer decoder architectures have advanced natural language processing with strong performance and scalability. Recently, diffusion models have drawn most of the attention in visual generation, overshadowing autoregressive models (AMs). However, AMs scale better for large applications and integrate more naturally with language models, which makes them better suited to unifying language and vision tasks. Recent work in autoregressive visual generation (AVG) has shown promising results, matching or outperforming diffusion models in quality. Major challenges remain, especially in computational efficiency, because visual data produces long token sequences and Transformer self-attention scales quadratically with sequence length.

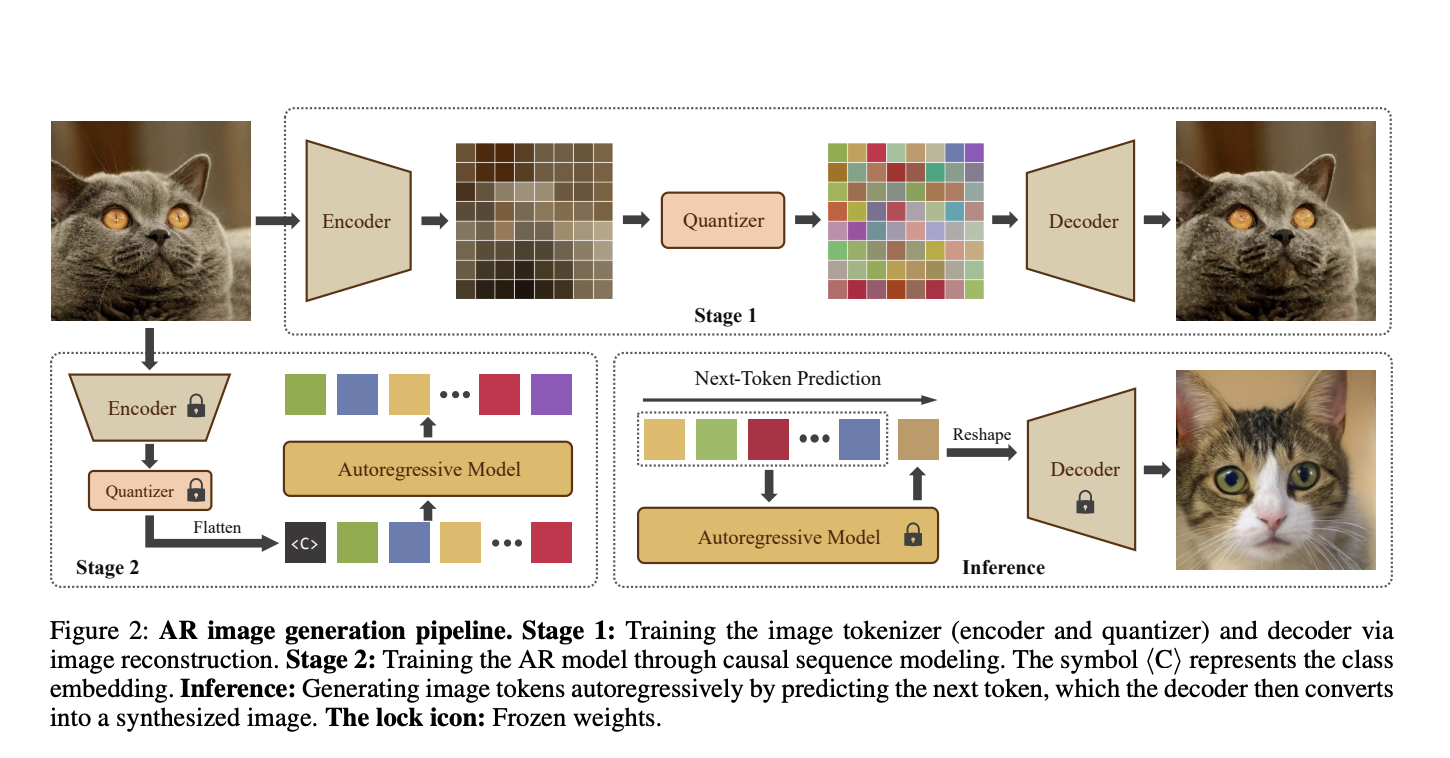

Existing approaches to these challenges in AVG include Vector Quantization (VQ) based models and State Space Models (SSMs). VQ-based approaches, such as VQ-VAE, DALL-E, and VQGAN, compress images into discrete codes and use AMs to predict those codes. SSMs, especially the Mamba family, have shown potential for handling long sequences with linear computational complexity. Recent adaptations of Mamba for visual tasks, such as ViM, VMamba, Zigma, and DiM, have explored multi-directional scan strategies to capture 2D spatial information. However, these strategies add parameters and computational cost, which reduces Mamba's speed advantage and increases GPU memory requirements.
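To make the "linear computational complexity" point concrete, here is a minimal sketch of a state space recurrence in plain Python. It is an illustration under simplifying assumptions, not Mamba's actual implementation: the parameters `a`, `b`, and `c` are fixed, whereas Mamba makes them input-dependent (selective), and the function names `ssm_scan` and `raster_flatten` are hypothetical. The scan visits each token once, so cost grows linearly with sequence length, and a single row-major flatten stands in for a one-directional scan over a 2D token grid.

```python
def ssm_scan(xs, a, b, c):
    """Run a diagonal linear state space recurrence over a 1D sequence.

    h_t = a * h_{t-1} + b * x_t   (elementwise over the state dimension)
    y_t = sum(c * h_t)

    One pass over xs, so the cost is O(len(xs) * len(a)).
    Simplification: a, b, c are constants here; Mamba computes them from the input.
    """
    h = [0.0] * len(a)  # hidden state, one entry per state dimension
    ys = []
    for x in xs:
        h = [ai * hi + bi * x for ai, hi, bi in zip(a, h, b)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys


def raster_flatten(grid):
    """Flatten a 2D token grid row by row into a single 1D sequence."""
    return [value for row in grid for value in row]


if __name__ == "__main__":
    grid = [[1.0, 0.0], [0.0, 0.0]]  # toy 2x2 "image" of scalar tokens
    print(ssm_scan(raster_flatten(grid), a=[0.5], b=[1.0], c=[2.0]))
```

By contrast, self-attention compares every token with every other token, which is where the quadratic cost in sequence length comes from.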

Researchers from Beijing University of Posts and Telecommunications, University of Chinese Academy of Sciences, The Hong Kong Polytechnic University, and Institute of Automation, Chinese Academy of Sciences have proposed AiM, a new autoregressive image generation model based on the Mamba framework. It is designed for high-quality, efficient class-conditional image generation and is presented as the first model of its kind. AiM incorporates positional encoding and introduces a new, more general adaptive layer normalization method called adaLN-Group, which balances performance against parameter count. AiM has also shown state-of-the-art performance among AMs on the ImageNet 256×256 benchmark while achieving fast inference speeds.

AiM was developed at four scales and evaluated on the ImageNet1K benchmark to assess its architectural design, performance, scalability, and inference efficiency. It uses an image tokenizer with a downsampling factor of 16, initialized with pre-trained weights from LlamaGen, so each 256×256 image is tokenized into a 16×16 grid of 256 tokens. Training was conducted on 80GB A100 GPUs using the AdamW optimizer. The number of training epochs varies between 300 and 350 depending on the model scale, and a dropout rate of 0.1 was applied to class embeddings to enable classifier-free guidance. Fréchet Inception Distance (FID) served as the primary metric for image generation quality.
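The token count and the class-embedding dropout can be sketched in a few lines of Python. This is a minimal illustration, not the authors' training code: the helper names `num_tokens` and `drop_class_labels` are hypothetical, and using index 1000 as the "null" class for a 1000-class dataset is an assumption made for the example.

```python
import random


def num_tokens(image_size, downsample_factor):
    """Number of tokens for a square image given the tokenizer's downsampling factor."""
    if image_size % downsample_factor != 0:
        raise ValueError("image_size must be divisible by downsample_factor")
    side = image_size // downsample_factor  # tokens per row and per column
    return side * side


def drop_class_labels(labels, null_label, drop_prob=0.1, rng=None):
    """Randomly replace class labels with a null label during training.

    Classifier-free guidance needs the model to also see unconditional
    examples, which this label dropout provides.
    """
    if rng is None:
        rng = random.Random()
    return [null_label if rng.random() < drop_prob else y for y in labels]


if __name__ == "__main__":
    print(num_tokens(256, 16))  # 16 x 16 grid
    batch = [3, 17, 42, 999]
    # null_label=1000 is an assumed extra index, not taken from the paper
    print(drop_class_labels(batch, null_label=1000, drop_prob=0.1, rng=random.Random(0)))
```

At sampling time, classifier-free guidance combines the conditional and unconditional predictions; that step is not shown here.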

AiM showed significant performance gains as model size and training duration increased, with a strong negative correlation coefficient of -0.9838 between FID scores and model parameters. Because lower FID is better, this indicates that AiM scales well and that larger models improve image generation quality. AiM was compared with GANs, diffusion models, masked generative models, and Transformer-based AMs, and achieved state-of-the-art performance among AMs. Moreover, AiM has a clear advantage in inference speed over other models, even though the Transformer-based models benefit from Flash-Attention and KV Cache optimizations.
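For readers who want to see what such a coefficient measures, here is a small Pearson correlation sketch in Python. The numbers in the usage example are toy values chosen for illustration, not the paper's measurements, and the article does not say whether parameter counts were used raw or on a log scale, so treat this only as a reminder of the formula.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Toy data only: a score that falls steadily as a size measure grows.
    sizes = [1, 2, 3, 4]
    scores = [8, 6, 4, 2]
    print(pearson_r(sizes, scores))
```

A value close to -1 means the score drops almost linearly as the size measure grows, which is the pattern the reported -0.9838 describes.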

In conclusion, the researchers have introduced AiM, a novel autoregressive image generation model based on the Mamba framework. The paper explores the potential of Mamba in visual tasks and adapts it to visual generation without requiring additional multi-directional scans. The effectiveness and efficiency of AiM highlight its scalability and broad applicability in autoregressive visual modeling. However, the work covers only class-conditional generation and does not explore text-to-image generation, which leaves a clear direction for future research on visual generation with state space models like Mamba.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post AiM: An Autoregressive (AR) Image Generative Model based on Mamba Architecture appeared first on MarkTechPost.