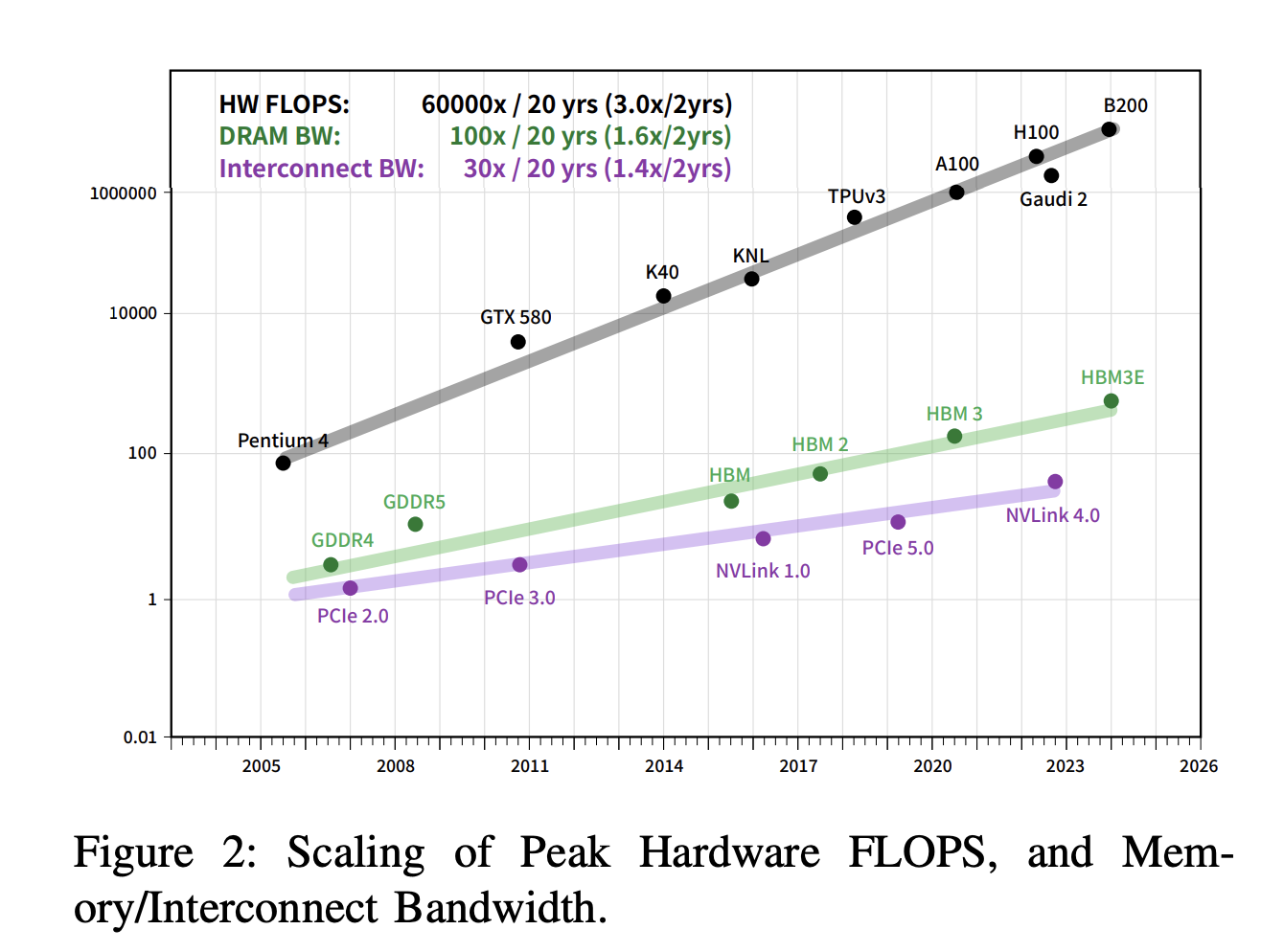

Rapid advances in Large Language Models (LLMs) and Deep Learning (DL) have sharply increased the demand for processing power and bandwidth. The main driver is the size and complexity of these models, which need enormous quantities of data and compute to train properly. Building high-performance computing systems to meet that demand is expensive, largely because of the high cost of faster processing cores and sophisticated interconnects. This poses a significant obstacle for companies trying to expand their AI capabilities while controlling expenses.

To address these limitations, a team of researchers from DeepSeek-AI has developed the Fire-Flyer AI-HPC architecture, a framework that co-designs hardware and software. The approach prioritizes cost-effectiveness and energy conservation alongside performance. The team has deployed Fire-Flyer 2, a system with 10,000 PCIe A100 GPUs built specifically for DL training workloads.

One of Fire-Flyer 2’s most notable results is performance comparable to the industry-leading NVIDIA DGX-A100, achieved at 50% lower cost and 40% lower energy consumption. The researchers attribute the savings to deliberate design decisions that optimize the system’s hardware and software components together.

One of the architecture’s main innovations is HFReduce, a purpose-built method for speeding up all-reduce communication, a crucial step in distributed training. Large-scale training workloads can only sustain high throughput if GPUs exchange data efficiently, and HFReduce is designed to make that exchange considerably faster. The team has also taken several measures to keep the Computation-Storage Integrated Network free of congestion, which improves the system’s overall reliability and performance.
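
To make the all-reduce step concrete, here is a minimal pure-Python sketch of a generic ring all-reduce, in which every worker ends up holding the element-wise sum of all workers’ buffers. This is not HFReduce, whose implementation is not described here; the function name `ring_all_reduce` and the simplification that the buffer length divides evenly by the number of workers are assumptions made for illustration.

```python
from typing import List


def ring_all_reduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a ring all-reduce (sum) over one buffer per worker.

    Illustrative only: a textbook ring algorithm, not HFReduce.
    Assumes every buffer has the same length and that the length
    is divisible by the number of workers.
    """
    n = len(buffers)
    if n == 0:
        return []
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all buffers must have the same length")
    if length % n != 0:
        raise ValueError("buffer length must be divisible by worker count")

    chunk = length // n
    data = [list(b) for b in buffers]  # copy so the inputs stay untouched

    def span(c: int) -> range:
        """Indices covered by chunk number c."""
        return range(c * chunk, (c + 1) * chunk)

    def exchange(first_chunk_offset: int, accumulate: bool) -> None:
        """Run n-1 ring steps; each worker sends one chunk to its right neighbor."""
        for step in range(n - 1):
            # Snapshot outgoing chunks so all sends in a step are simultaneous.
            sends = []
            for rank in range(n):
                c = (rank + first_chunk_offset - step) % n
                sends.append((c, [data[rank][i] for i in span(c)]))
            for rank, (c, payload) in enumerate(sends):
                dst = (rank + 1) % n
                for offset, i in enumerate(span(c)):
                    if accumulate:
                        data[dst][i] += payload[offset]
                    else:
                        data[dst][i] = payload[offset]

    # Phase 1, reduce-scatter: worker r ends up with the full sum of chunk (r + 1) % n.
    exchange(first_chunk_offset=0, accumulate=True)
    # Phase 2, all-gather: the fully reduced chunks travel around the ring.
    exchange(first_chunk_offset=1, accumulate=False)
    return data


if __name__ == "__main__":
    grads = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
    print(ring_all_reduce(grads))  # every worker holds [111, 222, 333]
```

In a ring of this kind each worker sends and receives only one chunk per step, so the per-worker traffic stays roughly constant as workers are added. That property is why all-reduce efficiency matters so much for distributed training throughput.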

The Fire-Flyer AI-HPC architecture is supported by a software stack that includes HaiScale, 3FS, and the HAI-Platform. Together, these components improve scalability by overlapping computation and communication, enabling the system to handle workloads that grow larger and more complex over time.

In conclusion, the Fire-Flyer AI-HPC architecture is a notable step toward affordable, high-performance computing systems for Artificial Intelligence. By co-designing hardware and software with a strong focus on cost and energy efficiency, the team has built a system that meets the growing requirements of DL and LLM training.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post DeepSeek-AI Introduces Fire-Flyer AI-HPC: A Cost-Effective Software-Hardware Co-Design for Deep Learning appeared first on MarkTechPost.