Neuro-symbolic artificial intelligence (NeSy AI) is a rapidly evolving field that seeks to combine the perceptive abilities of neural networks with the logical reasoning strengths of symbolic systems. This hybrid approach is designed to address complex tasks that require both pattern recognition and deductive reasoning. By integrating neural and symbolic components, NeSy systems aim to produce more robust and generalizable AI models. Such models are better equipped to handle uncertainty, make informed decisions, and perform effectively even when data is limited. The field represents a significant step forward in AI, aiming to overcome the limitations of purely neural or purely symbolic approaches.

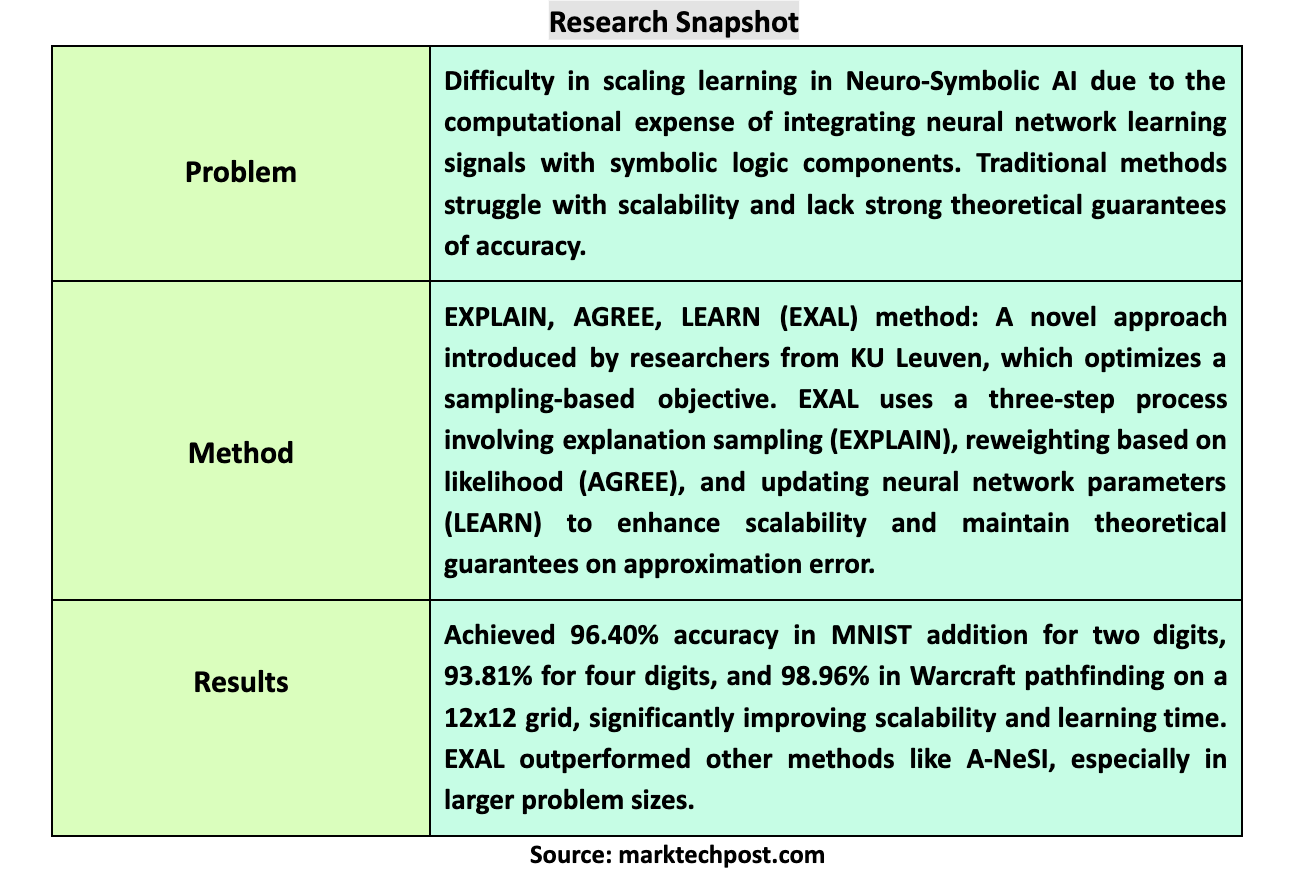

One of the major challenges facing the development of NeSy AI is the complexity of learning from data when neural and symbolic components are combined. Specifically, integrating learning signals from the neural network with the symbolic logic component is difficult. Traditional learning methods in NeSy systems often rely on exact probabilistic logic inference, which is computationally expensive and scales poorly to larger or more complex systems. This limitation has hindered the widespread application of NeSy systems, as the computational demands make them impractical for many real-world problems where scalability and efficiency are critical.
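To make the cost of exact inference concrete, here is a minimal brute-force sketch for a digit-sum constraint. It is an illustration only, not the inference procedure used by any particular NeSy system (real systems use smarter techniques such as knowledge compilation), and the names `exact_sum_probability` and `count_assignments` are hypothetical. It assumes the network outputs an independent distribution over the digits 0 to 9 for each image.

```python
from itertools import product

def exact_sum_probability(digit_probs, target):
    """Probability that the digits sum to `target`, found by enumerating
    every possible assignment of digits to images (brute force).

    digit_probs: one list of 10 probabilities per image (assumed independent).
    """
    total = 0.0
    for assignment in product(range(10), repeat=len(digit_probs)):
        if sum(assignment) == target:
            weight = 1.0
            for probs, digit in zip(digit_probs, assignment):
                weight *= probs[digit]
            total += weight
    return total

def count_assignments(num_images):
    """Number of assignments the brute-force loop has to visit."""
    return 10 ** num_images

uniform = [0.1] * 10
# Ten digit pairs sum to 9, each with probability 0.01, so this is about 0.1.
print(exact_sum_probability([uniform, uniform], target=9))
# The search space grows tenfold with every extra image.
print(count_assignments(2), count_assignments(15))
```

With two images the loop visits 100 assignments; with 15 images it would have to visit 10^15, which is why enumeration of this kind does not scale.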

Several existing methods attempt to address this learning challenge in NeSy systems, each with limitations. For example, knowledge compilation techniques propagate learning signals exactly but scale poorly, making them impractical for larger systems. Approximation methods, such as k-best solutions or the A-NeSI framework, offer alternative approaches by simplifying the inference process. However, these methods often introduce biases or require extensive optimization and hyperparameter tuning, resulting in long training times and reduced applicability to complex tasks. Moreover, these approaches generally lack strong guarantees on the accuracy of their approximations, which raises concerns about the reliability of their results.

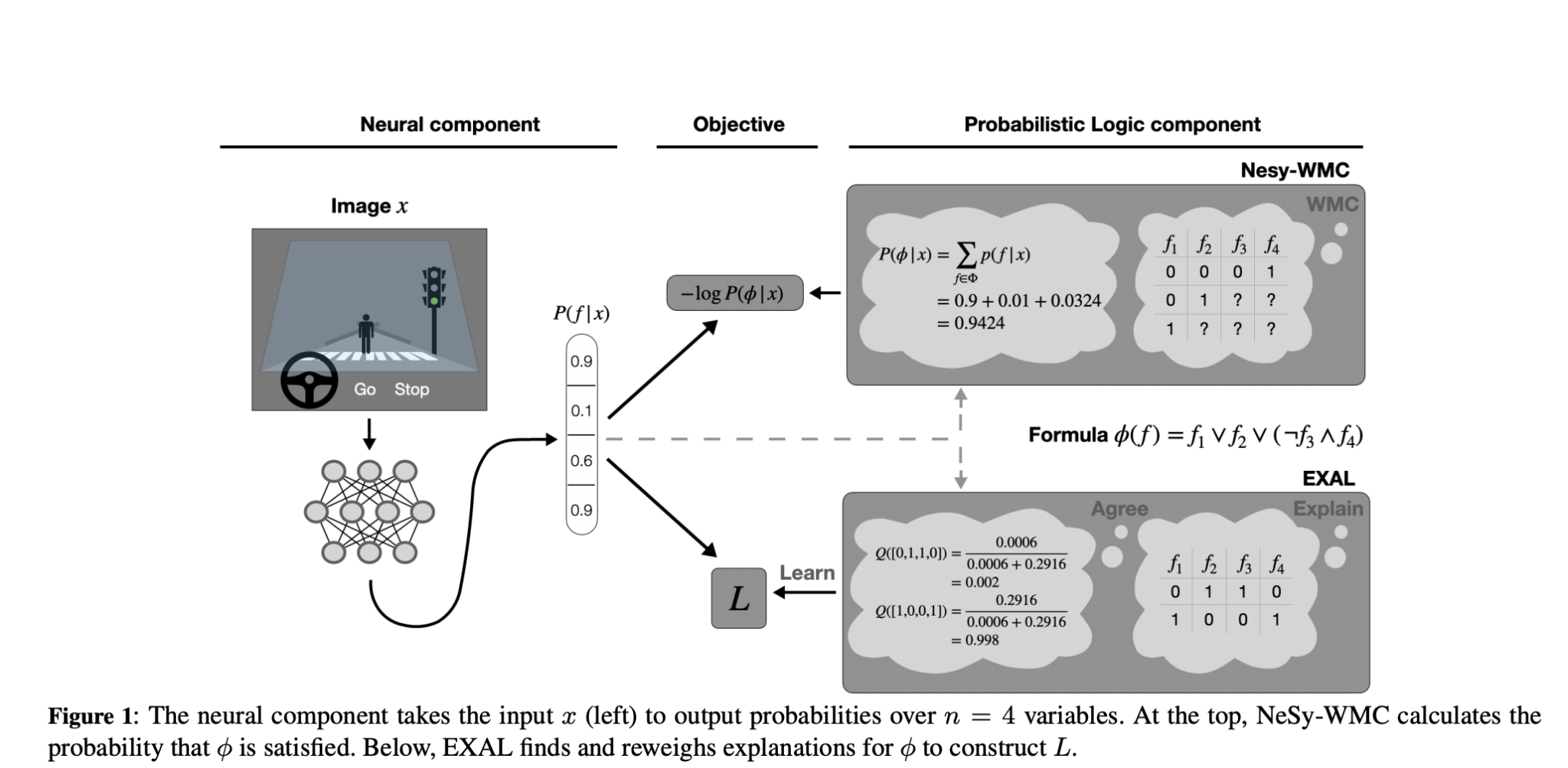

Researchers from KU Leuven have developed a novel method known as EXPLAIN, AGREE, LEARN (EXAL). This method is specifically designed to enhance the scalability and efficiency of learning in NeSy systems. The EXAL framework introduces a sampling-based objective that allows for more efficient learning while providing strong theoretical guarantees on the approximation error. These guarantees are crucial for ensuring that the system’s predictions remain reliable even as the complexity of the tasks increases. By optimizing a surrogate objective that approximates data likelihood, EXAL addresses the scalability issues that plague other methods.

The EXAL method involves three key steps:

- EXPLAIN

- AGREE

- LEARN

In the first step, the EXPLAIN algorithm generates samples of possible explanations for the observed data. These explanations represent different logical assignments that could satisfy the symbolic component’s requirements. For instance, in a self-driving car scenario, EXPLAIN might generate multiple explanations for why the car should brake, such as detecting a pedestrian or a red light. The second step, AGREE, involves reweighting these explanations based on their likelihood according to the neural network’s predictions. This step ensures that the most plausible explanations are given more importance, which enhances the learning process. Finally, in the LEARN step, these weighted explanations are used to update the neural network’s parameters through a traditional gradient descent approach. This process allows the network to learn more effectively from the data without needing exact probabilistic inference.
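The three steps described above can be sketched on a toy two-digit addition problem. This is a simplified illustration under stated assumptions, not the authors' implementation: the "network" is just a table of logits per image, EXPLAIN samples uniformly among digit pairs that satisfy the sum, the loss is a weighted cross-entropy, and every function name and hyperparameter here is hypothetical. The sampler and objective in the paper may differ.

```python
import math
import random

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]

def explain(target, num_samples, rng):
    """EXPLAIN: sample digit pairs (d1, d2) that satisfy d1 + d2 == target."""
    lo, hi = max(0, target - 9), min(9, target)
    samples = []
    for _ in range(num_samples):
        d1 = rng.randint(lo, hi)  # inclusive on both ends
        samples.append((d1, target - d1))
    return samples

def agree(samples, probs1, probs2):
    """AGREE: reweight each explanation by how likely the network finds it."""
    weights = [probs1[d1] * probs2[d2] for d1, d2 in samples]
    total = sum(weights)
    return [w / total for w in weights]

def learn(logits, probs, digits, weights, lr):
    """LEARN: one gradient-descent step on the weighted cross-entropy,
    treating the weights as constants."""
    grad = [0.0] * 10
    for d, w in zip(digits, weights):
        for k in range(10):
            grad[k] += w * (probs[k] - (1.0 if k == d else 0.0))
    return [x - lr * g for x, g in zip(logits, grad)]

def train(target=7, steps=200, num_samples=32, lr=0.5, seed=0):
    rng = random.Random(seed)
    # Assumed starting point: weak prior beliefs that the images show 3 and 4,
    # standing in for a partially trained network.
    logits1 = [0.0] * 10
    logits1[3] = 2.0
    logits2 = [0.0] * 10
    logits2[4] = 2.0
    for _ in range(steps):
        probs1, probs2 = softmax(logits1), softmax(logits2)
        samples = explain(target, num_samples, rng)
        weights = agree(samples, probs1, probs2)
        logits1 = learn(logits1, probs1, [d1 for d1, _ in samples], weights, lr)
        logits2 = learn(logits2, probs2, [d2 for _, d2 in samples], weights, lr)
    return softmax(logits1), softmax(logits2)

probs1, probs2 = train()
print("most likely digits:", probs1.index(max(probs1)), probs2.index(max(probs2)))
```

Note that the only supervision is the sum (7 here); the individual digit labels are never given. The reweighting in `agree` is what lets the initial weak preference for the pair (3, 4) sharpen over the course of training, without enumerating every explanation.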

The performance of the EXAL method has been validated through extensive experiments on two prominent NeSy tasks:

- MNIST addition

- Warcraft pathfinding

In the MNIST addition task, which involves summing sequences of digits represented by images, EXAL achieved a test accuracy of 96.40% for sequences of two digits and 93.81% for sequences of four digits. Notably, EXAL outperformed the A-NeSI method, which achieved 95.96% accuracy for two digits and 91.65% for four digits. EXAL demonstrated superior scalability, maintaining a competitive accuracy of 92.56% for sequences of 15 digits, while A-NeSI struggled with a significantly lower accuracy of 73.27%. In the Warcraft pathfinding task, which requires finding the shortest path on a grid, EXAL achieved an impressive accuracy of 98.96% on a 12×12 grid and 80.85% on a 30×30 grid, significantly outperforming other NeSy methods in terms of both accuracy and learning time.
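As a quick check of the scalability claim, the gap between the two methods can be computed directly from the MNIST addition accuracies quoted above. The snippet uses only those reported figures; the variable names are illustrative.

```python
# Test accuracies (%) as quoted in the article: digits -> (EXAL, A-NeSI)
reported = {2: (96.40, 95.96), 4: (93.81, 91.65), 15: (92.56, 73.27)}

# Lead of EXAL over A-NeSI in percentage points, rounded to two decimals.
gaps = {n: round(exal - anesi, 2) for n, (exal, anesi) in reported.items()}

for n, gap in gaps.items():
    print(f"{n:>2} digits: EXAL leads by {gap:.2f} percentage points")
```

The lead grows from under half a percentage point at two digits to roughly nineteen points at 15 digits, which is the pattern the scalability claim rests on.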

In conclusion, the EXAL method addresses the scalability and efficiency challenges that have limited the application of NeSy systems. By leveraging a sampling-based approach with strong theoretical guarantees, EXAL improves the accuracy and reliability of NeSy models and significantly reduces the time required for learning. EXAL is a promising solution for many complex AI tasks, particularly those that combine large-scale data with symbolic reasoning. Its results on MNIST addition and Warcraft pathfinding underscore its potential to become a standard approach in developing next-generation AI systems.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post EXPLAIN, AGREE, LEARN (EXAL) Method: A Transforming Approach to Scaling Learning in Neuro-Symbolic AI with Enhanced Accuracy and Efficiency for Complex Tasks appeared first on MarkTechPost.