Most advanced machine learning models, especially those achieving state-of-the-art results, require significant computational resources such as GPUs and TPUs. Because such models are difficult to deploy in resource-constrained environments like edge devices, mobile platforms, and other low-power hardware, machine learning is largely confined to cloud services and data centers, which limits real-time applications and adds latency. High-performance hardware is also expensive to acquire and operate, creating a barrier for smaller organizations and individuals who want to use machine learning.

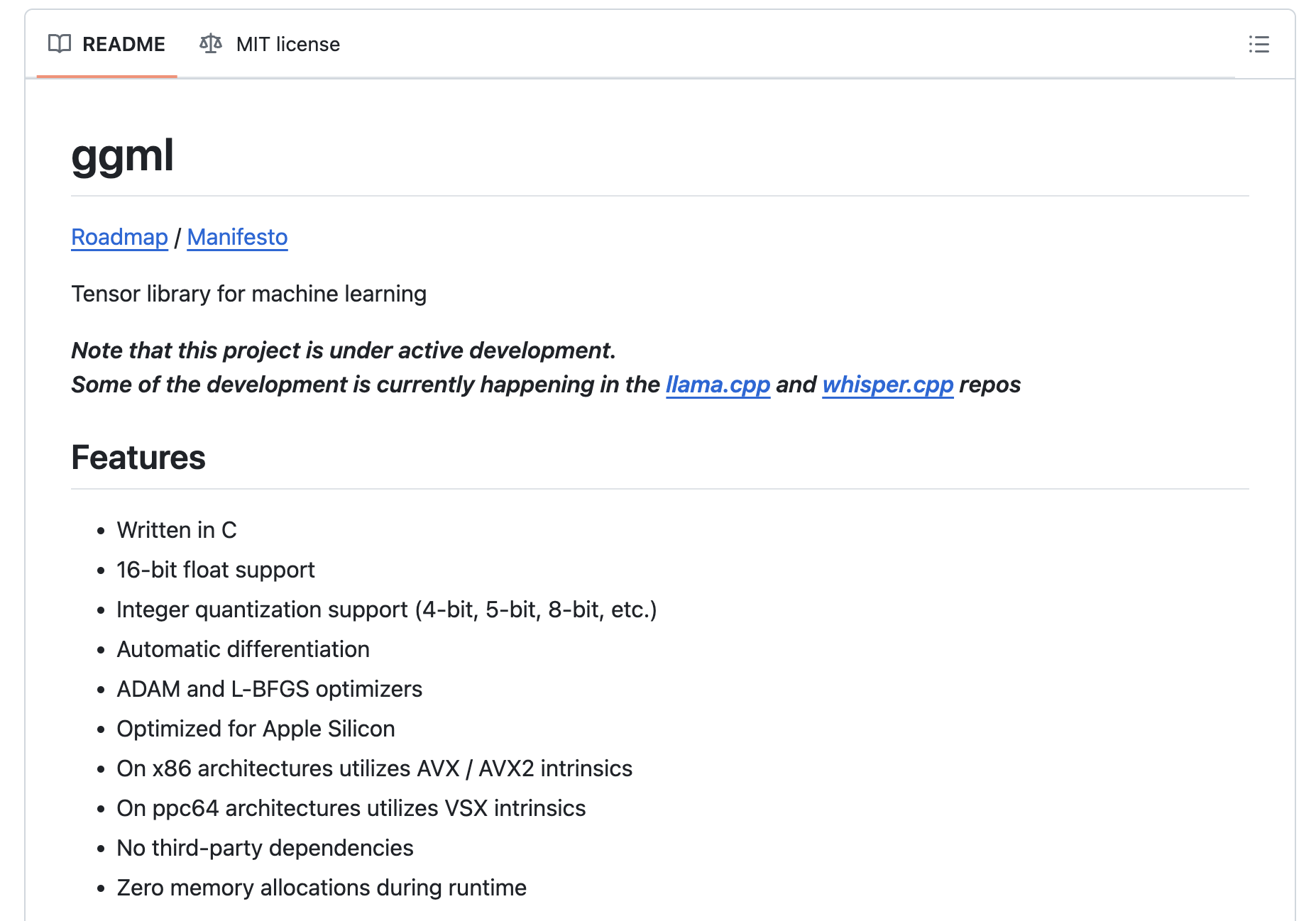

Large models are computationally intensive. Current approaches to running large language models typically rely on powerful hardware or cloud-based services, which are costly and out of reach for many applications, and the models' heavy compute and memory demands make them hard to run efficiently on commodity hardware. To close this gap, researchers propose ggml, a lightweight, high-performance tensor library written in C and C++ and designed to run large language models efficiently on commodity hardware. ggml optimizes both computation and memory usage so that these models can run on a range of platforms, including CPUs, GPUs, and WebAssembly. It also uses quantization to shrink models and speed up inference with minimal loss of accuracy.
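To make the size reduction concrete, the sketch below computes weight storage for a hypothetical 7-billion-parameter model. The block layouts are assumptions modeled on ggml's simplest 8-bit and 4-bit formats as we understand them: 32 weights share one 2-byte fp16 scale, stored alongside either 32 one-byte values (34 bytes per block) or 16 bytes of packed 4-bit values (18 bytes per block). The helper `weight_bytes` is hypothetical, and the count ignores non-weight tensors and file metadata.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper, not part of ggml: storage cost of n_params weights
 * when every block of block_size weights occupies block_bytes bytes. */
uint64_t weight_bytes(uint64_t n_params, uint64_t block_size, uint64_t block_bytes) {
    assert(block_size > 0 && n_params % block_size == 0);
    return n_params / block_size * block_bytes;
}

/* Assumed layouts (block_bytes / block_size):
 *   fp32         : 4 bytes per weight                          -> 32 bits/weight
 *   fp16         : 2 bytes per weight                          -> 16 bits/weight
 *   8-bit blocks : 32 x int8 + 2-byte fp16 scale = 34 / 32     -> 8.5 bits/weight
 *   4-bit blocks : 16 bytes of nibbles + 2-byte scale = 18 / 32 -> 4.5 bits/weight */
```

Under these assumptions, `weight_bytes(7000000000ULL, 32, 18)` gives 3,937,500,000 bytes (about 4.5 bits per weight), compared with 28,000,000,000 bytes for fp32 (`weight_bytes(7000000000ULL, 1, 4)`), roughly a sevenfold reduction.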

The key ideas in ggml are its data structures and computational optimizations. Its compact data structures minimize memory accesses and computational overhead. Kernel fusion combines multiple operations into a single kernel, reducing function-call overhead and improving data locality. ggml also uses SIMD (Single Instruction, Multiple Data) instructions to exploit the parallel arithmetic units of modern processors. Another important component is quantization, which reduces the precision of the model's numerical representations, yielding a smaller memory footprint and faster computation with only a small loss in accuracy. Together, these techniques give ggml low latency, high throughput, and low memory usage, making it possible to run large language models on devices such as Raspberry Pi boards, smartphones, and laptops, which were previously considered unsuitable for such tasks.
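Kernel fusion can be illustrated with a generic example that is not taken from ggml's source: computing `y = relu(a * x + b)` as three separate element-wise passes writes two temporary arrays to memory, while the fused version makes a single pass and keeps the intermediate value in a register. Both function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical unfused version: three passes over memory, two temporaries. */
void mul_add_relu_unfused(const float *x, float a, float b,
                          float *tmp1, float *tmp2, float *y, size_t n) {
    assert(x != NULL && tmp1 != NULL && tmp2 != NULL && y != NULL);
    for (size_t i = 0; i < n; i++) tmp1[i] = a * x[i];      /* pass 1: scale */
    for (size_t i = 0; i < n; i++) tmp2[i] = tmp1[i] + b;   /* pass 2: shift */
    for (size_t i = 0; i < n; i++)                           /* pass 3: ReLU  */
        y[i] = tmp2[i] > 0.0f ? tmp2[i] : 0.0f;
}

/* Hypothetical fused version: one pass, no temporary arrays. */
void mul_add_relu_fused(const float *x, float a, float b, float *y, size_t n) {
    assert(x != NULL && y != NULL);
    for (size_t i = 0; i < n; i++) {
        float v = a * x[i] + b;          /* intermediate stays in a register */
        y[i] = v > 0.0f ? v : 0.0f;
    }
}
```

Both functions compute the same result; the fused one reads `x` once and writes `y` once instead of making three round trips through memory, which is where the locality gain comes from.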
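As an illustration of SIMD, the sketch below computes a dot product, the core operation inside matrix multiplication, four floats at a time using x86 SSE intrinsics, with a scalar loop for the leftover elements and for targets without SSE. It is a simplified, assumed example: ggml ships its own hand-tuned paths for wider instruction sets such as AVX2 and ARM NEON, and `dot_f32` is a hypothetical name.

```c
#include <assert.h>
#include <stddef.h>
#if defined(__SSE__)
#include <xmmintrin.h>
#endif

/* Hypothetical dot product: SSE path for 4-wide chunks, scalar tail. */
float dot_f32(const float *a, const float *b, size_t n) {
    assert(a != NULL && b != NULL);
    size_t i = 0;
    float sum = 0.0f;
#if defined(__SSE__)
    __m128 acc = _mm_setzero_ps();               /* four partial sums */
    for (; i + 4 <= n; i += 4) {
        __m128 va = _mm_loadu_ps(a + i);         /* unaligned load of 4 floats */
        __m128 vb = _mm_loadu_ps(b + i);
        acc = _mm_add_ps(acc, _mm_mul_ps(va, vb)); /* 4 multiplies + 4 adds */
    }
    float lanes[4];
    _mm_storeu_ps(lanes, acc);                   /* horizontal reduction */
    sum = lanes[0] + lanes[1] + lanes[2] + lanes[3];
#endif
    for (; i < n; i++) sum += a[i] * b[i];       /* tail, or whole array without SSE */
    return sum;
}
```

The vector path adds products in a different order from a purely scalar loop, so for general inputs the two can differ in the last few bits.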
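The quantization step can be sketched as block-wise 8-bit quantization: each block of 32 floats stores one scale `d = max|x| / 127` and 32 signed bytes `q = round(x / d)`, and values are reconstructed as `d * q`. This mirrors the idea behind ggml's 8-bit format but is not its API; the type and function names are hypothetical, and the scale is kept as a 4-byte float rather than fp16 for simplicity.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define QK 32   /* assumed block size, mirroring ggml's 32-element blocks */

/* Hypothetical block type; ggml stores the scale as fp16, fp32 is used here. */
typedef struct {
    float  scale;   /* d = max|x| / 127 for this block */
    int8_t q[QK];   /* quantized values in [-127, 127] */
} block_q8;

/* Round half away from zero; |v| <= 127 by construction. */
static int8_t round_to_i8(float v) {
    return (int8_t)(v >= 0.0f ? (int)(v + 0.5f) : -(int)(-v + 0.5f));
}

/* Quantize n floats (n a multiple of QK) into n / QK blocks. */
void quantize_q8(const float *x, block_q8 *out, size_t n) {
    assert(n % QK == 0);
    for (size_t b = 0; b < n / QK; b++) {
        const float *xb = x + b * QK;
        float amax = 0.0f;                        /* largest magnitude in block */
        for (int i = 0; i < QK; i++) {
            float a = xb[i] < 0.0f ? -xb[i] : xb[i];
            if (a > amax) amax = a;
        }
        float d  = amax / 127.0f;                 /* one scale per block */
        float id = d != 0.0f ? 1.0f / d : 0.0f;   /* all-zero block stays zero */
        out[b].scale = d;
        for (int i = 0; i < QK; i++) out[b].q[i] = round_to_i8(xb[i] * id);
    }
}

/* Reconstruct approximate floats: x ~= scale * q. */
void dequantize_q8(const block_q8 *in, float *y, size_t n) {
    assert(n % QK == 0);
    for (size_t b = 0; b < n / QK; b++)
        for (int i = 0; i < QK; i++)
            y[b * QK + i] = in[b].scale * (float)in[b].q[i];
}
```

Each reconstructed value lies within about half a quantization step (`d / 2`) of the original, which is why accuracy degrades only slightly, while each block shrinks from 128 bytes of fp32 data to 36 bytes in this layout.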

In conclusion, ggml is a significant advance for machine learning because it addresses the limitations of running large models on commodity hardware. The project demonstrates how ggml's optimizations and quantization techniques enable efficient deployment of powerful models on resource-constrained devices. By reducing the computational resources these models require, ggml opens the way to broader access to, and deployment of, advanced machine learning models across a wide range of environments.

The post ggml: A Machine learning (ML) Library Written in C and C++ with a Focus on Transformer Inference appeared first on MarkTechPost.