The llama-3-Nephilim-v3-8B model and its quantized companion, llama-3-Nephilim-v3-8B-GGUF, have been released on Hugging Face. Although neither was explicitly trained for roleplay, both perform remarkably well in that domain, highlighting the potential of “found art” approaches in AI development.

These models were produced by merging several pre-trained language models with mergekit, a tool for combining the weights of different models so that the result inherits their strengths. The llama-3-Nephilim-v3-8B model has 8.03 billion parameters stored as BF16 tensors. It was tested with a temperature of 1 and a minimum probability (minP) of 0.01, settings that favor creative output and can be adjusted as desired. Output formatting was initially inconsistent, but prompt steering and a proper instruct prompt make generation more consistent while keeping it varied.
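The sampling setup above can be illustrated with a small, framework-free sketch of temperature scaling followed by min-p filtering. It assumes the common definition of min-p (keep every token whose probability is at least `min_p` times the top token's probability, then renormalize); the function names are hypothetical and are not part of any inference library's API.

```python
import math
import random


def min_p_filter(logits, temperature=1.0, min_p=0.01):
    """Return a renormalized {token_index: prob} dict after temperature and min-p.

    Temperature rescales the logits before softmax; min-p then drops every
    token whose probability is below min_p times the top token's probability.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max logit for numerical stability before exponentiating.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # The cutoff scales with the model's confidence in its top choice.
    threshold = min_p * max(probs)
    kept = {i: p for i, p in enumerate(probs) if p >= threshold}
    kept_total = sum(kept.values())
    return {i: p / kept_total for i, p in kept.items()}


def sample_min_p(logits, temperature=1.0, min_p=0.01, rng=None):
    """Draw one token index from the min-p filtered distribution."""
    rng = rng or random.Random()
    dist = min_p_filter(logits, temperature, min_p)
    indices = list(dist)
    weights = [dist[i] for i in indices]
    return rng.choices(indices, weights=weights, k=1)[0]


logits = [5.0, 3.0, 0.0, -2.0]  # toy logits for a four-token vocabulary
print(min_p_filter(logits, temperature=1.0, min_p=0.01))
print(min_p_filter(logits, temperature=2.0, min_p=0.01))
```

With these toy logits, the first call keeps only the two most likely tokens, while raising the temperature to 2 flattens the distribution enough that all four survive the cutoff. This is why a low minP such as 0.01 pairs naturally with a temperature of 1: the cutoff adapts to how confident the model is instead of keeping a fixed number of tokens.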

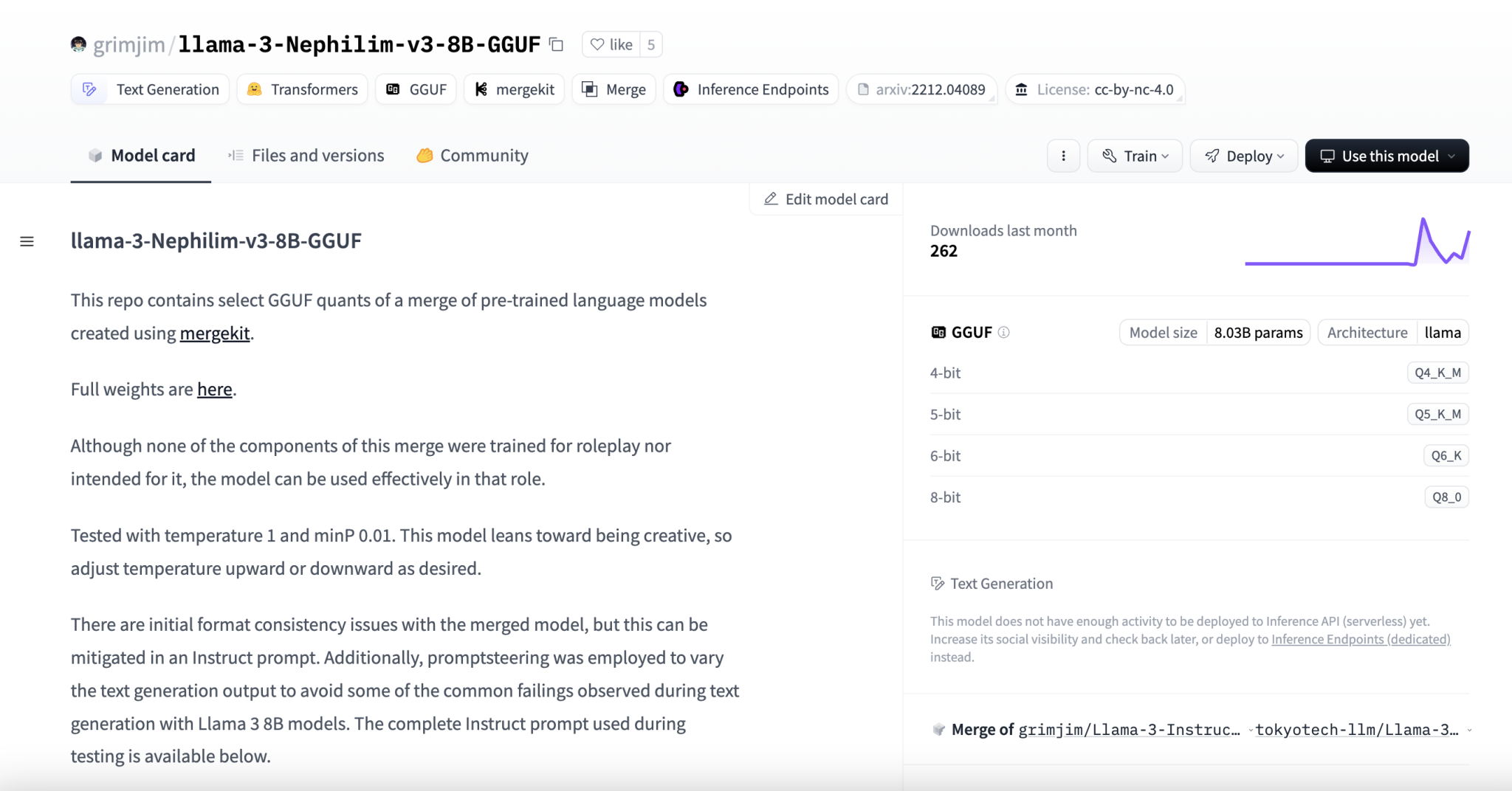

The llama-3-Nephilim-v3-8B-GGUF variant packages the same 8.03-billion-parameter model in GGUF format at several quantization levels: 4-bit, 5-bit, 6-bit, and 8-bit. It was tested with the same temperature and minP settings as the full-precision model. The GGUF quantizations aim to preserve the merge's creativity while reducing memory requirements, making the model more practical to run for roleplay.
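A quick back-of-the-envelope calculation shows why the quantized variants matter for local use. The sketch below multiplies the reported 8.03 billion parameters by each nominal bit width; it is a lower bound only, since real GGUF files are somewhat larger because quantization formats store per-block scale factors, may keep some tensors at higher precision, and include metadata. The helper name is hypothetical.

```python
PARAMS = 8.03e9  # parameter count reported for llama-3-Nephilim-v3-8B


def approx_weight_size_gb(bits_per_weight, params=PARAMS):
    """Lower-bound weight storage in gigabytes (1 GB = 1e9 bytes).

    Ignores quantization block scales, higher-precision tensors, and
    file metadata, so actual GGUF files will be somewhat larger.
    """
    return params * bits_per_weight / 8 / 1e9


# 16-bit corresponds to the BF16 release; the rest to the GGUF bit widths.
for bits in (16, 8, 6, 5, 4):
    print(f"{bits:>2}-bit: ~{approx_weight_size_gb(bits):.2f} GB")
```

Going from BF16 (about 16 GB of weights) to a 4-bit quant cuts the weight footprint roughly fourfold, which is what brings an 8B model within reach of consumer GPUs and CPU inference.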

The merge used the task arithmetic method, which combines models by adding weighted differences between each model's weights and those of a shared base model. The base model was grimjim/Llama-3-Instruct-8B-SPPO-Iter3-SimPO, and tokyotech-llm/Llama-3-Swallow-8B-Instruct-v0.1 was added at a lower weight. This combination was intended to strengthen chain-of-thought reasoning, which is important for roleplay and narrative consistency.
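The core of task arithmetic can be shown on toy data. The sketch below treats each model as a dict mapping tensor names to flat lists of floats and computes `base + sum_i w_i * (model_i - base)` element-wise. It is an illustration of the idea, not mergekit's implementation, and the tensor name and the weight of 0.1 are hypothetical, since the article does not state the actual weight used for the Swallow model.

```python
def task_arithmetic_merge(base, finetuned, weights):
    """Merge models with task arithmetic.

    base: {tensor_name: list[float]}
    finetuned: list of dicts with the same keys and lengths as base
    weights: one scaling factor per fine-tuned model
    Returns base + sum_i weights[i] * (finetuned[i] - base), element-wise.
    """
    if len(finetuned) != len(weights):
        raise ValueError("need exactly one weight per fine-tuned model")
    merged = {}
    for name, base_vals in base.items():
        out = list(base_vals)
        for model, w in zip(finetuned, weights):
            vals = model[name]
            if len(vals) != len(base_vals):
                raise ValueError(f"shape mismatch for {name}")
            for j, (v, b) in enumerate(zip(vals, base_vals)):
                # Add the scaled "task vector" (difference from the base).
                out[j] += w * (v - b)
        merged[name] = out
    return merged


# Hypothetical one-tensor "models" standing in for the base and Swallow.
base = {"layer.weight": [1.0, 2.0]}
swallow_like = {"layer.weight": [3.0, 2.0]}
print(task_arithmetic_merge(base, [swallow_like], [0.1]))
```

A low weight means the second model only nudges the base: here the first element moves a tenth of the way toward the other model's value, while elements where the two models agree are left unchanged.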

None of the merged components was originally designed for roleplay. Nevertheless, extensive testing, including roleplay (RP) sessions and ad hoc probes, identified three models that performed exceptionally well in roleplay scenarios. These included models trained with SPPO (Self-Play Preference Optimization) and SimPO (Simple Preference Optimization with a Reference-Free Reward). Although these models were not benchmarked on the Open LLM Leaderboard, they performed strongly at maintaining narrative coherence and character consistency.

The work also highlighted the value of prompt steering through the system (instruct) prompt. Steering can make generated text more readable and stylistically appealing, and can also loosen censorship limits during roleplay. Some glitches were observed, such as utterances attributed to the wrong character and spontaneous gender flips, but the merged models performed impressively overall.
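Prompt steering happens in the system turn of the instruct format. The sketch below renders a prompt in the Llama 3 Instruct layout, assuming the merged model inherits that chat template from its Llama-3-Instruct-based components; in practice, the tokenizer's own template (for example via `apply_chat_template` in Hugging Face transformers) should be preferred, and some runtimes add `<|begin_of_text|>` automatically. The function name and the character instructions are hypothetical.

```python
def build_llama3_prompt(system, turns):
    """Render a Llama 3 Instruct-style prompt string.

    system: steering instructions placed in the system turn
    turns: list of (role, text) pairs, with role "user" or "assistant"
    The result ends with an open assistant header so the model writes next.
    """
    def block(role, text):
        return f"<|start_header_id|>{role}<|end_header_id|>\n\n{text}<|eot_id|>"

    parts = ["<|begin_of_text|>", block("system", system)]
    for role, text in turns:
        if role not in ("user", "assistant"):
            raise ValueError(f"unexpected role: {role}")
        parts.append(block(role, text))
    # Leave the assistant turn open for generation.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


# Hypothetical style-steering instructions for a roleplay session.
system = (
    "You are Mira, a sardonic ship's navigator. Stay in character, "
    "write in third person past tense, and keep replies under 150 words."
)
print(build_llama3_prompt(system, [("user", "The storm hits. What does Mira do?")]))
```

Stating the narrative voice and the character's name explicitly in the system turn is one plausible way to reduce glitches such as misattributed utterances, since the model is reminded at every turn whose lines it is writing.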

In conclusion, the release of these models on Hugging Face shows that merging models never intended for roleplay can produce an effective roleplay model. The llama-3-Nephilim-v3-8B and llama-3-Nephilim-v3-8B-GGUF releases illustrate how AI models can adapt to, and excel in, applications their components were not designed for.

The post Nephilim v3 8B Released: An Innovative AI Approach to Merging Models for Enhanced Roleplay and Creativity appeared first on MarkTechPost.