Human reviewers or LLMs are often the only options for evaluating free-form text. However, these evaluations can be inaccurate, and the process is time-consuming, costly, and arduous. LLM evaluations can relieve much of the manual work, but they need prompt engineering or a custom optimization procedure to function as intended.

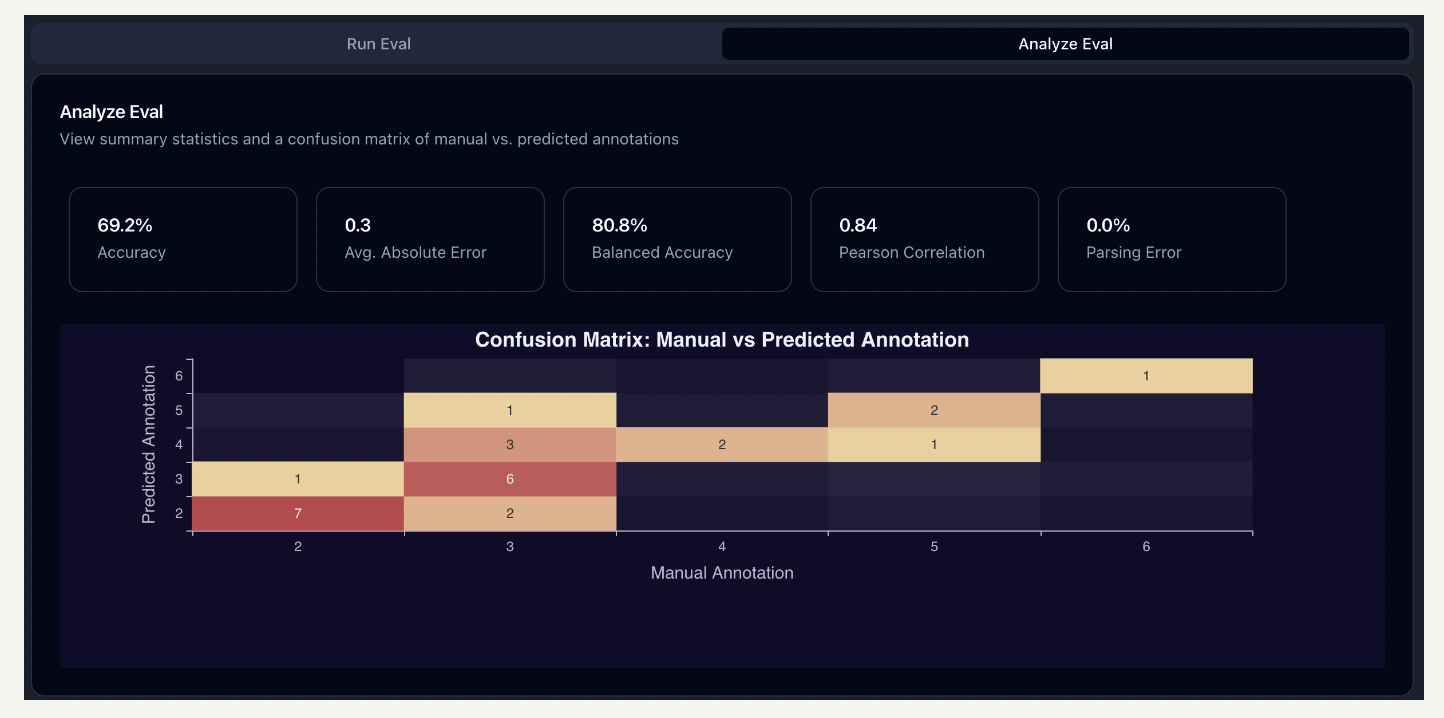

To get the most out of an LLM evaluation, tailor it to the company’s specific use case and data. Parea AI aims to let users automate the creation of evaluations for their AI products. It uses human annotations to bootstrap an evaluation function, which makes it possible to turn ‘vibe checks’ into scalable, trustworthy evaluations that align with human judgment, all automatically.
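As a rough illustration of what "bootstrapping an evaluation function from human annotations" can mean, the sketch below calibrates a pass/fail cutoff on raw grader scores so that the automated verdicts agree with human labels as often as possible. This is not Parea AI's actual method, which the article does not describe. The data, `fit_threshold`, and `agreement` are all hypothetical names chosen for this example.

```python
from typing import Sequence, Tuple


def agreement(preds: Sequence[bool], labels: Sequence[bool]) -> float:
    """Fraction of items where the automated verdict matches the human one."""
    return sum(p == l for p, l in zip(preds, labels)) / len(labels)


def fit_threshold(
    scores: Sequence[float], human_labels: Sequence[bool]
) -> Tuple[float, float]:
    """Pick the pass/fail cutoff on raw scores that best matches human labels."""
    best_cutoff, best_agreement = 0.0, -1.0
    for cutoff in sorted(set(scores)):
        preds = [s >= cutoff for s in scores]
        a = agreement(preds, human_labels)
        if a > best_agreement:  # keep the first cutoff that reaches the best agreement
            best_cutoff, best_agreement = cutoff, a
    return best_cutoff, best_agreement


# Hypothetical data: raw scores from some automated grader,
# plus human pass/fail annotations for the same six outputs.
raw_scores = [0.91, 0.35, 0.78, 0.52, 0.15, 0.66]
human_labels = [True, False, True, False, False, True]

cutoff, agree = fit_threshold(raw_scores, human_labels)
print(f"cutoff={cutoff}, agreement with humans={agree:.0%}")
```

With more annotations, the same idea extends to holding out part of the labeled set to check that the calibrated evaluator still agrees with humans on examples it was not fitted to.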

Parea AI provides a platform to help developers improve the performance of their LLM (large language model) apps. The tool offers several capabilities that streamline the prompt engineering cycle. One of its primary features is the ability to try out several prompt versions and compare their performance across a range of test cases.

Developers can then determine which prompts work best for their production use cases. The platform also offers one-click optimization for improving LLM results. Its test hub gives an organized way to compare prompts: test cases can be imported from CSV, and evaluation metrics can be customized.
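The workflow described here (prompt versions, CSV test cases, a custom metric) can be sketched in plain Python. The example does not use Parea AI's SDK; `fake_llm`, `exact_match`, `evaluate`, the prompt templates, and the CSV contents are all made up for illustration, and the model call is stubbed so the code runs offline.

```python
import csv
import io
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical test cases; in practice these would come from a CSV file on disk.
CSV_TEXT = """question,expected
What is 2 + 2?,4
What is the capital of France?,Paris
"""

# Two hypothetical prompt versions to compare.
PROMPT_VERSIONS: Dict[str, str] = {
    "v1": "Answer briefly: {question}",
    "v2": "Answer with a single word or number: {question}",
}


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    canned = {"What is 2 + 2?": "4", "What is the capital of France?": "Paris"}
    for question, answer in canned.items():
        if question in prompt:
            # Pretend the less specific prompt yields wordier output.
            return answer if "single word" in prompt else f"The answer is {answer}."
    return ""


def exact_match(output: str, expected: str) -> float:
    """Custom metric: 1.0 if the output equals the expected answer, else 0.0."""
    return float(output.strip().lower() == expected.strip().lower())


def evaluate(
    prompts: Dict[str, str],
    rows: List[Dict[str, str]],
    llm: Callable[[str], str],
    metric: Callable[[str, str], float],
) -> Dict[str, float]:
    """Average the metric over all test cases for each prompt version."""
    return {
        name: mean(
            metric(llm(template.format(question=row["question"])), row["expected"])
            for row in rows
        )
        for name, template in prompts.items()
    }


rows = list(csv.DictReader(io.StringIO(CSV_TEXT)))
results = evaluate(PROMPT_VERSIONS, rows, fake_llm, exact_match)
best = max(results, key=results.get)
print(results, "best:", best)
```

Swapping `exact_match` for a different scoring function is the "custom metric" part; the comparison loop itself does not change.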

Developers can access all prompts programmatically, which also lets them gather analytics and observability data. Measuring each prompt’s latency, effectiveness, and cost shows developers where to optimize. Parea AI also offers dedicated support and builds features in response to user needs, so that customers can get the most out of the product.
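To make the latency and cost measurements concrete, here is a minimal sketch of wrapping a model call and recording those numbers per prompt. It is an assumption-laden illustration, not Parea AI's tracing API: `traced_call`, `CallRecord`, `echo_llm`, the whitespace token count, and the price constant are all hypothetical. Effectiveness is left out because it would come from an evaluation metric rather than from timing.

```python
import time
from dataclasses import dataclass
from typing import Callable

# Hypothetical price; real per-token prices depend on the provider and model.
PRICE_PER_1K_TOKENS_USD = 0.002


@dataclass
class CallRecord:
    prompt_name: str
    latency_s: float
    tokens: int
    cost_usd: float


def count_tokens(text: str) -> int:
    """Crude whitespace count; a real tokenizer would give different numbers."""
    return len(text.split())


def traced_call(prompt_name: str, prompt: str, llm: Callable[[str], str]) -> CallRecord:
    """Call the model once and record latency, token usage, and estimated cost."""
    start = time.perf_counter()
    output = llm(prompt)
    latency = time.perf_counter() - start
    tokens = count_tokens(prompt) + count_tokens(output)
    cost = tokens / 1000 * PRICE_PER_1K_TOKENS_USD
    return CallRecord(prompt_name, latency, tokens, cost)


def echo_llm(prompt: str) -> str:
    """Stand-in model that returns a fixed reply."""
    return "Paris is the capital of France."


record = traced_call("capital-v1", "What is the capital of France?", echo_llm)
print(record)
```

Collecting one such record per call and grouping by `prompt_name` gives a per-prompt view of latency and cost.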

Parea AI is a good fit for developers who want to improve their LLM apps quickly, because it focuses on thorough testing, version control, and optimization. In Parea AI’s studio, you can build and manage OpenAI functions and see all prompt versions in one place. Access to APIs and data is another way Parea AI boosts efficiency.

In Conclusion

Parea AI is a platform that teams can use to monitor and evaluate LLMs. To help teams deploy LLMs to production with confidence, it provides capabilities such as experiment tracking, human annotation, and observability. Parea AI is compatible with most LLM platforms and providers.

The post Meet Parea AI: An AI Startup that Automatically Creates LLM-based Evals Aligned with Human Judgement appeared first on MarkTechPost.