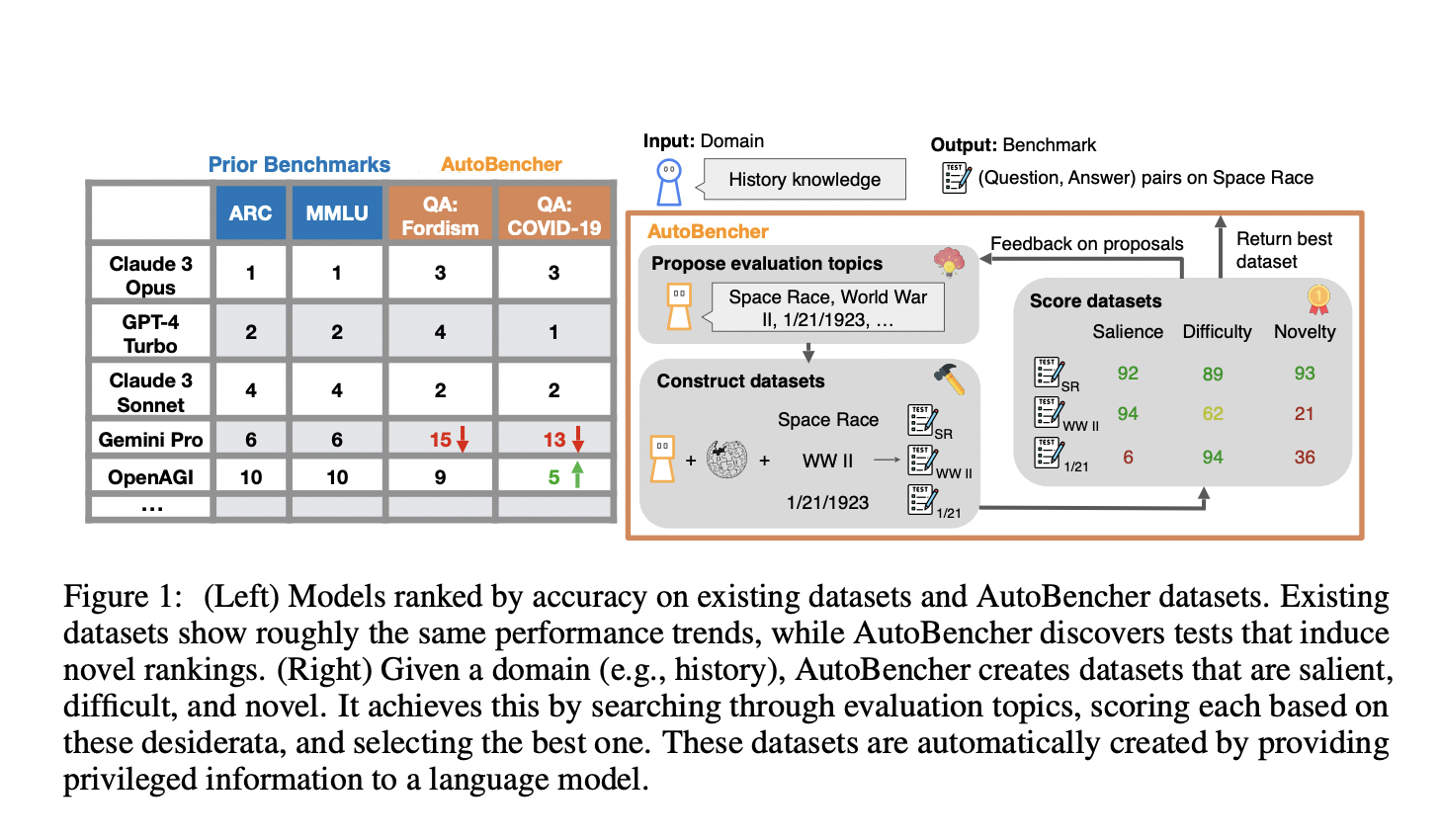

This paper addresses the challenge of effectively evaluating language models (LMs). Evaluation is crucial for assessing model capabilities, tracking scientific progress, and informing model selection. Traditional benchmarks often fail to highlight novel performance trends and are sometimes too easy for advanced models, providing little room for growth. The research identifies three key desiderata that existing benchmarks often lack: salience (testing practically important capabilities), novelty (revealing previously unknown performance trends), and difficulty (posing challenges for existing models).

Current methods for evaluating language models involve constructing benchmarks that test specific capabilities, such as mathematical reasoning or understanding academic subjects. Prior works have constructed high-quality benchmarks guided by salience and difficulty. While these benchmarks are valuable, they often yield similar performance trends across different models, limiting their ability to highlight unique strengths and weaknesses.

The researchers of this paper propose a new tool, AutoBencher, which automatically generates datasets that fulfill the three desiderata: salience, novelty, and difficulty. AutoBencher uses a language model to search for and construct datasets from privileged information sources. This approach allows creation of more challenging and insightful benchmarks compared to existing ones. For instance, AutoBencher can identify gaps in LM knowledge that are not captured by current benchmarks, such as performance discrepancies on less common topics like the Permian Extinction or Fordism.

AutoBencher operates by leveraging a language model to propose evaluation topics within a broad domain (e.g., history) and constructing small datasets for each topic using reliable sources like Wikipedia. The tool evaluates each dataset based on its salience, novelty, and difficulty, selecting the best ones for inclusion in the benchmark. This iterative and adaptive process allows the tool to refine its dataset generation to maximize the desired properties continuously.

Additionally, AutoBencher employs an adaptive search process, where the trajectory of past generated benchmarks is used to improve the difficulty of proposed topics. This allows AutoBencher to identify and select topics that jointly maximize novelty and difficulty, subject to a salience constraint specified by the user.

To ensure high-quality datasets, AutoBencher incorporates privileged information that the evaluated LMs cannot access, such as detailed documents or specific data relevant to the topic. This privileged information helps generate accurate and challenging questions. The results show that AutoBencher-created benchmarks are, on average, 27% more novel and 22% more difficult than existing human-constructed benchmarks. The tool has been used to create datasets across various domains, including math, history, science, economics, and multilingualism, revealing new trends and gaps in model performance.

The problem of effectively evaluating language models is critical for guiding their development and assessing their capabilities. AutoBencher offers a promising solution by automating the creation of salient, novel, and difficult benchmarks, thereby providing a more comprehensive and challenging evaluation framework for language models. The authors demonstrate the effectiveness of their approach by generating diverse benchmarks that uncover previously unknown performance trends across a range of language models, providing valuable insights to guide future model development and selection. This approach highlights existing gaps in model knowledge and paves the way for future improvements.

Check out the Paper and Github. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter.

Join our Telegram Channel and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 46k+ ML SubReddit

The post AutoBencher: A Metrics-Driven AI Approach Towards Constructing New Datasets for Language Models appeared first on MarkTechPost.