Machine learning, particularly in training large language models (LLMs), has revolutionized numerous applications. These models necessitate substantial computational resources, typically concentrated within well-connected clusters, to parallelize workloads for distributed training efficiently. However, reducing communication overhead and enhancing scalability across multiple devices remains a significant challenge in the field.

Training large language models is inherently resource-intensive, requiring significant computational power and efficient communication between devices. Traditional methods need help with the frequent need for data exchange, which hampers the ability to train models effectively across devices with poor connectivity. This problem poses a substantial obstacle to utilizing global computational resources for scalable model training.

Existing methods like Distributed Data-Parallel (DDP) training depend heavily on well-connected clusters to minimize communication delays. These approaches often involve extensive bandwidth usage, making it challenging to scale training operations over a dispersed network of devices. Frameworks such as PyTorch and Hugging Face facilitate distributed training but are not optimized for scenarios with low communication needs.

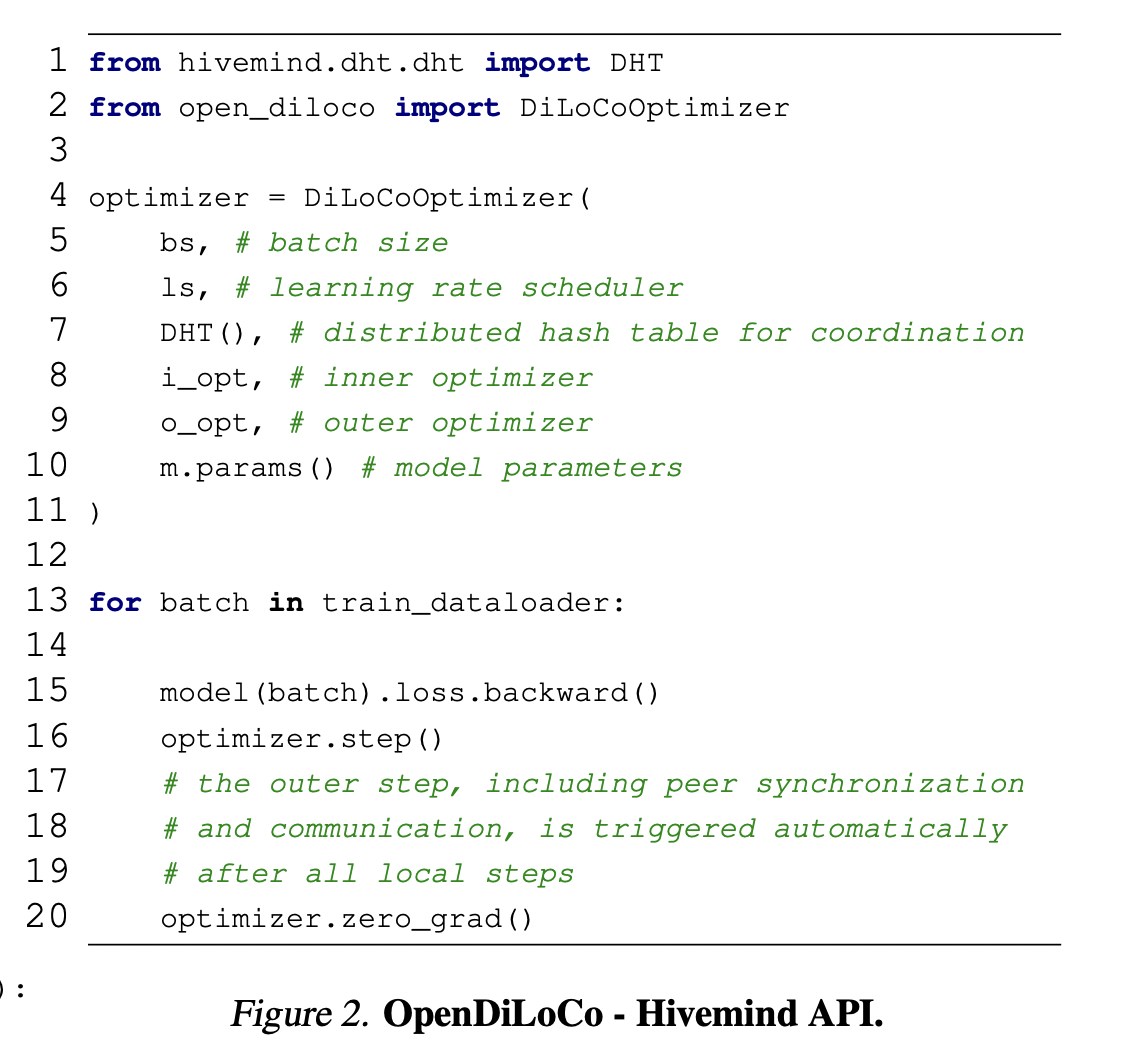

Prime Intellect, Inc. researchers introduced OpenDiLoCo, an open-source framework designed to enable distributed low-communication training of large language models. This method builds on the Hivemind library, allowing efficient model training across geographically dispersed devices. OpenDiLoCo offers a scalable solution by leveraging local SGD optimization to significantly reduce communication frequency, enhancing the feasibility of training models on a global scale.

The OpenDiLoCo framework utilizes a dual-optimizer approach, combining an inner optimizer (AdamW) for local updates with an outer optimizer (SGD with Nesterov momentum) to synchronize device weights. By computing pseudo-gradients and reducing communication steps by up to 500 times, OpenDiLoCo effectively manages bandwidth usage while maintaining high compute utilization. This approach involves creating two copies of the model: one for local updates and another for computing pseudo-gradients, which are all-reduced using FP16 without noticeable performance degradation.

In performance evaluations, OpenDiLoCo demonstrated impressive scalability, achieving 90-95% compute utilization while training models across two continents and three countries. Researchers conducted experiments using models up to 1.1 billion parameters, significantly expanding on the original 400 million parameters. The framework was tested with eight DiLoCo workers, achieving a final perplexity of 10.76 for a 1.1 billion parameter model, compared to 11.85 for the baseline without replicas—the use of FP16 for pseudo-gradient reduction further enhanced performance, cutting communication time in half. Furthermore, OpenDiLoCo maintained comparable performance with traditional methods while communicating 500 times less, highlighting its efficiency and scalability for large-scale model training.

The research underscores a promising solution to the challenges of distributed model training. OpenDiLoCo offers an efficient, scalable framework that reduces communication overhead and leverages global computational resources. This approach enables practical and extensive applications of large language models in real-world scenarios. Prime Intellect, Inc. researchers have successfully demonstrated the framework’s potential, paving the way for more widespread use of large language models across diverse geographical locations. Further developments in compute-efficient methods and sophisticated model merging techniques could enhance the stability and convergence speed, making decentralized training even more effective.

In conclusion, OpenDiLoCo presents a robust framework for distributed low-communication training of large language models, addressing the critical challenges of resource-intensive training and frequent data exchange. The research from Prime Intellect, Inc. offers an efficient, scalable solution that leverages global computational resources, enabling more practical and extensive applications of large language models. This approach represents a significant advancement in the field, providing a foundation for future developments in decentralized training methodologies.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter.

Join our Telegram Channel and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 46k+ ML SubReddit

The post This AI Paper by Prime Intellect Introduces OpenDiLoCo: An Open-Source Framework for Globally Distributed Low-Communication Training appeared first on MarkTechPost.