Bilevel optimization (BO) is a growing field of research that has gained attention for its success in machine learning tasks such as hyperparameter optimization, meta-learning, and reinforcement learning. BO has a two-level structure in which the solution to the outer problem depends on the solution to the inner problem. Despite its flexibility and broad applicability, BO is not widely used for large-scale problems. The main obstacle is the interdependence between the upper-level and lower-level problems, which creates significant computational challenges and hinders scalability.
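The two-level structure can be written compactly. The notation below is a generic textbook form, not taken from the paper: $\lambda$ (outer variables), $w$ (inner variables), $F$ (outer objective), and $G$ (inner objective) are symbols assumed here for illustration.

$$
\min_{\lambda} \; F\bigl(\lambda, w^{*}(\lambda)\bigr) \quad \text{s.t.} \quad w^{*}(\lambda) \in \arg\min_{w} \; G(\lambda, w)
$$

In a data reweighting setting, one plausible reading is that $\lambda$ holds the data source weights, $w$ holds the model parameters, $G$ is the weighted training loss, and $F$ is the loss on a held-out validation set. The dependence of $w^{*}$ on $\lambda$ is the coupling that makes the outer gradient expensive to compute.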

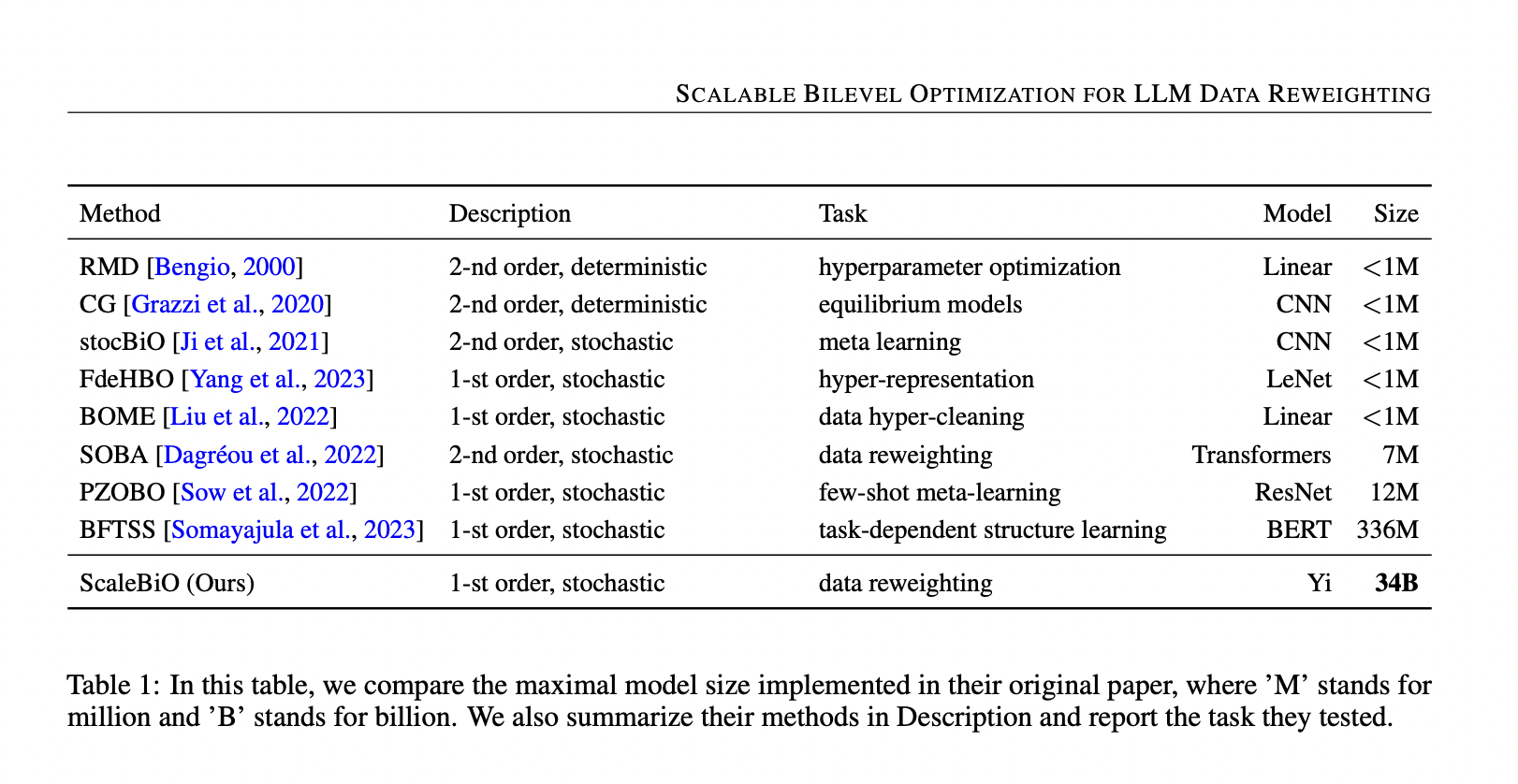

The paper discusses two main areas of related work. The first is bilevel optimization itself, whose gradient-based methods fall into two types: (a) approximate implicit differentiation (AID) methods and (b) iterative differentiation (ITD) methods. Both follow a two-loop scheme and incur high computational costs on large-scale problems. The second area is data reweighting: the proportion of each training data source greatly affects the performance of large language models (LLMs), and various methods have been proposed to reweight data sources toward an optimal training mixture. However, none of these methods guarantees optimal data weights, and none has been demonstrated at scale on models larger than 30 billion parameters.
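To make the AID/ITD distinction concrete, here is a minimal sketch on a toy scalar problem. The problem, the function names, and the constants `a`, `b`, and `lam` are all assumptions chosen for illustration; this is not the paper's algorithm or code. ITD differentiates through the unrolled inner gradient steps, while AID first solves the inner problem and then applies the implicit function theorem.

```python
# Toy scalar bilevel problem (illustrative only, not from the paper):
#   inner: w*(lam) = argmin_w 0.5*(w - a)**2 + 0.5*lam*w**2
#   outer: F(w)    = 0.5*(w - b)**2

def inner_grad(w, lam, a):
    """Gradient of the inner objective with respect to w."""
    return (w - a) + lam * w

def hypergrad_itd(lam, a, b, steps=200, lr=0.1):
    """ITD: carry dw/dlam along the unrolled inner gradient steps."""
    w, dw = 0.0, 0.0
    for _ in range(steps):
        # Derivative of the update w <- w - lr * inner_grad(w, lam, a)
        # with respect to lam, using the value of w before the update.
        dw = dw - lr * ((1.0 + lam) * dw + w)
        w = w - lr * inner_grad(w, lam, a)
    return (w - b) * dw  # chain rule through the outer objective

def hypergrad_aid(lam, a, b, steps=200, lr=0.1):
    """AID: solve the inner problem, then use the implicit function theorem."""
    w = 0.0
    for _ in range(steps):
        w = w - lr * inner_grad(w, lam, a)
    hessian_ww = 1.0 + lam  # second derivative of the inner objective in w
    cross = w               # mixed derivative of the inner objective in (w, lam)
    # In high dimensions the division becomes a linear solve, usually
    # approximated (e.g. by conjugate gradient); here it is a scalar division.
    return -(w - b) * cross / hessian_ww

if __name__ == "__main__":
    print(hypergrad_itd(0.5, 2.0, 1.0), hypergrad_aid(0.5, 2.0, 1.0))
```

Both routines run an inner loop for every outer gradient evaluation, which is the two-loop cost the paragraph above refers to. On this toy problem they agree with the closed-form derivative because the inner loop converges.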

Researchers from The Hong Kong University of Science and Technology and the University of Illinois Urbana-Champaign have introduced ScaleBiO, a new bilevel optimization method capable of scaling to 34B LLMs on data reweighting tasks. By incorporating a memory-efficient training technique called LISA, ScaleBiO can run models of this size on eight A40 GPUs. This is the first time BO has been successfully applied to LLMs this large, showing its potential in real-world applications. ScaleBiO optimizes the learned data weights effectively and, for smooth and strongly convex objectives, provides a convergence guarantee similar to that of traditional first-order BO methods.

Experiments on data reweighting show that ScaleBiO works well for models of different sizes, including GPT-2, LLaMA-3-8B, GPT-NeoX-20B, and Yi-34B, where BO effectively filters out irrelevant data and selects only the informative samples. Two sets of experiments were conducted: (a) small-scale experiments to understand ScaleBiO better and (b) real-world application experiments to validate its effectiveness and scalability. For the small-scale setting, experiments were carried out with GPT-2 (124M) on three synthetic data tasks: data denoising, multilingual training, and instruction-following fine-tuning.

To evaluate ScaleBiO, 3,000 examples are sampled from each source for reweighting, and then 10,000 examples are sampled according to the final weights from BO to train the model. To show the effectiveness of ScaleBiO, the learned sampling weights are applied to fine-tune the LLaMA-3-8B and LLaMA-3-70B models. The LLMs' instruction-following abilities are evaluated using MT-Bench with single-answer grading. MT-Bench challenges chat assistants with complex, multi-turn, open-ended questions and uses "LLM-as-a-judge" for evaluation. The benchmark is notable for its alignment with human preferences and contains 80 questions spread uniformly across 8 categories (10 per category): Writing, Roleplay, Extraction, Reasoning, Math, Coding, Knowledge I (STEM), and Knowledge II (humanities/social science).
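The second sampling step, drawing a training set according to the final source weights, can be sketched as follows. This is a minimal illustration under stated assumptions: the article does not say how the weights are parameterized, so the softmax over per-source logits, the function names, the source names, and the logit values are all hypothetical.

```python
import math
import random
from collections import Counter

def softmax(logits):
    """Turn unconstrained per-source scores into sampling probabilities."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_mixture(sources, logits, n_samples, seed=0):
    """Draw n_samples (source_name, example) pairs, with replacement,
    choosing each source with probability given by softmax(logits)."""
    rng = random.Random(seed)
    names = list(sources)
    weights = softmax(logits)
    picked = rng.choices(names, weights=weights, k=n_samples)
    return [(name, rng.choice(sources[name])) for name in picked]

if __name__ == "__main__":
    # Hypothetical sources with 3,000 placeholder examples each.
    sources = {
        "clean": [f"clean-{i}" for i in range(3000)],
        "mixed": [f"mixed-{i}" for i in range(3000)],
        "noisy": [f"noisy-{i}" for i in range(3000)],
    }
    learned_logits = [2.0, 0.0, -2.0]  # made-up values standing in for BO output
    mixture = sample_mixture(sources, learned_logits, n_samples=10_000)
    print(Counter(name for name, _ in mixture))
```

Sources with low learned weight contribute few examples to the final mixture, which is how reweighting acts as a soft data filter.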

In summary, the researchers have proposed ScaleBiO, a bilevel optimization instantiation capable of scaling to 34B LLMs on data reweighting tasks. ScaleBiO enables data reweighting on models with 7 billion parameters or more, providing an efficient data filtering and selection pipeline that boosts model performance on various tasks. Moreover, the sampling weights learned on LLaMA-3-8B can be applied to larger models such as LLaMA-3-70B, resulting in significant performance improvements. However, ScaleBiO's effectiveness in large-scale pre-training remains untested, since such experiments require extensive computational resources. Its demonstrated success in large-scale fine-tuning settings is therefore an important first step.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post ScaleBiO: A Novel Machine Learning Based Bilevel Optimization Method Capable of Scaling to 34B LLMs on Data Reweighting Tasks appeared first on MarkTechPost.