Enhancing the logical reasoning capabilities of Large Language Models (LLMs) is pivotal for achieving human-like reasoning, a fundamental step towards realizing Artificial General Intelligence (AGI). Current LLMs perform impressively on many natural language tasks but often fall short on logical reasoning, which limits their applicability in scenarios requiring deep understanding and structured problem-solving. Overcoming this limitation is essential for advancing AI research, as it would enable intelligent systems to tackle complex problem-solving, decision-making, and critical-thinking tasks with greater accuracy and reliability. The urgency is underscored by the increasing demand for AI systems that can manage intricate reasoning tasks across diverse fields, including natural language processing, automated reasoning, robotics, and scientific research.

Current methods such as Logic-LM and Chain-of-Thought (CoT) prompting have shown limitations in handling complex reasoning tasks efficiently. Logic-LM translates problems into symbolic form for external solvers, a step that can lose information, while CoT struggles to balance precision and recall, which hurts its overall performance on logical reasoning tasks. Despite recent advancements, these methods still fall short of optimal reasoning because of these inherent design limitations.

Researchers from the National University of Singapore, the University of California, and the University of Auckland introduce the Symbolic Chain-of-Thought (SymbCoT) framework, which combines symbolic expressions with CoT prompting to enhance logical reasoning in LLMs. SymbCoT overcomes the challenges of existing methods by incorporating symbolic representation and rules, leading to significant reasoning enhancement. The innovative design of SymbCoT offers a more versatile and efficient solution for complex reasoning tasks, surpassing existing baselines like CoT and Logic-LM in performance metrics.
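
To make "symbolic expressions and rules" concrete, here is a small worked example. It assumes first-order logic as the symbolic language and uses a textbook syllogism; the predicates and the example itself are illustrative choices, not taken from the paper. The premises "All humans are mortal" and "Socrates is a human" are first translated into symbols, and the conclusion then follows from two standard inference rules:

$$
\begin{aligned}
&\text{Premise 1:} && \forall x\,\big(\mathrm{Human}(x) \rightarrow \mathrm{Mortal}(x)\big) \\
&\text{Premise 2:} && \mathrm{Human}(\mathrm{socrates}) \\
&\text{Universal instantiation on Premise 1:} && \mathrm{Human}(\mathrm{socrates}) \rightarrow \mathrm{Mortal}(\mathrm{socrates}) \\
&\text{Modus ponens with Premise 2:} && \mathrm{Mortal}(\mathrm{socrates})
\end{aligned}
$$

Each line is justified by a named rule, which is the kind of explicit, checkable step that free-form natural language reasoning does not guarantee.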

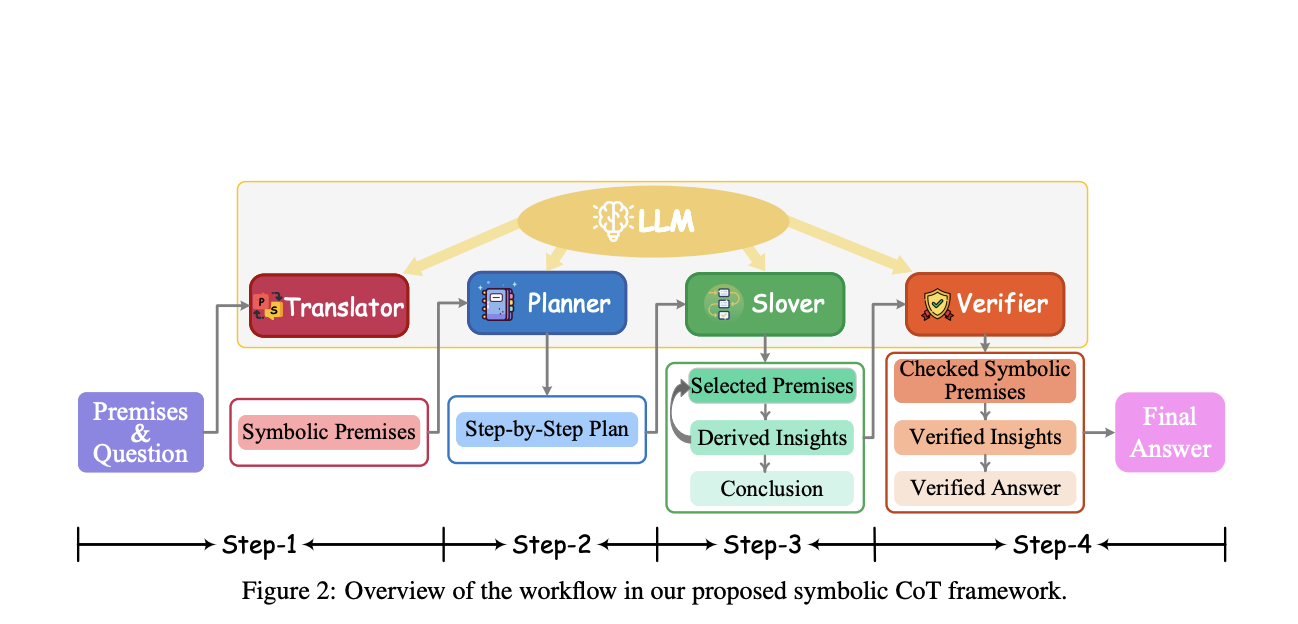

SymbCoT uses symbolic structures and rules to guide the reasoning process, enhancing the model’s ability to tackle complex logical tasks. The framework employs a plan-then-solve approach, dividing each question into smaller components so they can be reasoned about efficiently. The paper also details the computational resources required for implementation, which speaks to the scalability and practicality of the proposed method.
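
The plan-then-solve idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `plan_then_solve`, the `PLAN`/`SOLVE` prompt formats, and the `llm` callable (any text-in, text-out function) are all hypothetical.

```python
from typing import Callable, List


def plan_then_solve(question: str, premises: List[str],
                    llm: Callable[[str], str]) -> List[str]:
    """Hypothetical plan-then-solve loop; `llm` is any text-in, text-out callable."""
    facts = "\n".join(premises)

    # Plan: ask the model to break the question into sub-steps, one per line.
    # (The prompt wording is an assumption made for this sketch.)
    plan = llm(f"PLAN\nPremises:\n{facts}\nQuestion: {question}")
    steps = [line.strip() for line in plan.splitlines() if line.strip()]

    # Solve: run the steps in order, feeding every earlier result
    # back in as known context for the next step.
    derived: List[str] = []
    for step in steps:
        known = "\n".join(premises + derived)
        derived.append(llm(f"SOLVE\nKnown:\n{known}\nStep: {step}"))
    return derived
```

The function returns the intermediate results in order, so the last element is the final answer and the earlier ones form a trace that can be inspected. Passing the model in as a plain callable keeps the sketch independent of any particular LLM client.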

SymbCoT demonstrates significant improvements over the Naive, CoT, and Logic-LM baselines, achieving gains of 21.56%, 6.11%, and 3.53% on GPT-3.5, and 22.08%, 9.31%, and 7.88% on GPT-4, respectively. The only exception is the FOLIO dataset on GPT-3.5, where it does not surpass Logic-LM, indicating that non-linear reasoning remains challenging for LLMs. Even so, the method consistently outperforms all baselines across both datasets with GPT-4, notably exceeding Logic-LM by an average of 7.88%, which highlights substantial improvements in complex reasoning tasks. In addition, on CO symbolic expression tasks across two datasets, the method surpasses CoT and Logic-LM by 13.32% and 3.12%, respectively, underscoring its versatility in symbolic reasoning.

In conclusion, the SymbCoT framework represents a significant advancement in AI research by enhancing logical reasoning capabilities in LLMs. The paper’s findings have broad implications for AI applications, with potential future research directions focusing on exploring additional symbolic languages and optimizing the framework for wider adoption in AI systems. The research contributes to the field by overcoming a critical challenge in logical reasoning, paving the way for more advanced AI systems with improved reasoning capabilities.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Symbolic Chain-of-Thought ‘SymbCoT’: A Fully LLM-based Framework that Integrates Symbolic Expressions and Logic Rules with CoT Prompting appeared first on MarkTechPost.