Salesforce AI Research has unveiled the XGen-MM series, a family of large multimodal models (LMMs) that builds on its predecessor, the BLIP series. This article looks at XGen-MM's architecture, capabilities, and implications for AI applications.

The Genesis of XGen-MM:

XGen-MM is part of Salesforce’s unified XGen initiative on large foundation models. Compared with the BLIP series, it incorporates fundamental enhancements intended to provide a more robust and capable foundation for multimodal work.

Key Features:

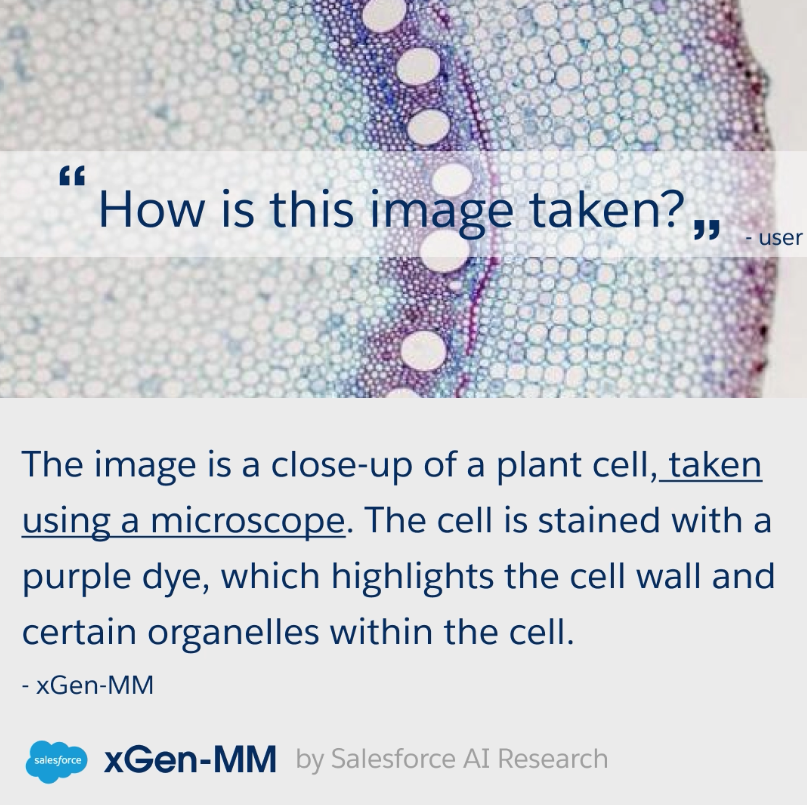

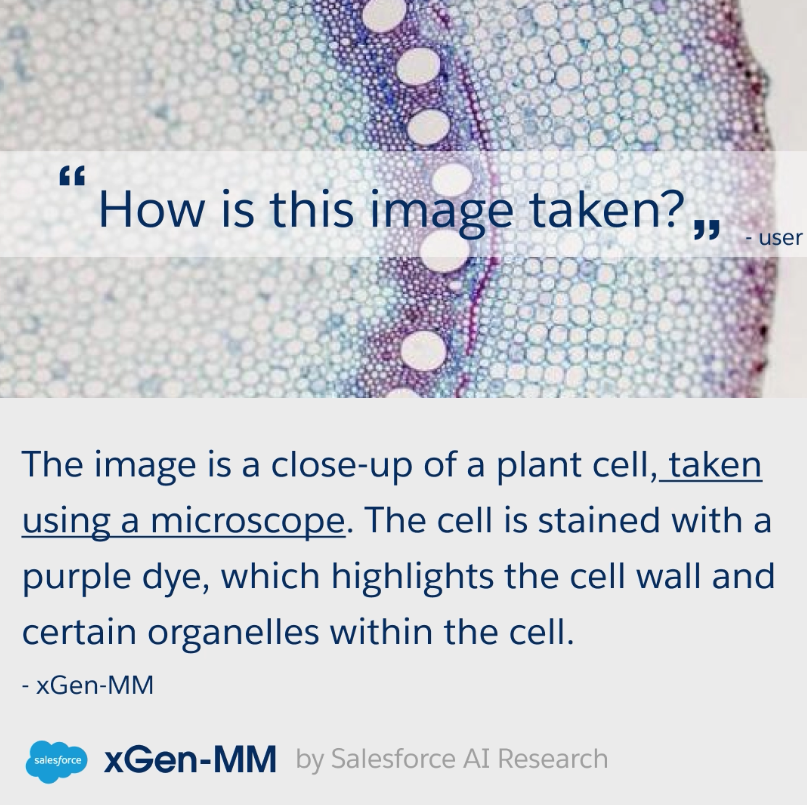

XGen-MM's core strength is multimodal comprehension. The models are trained at scale on high-quality image caption datasets and interleaved image-text data, and the series has several notable features:

- State-of-the-Art Performance: The pretrained foundation model, xgen-mm-phi3-mini-base-r-v1, is reported to achieve state-of-the-art performance among models under 5 billion parameters and shows strong in-context learning capabilities.

- Instruct Fine-Tuning: The instruction-tuned model, xgen-mm-phi3-mini-instruct-r-v1, is reported to achieve state-of-the-art performance among both open-source and closed-source Visual Language Models (VLMs) under 5 billion parameters. It also supports flexible high-resolution image encoding with efficient visual token sampling.

Technical Insights:

Detailed technical specifications are deferred to an upcoming technical report. For now, preliminary results are available on a range of benchmarks, from COCO (image captioning) to TextVQA (question answering that requires reading text in images), and they indicate strong multimodal understanding for a model of this size.

Utilization and Integration:

XGen-MM is used through the transformers library, so developers can integrate it into their projects with the same tooling they use for other models. Comprehensive examples are provided with the release, which makes deployment accessible to the broader AI community.
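As a rough illustration of what loading the model through transformers might look like, here is a minimal sketch. Several details are assumptions rather than facts from this article: the `Salesforce/` organization prefix on the Hub, the need for `trust_remote_code=True`, and the prompt layout in `build_prompt`, which is only modelled on the Phi-3 chat format. The helper names `hub_id`, `build_prompt`, and `load_xgen_mm` are hypothetical. The generation call itself depends on the code shipped with the checkpoint and is not shown.

```python
"""Sketch: loading an XGen-MM checkpoint via transformers (assumptions flagged inline)."""

# Assumption: the checkpoint is hosted on the Hugging Face Hub under the
# "Salesforce" organization. The article only gives the bare model name.
HUB_ORG = "Salesforce"
INSTRUCT_MODEL = "xgen-mm-phi3-mini-instruct-r-v1"  # name taken from the article


def hub_id(model_name: str, org: str = HUB_ORG) -> str:
    """Build a Hub repository ID of the form '<org>/<model_name>'."""
    return f"{org}/{model_name}"


def build_prompt(question: str) -> str:
    """Wrap a question in a Phi-3-style chat turn with an image placeholder.

    Assumption: the '<image>' placeholder and this exact layout are
    illustrative; check the model card for the template the checkpoint expects.
    """
    return f"<|user|>\n<image>\n{question}<|end|>\n<|assistant|>\n"


def load_xgen_mm(model_name: str = INSTRUCT_MODEL):
    """Load model, tokenizer, and image processor (downloads weights on first call)."""
    # Imported lazily so the helpers above work without transformers installed.
    from transformers import AutoImageProcessor, AutoModelForVision2Seq, AutoTokenizer

    repo = hub_id(model_name)
    # Assumption: the checkpoint ships custom modeling code, which requires
    # trust_remote_code=True. That flag executes Python from the model
    # repository, so review the code before enabling it.
    model = AutoModelForVision2Seq.from_pretrained(repo, trust_remote_code=True)
    tokenizer = AutoTokenizer.from_pretrained(repo, trust_remote_code=True)
    image_processor = AutoImageProcessor.from_pretrained(repo, trust_remote_code=True)
    return model, tokenizer, image_processor


if __name__ == "__main__":
    # Cheap checks that do not download anything.
    print(hub_id(INSTRUCT_MODEL))
    print(build_prompt("What is shown in this image?"))
```

Calling `load_xgen_mm()` triggers the actual download, so the sketch keeps it out of the default path. The examples published with the model remain the authoritative guide to preprocessing images and generating text.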

Ethical Considerations:

For all its capabilities, XGen-MM raises ethical considerations of its own. Its training data is drawn from diverse internet sources, including webpages and curated datasets, so the model may inherit biases present in that data. Salesforce AI Research emphasizes the importance of assessing safety and fairness before deploying XGen-MM in downstream applications.

Conclusion:

Among multimodal language models, XGen-MM is a notable release. It combines strong reported performance under 5 billion parameters with integration through the transformers library and explicit guidance on bias and safety. As researchers continue to explore its potential, and as the technical report fills in the details, XGen-MM is positioned to influence how AI systems interpret images and text together.

The post XGen-MM: A Series of Large Multimodal Models (LMMs) Developed by Salesforce AI Research appeared first on MarkTechPost.