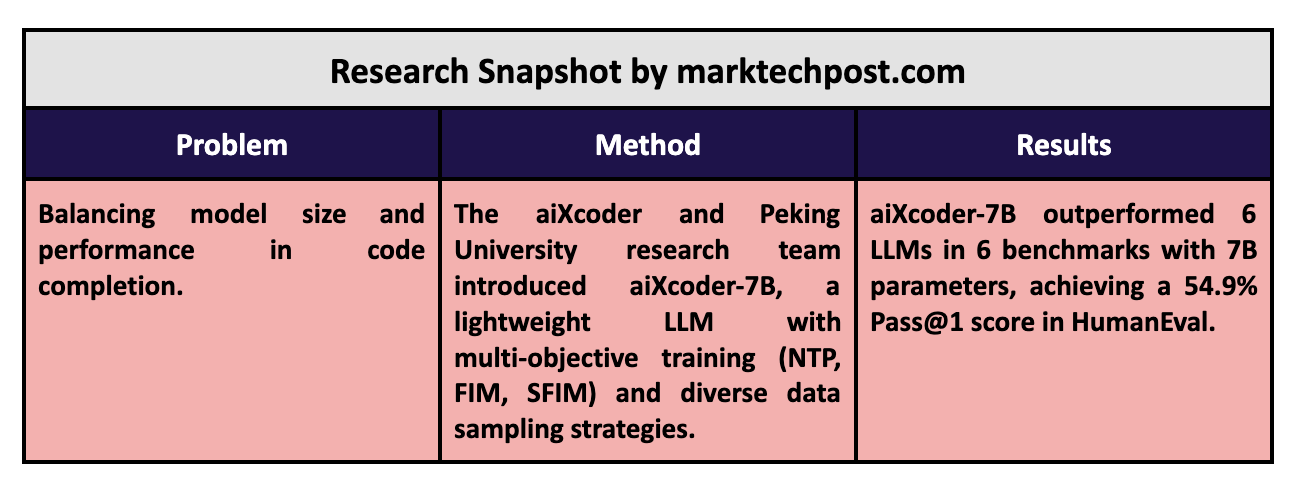

Large language models (LLMs) have changed how code completion works: the model predicts and suggests code based on what a developer has already written, which helps developers write code faster and with fewer errors. Many models, however, struggle to balance speed and accuracy. Larger models are often more accurate but respond slowly enough to disrupt real-time coding. This challenge has spurred efforts to create smaller, more efficient models that retain high performance in code completion.

The primary problem in the field of LLMs for code completion is the trade-off between model size and performance. Larger models, while powerful, require more computational resources and time, leading to slower response times for developers. This diminishes their usability, particularly in real-time applications where quick feedback is essential. The need for faster, lightweight models that still offer high accuracy in code predictions has become a crucial research focus in recent years.

Traditional methods for code completion typically involve scaling up LLMs to increase prediction accuracy. These methods, such as those used in CodeLlama-34B and StarCoder2-15B, rely on enormous datasets and billions of parameters, significantly increasing their size and complexity. While this approach improves the models’ ability to generate precise code, it comes at the cost of higher response times and greater hardware requirements. Developers often find that these models’ size and computational demands hinder their workflow.

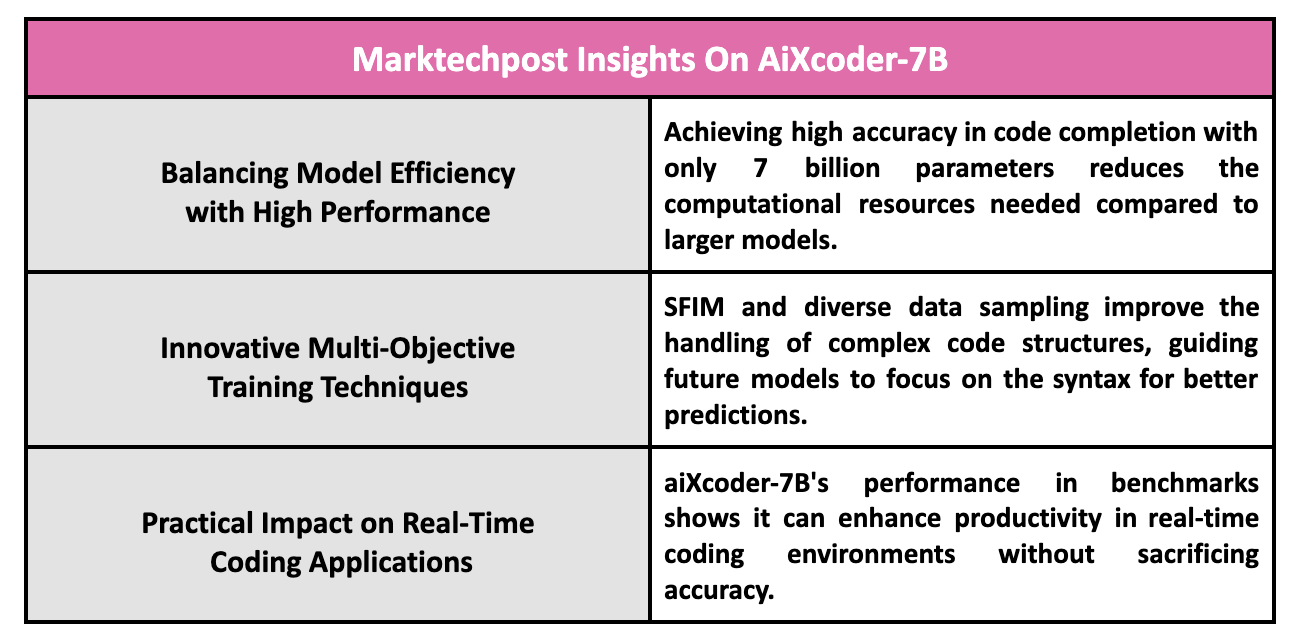

The research team from aiXcoder and Peking University introduced aiXcoder-7B, designed to be lightweight and highly effective in code completion tasks. With only 7 billion parameters, it achieves remarkable accuracy compared to larger models, making it an ideal solution for real-time coding environments. aiXcoder-7B focuses on balancing size and performance, ensuring that it can be deployed in academia and industry without the typical computational burdens of larger LLMs. The model’s efficiency makes it a standout in a field dominated by much larger alternatives.
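To make the size trade-off concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. It assumes 16-bit weights (2 bytes per parameter) and takes the nominal parameter counts from the model names; the function name `weight_memory_gb` is made up for illustration, and activations, the KV cache, and runtime overhead are ignored.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Approximate memory for model weights only, in gigabytes (10^9 bytes).

    Assumption: every parameter is stored in 16 bits unless stated otherwise.
    """
    return num_params * bytes_per_param / 1e9


# Nominal parameter counts, read off the model names (an approximation).
models = {
    "aiXcoder-7B": 7e9,
    "StarCoder2-15B": 15e9,
    "CodeLlama-34B": 34e9,
}

for name, params in models.items():
    print(f"{name}: about {weight_memory_gb(params):.0f} GB of weights")
```

Under these assumptions, a 7B model needs roughly 14 GB for its weights while a 34B model needs roughly 68 GB, which is one reason the smaller model is easier to serve with low latency.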

The research team employed multi-objective training that combines Next-Token Prediction (NTP), Fill-In-the-Middle (FIM), and the more advanced Structured Fill-In-the-Middle (SFIM). SFIM, in particular, lets the model take the syntax and structure of code into account, enabling more accurate predictions across a wide range of coding scenarios. This contrasts with models that treat code as plain text and ignore its structure. aiXcoder-7B’s ability to predict missing code segments within a function or across files gives it an advantage in real-world programming tasks.
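The difference between plain FIM and a structure-aware variant can be sketched as follows. This is only an illustration of the idea, not the authors' implementation: the sentinel strings, the prefix-suffix-middle layout, and the choice of Python's `ast` module to find syntactic units are all assumptions made here.

```python
import ast
import random

# Placeholder sentinels (assumption): the article does not name the special
# tokens aiXcoder-7B uses.
PRE, SUF, MID = "<fim_prefix>", "<fim_suffix>", "<fim_middle>"


def line_starts(source):
    """Character offset at which each line of `source` begins."""
    starts, pos = [], 0
    for line in source.splitlines(keepends=True):
        starts.append(pos)
        pos += len(line)
    return starts


def random_fim(source, rng):
    """Plain FIM: hide an arbitrary character span, ignoring syntax."""
    a, b = sorted(rng.sample(range(len(source) + 1), 2))
    return source[:a], source[a:b], source[b:]


def structured_fim(source, rng):
    """Structure-aware variant: hide exactly one complete AST node.

    Assumes ASCII source, because `col_offset` counts UTF-8 bytes.
    """
    starts = line_starts(source)
    nodes = [n for n in ast.walk(ast.parse(source))
             if isinstance(n, (ast.stmt, ast.expr))]
    node = rng.choice(nodes)
    a = starts[node.lineno - 1] + node.col_offset
    b = starts[node.end_lineno - 1] + node.end_col_offset
    return source[:a], source[a:b], source[b:]


def to_training_text(prefix, middle, suffix):
    """Prefix-suffix-middle layout: the model sees both sides, then fills in."""
    return f"{PRE}{prefix}{SUF}{suffix}{MID}{middle}"
```

With `random_fim`, the hidden span can start or end in the middle of an identifier. With `structured_fim`, the hidden span is always a whole statement or expression, so the target the model learns to produce is a syntactically complete unit.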

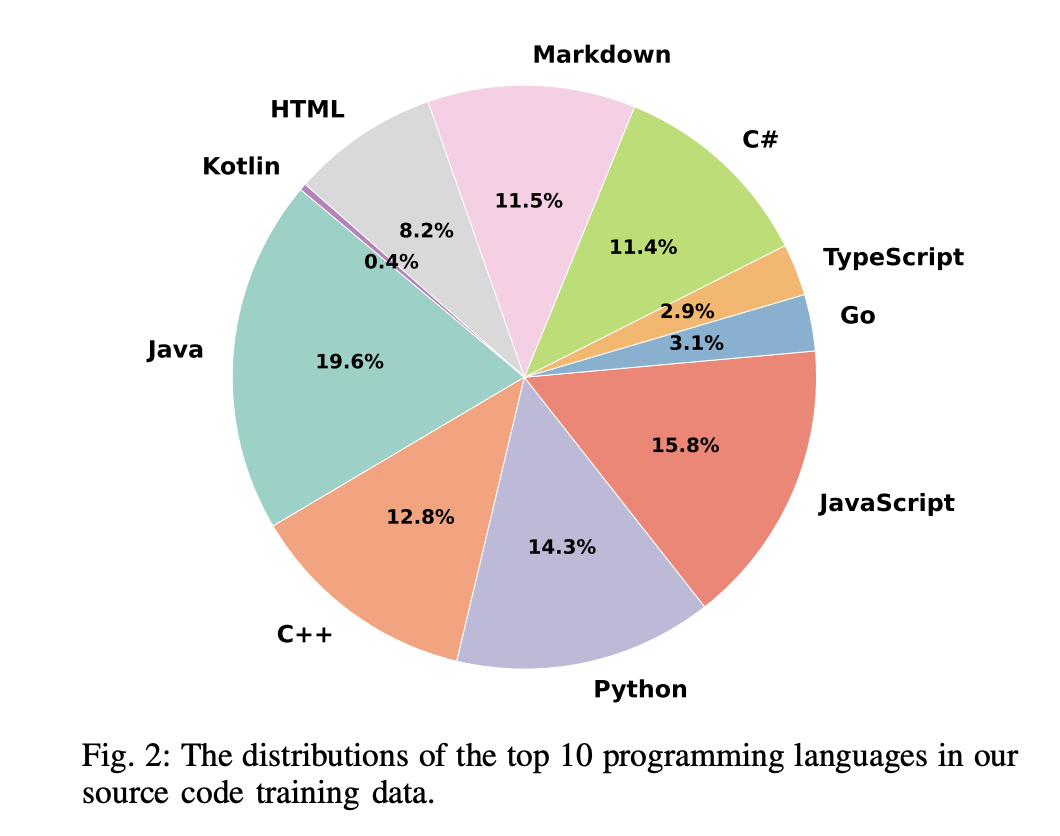

aiXcoder-7B was trained on an extensive dataset of 1.2 trillion unique tokens, assembled through a rigorous data collection pipeline of crawling, cleaning, deduplication, and quality checks. The dataset included 3.5TB of source code from various programming languages, ensuring the model could handle multiple languages, including Python, Java, C++, and JavaScript. To further enhance its performance, the team used diverse data sampling strategies, such as sampling based on file content similarity, inter-file dependencies, and file path similarities. These strategies helped the model understand cross-file contexts, which is crucial for tasks where code completion depends on references spread across multiple files.
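Two of these steps, deduplication and ordering files by inter-file dependencies, can be sketched in a few lines. The article does not describe the actual algorithms, so everything below is an assumption: exact-match hashing stands in for deduplication, a simple regular expression stands in for dependency analysis, and the function names are hypothetical.

```python
import hashlib
import re
from graphlib import TopologicalSorter
from pathlib import PurePosixPath

# Naive import detector for Python files (assumption: a real pipeline would
# use proper per-language dependency analysis).
IMPORT_RE = re.compile(r"^\s*(?:from|import)\s+([A-Za-z_]\w*)", re.MULTILINE)


def deduplicate(files):
    """Keep the first file for each distinct content hash (exact-match dedup)."""
    seen, kept = set(), {}
    for path, text in files.items():
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept[path] = text
    return kept


def order_by_dependencies(files):
    """Order files so that each file comes after the files it imports."""
    by_module = {PurePosixPath(path).stem: path for path in files}
    graph = {}
    for path, text in files.items():
        deps = {by_module[m] for m in IMPORT_RE.findall(text) if m in by_module}
        deps.discard(path)  # ignore self-references
        graph[path] = deps
    # static_order() yields predecessors (dependencies) before dependents.
    return list(TopologicalSorter(graph).static_order())
```

Concatenating files in this order places a definition earlier in the training sequence than the code that uses it, which is one plausible way to expose a model to cross-file context. Note that `TopologicalSorter` raises `CycleError` on circular imports, so a production pipeline would need a fallback for that case.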

aiXcoder-7B outperformed six LLMs of similar size on six different benchmarks. Notably, on the HumanEval benchmark it achieved a Pass@1 score of 54.9%, outperforming even larger models like CodeLlama-34B (48.2%) and StarCoder2-15B (46.3%). On another benchmark, FIM-Eval, aiXcoder-7B demonstrated strong generalization abilities across different types of code, achieving superior performance in languages like Java and Python. Its ability to generate code that closely matches human-written code, both in style and length, further distinguishes it from competitors. For instance, in Java, the code aiXcoder-7B generated was only 0.97 times the length of the human-written code, whereas other models generated much longer code.
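For readers unfamiliar with the metric, Pass@k is commonly computed with the unbiased estimator introduced alongside HumanEval: generate n samples per problem, count the c samples that pass the unit tests, and estimate the probability that at least one of k randomly drawn samples passes. The sketch below implements that standard formula; the article does not say how many samples per problem were used for the scores above, so the numbers in the usage note are made up.

```python
from math import comb


def pass_at_k(n, c, k):
    """Unbiased pass@k for one problem: 1 - C(n - c, k) / C(n, k).

    n: samples generated, c: samples that passed the tests, k: draw size.
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) is 0 when fewer than k samples failed, giving 1.0.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results, k):
    """Average pass@k over a list of (n, c) pairs, one pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

For k = 1 the estimator reduces to c / n, so `pass_at_k(10, 3, 1)` is about 0.3, and a benchmark-level Pass@1 of 54.9% means that, averaged over problems, a single generated sample passes the tests 54.9% of the time.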

aiXcoder-7B showcases the potential for creating smaller, faster, and more efficient LLMs without sacrificing accuracy. Its performance across multiple benchmarks and programming languages positions it as a strong tool for developers who need reliable, real-time code completion. The combination of multi-objective training, a vast dataset, and innovative sampling techniques has allowed aiXcoder-7B to set a new standard for lightweight LLMs in this domain.

In conclusion, aiXcoder-7B addresses a critical gap in the field of LLMs for code completion by offering a highly efficient and accurate model. The research behind the model highlights several key takeaways that can guide future development in this area:

- Uses only seven billion parameters, keeping the model efficient without sacrificing accuracy.

- Utilizes multi-objective training, including SFIM, to improve prediction capabilities.

- Trained on 1.2 trillion tokens with a comprehensive data collection process.

- Outperforms larger models in benchmarks, achieving 54.9% Pass@1 in HumanEval.

- Generates code that closely mirrors human-written code in both style and length.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post aiXcoder-7B: A Lightweight and Efficient Large Language Model Offering High Accuracy in Code Completion Across Multiple Languages and Benchmarks appeared first on MarkTechPost.