Generative AI and Large Language Models (LLMs) have burst onto the scene, introducing us to “copilots,” “chatbots,” and the increasingly pivotal “AI agents.” These advancements unfold at breakneck speed, making it challenging to keep up.

We’ve been at the forefront of this revolution, witnessing how AI agents—or “agentic workflows,” as Andrew Ng refers to them—are becoming essential components of LLM-based applications. These agents are not just theoretical concepts; they’re practical tools enabling everything from natural language interactions with databases to generating entire software projects from simple requirements.

In this article, we’ll dive into AI agents’ fundamentals and explore their vast possibilities. Whether you’re a business leader aiming to stay ahead of the curve or a technical professional seeking deeper insights, we’ll provide a concrete and engaging look into how AI agents can be leveraged effectively.

TL;DR – What You Should Remember from This Article

We believe everyone will feel more confident about AI agents after reading this blog post, regardless of their level of expertise or whether they work in a business or technical role. Even if you consider yourself an AI agents Jedi, take a look at our main insights below, gathered while implementing agentic systems in both internal R&D and commercial projects. They might still hold something new for you.

- We are already seeing many use cases where AI agents surpass pure LLM applications. One such use case is interacting with databases using natural language.

- AI agents have unlocked new, previously impossible applications, such as performing a broad spectrum of tasks in the browser and generating entire software projects from requirements.

- Artificial General Intelligence (AGI) is not here yet, and very general, fully autonomous AI systems don’t perform reliably enough. Nevertheless, a well-designed product that combines a degree of autonomy (where it is reliable) with human supervision can be very successful.

- Anticipate breakthroughs in AI agents: their rapid improvement suggests a trajectory of increasing capability. Design your products with this potential in mind to stay ahead.

- As we stated in our previous blog post, specialization beats generalization: a group of specialized agents performs much better than one general agent. More specialized agents can also run on less powerful LLMs, which leads to cost savings.

- While some agentic design patterns are no-brainers, others trade performance gains against costs in complexity, time, or resources. Add them to your system one at a time, measuring the performance improvement and deciding whether it justifies the added cost and complexity.

- Without a proper evaluation pipeline and clearly defined goals, building AI agents is like alchemy: you never know whether a change actually improved performance.
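To make the last two points concrete, here is a minimal sketch of an evaluation loop that decides whether a new pattern earns its place. It assumes a small, hypothetical labeled set where each question is paired with the tool the agent should pick; `baseline_router` and `router_with_new_pattern` are illustrative stand-ins for LLM calls, and the 0.05 margin is an arbitrary example threshold.

```python
from typing import Callable

# Hypothetical labeled evaluation set: (user question, tool the agent should pick).
EVAL_SET: list[tuple[str, str]] = [
    ("How many employees are in Sales?", "count_employees"),
    ("Who is on holiday this week?", "list_holidays"),
    ("How many people work in IT?", "count_employees"),
]

def evaluate(system: Callable[[str], str], dataset: list[tuple[str, str]]) -> float:
    """Fraction of examples where the system's choice matches the expected label."""
    correct = sum(1 for question, expected in dataset if system(question) == expected)
    return correct / len(dataset)

def baseline_router(question: str) -> str:
    # Stand-in for the current system (in practice, an LLM call).
    return "count_employees" if "how many" in question.lower() else "unknown"

def router_with_new_pattern(question: str) -> str:
    # Stand-in for the same system after adding one new component or pattern.
    q = question.lower()
    if "holiday" in q:
        return "list_holidays"
    return "count_employees" if "how many" in q else "unknown"

baseline_score = evaluate(baseline_router, EVAL_SET)
candidate_score = evaluate(router_with_new_pattern, EVAL_SET)
# Keep the change only if the gain justifies its cost (0.05 is an arbitrary example margin).
keep_change = candidate_score - baseline_score > 0.05
print(f"baseline={baseline_score:.2f}, candidate={candidate_score:.2f}, keep={keep_change}")
```

This prints `baseline=0.67, candidate=1.00, keep=True`. With a real system the evaluation set would be much larger and drawn from your own domain, but the decision rule stays the same: measure before and after each change.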

What Is an AI Agent

As more companies integrate LLMs into their workflows, they encounter various limitations such as low reliability, unpredictability, and high costs. Fortunately, the community has introduced numerous remedies to address these challenges. In the two years since ChatGPT’s introduction, a plethora of LLM design patterns has emerged, and one of the most recent and most discussed is AI agents.

When we refer to an “AI agent,” we’re describing a system that integrates multiple components to achieve superior results. The main element is the LLM Controller, which acts as the “brain” of the agent, making decisions and interacting with other components, such as:

- Planner: Creates step-by-step plans by decomposing complex tasks into smaller ones. Many planning techniques exist, offering trade-offs between flexibility and reliability. LLM planners give more adaptable solutions, while completely predefined plans provide greater reliability. The two can also be combined for a balanced approach.

- Memory: The history of previous steps and additional user or environment context that was learned along the way.

- Tools: The agent has the capability to call external APIs or functions to get extra information or to perform given tasks, e.g., filling out and submitting a form on a registration page.
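The components listed above can be sketched in a few lines. This is a minimal, hypothetical illustration rather than any particular framework: `search_docs` and `fill_form` are stub tools, `predefined_planner` is a fixed plan (the reliable end of the planning spectrum), and `controller` stands in for the LLM Controller, which in a real agent would consult memory and decide at each step whether to follow, adjust, or stop the plan.

```python
from dataclasses import dataclass, field
from typing import Callable

# Tools: plain functions the controller may call (hypothetical stubs).
def search_docs(query: str) -> str:
    return f"search results for '{query}'"

def fill_form(data: str) -> str:
    return f"form submitted with {data}"

TOOLS: dict[str, Callable[[str], str]] = {"search_docs": search_docs, "fill_form": fill_form}

@dataclass
class Memory:
    """History of previous steps plus context learned along the way."""
    steps: list[tuple[str, str]] = field(default_factory=list)

    def add(self, action: str, result: str) -> None:
        self.steps.append((action, result))

def predefined_planner(task: str) -> list[tuple[str, str]]:
    """A fully predefined plan: reliable but inflexible. An LLM planner could replace it."""
    return [("search_docs", task), ("fill_form", f"details for {task}")]

def controller(task: str) -> Memory:
    """Stand-in for the LLM Controller: executes the plan step by step, recording results."""
    memory = Memory()
    for tool_name, tool_input in predefined_planner(task):
        # A real controller would inspect memory.steps here before acting.
        result = TOOLS[tool_name](tool_input)
        memory.add(tool_name, result)
    return memory

memory = controller("registration for a conference")
for action, result in memory.steps:
    print(action, "->", result)
```

Swapping `predefined_planner` for an LLM-generated plan moves the system toward flexibility. Keeping the tool registry explicit limits the agent to actions you have vetted.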

It’s also worth mentioning several AI Agents Design Patterns that have emerged in recent months, helping us take our AI agent-based applications to the next level. Here are the three most common:

- Supervision: This pattern is built from two types of agents: a Supervisor (also called a Router) and Worker (Specialist) agents. The Router picks which worker performs the next task and decides when processing is finished.

- Reflection: We prompt the LLM to reflect on and critique its past actions so it can improve its output.

- Collaboration: Agents share common memories and work together on specific problems, each contributing as a specialist in a given area.
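As a rough illustration of the Supervision and Collaboration patterns together, the sketch below routes work between three hypothetical workers that read from and write to one shared memory. The `supervisor` function uses simple rules where a real system would call an LLM, and all worker names and outputs are made-up stand-ins.

```python
from typing import Callable, Optional

# Worker (specialist) agents: hypothetical stand-ins for LLM calls with specialized prompts.
def researcher(task: str, shared: list[str]) -> str:
    return f"notes on {task}"

def writer(task: str, shared: list[str]) -> str:
    # Collaboration: the writer builds on what another agent stored in shared memory.
    return f"draft based on: {shared[-1]}"

def reviewer(task: str, shared: list[str]) -> str:
    return "approved" if shared[-1].startswith("draft") else "needs work"

WORKERS: dict[str, Callable[[str, list[str]], str]] = {
    "researcher": researcher,
    "writer": writer,
    "reviewer": reviewer,
}

def supervisor(shared: list[str]) -> Optional[str]:
    """Router: picks the next worker, or returns None to finish. An LLM would decide this."""
    if not shared:
        return "researcher"
    if shared[-1].startswith("notes"):
        return "writer"
    if shared[-1].startswith("draft"):
        return "reviewer"
    return None  # the reviewer has given its verdict, so processing ends

def run(task: str, max_steps: int = 10) -> list[str]:
    shared: list[str] = []  # common memory shared by all workers
    for _ in range(max_steps):  # hard cap guards against routing loops
        worker_name = supervisor(shared)
        if worker_name is None:
            break
        shared.append(WORKERS[worker_name](task, shared))
    return shared

print(run("AI agents in retail"))
```

The `max_steps` cap is a deliberate design choice: when routing is delegated to an LLM, a hard limit keeps a confused supervisor from looping indefinitely.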

LLM agentic systems are built from a mix of LLM agents and agentic design patterns, both of which draw on a rapidly evolving set of techniques.

Agentic systems represent the next step in the utilization of Large Language Models. By incorporating agentic workflows into your business:

- Existing applications using LLMs can benefit significantly.

- Completely new use cases can emerge.

- The performance of non-LLM pipelines can be improved.

Differences Between AI Copilots, Chatbots, Autonomous Agents, and Decision Recommendation Systems

The plethora of options and techniques related to AI agents can be overwhelming. From a business perspective, however, what matters is what these agents can do, not how they do it. Understanding the possibilities and limitations becomes easier after examining the different types of AI agents being developed.

We often encounter terms like co-pilot, chatbots, and agents, but what truly sets them apart? Let’s explore the distinctions.

Just as backend and frontend separation is beneficial for web applications, it is also advantageous for LLM-based applications. The backend handles the heavy lifting and is an ideal place to use agentic systems to achieve previously impossible tasks. The frontend’s role is to constantly:

- Allow users to provide input.

- Send this input to the backend.

- Parse backend output and display the results.

At the third step, the system can:

- Interact with the user’s environment (a web page or an application’s front end, user database info)

- Collaborate with the user, helping them solve their problem

Given these two criteria, we can imagine a 2D space in which all LLM-based applications reside.

When designing an LLM-based application, we can ask how collaborative it should be and how much it should influence the user’s environment; answering these questions facilitates the planning phase.

Imagine you want to build an LLM application for your video processing software. In the corners of our 2D space, you could have:

- Copilot: Suggests different effects, helps with splitting the video, and answers questions about software functionality. It assists during the process but does not automate it.

- Chatbot: Similar to a copilot, it helps but only resides in a chat window, providing text or image hints.

- Autonomous Agentic System: Takes a query like “Take these 5 clips and create a 20-second Instagram reel that is…” and outputs a ready video clip, using the video processing software’s interface.

- Decision Recommendation System: Provides functionality like virality scores, taking a video as input and outputting a score.

Differences between LLM frontends, illustrated with a stock exchange example. The same backend data, generated by another agentic system, can be presented in different ways to provide different user experiences.
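The frontend/backend split described in this section can be sketched as one frontend cycle: take user input, send it to the backend, then parse the result into a message for the user (collaboration) and actions applied to their environment. This is a minimal sketch for the video-editing example; the JSON schema with `message` and `actions` keys, the `apply_effect` action, and the dict standing in for the editor state are all hypothetical.

```python
import json

def backend(user_input: str) -> str:
    """Stand-in for the agentic backend; returns JSON in a hypothetical schema."""
    response = {
        "message": f"I added a fade-in effect as requested: '{user_input}'.",
        "actions": [{"type": "apply_effect", "target": "clip_1", "effect": "fade_in"}],
    }
    return json.dumps(response)

def frontend_turn(user_input: str, environment: dict[str, list[str]]) -> str:
    """One frontend cycle: take input, call the backend, parse and apply the result."""
    raw = backend(user_input)        # send the input to the backend
    output = json.loads(raw)         # parse the backend output
    for action in output.get("actions", []):
        # Interact with the user's environment (a dict standing in for the editor state).
        if action["type"] == "apply_effect":
            environment.setdefault(action["target"], []).append(action["effect"])
    return output["message"]         # collaborate: tell the user what happened

editor_state: dict[str, list[str]] = {"clip_1": []}
reply = frontend_turn("add a fade-in to the first clip", editor_state)
print(reply)
print(editor_state)
```

In these terms, a chatbot-style frontend would render only `message`, while a more autonomous one would rely mostly on `actions`; the backend can stay the same in both cases.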

Use cases

Now that we understand what AI agents are, let’s explore some of their most interesting applications.

Web Agents

Nowadays, almost anything can be accomplished with an internet connection and a web browser: ordering food, starting a company, managing financial transactions, conducting market research, or even collaborating on complex projects. Whether it’s personal tasks or business operations, the possibilities are virtually endless!

But all of these tasks usually require you to visit the page, browse through the available options, fill in all the necessary data, and make payments, which takes time. And we all value our time. The good news is that with LLM-powered agents, most of these tedious tasks could be automated.

We’ve already seen a couple of available solutions such as:

- WebVoyager is an innovative web agent powered by a Large Multimodal Model (LMM) that can complete user instructions end-to-end by interacting with real-world websites.

- Agent-E is an agent-based system that aims to automate actions on the user’s computer. At the moment, it focuses on automation within the browser. Agent-E is newer and is currently the state-of-the-art (SOTA) solution for web agents.

Both have been evaluated on the WebVoyager dataset. You can see example tasks and results below.

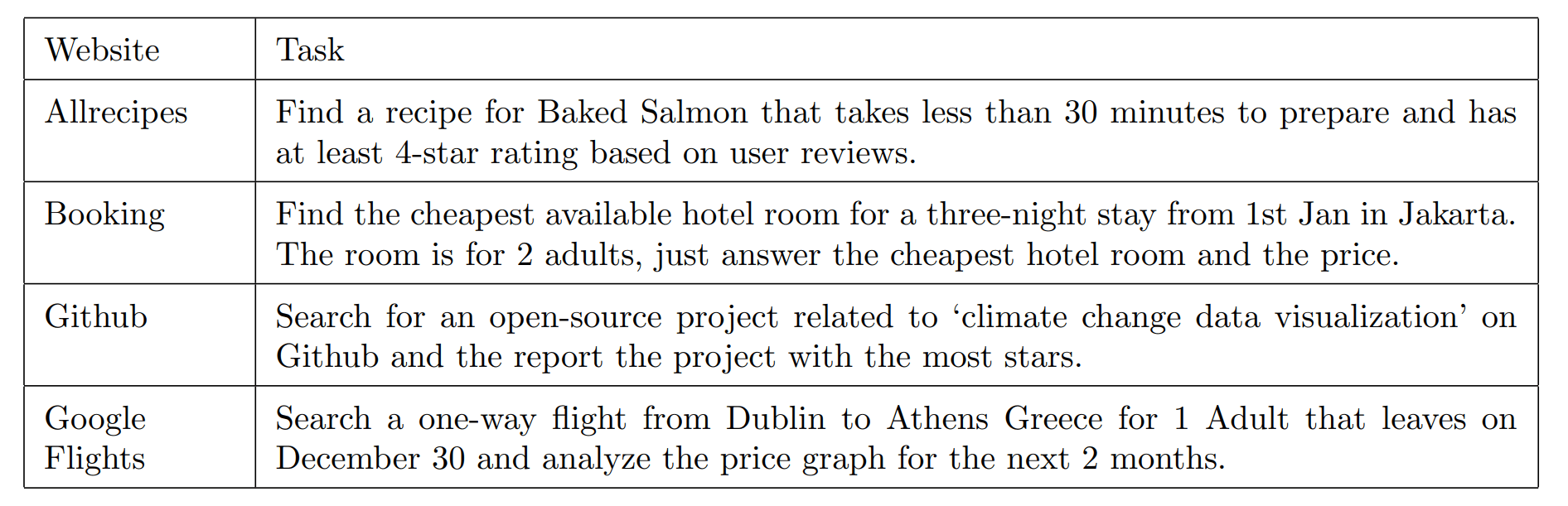

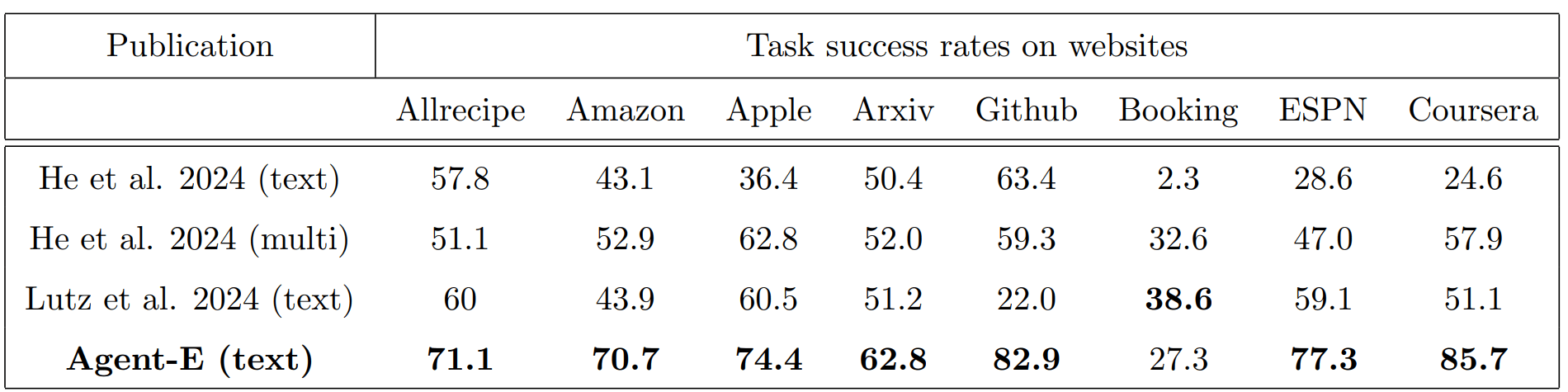

Example tasks from the WebVoyager dataset. Source: https://arxiv.org/pdf/2407.13032

Performance of different web agents on the WebVoyager dataset: WebVoyager (He et al. 2024), WILBUR (Lutz et al. 2024), and Agent-E. Source: https://arxiv.org/pdf/2407.13032

Remember that these AI agents are still evolving: they are not perfect, but they are improving. Importantly, their current design is broad in scope, which makes them flexible, as they can handle a wide range of tasks and queries across various domains. This broad capability is a significant advantage, and as they continue to improve, their reliability should increase too. For now, a much more reliable system can be achieved by limiting the agent’s scope to the most important business operations and developing more specialized agents that focus on specific tasks. For instance, we could create an agent dedicated solely to conducting market research.

Sequence diagram of the interaction between the user, the agentic system, and the gooddoctor.com page while making an appointment.
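The observe, decide, act loop behind such an interaction, restricted to a narrow scope as recommended above, might look roughly like this. It is a toy sketch, not how WebVoyager or Agent-E are implemented: `FakePage` is a hypothetical stand-in for a real browser page with an appointment form, its field names are invented, and `decide` replaces the LLM or LMM that would normally read the page and choose an action.

```python
from dataclasses import dataclass, field

# A narrowly scoped agent may only use these actions (hypothetical action set).
ALLOWED_ACTIONS = {"click", "type", "done"}

@dataclass
class FakePage:
    """Toy stand-in for a browser page containing an appointment form."""
    fields: dict[str, str] = field(default_factory=lambda: {"name": "", "date": ""})
    submitted: bool = False

    def observe(self) -> dict:
        return {"fields": dict(self.fields), "submitted": self.submitted}

    def act(self, action: str, target: str, value: str = "") -> None:
        if action == "type":
            self.fields[target] = value
        elif action == "click" and target == "submit":
            self.submitted = True

def decide(obs: dict, request: dict[str, str]) -> tuple[str, str, str]:
    """Stand-in for the LLM/LMM policy: choose the next action from the observation."""
    for name, value in request.items():
        if obs["fields"].get(name) != value:
            return ("type", name, value)
    if not obs["submitted"]:
        return ("click", "submit", "")
    return ("done", "", "")

def run_agent(page: FakePage, request: dict[str, str], max_steps: int = 10) -> list[tuple[str, str, str]]:
    trajectory = []
    for _ in range(max_steps):
        action = decide(page.observe(), request)
        if action[0] not in ALLOWED_ACTIONS:  # enforce the agent's limited scope
            raise ValueError(f"action {action[0]!r} is outside the agent's scope")
        trajectory.append(action)
        if action[0] == "done":
            break
        page.act(*action)
    return trajectory

page = FakePage()
steps = run_agent(page, {"name": "Jane Doe", "date": "2024-10-01"})
print(steps)
```

Checking every proposed action against an allowlist is one simple way to turn a general browsing agent into a specialized, more predictable one.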

We have extensive experience building these types of agents. If you’re interested in learning more, we’ll be sharing details about WebAgents in our next article. Stay tuned!

Coding Agents

Another promising application of LLM-based agents goes one step further than collaborative tools like Copilot or ChatGPT: an autonomous agent capable of creating an entire software engineering project solely from requirements written in natural language. We already have solutions such as:

- Genie – developed by Cosine.

- SWE-agent – built and maintained by researchers from Princeton University.

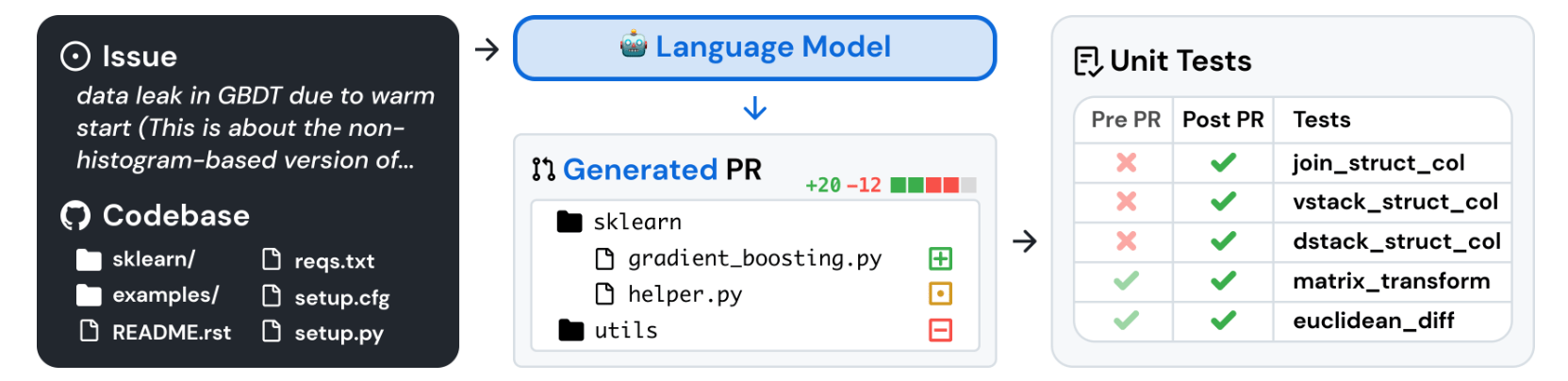

Both have been evaluated on SWE-bench, a benchmark for evaluating large language models on real-world software issues collected from GitHub. Given a codebase and an issue, a language model must generate a patch that resolves the described problem.

SWE-bench collects 2,294 Issue-Pull Request pairs from 12 popular Python repositories. Source: https://www.swebench.com
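How a percentage like the ones below is computed can be sketched as follows. In SWE-bench, an instance counts as resolved when, after the generated patch is applied, the tests the issue is about (FAIL_TO_PASS) now pass and the previously passing tests (PASS_TO_PASS) still pass. The sketch assumes the test outcomes have already been collected; the instance IDs and test names are made up, and the real harness runs tests in isolated environments.

```python
from dataclasses import dataclass

@dataclass
class InstanceResult:
    """Test outcomes after applying a model-generated patch to one repository snapshot."""
    instance_id: str
    fail_to_pass: dict[str, bool]  # tests the issue is about: must pass after the patch
    pass_to_pass: dict[str, bool]  # tests that passed before: must still pass

def is_resolved(r: InstanceResult) -> bool:
    return all(r.fail_to_pass.values()) and all(r.pass_to_pass.values())

def resolve_rate(results: list[InstanceResult], total_instances: int) -> float:
    # Instances without a patch count as unresolved, so divide by the full benchmark size.
    return sum(is_resolved(r) for r in results) / total_instances

# Made-up outcomes for three hypothetical instances.
results = [
    InstanceResult("example__repo-1", {"test_bug_fixed": True}, {"test_existing": True}),
    InstanceResult("example__repo-2", {"test_bug_fixed": False}, {"test_existing": True}),
    InstanceResult("example__repo-3", {"test_bug_fixed": True}, {"test_existing": False}),
]
rate = resolve_rate(results, total_instances=len(results))
print(f"resolved: {rate:.1%}")
```

The third instance shows why both test groups matter: a patch that fixes the bug but breaks existing behavior does not count. On the full set of 2,294 instances, a 12.5% resolve rate corresponds to about 287 resolved issues.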

Genie scored over 30% on this benchmark, while SWE-agent powered by GPT-4 scored nearly 12.5%. Bear in mind, though, that the former is a closed enterprise solution, while the latter is open source, MIT-licensed, and free to use. As these numbers show, while completing projects end-to-end solely from requirements with an autonomous agent holds great promise, we’re not there yet, and a lot of work remains to be done.

Note: OpenAI recently released a new benchmark, SWE-bench Verified, so we might soon see some new, interesting results!

We also have several other popular solutions available, such as AutoGPT, gpt-engineer, and the recently announced Replit Agent. While they seem very promising, they don’t provide benchmark results, so we don’t know their true value, and each use case has to be evaluated individually.

In our opinion, tools like Copilot, which help developers rather than replace them, currently offer the most value. IDEs natively powered by LLMs, such as Zed and Cursor, exemplify this approach. Although these are the best solutions available now, we believe that more autonomous agents, like Genie or SWE-agent, will soon surpass copilots and provide even more value.

If you want to learn more about coding agents, we encourage you to read our previous blog posts on this topic: “AI Agents in LLMs: Introduction to Coding Agents” and “How to Build Your Own Coding Agent: Our Process.”

Specialized Database Agent

General-purpose AI agents that can be prompted to solve any task often prove unreliable and can perform unwanted, potentially dangerous actions. When creating Text2SQL-based solutions (systems that translate a natural-language query into a database query) for clients, we found that solving specific cases reliably brings more business value than handling general cases unreliably.

To achieve high reliability, we utilized very concrete implementations of agentic behaviors:

- A set of tools for interacting with the database that the LLM can automatically select. Instead of generating pure SQL, which can be dangerous, the LLM selects predefined business actions.

- A contextualization component that remembers facts about users and later uses them to disambiguate questions such as “Does anyone from my department take holidays this week?” This can be thought of as a memory component.

- Encapsulation of an expert’s domain knowledge and domain vocabulary inside the tools.

- A system that uses vector similarity to map synonyms to the values stored in the database (e.g., recognizing that “New York” is represented as “NY” in the database).

- LLM-based reflection that provides feedback and potential fixes to the main agent when the query it generated fails to execute.

- An LLM agent that summarizes the table returned by the database into a user-friendly message.
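Three of the components listed above (predefined business actions, user context used as memory, and synonym matching) can be illustrated in miniature. This is a hypothetical, stdlib-only sketch, not the API of any particular library: it assumes an in-memory SQLite table, uses toy character-trigram vectors where a real system would use an embedding model, and replaces the LLM's choice of action and arguments with hard-coded calls.

```python
import sqlite3
from collections import Counter
from math import sqrt

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employees (name TEXT, department TEXT, city TEXT, on_holiday INTEGER);
INSERT INTO employees VALUES
  ('Alice', 'Sales', 'NY', 1), ('Bob', 'Sales', 'CA', 0), ('Carol', 'IT', 'NY', 0);
""")

# Predefined business actions: the LLM picks one plus arguments; it never writes SQL.
def count_employees_in_city(city: str) -> int:
    return conn.execute("SELECT COUNT(*) FROM employees WHERE city = ?", (city,)).fetchone()[0]

def colleagues_on_holiday(department: str) -> list[str]:
    rows = conn.execute(
        "SELECT name FROM employees WHERE department = ? AND on_holiday = 1 ORDER BY name",
        (department,),
    ).fetchall()
    return [name for (name,) in rows]

ACTIONS = {
    "count_employees_in_city": count_employees_in_city,
    "colleagues_on_holiday": colleagues_on_holiday,
}

# Synonym matching. Toy trigram vectors stand in for a real embedding model (assumption).
def embed(text: str) -> Counter:
    padded = f"  {text.lower()}  "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a)
    return dot / (sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values())))

# Each stored value is indexed with a description so that "New York" can match "NY".
CITY_INDEX = {"NY": "NY New York", "CA": "CA California"}

def normalize_city(phrase: str) -> str:
    query = embed(phrase)
    return max(CITY_INDEX, key=lambda value: cosine(query, embed(CITY_INDEX[value])))

# Memory: a remembered fact about the user, used to resolve "my department".
user_context = {"department": "Sales"}

# Suppose the LLM chose these actions and arguments for two user questions.
n_in_new_york = ACTIONS["count_employees_in_city"](normalize_city("New York"))
away = ACTIONS["colleagues_on_holiday"](user_context["department"])
print(n_in_new_york, away)
```

Because each action runs a fixed, parameterized query, the worst an LLM mistake can do is pick the wrong action or argument; it cannot issue arbitrary SQL against the database.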

To sum up, in our experience the most reliable solutions always incorporate multi-agent collaboration between non-LLM and LLM agents equipped with tools and memory, using patterns like reflection.
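The reflection step mentioned in this section can be illustrated with a small retry loop. This is a minimal sketch, assuming SQLite and two hypothetical stand-ins for LLM calls, `generate_sql` and `reflect`. When a query fails, the database error goes to a critic, and the critic's feedback goes into the next generation attempt. The generator emits raw SQL here only to keep the example short; the same loop applies when the agent selects predefined actions instead.

```python
import sqlite3
from typing import Optional

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, department TEXT)")
conn.executemany("INSERT INTO employees VALUES (?, ?)", [("Alice", "Sales"), ("Bob", "IT")])

def generate_sql(question: str, feedback: Optional[str]) -> str:
    """Stand-in for the main agent's LLM call; its first attempt uses a wrong column name."""
    if feedback is None:
        return "SELECT name FROM employees WHERE dept = 'Sales'"
    return "SELECT name FROM employees WHERE department = 'Sales'"

def reflect(sql: str, error: str) -> str:
    """Stand-in for an LLM critic that explains the failure and suggests a fix."""
    return f"The query `{sql}` failed with '{error}'. Check the column names in the schema."

def answer_with_reflection(question: str, max_attempts: int = 3) -> list[tuple]:
    feedback: Optional[str] = None
    for _ in range(max_attempts):
        sql = generate_sql(question, feedback)
        try:
            return conn.execute(sql).fetchall()
        except sqlite3.Error as exc:  # e.g. OperationalError: no such column
            feedback = reflect(sql, str(exc))  # passed into the next attempt
    raise RuntimeError(f"No valid query after {max_attempts} attempts. Last feedback: {feedback}")

print(answer_with_reflection("Who works in Sales?"))
```

Capping the number of attempts keeps latency and cost bounded; when the loop gives up, surfacing the last feedback to a human is usually more useful than a silent failure.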

Looking for a way to build a reliable AI agent for database queries? Drawing on this experience, we created db-ally, an efficient, consistent, and secure library for building agents that query structured data using natural language. It’s open source, so you can use it freely in your projects.

db-ally acts as a proxy between the LLM and the database, ensuring security and reliability.

The Future of AI Agents

It’s impossible to predict the future with 100% confidence, but we can make educated guesses. Here is what we think about agents:

- There is a new player in the game that can power our agents: large multimodal models (LMMs). By enabling access to new modalities like vision and speech, they open up a whole new realm of possibilities. For example, instead of relying solely on a text representation of a website, we can now feed the model a screenshot, giving it more information that can help it achieve a given goal.

- They will become increasingly present in our lives and our systems. This seems like the natural course of things, since AI agents currently remedy several shortcomings of pure LLM applications, such as:

  - LLMs lack awareness of events or developments that occur after their training cut-off date.

  - LLMs often struggle with accurate mathematical calculations and complex mathematical problems, leading to incorrect results.

  - LLMs cannot interact directly with external environments, such as web browsers or other software, which limits their ability to execute tasks that require such interactions.

- Breakthroughs will occur, and limits will be pushed. It’s important to prepare for a new wave of agent-powered applications by:

  - Identifying business processes that agents can automate or facilitate.

  - Thinking about gathering data that can help develop and evaluate agents on these tasks.
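One common remedy for the calculation shortcoming listed above is to give the agent a calculator tool: the model chooses the tool and writes the expression, and deterministic code does the arithmetic. The sketch below is an illustration under stated assumptions. The `tool_call` dictionary is a hypothetical shape for a model's tool request, and the evaluator walks Python's `ast` so that only plain arithmetic is accepted, never arbitrary code via `eval()`.

```python
import ast
import operator

# Operators the calculator accepts; any other syntax is rejected.
_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
    ast.Div: operator.truediv, ast.Pow: operator.pow, ast.USub: operator.neg,
}

def calculator(expression: str) -> float:
    """Evaluate an arithmetic expression by walking its syntax tree (no eval())."""
    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {ast.dump(node)}")
    return _eval(ast.parse(expression, mode="eval").body)

TOOLS = {"calculator": calculator}

# Hypothetical tool call emitted by an LLM: the model picks the tool and the expression,
# and the tool, not the model, computes the result.
tool_call = {"name": "calculator", "arguments": {"expression": "1234.5 * 0.23 + 17"}}
result = TOOLS[tool_call["name"]](**tool_call["arguments"])
print(result)
```

A production version would also cap exponent sizes, since expressions like `9 ** 9 ** 9` are valid arithmetic but expensive to compute.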

Conclusions

As AI continues to evolve, the role of AI agents will become even more essential in shaping how businesses operate and innovate. These agents, with their ability to handle complex workflows and execute tasks autonomously, offer a glimpse into the future of technology—a future where specialization and human supervision coexist to create powerful, efficient systems. Whether you’re building advanced applications or exploring new possibilities for automation, understanding and leveraging AI agents will be key to staying competitive in this rapidly advancing landscape.

Interested in learning more about how AI agents can enhance your workflows? Keep an eye out for our upcoming articles, where we’ll explore specific use cases and best practices to help you stay ahead in this evolving space.

The post Why and How to Build AI Agents for LLM Applications appeared first on deepsense.ai.