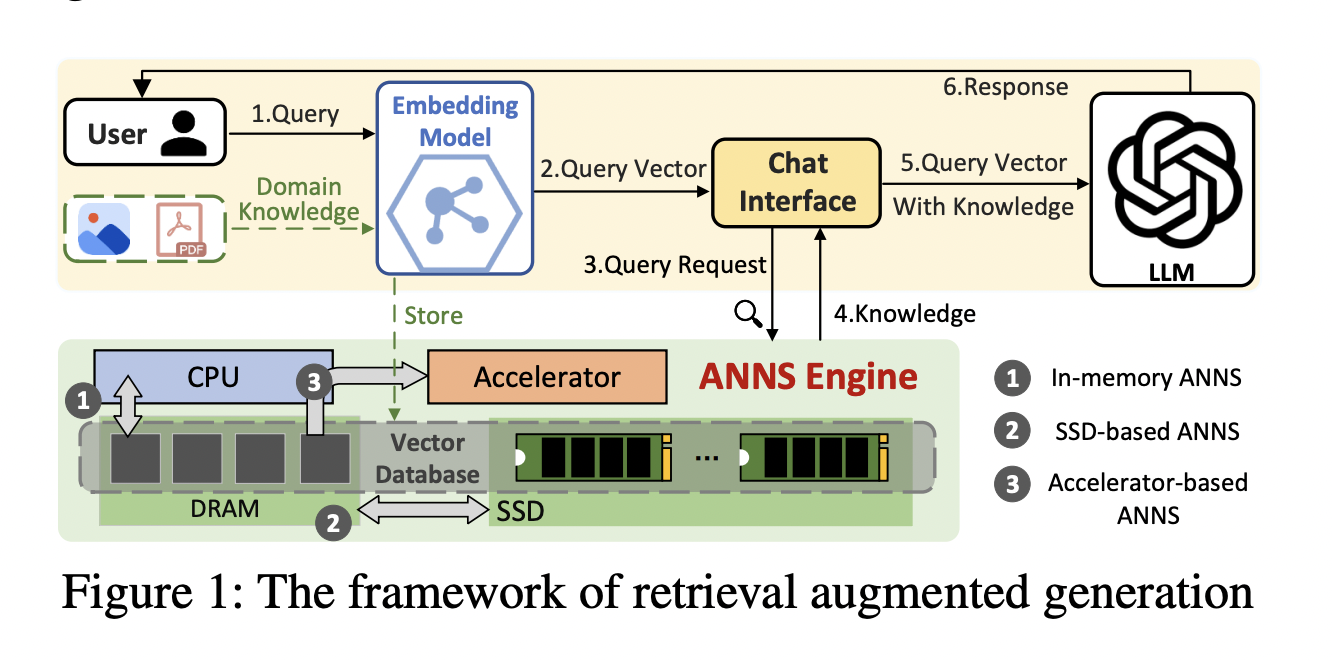

Approximate nearest neighbor search (ANNS) is a critical technology that powers various AI-driven applications such as data mining, search engines, and recommendation systems. The primary objective of ANNS is to identify the closest vectors to a given query in high-dimensional spaces. This process is essential in contexts where finding similar items quickly is crucial, such as image recognition, natural language processing, and large-scale recommendation engines. However, as data sizes increase to billions of vectors, ANNS systems face considerable challenges in terms of performance and scalability. Efficiently managing these datasets requires significant computational and memory resources, making it a highly complex and costly endeavor.

The main issue this research addresses is that existing ANNS solutions often need help to handle the immense scale of modern datasets while maintaining efficiency and accuracy. Traditional approaches are inadequate for billion-scale data because they demand high memory usage and computational power. Techniques like inverted file (IVF) and graph-based indexing methods have been developed to address these limitations. Still, they often require terabyte-scale memory, which makes them expensive and resource-intensive. Furthermore, the computational complexity of conducting massive distance calculations between high-dimensional vectors in such large datasets is a bottleneck for current ANNS systems.

In the current state of ANNS technology, memory-intensive methods such as IVF and graph-based indices are frequently employed to structure the search space. While these methods can improve query performance, they also significantly increase memory consumption, particularly for large datasets containing billions of vectors. Hierarchical indexing (HI) techniques and Product Quantization (PQ) have optimized memory usage by storing indices on SSDs and using compressed representations of vectors. However, these solutions can cause severe performance degradation due to the overhead introduced by data compression and decompression operations, which may lead to accuracy losses. Current systems like SPANN and RUMMY have demonstrated varying degrees of success but remain limited by their inability to balance memory consumption and computational efficiency.

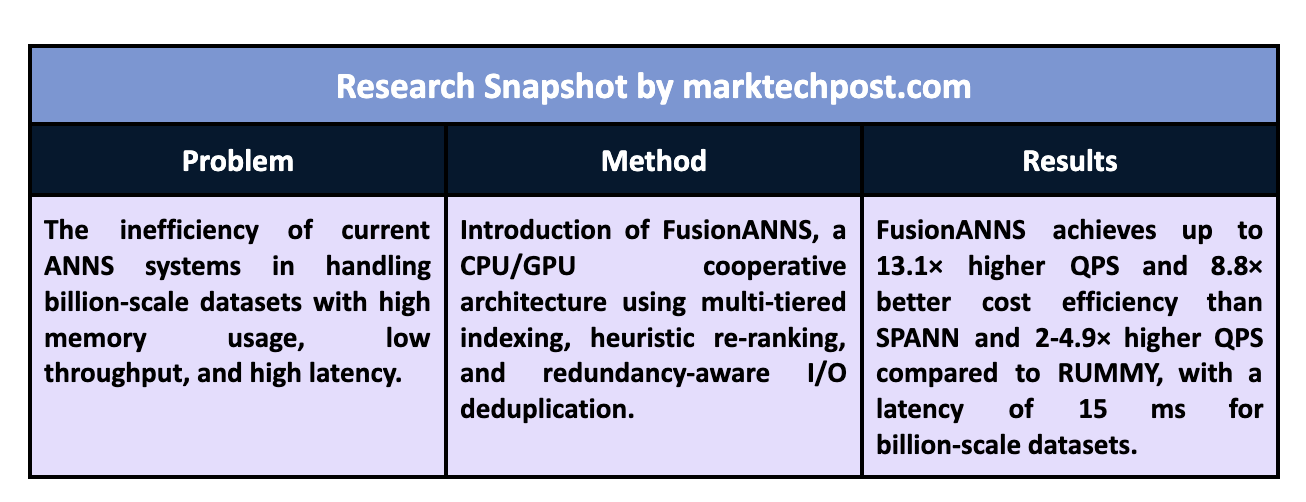

Researchers from Huazhong University of Science and Technology and Huawei Technologies Co., Ltd introduced FusionANNS, a new CPU/GPU collaborative processing architecture designed specifically for billion-scale datasets to tackle these challenges. FusionANNS utilizes an innovative multi-tiered index structure that leverages the strengths of both CPUs and GPUs. This architecture allows for high throughput and low-latency approximate nearest neighbor searches using only a single, entry-level GPU, making it a cost-effective solution. The researchers’ approach centers around three core innovations: multi-tiered indexing, heuristic re-ranking, and redundancy-aware I/O deduplication, which minimize data transmission across CPUs, GPUs, and SSDs to eliminate performance bottlenecks.

FusionANNS’s multi-tiered indexing structure enables CPU/GPU collaborative filtering by storing raw vectors on SSDs, compressed vectors in the GPU’s high-bandwidth memory (HBM), and vector identifiers in the host memory. This structure prevents excessive data swapping between CPUs and GPUs, significantly reducing I/O operations. Heuristic re-ranking further improves query accuracy by breaking the re-ranking process into multiple mini-batches and employing a feedback control mechanism to terminate unnecessary computations early. The final component, redundancy-aware I/O deduplication, groups vectors with high similarity into optimized storage layouts, reducing the number of I/O requests during re-ranking by 30% and eliminating redundant I/O operations through effective caching strategies.

Experimental results indicate that FusionANNS outperforms state-of-the-art systems like SPANN and RUMMY across various metrics. The system achieves up to 13.1× higher queries per second (QPS) and 8.8× higher cost efficiency compared to SPANN, and 2-4.9× higher QPS and 6.8× better cost efficiency compared to RUMMY. For a dataset containing one billion vectors, FusionANNS can handle the query process with a QPS of over 12,000 while keeping latency as low as 15 milliseconds. These results demonstrate that FusionANNS is highly effective for managing billion-scale datasets without extensive memory resources.

Key takeaways from this research include:

- Performance Improvement: FusionANNS achieves up to 13.1× higher QPS and 8.8× better cost efficiency than the state-of-the-art SSD-based system SPANN.

- Efficiency Gain: It provides 5.7-8.8× higher efficiency in handling SSD-based data access and processing.

- Scalability: FusionANNS can manage billion-scale datasets using only one entry-level GPU and minimal memory resources.

- Cost-effectiveness: The system shows a 2-4.9× improvement in cost efficiency compared to existing in-memory solutions like RUMMY.

- Latency Reduction: FusionANNS maintains a query latency of 15 milliseconds, significantly lower than other SSD-based and GPU-accelerated solutions.

- Innovation in Design: Using multi-tiered indexing, heuristic re-ranking, and redundancy-aware I/O deduplication are groundbreaking contributions that set FusionANNS apart from existing methods.

In conclusion, FusionANNS represents a breakthrough in ANNS technology by delivering high throughput, low latency, and superior cost efficiency. The researchers’ novel approach to CPU/GPU collaboration and multi-tiered indexing offers a practical solution for scaling ANNS to support large datasets. FusionANNS sets a new standard for handling high-dimensional data in real-world applications by reducing the memory footprint and eliminating unnecessary computations.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter..

Don’t Forget to join our 50k+ ML SubReddit.

We are inviting startups, companies, and research institutions who are working on small language models to participate in this upcoming ‘Small Language Models’ Magazine/Report by Marketchpost.com. This Magazine/Report will be released in late October/early November 2024. Click here to set up a call!

The post FusionANNS: A Next-Gen ANNS Solution that Combines CPU/GPU Cooperative Processing for Enhanced Performance, Scalability, and Cost Efficiency appeared first on MarkTechPost.