Reinforcement Learning (RL) excels at tackling individual tasks but struggles with multitasking, especially across different robotic forms. World models, which simulate environments, offer scalable solutions but often rely on inefficient, high-variance optimization methods. While large models trained on vast datasets have advanced generalizability in robotics, they typically need near-expert data and fail to adapt across diverse morphologies. RL can learn from suboptimal data, making it promising for multitask settings. However, methods like zeroth-order planning in world models face scalability issues and become less effective as model size increases, particularly in massive models like GAIA-1 and UniSim.

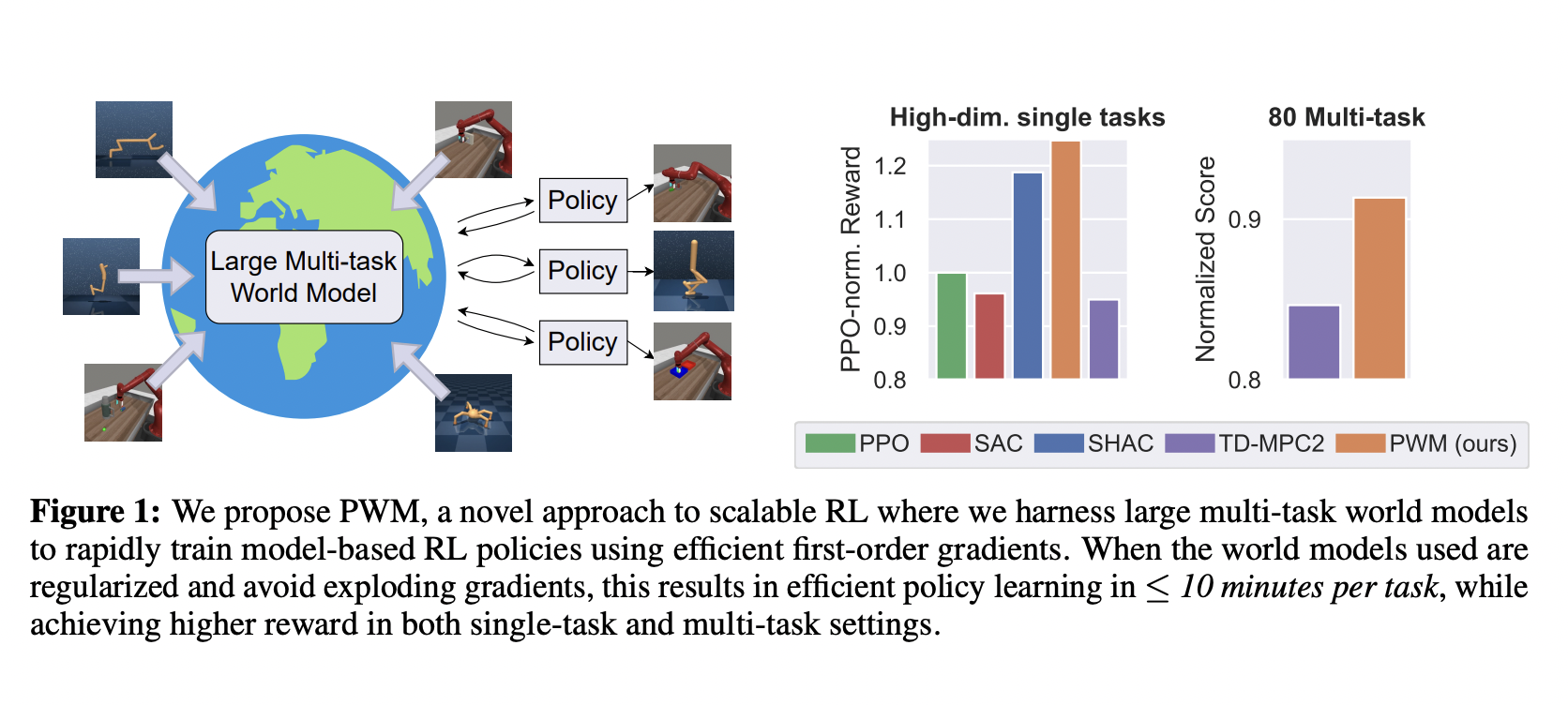

Researchers from Georgia Tech and UC San Diego have introduced Policy learning with large World Models (PWM), an innovative model-based reinforcement learning (MBRL) algorithm. PWM pretrains world models on offline data and uses them for first-order gradient policy learning, enabling it to solve tasks with up to 152 action dimensions. This approach outperforms existing methods by achieving up to 27% higher rewards without costly online planning. PWM emphasizes the utility of smooth, stable gradients over long horizons rather than mere accuracy. It demonstrates that efficient first-order optimization leads to better policies and faster training than traditional zeroth-order methods.

RL splits into model-based and model-free approaches. Model-free methods like PPO and SAC dominate real-world applications and employ actor-critic architectures. SAC uses First-order Gradients (FoG) for policy learning, offering low variance but facing issues with objective discontinuities. Conversely, PPO relies on zeroth-order gradients, which are robust to discontinuities but prone to high variance and slower optimization. Recently, the focus in robotics has shifted to large multi-task models trained via behavior cloning. Examples include RT-1 and RT-2 for object manipulation. However, the potential of large models in RL still needs to be explored. MBRL methods like DreamerV3 and TD-MPC2 leverage large world models, but their scalability could be improved, particularly with the growing size of models like GAIA-1 and UniSim.

The study focuses on discrete-time, infinite-horizon RL scenarios represented by a Markov Decision Process (MDP) involving states, actions, dynamics, and rewards. RL aims to maximize cumulative discounted rewards through a policy. Commonly, this is tackled using actor-critic architectures, which approximate state values and optimize policies. In MBRL, additional components such as learned dynamics and reward models, often called world models, are used. These models can encode true states into latent representations. Leveraging these world models, PWM efficiently optimizes policies using FoG, reducing variance and improving sample efficiency even in complex environments.

In evaluating the proposed method, complex control tasks were tackled using the flex simulator, focusing on environments like Hopper, Ant, Anymal, Humanoid, and muscle-actuated Humanoid. Comparisons were made against SHAC, which uses ground truth models, and TD-MPC2, a model-free method that actively plans at inference time. Results showed that PWM achieved higher rewards and smoother optimization landscapes than SHAC and TD-MPC2. Further tests on 30 and 80 multi-task environments revealed PWM’s superior reward performance and faster inference time than TD-MPC2. Ablation studies highlighted PWM’s robustness to stiff contact models and higher sample efficiency, especially with better-trained world models.

The study introduced PWM as an approach in MBRL. PWM utilizes large multi-task world models as differentiable physics simulators, leveraging first-order gradients for efficient policy training. The evaluations highlighted PWM’s ability to outperform existing methods, including those with access to ground-truth simulation models like TD-MPC2. Despite its strengths, PWM relies heavily on extensive pre-existing data for world model training, limiting its applicability in low-data scenarios. Additionally, while PWM offers efficient policy training, it requires re-training for each new task, posing challenges for rapid adaptation. Future research could explore enhancements in world model training and extend PWM to image-based environments and real-world applications.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter.

Join our Telegram Channel and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 46k+ ML SubReddit

The post Policy Learning with Large World Models: Advancing Multi-Task Reinforcement Learning Efficiency and Performance appeared first on MarkTechPost.