Multi-modal Large Language Models (MLLMs) have various applications in visual tasks. MLLMs rely on the visual features extracted from an image to understand its content. A low-resolution input image contains fewer pixels, so it gives these models less information to work with. As a result, they often struggle to accurately identify the objects, scenes, or actions in the image, which limits their effectiveness in visual tasks.
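A quick back-of-the-envelope calculation makes the resolution problem concrete. The sketch below assumes a CLIP-style ViT with 14×14-pixel patches and a 336×336 input, which is the setting LLaVA-1.5 uses. It also assumes a hypothetical 40-pixel object in a 1344×1344 photo. These numbers are for illustration only and are not taken from the MG-LLaVA paper.

```python
# Illustrative assumptions (not from the MG-LLaVA paper): a CLIP-style ViT with
# 14x14-pixel patches operating on a 336x336 input, as in LLaVA-1.5.
PATCH = 14
INPUT_SIZE = 336


def patch_grid(input_size: int, patch: int = PATCH) -> int:
    """Number of patches (visual tokens) along one side of a square input."""
    return input_size // patch


def object_size_after_resize(obj_px: int, original: int, target: int = INPUT_SIZE) -> float:
    """Side length in pixels of an object after the image is downscaled to `target`."""
    return obj_px * target / original


tokens = patch_grid(INPUT_SIZE) ** 2  # total visual tokens for the whole image
# Hypothetical 40-pixel object in a 1344x1344 photo, resized to the model input.
small = object_size_after_resize(obj_px=40, original=1344)

print(tokens)  # 576
print(small)   # 10.0
print(f"object spans {small / PATCH:.2f} of one patch side")
```

Under these assumptions the object shrinks to 10 pixels after resizing. That is smaller than a single 14-pixel patch, so the encoder has almost no dedicated capacity for it.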

Researchers from Shanghai Jiao Tong University, Shanghai AI Laboratory, and S-Lab at Nanyang Technological University have introduced MG-LLaVA, a novel MLLM designed to address the limitations of current Multi-modal Large Language Models (MLLMs) in processing low-resolution images. The key challenge is enabling these models to capture and use high-resolution and object-centric features for better visual perception and comprehension.

Current MLLMs typically use pre-trained Large Language Models (LLMs) to process concatenated visual and language embeddings, and models like LLaVA take low-resolution images as input. While these models have shown promise, their reliance on low-resolution inputs limits their ability to capture fine-grained details and recognize small objects in complex images. Researchers have proposed various enhancements to address this, including training on diverse datasets, using high-resolution images, and employing dynamic aspect ratios. However, these approaches often lack object-level features and multi-granularity inputs, which are crucial for comprehensive visual understanding.
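The LLaVA-style input pipeline described above fits in a few lines of NumPy. This is a minimal sketch with toy sizes and random weights. A projector maps vision-encoder features into the LLM's embedding space, and the projected features are concatenated with the text embeddings. LLaVA-1.5 uses a two-layer MLP projector, while the original LLaVA used a single linear layer. The function names, the dimensions, and the choice to place visual tokens before the text are assumptions made for illustration.

```python
import numpy as np

# Toy sizes (assumptions for illustration; real models are far larger):
# 576 visual tokens of width 64 from the vision encoder, an LLM embedding
# width of 128, and a 12-token text prompt.
N_VIS, D_VIS, D_LLM, N_TXT = 576, 64, 128, 12


def gelu(x):
    """GELU activation (tanh approximation)."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def mlp_projector(x, w1, b1, w2, b2):
    """Two-layer MLP mapping vision features into the LLM embedding space."""
    return gelu(x @ w1 + b1) @ w2 + b2


rng = np.random.default_rng(0)
vis_feats = rng.standard_normal((N_VIS, D_VIS))   # stand-in for ViT patch features
txt_embeds = rng.standard_normal((N_TXT, D_LLM))  # stand-in for text token embeddings
w1, b1 = rng.standard_normal((D_VIS, D_LLM)) * 0.02, np.zeros(D_LLM)
w2, b2 = rng.standard_normal((D_LLM, D_LLM)) * 0.02, np.zeros(D_LLM)

vis_tokens = mlp_projector(vis_feats, w1, b1, w2, b2)
# The LLM sees one sequence: here, visual tokens followed by text tokens.
llm_input = np.concatenate([vis_tokens, txt_embeds], axis=0)
print(llm_input.shape)  # (588, 128)
```

The visual tokens count toward the LLM's sequence length, so feeding in more image detail is not free. This is one reason the fusion step matters.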

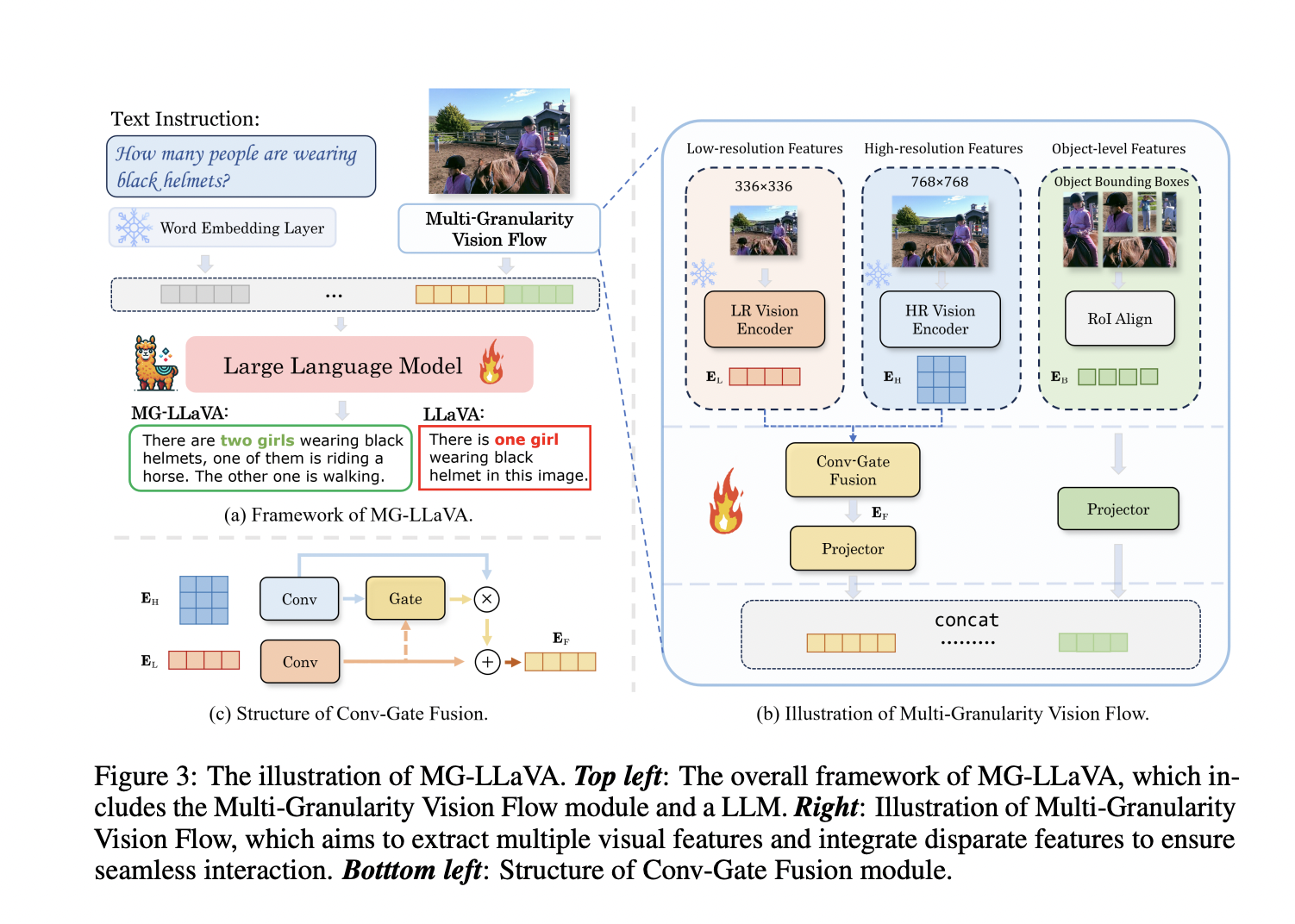

The proposed model, MG-LLaVA, significantly improves visual processing by incorporating a multi-granularity vision flow. This flow combines low-resolution, high-resolution, and object-centric features, which enhances the model's ability to capture fine-grained details and recognize objects. The framework builds on LLaVA's architecture. To that base, it adds a high-resolution visual encoder, a Conv-Gate fusion network for feature integration, and object-level features derived from bounding boxes identified by open-vocabulary detectors.

The MG-LLaVA architecture comprises two key components: the Multi-Granularity Vision Flow framework and a large language model. The Vision Flow framework processes each image at two resolutions. A CLIP-pretrained Vision Transformer (ViT) extracts the low-resolution features, and a CLIP-pretrained ConvNeXt extracts the high-resolution features. To fuse the two streams, the Conv-Gate fusion network aligns their channel widths and modulates the semantic information they carry, while keeping computation efficient.
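The paper defines the actual Conv-Gate network. The sketch below is a hypothetical simplification meant only to show the idea of aligning channel widths and gating high-resolution detail into low-resolution tokens. It makes several assumptions:

- the high-resolution map is average-pooled onto the ViT's token grid;
- per-position linear maps (equivalent to 1×1 convolutions) handle both channel alignment and the gate;
- the streams are fused as `low + gate * high`.

All function names, shapes, and the fusion rule are illustrative assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def avg_pool_to(feat, out_hw):
    """Average-pool a square (H, H, C) map to (out_hw, out_hw, C); H must be a multiple of out_hw."""
    h, w, c = feat.shape
    assert h == w and h % out_hw == 0
    k = h // out_hw
    return feat.reshape(out_hw, k, out_hw, k, c).mean(axis=(1, 3))


def conv_gate_fuse(low, high, w_align, w_gate, b_gate):
    """Hypothetical Conv-Gate-style fusion (a simplification, not the paper's exact network).

    low:  (H, H, C_low)    low-resolution ViT features
    high: (H2, H2, C_high) high-resolution ConvNeXt features, H2 a multiple of H
    """
    high = avg_pool_to(high, low.shape[0])  # resample onto the low-res token grid
    high = high @ w_align                   # 1x1 conv == per-position linear map to C_low
    gate = sigmoid(np.concatenate([low, high], axis=-1) @ w_gate + b_gate)  # values in (0, 1)
    return low + gate * high                # gated injection of high-res detail


rng = np.random.default_rng(0)
C_LOW, C_HIGH = 64, 32
low = rng.standard_normal((24, 24, C_LOW))    # e.g. a 24x24 ViT patch grid
high = rng.standard_normal((48, 48, C_HIGH))  # e.g. a finer ConvNeXt feature map
w_align = rng.standard_normal((C_HIGH, C_LOW)) * 0.05
w_gate = rng.standard_normal((2 * C_LOW, C_LOW)) * 0.05
b_gate = np.zeros(C_LOW)

fused = conv_gate_fuse(low, high, w_align, w_gate, b_gate)
tokens = fused.reshape(-1, C_LOW)  # flatten back into a token sequence
print(tokens.shape)  # (576, 64)
```

In this sketch, the fused map keeps the ViT's token count. The high-resolution branch therefore adds detail without lengthening the sequence the LLM has to process.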

Object-level features are incorporated using Region of Interest (RoI) alignment to extract detailed features from identified bounding boxes, which are then concatenated with other visual tokens. This multi-granularity approach enhances the model’s ability to capture comprehensive visual details and integrate them with textual embeddings. MG-LLaVA is trained on publicly available multimodal data and fine-tuned with visual instruction tuning data.
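Object-level features can be illustrated with a simplified RoI Align. Each detected box is mapped onto a feature map and sampled bilinearly at the centres of a small grid of bins. The bins are then reduced to a token that is appended to the image tokens. This is a minimal NumPy sketch, not MG-LLaVA's code, and it simplifies in three ways:

- it takes one sample per bin;
- it averages the bins into a single token per object, which is an assumption;
- it uses hypothetical boxes in place of open-vocabulary detector output.

Production code would typically use a library implementation such as torchvision's `roi_align`.

```python
import numpy as np


def bilinear(feat, y, x):
    """Bilinearly sample an (H, W, C) map at continuous (y, x), clamped to the border."""
    h, w, _ = feat.shape
    y = min(max(y, 0.0), h - 1)
    x = min(max(x, 0.0), w - 1)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    return ((1 - dy) * (1 - dx) * feat[y0, x0] + (1 - dy) * dx * feat[y0, x1]
            + dy * (1 - dx) * feat[y1, x0] + dy * dx * feat[y1, x1])


def roi_align(feat, box, out_size=2, spatial_scale=1.0):
    """Simplified RoI Align: one bilinear sample at the centre of each output bin.

    box is (x1, y1, x2, y2) in image pixels; spatial_scale maps pixels to feature-map cells.
    """
    x1, y1, x2, y2 = (v * spatial_scale for v in box)
    bin_h, bin_w = (y2 - y1) / out_size, (x2 - x1) / out_size
    out = np.empty((out_size, out_size, feat.shape[-1]))
    for i in range(out_size):
        for j in range(out_size):
            # Bin centre; the -0.5 treats integer indices as cell centres.
            cy = y1 + (i + 0.5) * bin_h - 0.5
            cx = x1 + (j + 0.5) * bin_w - 0.5
            out[i, j] = bilinear(feat, cy, cx)
    return out


def object_tokens(feat, boxes, spatial_scale, out_size=2):
    """One token per box: RoI-aligned features averaged over bins (a simplifying assumption)."""
    return np.stack([roi_align(feat, b, out_size, spatial_scale).mean(axis=(0, 1))
                     for b in boxes])


rng = np.random.default_rng(0)
feat = rng.standard_normal((24, 24, 64))            # e.g. a 24x24 feature map of a 768x768 image
boxes = [(100, 120, 260, 300), (500, 40, 560, 90)]  # hypothetical detector boxes, in pixels
obj = object_tokens(feat, boxes, spatial_scale=24 / 768)
image_tokens = feat.reshape(-1, 64)
all_tokens = np.concatenate([image_tokens, obj], axis=0)  # object tokens appended to image tokens
print(all_tokens.shape)  # (578, 64)
```

Each box contributes a fixed number of tokens regardless of its size. Small objects therefore get explicit representation even when they cover only a few cells of the feature map.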

Extensive evaluations across multiple benchmarks, including MMBench and SEEDBench, show that MG-LLaVA outperforms existing MLLMs of comparable parameter size. The authors report marked gains in perception and visual comprehension, with results that surpass models like GPT-4V and GeminiPro-V. The study also includes comprehensive ablation experiments confirming the effectiveness of the object-level features and the Conv-Gate fusion network.

In conclusion, MG-LLaVA addresses the limitations of current MLLMs by introducing a multi-granularity vision flow that effectively processes low-resolution, high-resolution, and object-centric features. This innovative approach significantly enhances the model’s visual perception and comprehension capabilities, demonstrating superior performance across various multimodal benchmarks.

The post MG-LLaVA: An Advanced Multi-Modal Model Adept at Processing Visual Inputs of Multiple Granularities, Including Object-Level Features, Original-Resolution Images, and High-Resolution Data appeared first on MarkTechPost.