Artificial neural networks (ANNs) show a remarkable pattern when trained on natural data irrespective of exact initialization, dataset, or training objective; models trained on the same data domain converge to similar learned patterns. For example, for different image models, the initial layer weights tend to converge to Gabor filters and color-contrast detectors. Many such features suggest global representation that goes beyond biological and artificial systems, and these features are observed in the visual cortex. These findings are practical and well-established in the field of machines that can interpret literature but lack theoretical explanations.

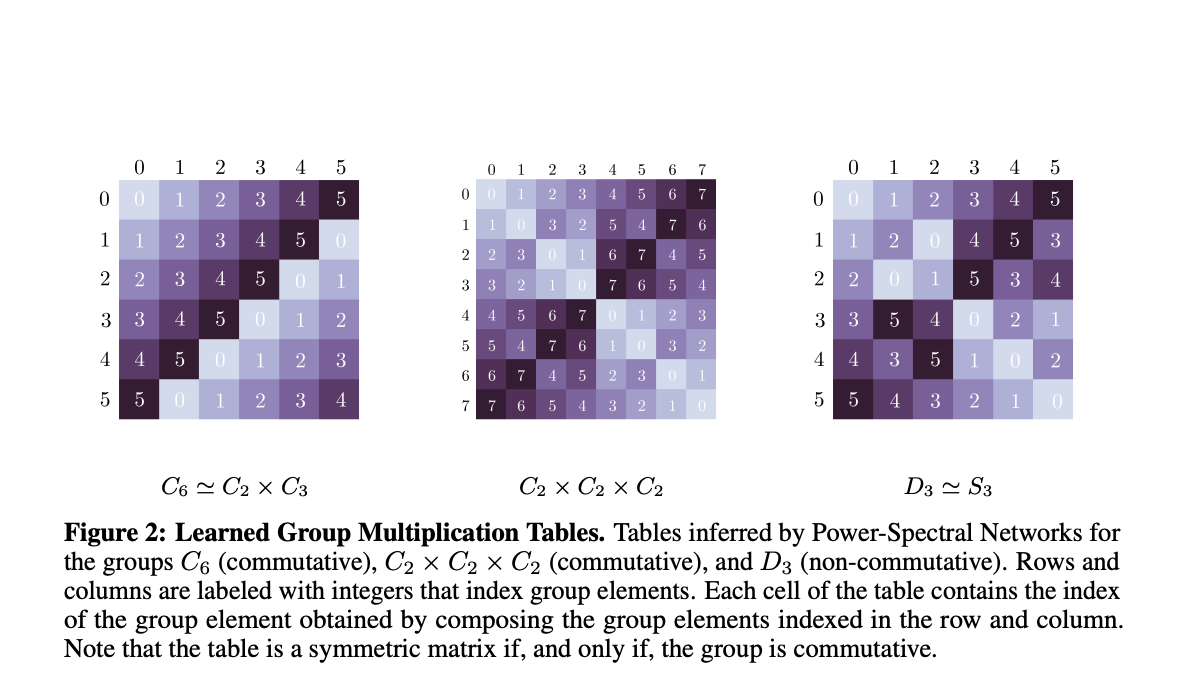

Localized versions of canonical 2D Fourier basis functions are the most observed universal features in image models, e.g. Gabor filters or wavelets. When vision models are trained on tasks like efficient coding, classification, temporal coherence, and next-step prediction goals, these Fourier features pop up in the model’s initial layers. Apart from this, Non-localized Fourier features have been observed in networks trained to solve tasks where cyclic wraparound is allowed, for example, modular arithmetic, more general group compositions, or invariance to the group of cyclic translations.

Researchers from KTH, Redwood Center for Theoretical Neuroscience, and UC Santa Barbara introduced a mathematical explanation for the rise of Fourier features in learning systems like neural networks. This rise is due to the downstream invariance of the learner that becomes insensitive to certain transformations, e.g., planar translation or rotation. The team has derived theoretical guarantees regarding Fourier features in invariant learners that can be used in different machine-learning models. This derivation is based on the concept that invariance is a fundamental bias that can be injected implicitly and sometimes explicitly into learning systems due to the symmetries in natural data.

The standard discrete Fourier transform is a special case of more general Fourier transforms on groups, which can be defined by replacing the basis of harmonics with different unitary group representations. A set of previous theoretical works is formed for sparse coding models, deriving the conditions under which sparse linear combinations are used to recover the original bases that generate data with the help of a network. The proposed theory covers various situations and neural network architectures that help to set a foundation for a learning theory of representations in artificial and biological neural systems.

The team gave two informal theorems in this paper, the first one states that if a parametric function of a certain kind is invariant in the input variable to the action of a finite group G, then each component of its weights W coincides with a harmonic of G up to a linear transformation. The second theorem states that if a parametric function is almost invariant to G according to some functional bounds and the weights are orthonormal, then the multiplicative table of G can be recovered from W. Moreover, a model is implemented to satisfy the need of the proposed theory and trained through different learning on a goal that supports invariance and extraction of the multiplicative table of G from its weights.

In conclusion, researchers introduced a mathematical explanation for the rise of Fourier features in learning systems like neural networks. Also, they proved that if a machine learning model of a specific kind is invariant to a finite group, then its weights are closely related to the Fourier transform on that group, and the algebraic structure of an unknown group can be recovered from an invariant model. Future work includes the study of analogs of the proposed theory on real numbers which is an interesting area that will be aligned more towards the current practices in the field.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 42k+ ML SubReddit

The post Harmonics of Learning: A Mathematical Theory for the Rise of Fourier Features in Learning Systems Like Neural Networks appeared first on MarkTechPost.