Multitask learning (MTL) involves training a single model to perform multiple tasks simultaneously, leveraging shared information to enhance performance. While beneficial, MTL poses challenges in managing large models and optimizing across tasks. In particular, optimizing the average loss can leave some tasks under-optimized when tasks progress unevenly. Balancing task performance against optimization cost is therefore critical for effective MTL.

Existing solutions for mitigating the under-optimization problem in multitask learning rely on gradient manipulation techniques. These methods compute a new update vector in place of the gradient of the average loss, so that all task losses decrease more evenly. However, while these approaches improve performance, they become computationally expensive as the number of tasks and the model size grow, because they need to compute and store all task gradients at each iteration, which incurs significant space and time costs. In contrast, computing the average gradient is more efficient, requiring less computational overhead per iteration.
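
To make the cost difference concrete, here is a minimal NumPy sketch. It assumes a hypothetical setup that is not from the paper: `k` regression tasks share one linear parameter vector `theta` of size `p` and the same inputs `X`. The function names are illustrative only. Gradient manipulation methods need the full `(k, p)` matrix of task gradients, while the average gradient only ever materializes a single `(p,)` vector.

```python
import numpy as np

def per_task_gradients(theta, X, Y):
    """Gradient of each task's mean-squared error; returns shape (k, p).

    X: (n, p) shared inputs, Y: (k, n) one target vector per task.
    Storage grows with the number of tasks k.
    """
    residuals = X @ theta - Y                    # broadcasts to (k, n)
    return 2.0 * residuals @ X / X.shape[0]      # (k, n) @ (n, p) -> (k, p)

def average_gradient(theta, X, Y):
    """Gradient of the average task loss; only a (p,) vector is stored."""
    mean_residual = X @ theta - Y.mean(axis=0)   # (n,)
    return 2.0 * X.T @ mean_residual / X.shape[0]
```

Averaging the rows of `per_task_gradients` gives the same vector as `average_gradient`, but the former keeps `k` times as many numbers in memory. In a deep network it also typically requires one backward pass per task.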

To overcome these limitations, a research team from The University of Texas at Austin, Salesforce AI Research, and Sony AI recently published a new paper. In their work, they introduced Fast Adaptive Multitask Optimization (FAMO), a method designed to address the under-optimization issue in multitask learning without the computational burden associated with existing gradient manipulation techniques.

FAMO dynamically adjusts task weights to ensure a balanced loss decrease across tasks, leveraging loss history instead of computing all task gradients. Key contributions include introducing FAMO, an MTL optimizer with O(1) space and time complexity per iteration, and demonstrating its comparable or superior performance to existing methods across various MTL benchmarks, with significant computational efficiency improvements.

The proposed approach comprises two main ideas: achieving a balanced loss decrease across tasks and amortizing computation over time.

- Balanced Rate of Loss Improvement:

  - FAMO aims to decrease all task losses at an equal rate as much as possible. It defines the rate of improvement for each task based on the change in loss over time.

  - By formulating an optimization problem, FAMO seeks an update direction that maximizes the worst-case improvement rate across all tasks.

- Fast Approximation by Amortizing over Time:

  - Instead of solving the optimization problem at each step, FAMO performs a single-step gradient descent on a parameter representing task weights, amortizing computation over the optimization trajectory.

  - This is achieved by updating the task weights based on the change in log losses and approximating the gradient.
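
One way to write the first idea in symbols is sketched below. The notation is assumed here for illustration ($\theta_t$ for the shared parameters, $\ell_i$ for the $i$-th of $k$ positive task losses, $\alpha$ for the step size, $d$ for the update direction), and the exact formulation in the paper may differ in detail.

$$
r_i(\alpha, d) = \frac{\ell_i(\theta_t) - \ell_i(\theta_t - \alpha d)}{\ell_i(\theta_t)},
\qquad
\max_{d} \; \min_{i \in \{1, \dots, k\}} \; \frac{1}{\alpha}\, r_i(\alpha, d) - \frac{1}{2} \lVert d \rVert^2
$$

Here $r_i$ is the relative improvement of task $i$ after one step, and the max-min objective favors the direction whose slowest-improving task improves the most. The amortized step then nudges the task weights using the observed log-loss changes $\log \ell_i(\theta_t) - \log \ell_i(\theta_{t+1})$ rather than re-solving this problem at every iteration.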

Practically, FAMO reparameterizes the task weights to ensure they stay within a valid range and introduces regularization to focus more on recent updates. The algorithm iteratively updates task weights and parameters based on the observed losses to find a balance between task performance and computational efficiency.
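
The loop described above can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the authors' implementation. The toy losses, the names `famo_style_step` and `run_toy`, and the values of `lr`, `weight_lr`, and `gamma` are all hypothetical. The softmax reparameterization and the decay term stand in for the "valid range" and "regularization" mentioned in the text. Plain gradient descent is used for the weight update for simplicity.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - np.max(x))
    return e / e.sum()

# Hypothetical toy problem: two positive quadratic losses sharing theta.
A = np.array([1.0, 0.0])
B = np.array([-1.0, 0.0])

def task_losses(theta):
    return np.array([np.sum((theta - A) ** 2) + 1.0,
                     10.0 * np.sum((theta - B) ** 2) + 1.0])

def weighted_gradient(theta, w):
    # Gradient of sum_i w[i] * loss_i(theta). In a neural network this would
    # be a single backward pass on the weighted loss.
    return w[0] * 2.0 * (theta - A) + w[1] * 20.0 * (theta - B)

def famo_style_step(theta, xi, lr=0.01, weight_lr=0.025, gamma=0.001):
    losses = task_losses(theta)
    z = softmax(xi)                 # task weights stay positive and sum to 1
    w = z / losses                  # scale by 1/loss (gradient of log loss)
    w = w / w.sum()                 # normalize so the step is a weighted average
    new_theta = theta - lr * weighted_gradient(theta, w)
    # Change in log losses after the step; no per-task gradients are needed.
    # (This sketch re-evaluates the losses; a training loop could reuse the
    # next iteration's forward pass instead.)
    delta = np.log(losses) - np.log(task_losses(new_theta))
    jac = np.diag(z) - np.outer(z, z)   # Jacobian of softmax at xi
    # One gradient step on the logits, with decay gamma as the regularizer.
    new_xi = xi - weight_lr * (jac @ delta + gamma * xi)
    return new_theta, new_xi

def run_toy(steps=500):
    theta, xi = np.array([0.0, 3.0]), np.zeros(2)
    initial = task_losses(theta)
    for _ in range(steps):
        theta, xi = famo_style_step(theta, xi)
    return initial, task_losses(theta), softmax(xi)
```

Calling `run_toy()` returns the initial losses, the final losses, and the learned task weights. A task whose log loss dropped more than the others has its logit pushed down, which shifts weight toward the tasks that are lagging.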

Overall, FAMO offers a computationally efficient approach to multitask optimization by dynamically adjusting task weights and amortizing computation over time. This leads to improved performance without the need for extensive gradient computations.

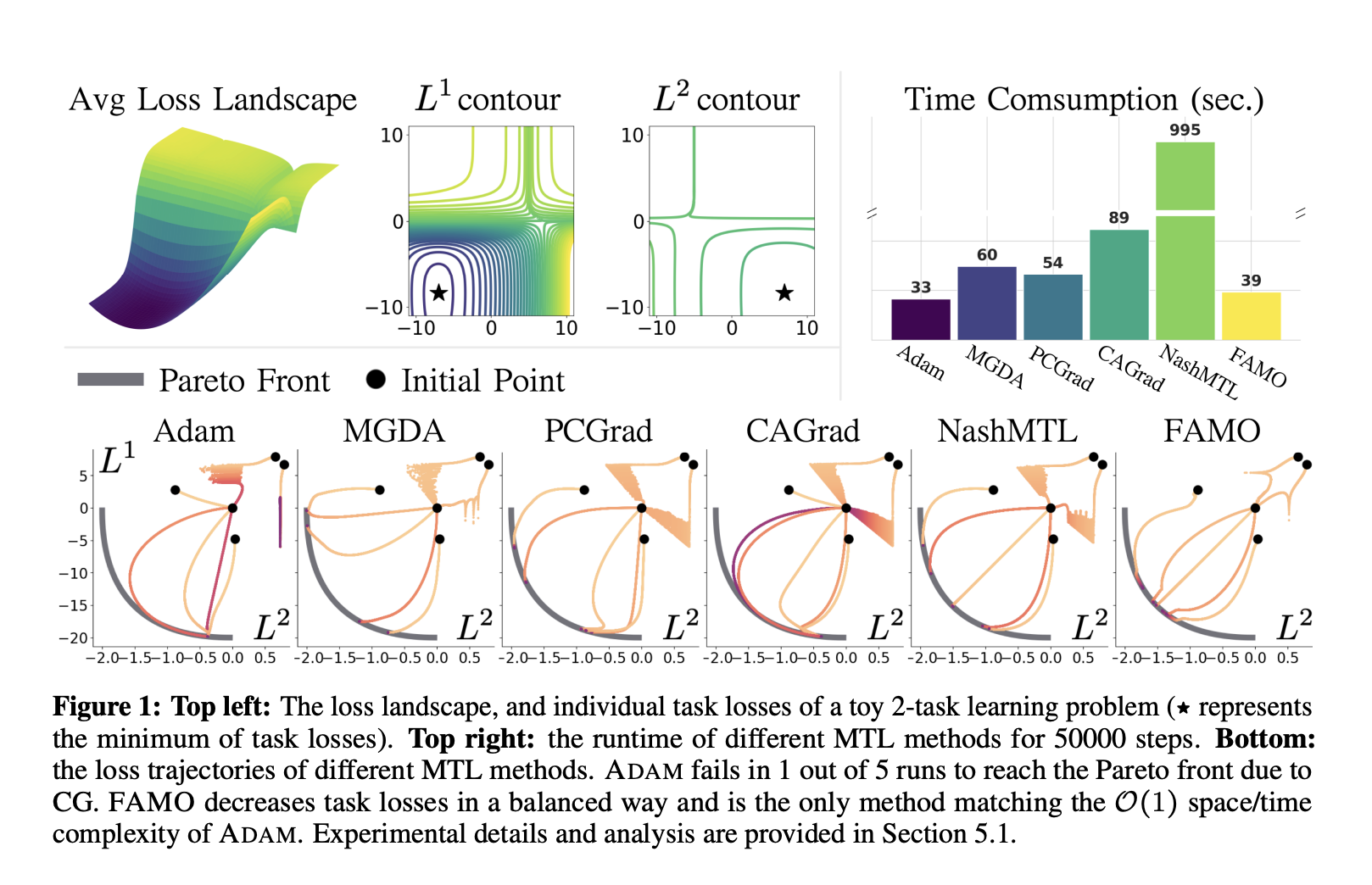

To evaluate FAMO, the authors ran experiments across a range of settings. They started with a toy 2-task problem, demonstrating FAMO's ability to efficiently mitigate conflicting gradients (CG). On MTL supervised and reinforcement learning benchmarks, FAMO performed consistently well against state-of-the-art methods. It also showed significant efficiency improvements, particularly in training time, compared to methods like Nash-MTL. Additionally, an ablation study on the regularization coefficient γ highlighted FAMO's robustness across different settings, except for specific cases like CityScapes, where tuning γ could stabilize performance. The evaluation emphasized FAMO's effectiveness and efficiency across diverse multitask learning scenarios.

In conclusion, FAMO presents a promising solution to the challenges of MTL by dynamically adjusting task weights and amortizing computation over time. The method mitigates under-optimization without the computational burden associated with existing gradient manipulation techniques. In the authors' experiments, FAMO matched or outperformed existing methods across various MTL scenarios while training more efficiently. With its balanced loss decrease approach and efficient optimization strategy, FAMO offers a valuable contribution to the field of multitask learning, paving the way for more scalable and effective machine learning models.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post FAMO: A Fast Optimization Method for Multitask Learning (MTL) that Mitigates the Conflicting Gradients using O(1) Space and Time appeared first on MarkTechPost.