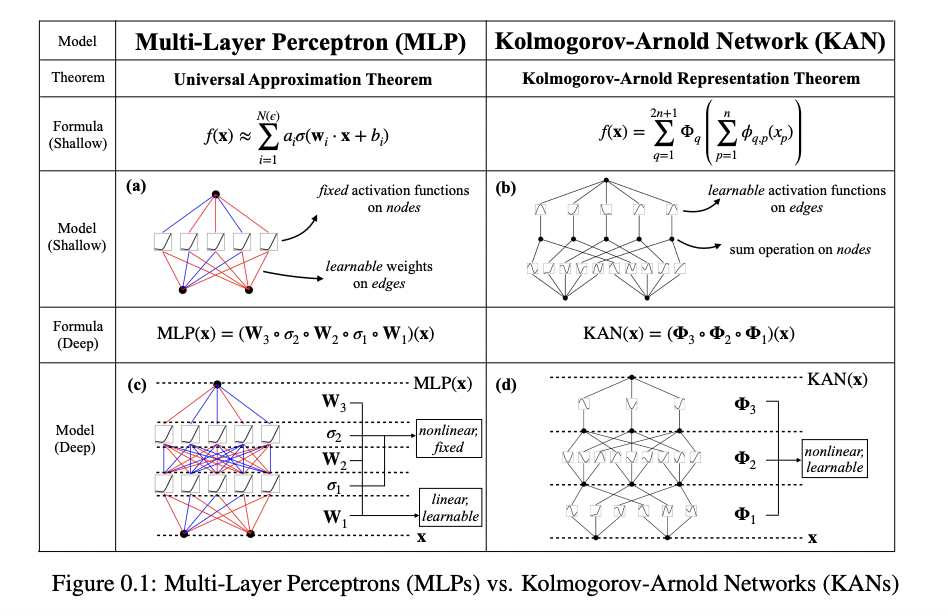

Multi-Layer Perceptrons (MLPs), also known as fully connected feedforward neural networks, are a foundational building block of modern deep learning. Because the universal approximation theorem guarantees their expressive capacity, they are frequently used to approximate nonlinear functions. Despite their ubiquity, MLPs have drawbacks: they account for a large share of the parameters in complex models such as transformers, and they are hard to interpret.

Kolmogorov-Arnold Networks (KANs), inspired by the Kolmogorov-Arnold representation theorem, are a promising alternative that addresses these drawbacks. Like MLPs, KANs have a fully connected topology, but they place learnable activation functions on edges (weights), whereas MLPs apply fixed activation functions on nodes (neurons). Each weight parameter in a KAN is replaced by a learnable 1D function parametrized as a spline. As a result, KANs have no conventional linear weight matrices, and their nodes simply sum the incoming signals without applying any nonlinear transformation.
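To make the edge-versus-node distinction concrete, here is a minimal sketch of a KAN layer's forward pass in plain Python. It is an illustration under simplifying assumptions, not the authors' implementation: each edge function is a piecewise-linear spline on a fixed, evenly spaced grid over [-1, 1] (the paper's splines may be of higher order), inputs outside the grid are clamped, training is omitted, and the names `EdgeSpline`, `KANLayer`, `kan_forward`, and `n_knots` are hypothetical.

```python
import random


class EdgeSpline:
    """Learnable 1D function on one edge: a piecewise-linear spline on [lo, hi]."""

    def __init__(self, coeffs, lo=-1.0, hi=1.0):
        self.coeffs = list(coeffs)  # learnable values at evenly spaced knots
        self.lo, self.hi = lo, hi

    def __call__(self, x):
        n_intervals = len(self.coeffs) - 1
        # Clamp to the grid, then locate the interval that contains x.
        x = min(max(x, self.lo), self.hi)
        t = (x - self.lo) / (self.hi - self.lo) * n_intervals
        i = min(int(t), n_intervals - 1)
        frac = t - i
        # Linear interpolation between the two neighbouring knot values.
        return (1.0 - frac) * self.coeffs[i] + frac * self.coeffs[i + 1]


class KANLayer:
    """n_in -> n_out layer: one EdgeSpline per (output, input) pair."""

    def __init__(self, n_in, n_out, n_knots=6, seed=0):
        rng = random.Random(seed)
        self.edges = [
            [EdgeSpline([rng.uniform(-0.1, 0.1) for _ in range(n_knots)])
             for _ in range(n_in)]
            for _ in range(n_out)
        ]

    def __call__(self, x):
        # No weight matrix and no node nonlinearity: each output node is a
        # plain sum of its edge functions applied to the matching inputs.
        return [sum(phi(xi) for phi, xi in zip(row, x)) for row in self.edges]


def kan_forward(layers, x):
    """Apply a stack of KAN layers in order."""
    for layer in layers:
        x = layer(x)
    return x


# A 2-input, width-3, 1-output network with randomly initialised splines.
network = [KANLayer(2, 3, seed=0), KANLayer(3, 1, seed=1)]
print(kan_forward(network, [0.2, -0.4]))
```

All of the learnable parameters live in the `coeffs` lists on the edges. In an MLP the same positions would each hold a single scalar weight, and the nonlinearity would be a fixed function applied at the node after the sum.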

KANs typically need much smaller computation graphs than MLPs, which helps offset the potential extra cost of evaluating a spline on every edge. For example, the authors report that a 2-layer width-10 KAN achieves both better accuracy (lower mean squared error) and better parameter efficiency (fewer parameters) than a 4-layer width-100 MLP.
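A rough parameter count shows why the smaller graph matters. The sketch below is a back-of-the-envelope calculation, not a figure from the article: it assumes 2 inputs and 1 output for both networks, and a KAN whose splines use 5 grid intervals and order 3, so that each edge carries 5 + 3 = 8 coefficients. The function names are hypothetical, and real implementations may carry a few additional per-edge parameters that are ignored here.

```python
def mlp_param_count(widths):
    """Weights plus biases of a fully connected MLP with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(widths, widths[1:]))


def kan_param_count(widths, grid_intervals, spline_order):
    """Spline coefficients of a KAN: one spline per edge, G + k coefficients each."""
    n_edges = sum(n_in * n_out for n_in, n_out in zip(widths, widths[1:]))
    return n_edges * (grid_intervals + spline_order)


# Assumed shapes: 2 inputs and 1 output (not stated in the article).
mlp_params = mlp_param_count([2, 100, 100, 100, 1])  # 4 layers, width 100
kan_params = kan_param_count([2, 10, 1], grid_intervals=5, spline_order=3)  # 2 layers, width 10
print(mlp_params, kan_params)
```

Under these assumptions the MLP has 20,601 parameters and the KAN has 240, so each KAN edge is more expensive than an MLP weight, but the network as a whole is far smaller.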

Using splines as activation functions gives KANs advantages over MLPs in both accuracy and interpretability. On accuracy, smaller KANs can match or outperform larger MLPs on tasks such as data fitting and solving partial differential equations (PDEs). This benefit is shown both theoretically and experimentally: KANs exhibit faster neural scaling laws than MLPs, meaning their error falls more quickly as the parameter count grows.
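A neural scaling law is usually written as loss ≈ C · N^(-α), where N is the parameter count, and "faster" scaling means a larger exponent α. The sketch below shows one common way to estimate α: a least-squares line fit in log-log space. The function name is hypothetical, and the two loss curves are synthetic, noise-free values generated from power laws with arbitrarily chosen exponents 1 and 4; they are not measurements from the paper.

```python
import math


def fit_scaling_exponent(param_counts, losses):
    """Fit log(loss) = log(C) - alpha * log(N) by least squares; return alpha."""
    xs = [math.log(n) for n in param_counts]
    ys = [math.log(loss) for loss in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return -slope


# Synthetic losses from known power laws (illustrative only, not real results).
sizes = [100, 200, 400, 800, 1600]
slow_losses = [3.0 * n ** -1.0 for n in sizes]
fast_losses = [3.0 * n ** -4.0 for n in sizes]

print(fit_scaling_exponent(sizes, slow_losses))  # close to 1
print(fit_scaling_exponent(sizes, fast_losses))  # close to 4
```

On a log-log plot the curve with the larger exponent is the steeper line, which is what a faster scaling law looks like in practice.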

KANs also excel at interpretability, which is essential for understanding and making use of neural network models. Because KANs express functions through structured splines, their learned functions can be visualized intuitively and are more transparent than those of an MLP. This interpretability makes it easier for human users to collaborate with the model, which leads to better insights.

The team presents two examples showing how KANs can help scientists rediscover and understand intricate mathematical and physical laws: one from mathematics (knot theory) and one from physics (Anderson localization). By making the underlying data representations and model behavior easier to understand, KANs let deep learning models contribute more effectively to scientific inquiry.

In conclusion, KANs are a viable alternative to MLPs that draws on the Kolmogorov-Arnold representation theorem to overcome important limitations in neural network architecture. Because they use learnable spline-based activation functions on edges, KANs offer better accuracy, faster scaling, and greater interpretability than traditional MLPs. This development opens new possibilities for deep learning and extends what current neural network architectures can do.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post How Does KAN (Kolmogorov–Arnold Networks) Act As A Better Substitute For Multi-Layer Perceptrons (MLPs)? appeared first on MarkTechPost.