Iterative preference optimization methods have shown efficacy in general instruction tuning tasks but yield limited improvements in reasoning tasks. These methods, utilizing preference optimization, enhance language model alignment with human requirements compared to sole supervised fine-tuning. Offline techniques like DPO are gaining popularity due to their simplicity and efficiency. Recent advancements advocate the iterative application of offline procedures, such as Iterative DPO, Self-Rewarding LLMs, and SPIN, which construct new preference relations to augment model performance further. However, preference optimization remains unexplored in this domain despite the successful application of other iterative training methods like STaR and RestEM to reasoning tasks.

Iterative alignment methods encompass both human-in-the-loop and automated strategies. While some rely on human feedback for reinforcement learning (RLHF), others, like Iterative DPO, optimize preference pairs autonomously, generating new pairs for subsequent iterations using updated models. SPIN, a variant of Iterative DPO, utilizes human labels and model generations for preference construction but faces limitations when model performance matches human standards. Self-Rewarding LLMs also employ Iterative DPO, with the model itself as a reward evaluator, yielding gains in instruction following but modest improvements in reasoning. Conversely, Expert Iteration and STaR focus on sample curation and training data refinement, diverging from pairwise preference optimization.

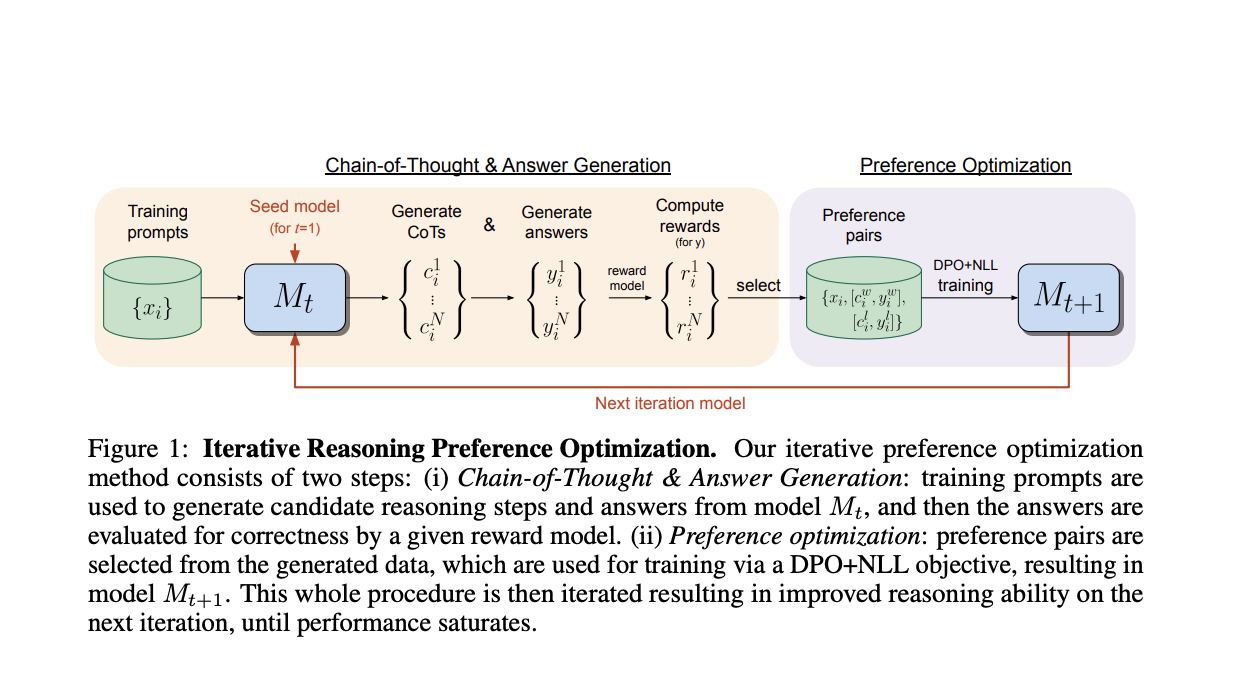

Researchers from FAIR at Meta and New York University introduce an approach targeting iterative preference optimization for reasoning tasks, specifically Chain-of-Thought (CoT) reasoning. Each iteration involves sampling multiple CoT reasoning steps and final answers, constructing preference pairs where winners possess correct answers and losers hold incorrect ones. Training entails a DPO variant incorporating a negative log-likelihood (NLL) loss term for pair winners, which is essential for performance enhancement. The iterative process iterates by generating new pairs and retraining the model from the previously trained iteration, thus refining model performance incrementally.

Their approach relies on a base language model, typically pre-trained or instruction-tuned, and a dataset of training inputs, with the ability to evaluate final output correctness. Given a training input, the model generates (i) a sequence of reasoning steps (Chain-of-Thought) and (ii) a final answer. While the correctness of final answers can be assessed, reasoning step accuracy is not considered. Experiments utilize datasets with gold labels for training inputs, deriving a binary reward from exact matches between labels and final answers. The method comprises two steps per iteration: (i) Chain-of-Thought & Answer Generation and (ii) Preference Optimization.

In the experimentation, the researchers were trained to utilize a modified DPO loss with an added negative log-likelihood term that was deemed essential. Reasoning prowess is enhanced over successive iterations of this method. Solely drawing from training set examples, the approach yields escalating accuracy for Llama-2-70B-Chat, elevating from 55.6% to 81.6% on GSM8K (and 88.7% with a majority voting out of 32 samples), from 12.5% to 20.8% on MATH, and from 77.8% to 86.7% on ARC-Challenge. These improvements surpass the performance of other Llama-2-based models that do not utilize additional datasets.

In conclusion, This study introduces an iterative training algorithm, Iterative Reasoning Preference Optimization, targeting enhanced performance in chain-of-thought-based reasoning tasks for LLMs. Each iteration generates multiple responses and constructs preference pairs based on the correctness of the final answer, employing a modified DPO loss with an additional NLL term for training. The method doesn’t necessitate human-in-the-loop or extra training data, retaining simplicity and efficiency. Experimental outcomes exhibit substantial enhancements on GMS8K, MATH, and ARC-Challenge compared to various baselines utilizing the same base model and training data. These findings underscore the efficacy of the iterative training approach in enhancing LLMs’ reasoning capabilities.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 40k+ ML SubReddit

The post Iterative Preference Optimization for Improving Reasoning Tasks in Language Models appeared first on MarkTechPost.